Synthetic Amodal Perception Dataset AmodalSynthDrive: An Innovative Solution for Autonomous Driving

- Paper link: https://arxiv.org/pdf/2309.06547.pdf

- Dataset link: http://amodalsynthdrive.cs.uni-freiburg.de

Abstract

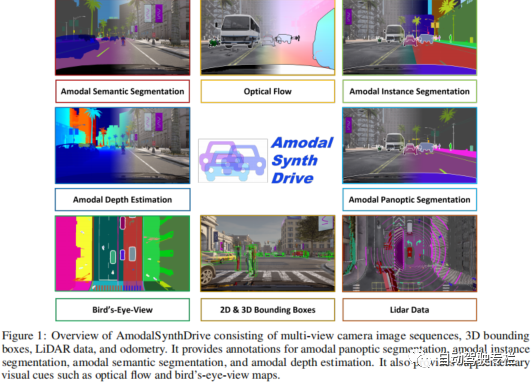

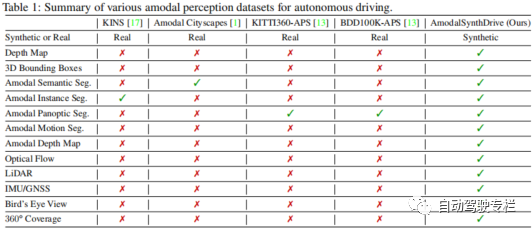

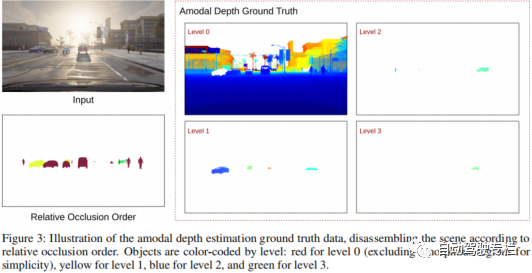

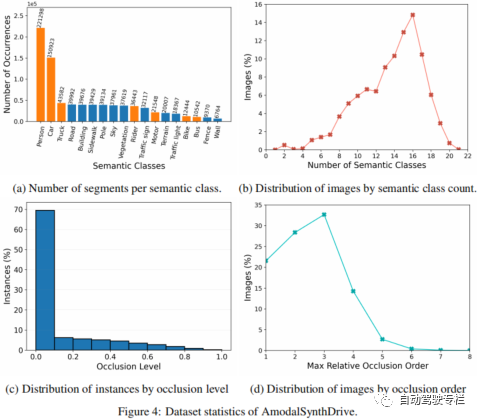

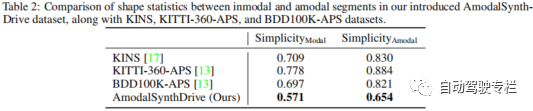

This paper introduces AmodalSynthDrive, a synthetic amodal perception dataset for autonomous driving. Unlike humans, who can effortlessly estimate the full extent of an object even when it is partially occluded, modern computer vision algorithms still find this extremely challenging. Amodal perception for autonomous driving remains largely unexplored because suitable datasets are lacking. Creating such datasets is hindered mainly by high annotation costs and by the need to limit annotator subjectivity when labeling occluded regions. To address these limitations, the paper introduces AmodalSynthDrive, a synthetic multi-task amodal perception dataset. The dataset provides multi-view camera images, 3D bounding boxes, lidar data, and odometry for 150 driving sequences, with over 1M object annotations under diverse traffic, weather, and lighting conditions. AmodalSynthDrive supports a variety of amodal scene understanding tasks, including a newly introduced amodal depth estimation task intended to enhance spatial understanding. The paper evaluates several baselines for each task to illustrate the challenges and sets up a public benchmark server.
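To make the idea of an amodal annotation concrete, here is a minimal sketch of how a full-extent (amodal) mask relates to the visible mask of a partially occluded object. The nested-list mask format, the toy scene, and the function names `occluded_region` and `occlusion_ratio` are illustrative assumptions and do not reflect the dataset's actual file format or API.

```python
from typing import List

# Hypothetical mask format: rows of 0/1 values, one entry per pixel.
Mask = List[List[int]]


def occluded_region(amodal: Mask, visible: Mask) -> Mask:
    """Pixels inside the object's full extent that are hidden from view."""
    return [
        [int(a == 1 and v == 0) for a, v in zip(a_row, v_row)]
        for a_row, v_row in zip(amodal, visible)
    ]


def occlusion_ratio(amodal: Mask, visible: Mask) -> float:
    """Fraction of the amodal (full-extent) mask that is occluded."""
    total = sum(map(sum, amodal))
    if total == 0:
        return 0.0
    hidden = sum(map(sum, occluded_region(amodal, visible)))
    return hidden / total


# Toy 3x4 scene: a car whose right half is hidden behind another object.
amodal_car = [
    [0, 0, 0, 0],
    [1, 1, 1, 1],
    [1, 1, 1, 1],
]
visible_car = [
    [0, 0, 0, 0],
    [1, 1, 0, 0],
    [1, 1, 0, 0],
]

print(occlusion_ratio(amodal_car, visible_car))  # prints 0.5
```

A conventional (modal) segmentation label would contain only `visible_car`. An amodal label also covers the hidden pixels, which is the part that is costly and subjective for human annotators to draw and that a simulator can provide directly.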

Main contributions

The contributions of this article are summarized as follows:

1) The paper proposes AmodalSynthDrive, a comprehensive synthetic amodal perception dataset for urban driving scenarios with multiple data sources;

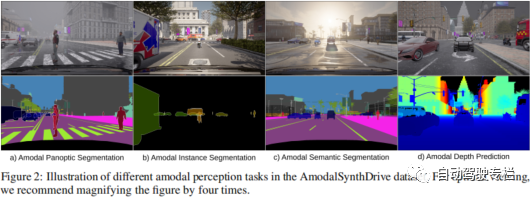

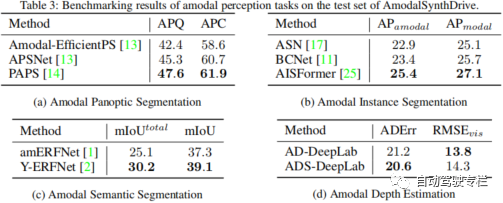

2) The paper proposes a benchmark for amodal perception tasks, including amodal semantic segmentation, amodal instance segmentation, and amodal panoptic segmentation;

3) A novel amodal depth estimation task is designed to facilitate enhanced spatial understanding, and the paper demonstrates its feasibility through several baselines.

Paper pictures and tables

Summary

Perception is a key task for autonomous vehicles, but current methods still lack the amodal understanding required to interpret complex traffic scenes. This paper therefore proposes AmodalSynthDrive, a multimodal synthetic amodal perception dataset for autonomous driving. Using synthetic images and lidar point clouds, it provides a comprehensive dataset with ground-truth annotations for the basic amodal perception tasks and introduces a new task, amodal depth estimation, to enhance spatial understanding. The dataset contains over 60,000 individual image sets, each of which includes amodal instance segmentation, amodal semantic segmentation, amodal panoptic segmentation, optical flow, 2D and 3D bounding boxes, amodal depth, and bird's-eye-view maps. The paper also provides various baselines on AmodalSynthDrive, and the authors believe this work will pave the way for novel research on amodal scene understanding in dynamic urban environments.

Original link: https://mp.weixin.qq.com/s/7cXqFbMoljcs6dQOLU3SAQ

The above is the detailed content of Synthetic Amodal Perception Dataset AmodalSynthDrive: An Innovative Solution for Autonomous Driving. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

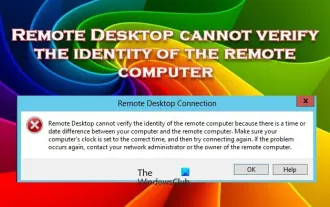

Remote Desktop cannot authenticate the remote computer's identity

Feb 29, 2024 pm 12:30 PM

Remote Desktop cannot authenticate the remote computer's identity

Feb 29, 2024 pm 12:30 PM

Windows Remote Desktop Service allows users to access computers remotely, which is very convenient for people who need to work remotely. However, problems can be encountered when users cannot connect to the remote computer or when Remote Desktop cannot authenticate the computer's identity. This may be caused by network connection issues or certificate verification failure. In this case, the user may need to check the network connection, ensure that the remote computer is online, and try to reconnect. Also, ensuring that the remote computer's authentication options are configured correctly is key to resolving the issue. Such problems with Windows Remote Desktop Services can usually be resolved by carefully checking and adjusting settings. Remote Desktop cannot verify the identity of the remote computer due to a time or date difference. Please make sure your calculations

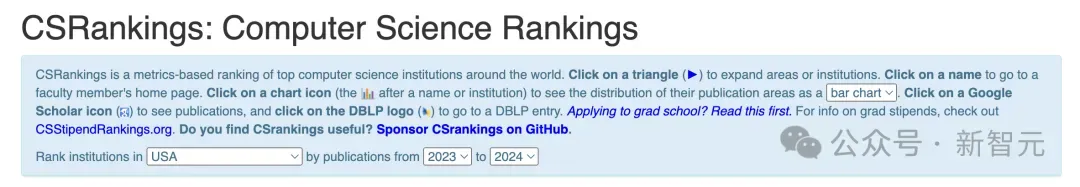

2024 CSRankings National Computer Science Rankings Released! CMU dominates the list, MIT falls out of the top 5

Mar 25, 2024 pm 06:01 PM

2024 CSRankings National Computer Science Rankings Released! CMU dominates the list, MIT falls out of the top 5

Mar 25, 2024 pm 06:01 PM

The 2024CSRankings National Computer Science Major Rankings have just been released! This year, in the ranking of the best CS universities in the United States, Carnegie Mellon University (CMU) ranks among the best in the country and in the field of CS, while the University of Illinois at Urbana-Champaign (UIUC) has been ranked second for six consecutive years. Georgia Tech ranked third. Then, Stanford University, University of California at San Diego, University of Michigan, and University of Washington tied for fourth place in the world. It is worth noting that MIT's ranking fell and fell out of the top five. CSRankings is a global university ranking project in the field of computer science initiated by Professor Emery Berger of the School of Computer and Information Sciences at the University of Massachusetts Amherst. The ranking is based on objective

What is e in computer

Aug 31, 2023 am 09:36 AM

What is e in computer

Aug 31, 2023 am 09:36 AM

The "e" of computer is the scientific notation symbol. The letter "e" is used as the exponent separator in scientific notation, which means "multiplied to the power of 10". In scientific notation, a number is usually written as M × 10^E, where M is a number between 1 and 10 and E represents the exponent.

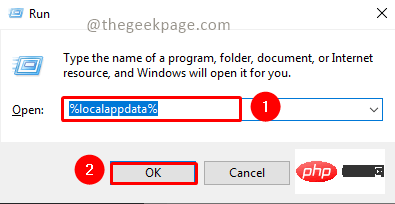

Fix: Microsoft Teams error code 80090016 Your computer's Trusted Platform module has failed

Apr 19, 2023 pm 09:28 PM

Fix: Microsoft Teams error code 80090016 Your computer's Trusted Platform module has failed

Apr 19, 2023 pm 09:28 PM

<p>MSTeams is the trusted platform to communicate, chat or call with teammates and colleagues. Error code 80090016 on MSTeams and the message <strong>Your computer's Trusted Platform Module has failed</strong> may cause difficulty logging in. The app will not allow you to log in until the error code is resolved. If you encounter such messages while opening MS Teams or any other Microsoft application, then this article can guide you to resolve the issue. </p><h2&

What does computer cu mean?

Aug 15, 2023 am 09:58 AM

What does computer cu mean?

Aug 15, 2023 am 09:58 AM

The meaning of cu in a computer depends on the context: 1. Control Unit, in the central processor of a computer, CU is the component responsible for coordinating and controlling the entire computing process; 2. Compute Unit, in a graphics processor or other accelerated processor, CU is the basic unit for processing parallel computing tasks.

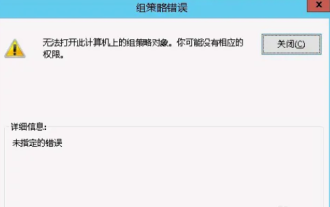

Unable to open the Group Policy object on this computer

Feb 07, 2024 pm 02:00 PM

Unable to open the Group Policy object on this computer

Feb 07, 2024 pm 02:00 PM

Occasionally, the operating system may malfunction when using a computer. The problem I encountered today was that when accessing gpedit.msc, the system prompted that the Group Policy object could not be opened because the correct permissions may be lacking. The Group Policy object on this computer could not be opened. Solution: 1. When accessing gpedit.msc, the system prompts that the Group Policy object on this computer cannot be opened because of lack of permissions. Details: The system cannot locate the path specified. 2. After the user clicks the close button, the following error window pops up. 3. Check the log records immediately and combine the recorded information to find that the problem lies in the C:\Windows\System32\GroupPolicy\Machine\registry.pol file

Python script to log out of computer

Sep 05, 2023 am 08:37 AM

Python script to log out of computer

Sep 05, 2023 am 08:37 AM

In today's digital age, automation plays a vital role in streamlining and simplifying various tasks. One of these tasks is to log off the computer, which is usually done manually by selecting the logout option from the operating system's user interface. But what if we could automate this process using a Python script? In this blog post, we'll explore how to create a Python script that can log off your computer with just a few lines of code. In this article, we'll walk through the step-by-step process of creating a Python script for logging out of your computer. We'll cover the necessary prerequisites, discuss different ways to log out programmatically, and provide a step-by-step guide to writing the script. Additionally, we will address platform-specific considerations and highlight best practices

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

Editor |ScienceAI Question Answering (QA) data set plays a vital role in promoting natural language processing (NLP) research. High-quality QA data sets can not only be used to fine-tune models, but also effectively evaluate the capabilities of large language models (LLM), especially the ability to understand and reason about scientific knowledge. Although there are currently many scientific QA data sets covering medicine, chemistry, biology and other fields, these data sets still have some shortcomings. First, the data form is relatively simple, most of which are multiple-choice questions. They are easy to evaluate, but limit the model's answer selection range and cannot fully test the model's ability to answer scientific questions. In contrast, open-ended Q&A