IT House News on October 12: Docker recently held its DockerCon 23 conference in Los Angeles, where it launched a new Docker GenAI Stack. The stack integrates Docker container technology with the Neo4j graph database, the LangChain model-chaining framework, and Ollama for running large language models.

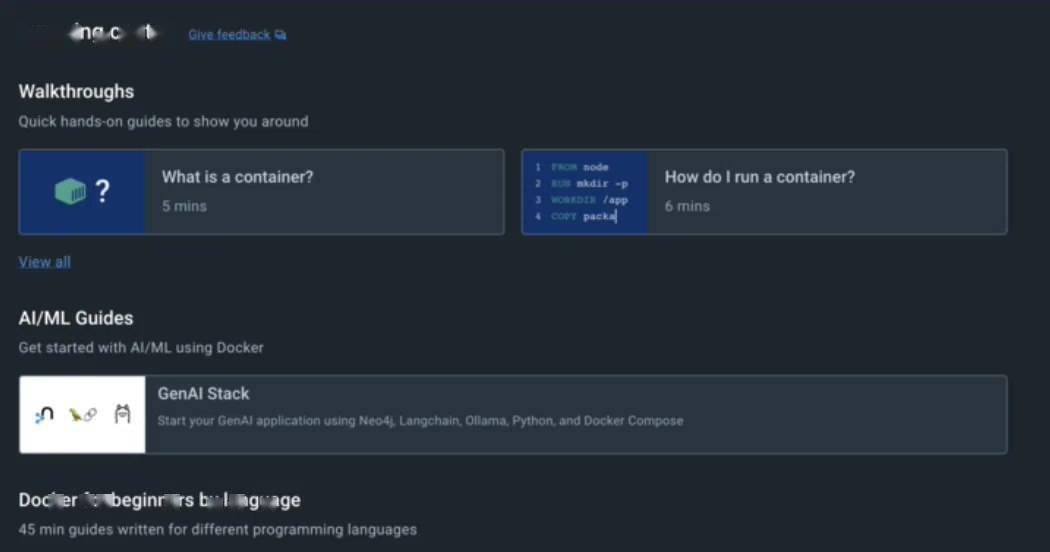

The Docker GenAI Stack is primarily meant to simplify the development of generative AI applications. Bundling the Neo4j graph database simplifies tasks such as working with a vector database, and Ollama allows large language models such as Llama 2 to be run locally.

The Docker GenAI Stack is designed to reduce the complexity of configuring these different components in containers. The entire stack is available for free and can be run locally on developers' systems. Docker plans to offer enterprises deployment and commercial support options as their applications evolve.

In contrast to the many generative AI development tools already on the market, Docker is also launching its own specialized GenAI tool, called Docker AI.

Docker AI is trained on Docker's proprietary data, accumulated from millions of Dockerfiles, Compose files, and error logs, so it can suggest fixes for errors directly within the developer's workflow. The tool is designed to improve the developer experience by making it easier to troubleshoot and fix issues.

IT House attaches a link to the press release here, and interested users can read it in depth.
