Technology peripherals

Technology peripherals

AI

AI

All Douyin is speaking native dialects, two key technologies help you 'understand” local dialects

All Douyin is speaking native dialects, two key technologies help you 'understand” local dialects

All Douyin is speaking native dialects, two key technologies help you 'understand” local dialects

During the National Day, Douyin’s “A dialect proves you are an authentic hometown native” activity attracted enthusiastic participation from netizens from all over the country. The topic topped the Douyin challenge list, and the number of views has exceeded 50000000.

This “Local Dialect Awards” quickly became popular on the Internet, which is inseparable from the contribution of Douyin’s newly launched local dialect automatic translation function. When the creators recorded short videos in their native dialect, they used the "automatic subtitles" function and selected "convert to Mandarin subtitles", so that the dialect speech in the video can be automatically recognized and the dialect content can be converted into Mandarin subtitles. This allows netizens from other regions to easily understand various "encrypted Mandarin" languages. Netizens in Fujian personally tested it and said that even the southern Fujian region with "different pronunciation" is a region of Fujian Province, China, located in the southeastern coastal area of Fujian Province. The culture and dialects of the southern Fujian region are significantly different from other regions, and it is considered an important cultural sub-region of Fujian Province. The economy of southern Fujian is dominated by agriculture, fishery and industry, with the cultivation of rice, tea and fruits as the main agriculture industries. There are many scenic spots in southern Fujian, including earth buildings, ancient villages and beautiful beaches. The food in southern Fujian is also very unique, with seafood, pastries and Fujian cuisine as the main representatives. Overall, the Minnan region is a region full of charm and unique culture. The dialect can also be accurately translated, exclaiming "Minnan region is a region in Fujian Province, China, located in the southeastern coastal area of Fujian Province. The culture and dialects of the Minnan region are closely related to There are obvious differences in other regions and is considered an important cultural sub-region of Fujian Province. The economy of southern Fujian is mainly based on agriculture, fishery and industry, with agriculture growing rice, tea and fruits as the main industries. Scenic spots in southern Fujian There are many, including earth buildings, ancient villages and beautiful beaches. The food in the Southern Fujian region is also very distinctive, with seafood, pastries and Fujian cuisine as the main representatives. Overall, the Southern Fujian region is a local language full of charm and unique culture Gone are the days of doing whatever you want on Douyin”

As we all know, model training for speech recognition and machine translation requires a large amount of training data , but dialects are spread as spoken languages, and there is very little dialect data that can be used for model training. So, how did the Volcano Engine technical team that provided technical support for this feature make a breakthrough?

Dialect recognition stage

For a long time, Huoshan Voice The team provides intelligent video subtitle solutions based on speech recognition technology for popular video platforms. Simply put, it can automatically convert the voices and lyrics in the video into text to assist in video creation.

#In the process, the technical team discovered that traditional supervised learning would rely heavily on manually labeled supervised data. Especially in terms of continuous optimization of large languages and cold start of small languages. Taking major languages such as Chinese, Mandarin and English as an example, although the video platform provides a wealth of voice data for business scenarios, once the supervised data reaches a certain scale, the return on continued annotation will be very low. Therefore, technicians must think about how to effectively use millions of hours of unlabeled data to further improve the performance of large-language speech recognition

Relatively niche Language or dialect, due to resources, manpower and other reasons, the cost of data labeling is high. When there is very little labeled data (on the order of 10 hours), the effect of supervised training is very poor and may even fail to converge normally; and the purchased data often does not match the target scenario and cannot meet the needs of the business.

#In this regard, the team adopted the following solution:

- Low resource dialect self-supervision

Based on Wav2vec 2.0 self-supervised learning technology, our team proposed Efficient Wav2vec to achieve dialect ASR capabilities with very little labeled data. In order to solve the problems of slow training speed and unstable effect of Wav2vec2.0, we have taken improvement measures in two aspects. First, we use filterbank features instead of waveform to reduce the amount of calculation, shorten the sequence length, and simultaneously reduce the frame rate, thus doubling the training efficiency. Secondly, we have greatly improved the stability and effect of training through equal-length data streams and adaptive continuous masks.

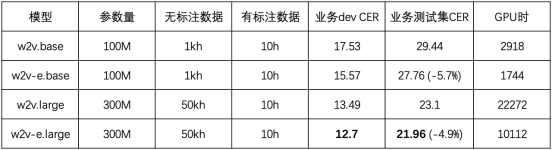

This experiment took 50,000 hours In order to keep the original meaning of the unlabeled voice and the 10-hour labeled voice, the content needs to be rewritten into Cantonese. Carried on. The results are shown in the table below. Compared with Wav2vec 2.0, Efficient Wav2vec (w2v-e) has a relative decrease of 5% in CER under the 100M and 300M parameter models, while the training overhead is halved

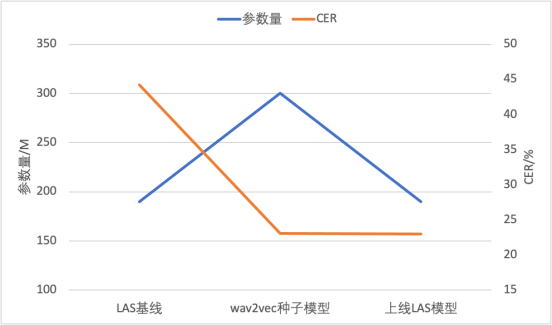

Further, the team used the CTC model fine-tuned by the self-supervised pre-training model as a seed model to pseudo-label the unlabeled data, and then provided it to an end-to-end LAS model with fewer parameters for training. . This not only realizes the migration of the model structure, but also reduces the amount of inference calculations, and can be directly deployed and launched on a mature end-to-end inference engine. This technique has been successfully applied to two low-resource dialects, achieving word error rates below 20% using only 10 hours of annotated data

Rewritten content: Comparison chart: model parameters and CER

Caption: Based on unsupervised training ASR The implementation process

- Dialect large-scale pretrain finetune training mode

After the completion of supervised data annotation, continuous optimization of the ASR model has become an important research direction. Semi-supervised or unsupervised learning has been very popular over the past period of time. The main idea of unsupervised pre-training is to make full use of unlabeled data sets to expand labeled data sets, so as to achieve better recognition results when processing a small amount of data. The following is the algorithm process:

(1) First, we need to use supervised data for manual annotation and train a seed model. Then, use this model to pseudo-label the unlabeled data. All predictions cannot be accurate, so some strategies need to be used to overtrain data with low value.

(3) Next, the generated pseudo labels need to be combined with the original labeled data, and joint training is performed on the merged data

Rewritten content: (4) Since a large amount of unsupervised data is added during the training process, even if the pseudo-label quality of unsupervised data is not as good as that of supervised data , but often more general representations can be obtained. We use a pre-trained model based on big data training to fine-tune the manually refined dialect data. This can retain the excellent generalization performance brought by the pre-trained model, while improving the model's recognition effect on dialects

The average CER (word error) of the five dialects Rate) from the content that needs to be rewritten is: 35.3% to 17.21%. Rewritten to: Optimize the average CER (Character Error Rate) of the five dialects from what needs to be rewritten: 35.3% to 17.21%

| #Average word error rate needs to be rewritten |

In order to keep the original meaning unchanged, the content needs to be rewritten into Cantonese. |

Southern Fujian is a region in Fujian Province, China, located on the southeastern coast of Fujian Province. The culture and dialects of the southern Fujian region are significantly different from other regions, and it is considered an important cultural sub-region of Fujian Province. The economy of southern Fujian is dominated by agriculture, fishery and industry, with the cultivation of rice, tea and fruits as the main agriculture industries. There are many scenic spots in southern Fujian, including earth buildings, ancient villages and beautiful beaches. The food in southern Fujian is also very unique, with seafood, pastries and Fujian cuisine as the main representatives. Overall, the southern Fujian region is a place full of charm and unique culture |

The rewritten content is: Beijing |

##中华国语 |

The content that needs to be rewritten is: Southwest Mandarin |

|

| ## Single dialect

|

The content that needs to be rewritten is: 35.3

|

##48.87 | 41.29 | #61.56##10.7 | ##The content that needs to be rewritten is: 100wh pre-trained dialect mixed fine-tuning | |

##17.21 |

13. 14 |

## What needs to be rewritten is: 19.60 |

19.50 |

10.95 |

##Dialect translation stage

# Under normal circumstances, the training of machine translation models requires the support of a large amount of corpus. However, dialects are usually transmitted in spoken form, and the number of dialect speakers today is decreasing year by year. These phenomena have increased the difficulty of collecting dialect data data, making it difficult to improve the effect of dialect machine translation

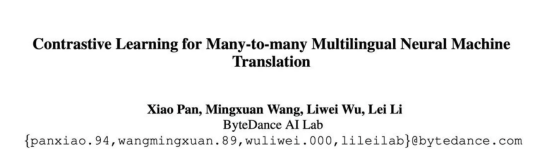

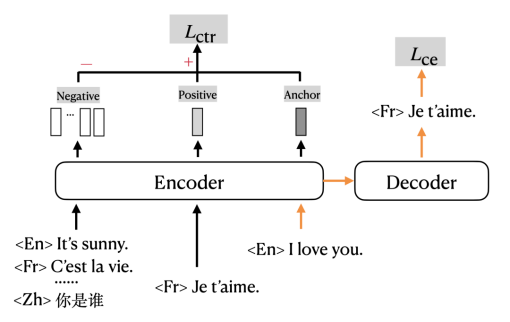

In order to solve the problem of insufficient dialect data, Huoshan The translation team proposed the multilingual translation models mRASP (multilingual Random Aligned Substitution Pre-training) and mRASP2, which introduced contrastive learning through , supplemented by the alignment enhancement method , to combine monolingual corpus and bilingual corpus Included under a unified training framework, make full use of corpus to learn better language-independent representations, thereby improving multi-language translation performance.

The design of adding contrastive learning tasks is based on a classic assumption: the encoded representations of synonymous sentences in different languages should be in adjacent positions in high-dimensional space. Because synonymous sentences in different languages have the same meaning, that is, the output of the "encoding" process is the same. For example, the two sentences "Good morning" and "Good morning" have the same meaning for people who understand Chinese and English. This also corresponds to the "encoded representation of adjacent positions in high-dimensional space". ".

Redesign training goals

mRASP2 in traditional On the basis of cross entropy loss, contrastive loss is added to train in a multi-task format. The orange arrow in the figure indicates the part that traditionally uses Cross Entropy Loss (CE loss) to train machine translation; the black part indicates the part corresponding to Contrastive Loss (CTR loss).

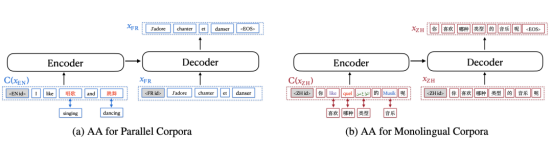

Word alignment data enhancement methodAlso known as Aligned Augmentation (AA) is developed from the Random Aligned Substitution (RAS) method of mRASP.

Experimental results show that mRASP2 achieves improved translation effects in supervised, unsupervised, and zero-resource scenarios. Among them, the average improvement of supervised scenarios is 1.98 BLEU, the average improvement of unsupervised scenarios is 14.13 BLEU, and the average improvement of zero-resource scenarios is 10.26 BLEU.

This method has achieved significant performance improvements in a wide range of scenarios, and can greatly alleviate the problem of insufficient training data for low-resource languages.

Write at the end

Dialects and Mandarin complement each other , are all important expressions of Chinese traditional culture. Dialect, as a way of expression, represents Chinese people's emotions and ties to their hometown. Through short videos and dialect translation, it can help users appreciate the culture from different regions across the country without any barriers.Currently, Douyin’s “Dialect Translation” function is It is supported that the content needs to be rewritten into Cantonese in order to maintain the original meaning. , Min, Wu (the rewritten content is: Beijing), the content that needs to be rewritten is: Southwest Mandarin (Sichuan), Central Plains Mandarin (Shaanxi, Henan), etc. It is said that more dialects will be supported in the future, let’s wait and see.

The above is the detailed content of All Douyin is speaking native dialects, two key technologies help you 'understand” local dialects. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

Bytedance Beanbao large model released, Volcano Engine full-stack AI service helps enterprises intelligently transform

Jun 05, 2024 pm 07:59 PM

Bytedance Beanbao large model released, Volcano Engine full-stack AI service helps enterprises intelligently transform

Jun 05, 2024 pm 07:59 PM

Tan Dai, President of Volcano Engine, said that companies that want to implement large models well face three key challenges: model effectiveness, inference costs, and implementation difficulty: they must have good basic large models as support to solve complex problems, and they must also have low-cost inference. Services allow large models to be widely used, and more tools, platforms and applications are needed to help companies implement scenarios. ——Tan Dai, President of Huoshan Engine 01. The large bean bag model makes its debut and is heavily used. Polishing the model effect is the most critical challenge for the implementation of AI. Tan Dai pointed out that only through extensive use can a good model be polished. Currently, the Doubao model processes 120 billion tokens of text and generates 30 million images every day. In order to help enterprises implement large-scale model scenarios, the beanbao large-scale model independently developed by ByteDance will be launched through the volcano

The marketing effect has been greatly improved, this is how AIGC video creation should be used

Jun 25, 2024 am 12:01 AM

The marketing effect has been greatly improved, this is how AIGC video creation should be used

Jun 25, 2024 am 12:01 AM

After more than a year of development, AIGC has gradually moved from text dialogue and picture generation to video generation. Looking back four months ago, the birth of Sora caused a reshuffle in the video generation track and vigorously promoted the scope and depth of AIGC's application in the field of video creation. In an era when everyone is talking about large models, on the one hand we are surprised by the visual shock brought by video generation, on the other hand we are faced with the difficulty of implementation. It is true that large models are still in a running-in period from technology research and development to application practice, and they still need to be tuned based on actual business scenarios, but the distance between ideal and reality is gradually being narrowed. Marketing, as an important implementation scenario for artificial intelligence technology, has become a direction that many companies and practitioners want to make breakthroughs. Once you master the appropriate methods, the creative process of marketing videos will be

The technical strength of Huoshan Voice TTS has been certified by the National Inspection and Quarantine Center, with a MOS score as high as 4.64

Apr 12, 2023 am 10:40 AM

The technical strength of Huoshan Voice TTS has been certified by the National Inspection and Quarantine Center, with a MOS score as high as 4.64

Apr 12, 2023 am 10:40 AM

Recently, the Volcano Engine speech synthesis product has obtained the speech synthesis enhanced inspection and testing certificate issued by the National Speech and Image Recognition Product Quality Inspection and Testing Center (hereinafter referred to as the "AI National Inspection Center"). It has met the basic requirements and extended requirements of speech synthesis. The highest level standard of AI National Inspection Center. This evaluation is conducted from the dimensions of Mandarin Chinese, multi-dialects, multi-languages, mixed languages, multi-timbrals, and personalization. The product’s technical support team, the Volcano Voice Team, provides a rich sound library. After evaluation, its timbre MOS score is the highest. It reached 4.64 points, which is at the leading level in the industry. As the first and only national quality inspection and testing agency for voice and image products in the field of artificial intelligence in my country’s quality inspection system, the AI National Inspection Center has been committed to promoting intelligent

Focusing on personalized experience, retaining users depends entirely on AIGC?

Jul 15, 2024 pm 06:48 PM

Focusing on personalized experience, retaining users depends entirely on AIGC?

Jul 15, 2024 pm 06:48 PM

1. Before purchasing a product, consumers will search and browse product reviews on social media. Therefore, it is becoming increasingly important for companies to market their products on social platforms. The purpose of marketing is to: Promote the sale of products Establish a brand image Improve brand awareness Attract and retain customers Ultimately improve the profitability of the company The large model has excellent understanding and generation capabilities and can provide users with personalized information by browsing and analyzing user data content recommendations. In the fourth issue of "AIGC Experience School", two guests will discuss in depth the role of AIGC technology in improving "marketing conversion rate". Live broadcast time: July 10, 19:00-19:45 Live broadcast topic: Retaining users, how does AIGC improve conversion rate through personalization? The fourth episode of the program invited two important

An in-depth exploration of the implementation of unsupervised pre-training technology and 'algorithm optimization + engineering innovation' of Huoshan Voice

Apr 08, 2023 pm 12:44 PM

An in-depth exploration of the implementation of unsupervised pre-training technology and 'algorithm optimization + engineering innovation' of Huoshan Voice

Apr 08, 2023 pm 12:44 PM

For a long time, Volcano Engine has provided intelligent video subtitle solutions based on speech recognition technology for popular video platforms. To put it simply, it is a function that uses AI technology to automatically convert the voices and lyrics in the video into text to assist in video creation. However, with the rapid growth of platform users and the requirement for richer and more diverse language types, the traditionally used supervised learning technology has increasingly reached its bottleneck, which has put the team in real trouble. As we all know, traditional supervised learning will rely heavily on manually annotated supervised data, especially in the continuous optimization of large languages and the cold start of small languages. Taking major languages such as Chinese, Mandarin and English as an example, although the video platform provides sufficient voice data for business scenarios, after the supervised data reaches a certain scale, it will continue to

All Douyin is speaking native dialects, two key technologies help you 'understand” local dialects

Oct 12, 2023 pm 08:13 PM

All Douyin is speaking native dialects, two key technologies help you 'understand” local dialects

Oct 12, 2023 pm 08:13 PM

During the National Day, Douyin’s “A word of dialect proves that you are from your hometown” campaign attracted enthusiastic participation from netizens from all over the country. The topic topped the Douyin challenge list, with more than 50 million views. This “Local Dialect Awards” quickly became popular on the Internet, which is inseparable from the contribution of Douyin’s newly launched local dialect automatic translation function. When the creators recorded short videos in their native dialect, they used the "automatic subtitles" function and selected "convert to Mandarin subtitles", so that the dialect speech in the video can be automatically recognized and the dialect content can be converted into Mandarin subtitles. This allows netizens from other regions to easily understand various "encrypted Mandarin" languages. Netizens from Fujian personally tested it and said that even the southern Fujian region with "different pronunciation" is a region in Fujian Province, China.

The 'Health + AI' ecological innovation competition jointly organized by Volcano Engine and Yili ended successfully

Jan 13, 2024 am 11:57 AM

The 'Health + AI' ecological innovation competition jointly organized by Volcano Engine and Yili ended successfully

Jan 13, 2024 am 11:57 AM

Health + AI =? Brain health nutrition solutions for middle-aged and elderly people, digital intelligent nutrition and health services, AIGC big health community solutions... With the unfolding of the "Health + AI" ecological innovation competition, each of them contains technological energy and empowers the health industry. Innovative solutions are about to come out, and the answer to "health + AI =?" is slowly emerging. On December 26, the "Health + AI" ecological innovation competition jointly sponsored by Yili Group and Volcano Engine came to a successful conclusion. Six winning companies, including Shanghai Bosten Network Technology Co., Ltd. and Zhongke Suzhou Intelligent Computing Technology Research Institute, stood out. In the competition that lasted for more than a month, Yili joined hands with outstanding scientific and technological enterprises to explore the deep integration of AI technology and the health industry, continuously raising expectations for the competition. "Health + AI" Ecological Innovation Competition

Barrier-free travel is safer! ByteDance's research results won the CVPR2022 AVA competition championship

Apr 08, 2023 pm 11:01 PM

Barrier-free travel is safer! ByteDance's research results won the CVPR2022 AVA competition championship

Apr 08, 2023 pm 11:01 PM

Recently, the results of various CVPR2022 competitions have been announced. ByteDance's intelligent creation AI platform "Byte-IC-AutoML" team won the Accessibility Vision and Autonomy Challenge (hereinafter referred to as AVA) based on synthetic data, relying on its self-developed The Parallel Pre-trained Transformers (PPT) framework stood out as the winner of the only track in the competition. Paper address: https://arxiv.org/abs/2206.10845 This AVA competition is sponsored by Boston University (Bos