The brilliance of SGD brings the significance of deep learning

Big Data Digest Produced

In July, New York University (NYU) postdoctoral fellow Naomi Saphra wrote an article titled "Interpretability Creationism", explaining it from an evolutionary perspective. The relationship between stochastic gradient descent (SGD) and deep learning is thought-provoking.

For example: "Just like the human tailbone, some phenomena may have lost their original role during the model training process and become similar to degenerate organs."

"Whether it is To study the behavior of parasitic chicks or the internal performance of neural networks, if you do not consider how the system develops, it will be difficult to distinguish what is valuable information."

The following is the original text, compiled without changing the original meaning. , please enjoy.

Centuries ago to Europeans, the presence of cuckoo eggs in nests was an honor for nesting birds. For the nesting bird enthusiastically feeds her "holy guests" even more diligently than she does her own (expelled) chicks, a behavior consistent with the spirit of Christian hospitality.

In 1859, Charles Darwin questioned the optimistic, cooperative notion of bird behavior by studying the finch, another occasionally parasitic finch.

Without considering the role of the cuckoo from an evolutionary perspective, it is difficult to realize that the nesting bird is not a generous owner of the cuckoo chicks, but an unfortunate victim .

As evolutionary biologist Theodosius Dobzhansky said: "Without the brilliance of evolution, nothing in biology is understandable."

Although stochastic gradient descent is not the true form of biological evolution, But post hoc analysis in machine learning has many similarities to the scientific method in biology, which often requires understanding the origins of a model's behavior.

Whether you are studying the behavior of parasitic chicks or the internal behavior of neural networks, it is difficult to discern what is valuable information without considering how the system develops.

Therefore, when analyzing the model, it is important to pay attention not only to the state at the end of training, but also to the multiple intermediate checkpoints during the training process. Such experiments are minimally expensive but may lead to meaningful findings that help to better understand and explain the behavior of the model.

Just the right story

Human beings are causal thinkers and like to look for causal relationships between things, even if there may be a lack of scientific basis.

In the field of NLP, researchers also tend to provide an explainable causal explanation for observed behavior, but this explanation may not really reveal the inner workings of the model. For example, one might pay close attention to interpretability artifacts such as syntactic attention distributions or selective neurons, but in reality we cannot be sure that the model is actually using these behavioral patterns.

To solve this problem, causal modeling can help. When we try to intervene (modify or manipulate) certain features and patterns of a model to test their impact on the model's behavior, this intervention may only target certain obvious, specific types of behavior. In other words, when trying to understand how a model uses specific features and patterns, we may only be able to observe some of these behaviors and ignore other potential, less obvious behaviors.

Thus, in practice, we may only be able to perform certain types of minor interventions on specific units in the representation, failing to correctly reflect the interactions between features.

We may introduce distribution shifts when trying to intervene (modify or manipulate) certain features and patterns of the model to test their impact on the behavior of the model. Significant distribution shifts can lead to erratic behavior, so why wouldn't they lead to spurious interpretability artifacts?

Translator's Note: Distribution shift refers to the difference between the statistical rules established by the model on the training data and the data after intervention. This difference may cause the model to fail to adapt to new data distributions and thus exhibit erratic behavior.

Fortunately, methods for studying biological evolution can help us understand some of the phenomena produced in the model. Just like the human tailbone, some phenomena may have lost their original role during the model training process and have become something like degenerate organs. Some phenomena may be interdependent, for example, the emergence of certain characteristics early in training may affect the subsequent development of other characteristics, just as animals need basic light sensing capabilities before developing complex eyes.

There are also some phenomena that may be due to competition between traits. For example, animals with strong smelling abilities may be less dependent on vision, so their visual abilities may be weakened. In addition, some phenomena may be just side effects of the training process, similar to the junk DNA in our genome. They occupy a large part of the genome but do not directly affect our appearance and function.

During the process of training the model, some unused phenomena may appear, and we have many theories to explain this phenomenon. For example, the information bottleneck hypothesis predicts that early in training, input information will be memorized and then compressed in the model, retaining only information relevant to the output. These early memories may not always be useful when processing unseen data, but they are very important for eventually learning a specific output representation.

We can also consider the possibility of degenerate features, because the early and late behaviors of the trained model are very different. Early models were simpler. Taking language models as an example, early models are similar to simple n-gram models, while later models can express more complex language patterns. This mixing in the training process can have side effects that can easily be mistaken for being a critical part of training the model.

Evolutionary Perspective

It is very difficult to understand the learning tendency of a model based only on the features after training. According to the work of Lovering et al., observing the ease of feature extraction at the beginning of training and analyzing the fine-tuning data has a much deeper impact on understanding fine-tuning performance than simply analyzing it at the end of training.

Language layered behavior is a typical explanation based on analytical static models. It has been suggested that words that are close together in the sentence structure will be represented closer in the model, while words that are structurally further apart will be represented further apart. So how do we know that the model is grouping words by their closeness in sentence structure?

In fact, we can say with more confidence that some language models are hierarchical because early models encode more local information in the long short-term memory network (LSTM) and Transformer, and when When these dependencies can be stacked hierarchically on familiar short components, they make it easier to learn more distant dependencies.

An actual case was encountered when dealing with the problem of interpretive creationism. When training a text classifier multiple times using different random seeds, it can be observed that the model is distributed in several different clusters. It was also found that the generalization behavior of a model can be predicted by observing how well the model connects to other models on the loss surface. In other words, depending on where the loss appears on the surface, the generalization performance of the model may vary. This phenomenon may be related to the random seeds used during training.

But can it really be said? What if a cluster actually corresponds to an early stage of the model? If a cluster actually only represents an early stage of the model, eventually those models may shift to a cluster with better generalization performance. Therefore, in this case, the observed phenomena simply indicate that some fine-tuning processes are slower than others.

It is necessary to prove that the training trajectory may fall into a basin on the loss surface to explain the diversity of generalization behavior in the trained model. In fact, after examining several checkpoints during training, it was found that a model at the center of a cluster develops stronger connections with other models in its cluster during training. However, some models are still able to successfully shift to a better cluster.

A Suggestion

For answering the research question, simply observing the training process is not enough. In the search for causal relationships, intervention is required. Take the study of antibiotic resistance in biology, for example. Researchers need to deliberately expose bacteria to antibiotics and cannot rely on natural experiments. Therefore, statements based on observations of training dynamics require experimental confirmation.

Not all statements require observation of the training process. In the eyes of ancient humans, many organs had obvious functions, such as eyes for seeing, and the heart for pumping blood. In the field of natural language processing (NLP), by analyzing static models, we can make simple interpretations, such as that specific neurons fire in the presence of specific attributes, or that certain types of information are still available in the model.

However, observations of the training process can still clarify the meaning of many observations made in static models. This means that, although not all problems require observation of the training process, in many cases it is helpful to understand the training process to understand the observations.

The advice is simple: when studying and analyzing a trained model, don’t just focus on the final results of the training process. Instead, the analysis should be applied to multiple intermediate checkpoints during training; when fine-tuning the model, check several points early and late in training. It is important to observe changes in model behavior during training, which can help researchers better understand whether the model strategy is reasonable and evaluate the model strategy after observing what happens early in training.

Reference link: https://thegradient.pub/interpretability-creationism/

The above is the detailed content of The brilliance of SGD brings the significance of deep learning. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

This article will take you to understand SHAP: model explanation for machine learning

Jun 01, 2024 am 10:58 AM

This article will take you to understand SHAP: model explanation for machine learning

Jun 01, 2024 am 10:58 AM

In the fields of machine learning and data science, model interpretability has always been a focus of researchers and practitioners. With the widespread application of complex models such as deep learning and ensemble methods, understanding the model's decision-making process has become particularly important. Explainable AI|XAI helps build trust and confidence in machine learning models by increasing the transparency of the model. Improving model transparency can be achieved through methods such as the widespread use of multiple complex models, as well as the decision-making processes used to explain the models. These methods include feature importance analysis, model prediction interval estimation, local interpretability algorithms, etc. Feature importance analysis can explain the decision-making process of a model by evaluating the degree of influence of the model on the input features. Model prediction interval estimate

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Written previously, today we discuss how deep learning technology can improve the performance of vision-based SLAM (simultaneous localization and mapping) in complex environments. By combining deep feature extraction and depth matching methods, here we introduce a versatile hybrid visual SLAM system designed to improve adaptation in challenging scenarios such as low-light conditions, dynamic lighting, weakly textured areas, and severe jitter. sex. Our system supports multiple modes, including extended monocular, stereo, monocular-inertial, and stereo-inertial configurations. In addition, it also analyzes how to combine visual SLAM with deep learning methods to inspire other research. Through extensive experiments on public datasets and self-sampled data, we demonstrate the superiority of SL-SLAM in terms of positioning accuracy and tracking robustness.

The evolution of artificial intelligence in space exploration and human settlement engineering

Apr 29, 2024 pm 03:25 PM

The evolution of artificial intelligence in space exploration and human settlement engineering

Apr 29, 2024 pm 03:25 PM

In the 1950s, artificial intelligence (AI) was born. That's when researchers discovered that machines could perform human-like tasks, such as thinking. Later, in the 1960s, the U.S. Department of Defense funded artificial intelligence and established laboratories for further development. Researchers are finding applications for artificial intelligence in many areas, such as space exploration and survival in extreme environments. Space exploration is the study of the universe, which covers the entire universe beyond the earth. Space is classified as an extreme environment because its conditions are different from those on Earth. To survive in space, many factors must be considered and precautions must be taken. Scientists and researchers believe that exploring space and understanding the current state of everything can help understand how the universe works and prepare for potential environmental crises

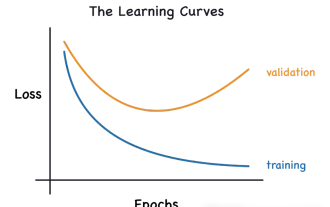

Identify overfitting and underfitting through learning curves

Apr 29, 2024 pm 06:50 PM

Identify overfitting and underfitting through learning curves

Apr 29, 2024 pm 06:50 PM

This article will introduce how to effectively identify overfitting and underfitting in machine learning models through learning curves. Underfitting and overfitting 1. Overfitting If a model is overtrained on the data so that it learns noise from it, then the model is said to be overfitting. An overfitted model learns every example so perfectly that it will misclassify an unseen/new example. For an overfitted model, we will get a perfect/near-perfect training set score and a terrible validation set/test score. Slightly modified: "Cause of overfitting: Use a complex model to solve a simple problem and extract noise from the data. Because a small data set as a training set may not represent the correct representation of all data." 2. Underfitting Heru

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Common challenges faced by machine learning algorithms in C++ include memory management, multi-threading, performance optimization, and maintainability. Solutions include using smart pointers, modern threading libraries, SIMD instructions and third-party libraries, as well as following coding style guidelines and using automation tools. Practical cases show how to use the Eigen library to implement linear regression algorithms, effectively manage memory and use high-performance matrix operations.

Explainable AI: Explaining complex AI/ML models

Jun 03, 2024 pm 10:08 PM

Explainable AI: Explaining complex AI/ML models

Jun 03, 2024 pm 10:08 PM

Translator | Reviewed by Li Rui | Chonglou Artificial intelligence (AI) and machine learning (ML) models are becoming increasingly complex today, and the output produced by these models is a black box – unable to be explained to stakeholders. Explainable AI (XAI) aims to solve this problem by enabling stakeholders to understand how these models work, ensuring they understand how these models actually make decisions, and ensuring transparency in AI systems, Trust and accountability to address this issue. This article explores various explainable artificial intelligence (XAI) techniques to illustrate their underlying principles. Several reasons why explainable AI is crucial Trust and transparency: For AI systems to be widely accepted and trusted, users need to understand how decisions are made

Five schools of machine learning you don't know about

Jun 05, 2024 pm 08:51 PM

Five schools of machine learning you don't know about

Jun 05, 2024 pm 08:51 PM

Machine learning is an important branch of artificial intelligence that gives computers the ability to learn from data and improve their capabilities without being explicitly programmed. Machine learning has a wide range of applications in various fields, from image recognition and natural language processing to recommendation systems and fraud detection, and it is changing the way we live. There are many different methods and theories in the field of machine learning, among which the five most influential methods are called the "Five Schools of Machine Learning". The five major schools are the symbolic school, the connectionist school, the evolutionary school, the Bayesian school and the analogy school. 1. Symbolism, also known as symbolism, emphasizes the use of symbols for logical reasoning and expression of knowledge. This school of thought believes that learning is a process of reverse deduction, through existing

Is Flash Attention stable? Meta and Harvard found that their model weight deviations fluctuated by orders of magnitude

May 30, 2024 pm 01:24 PM

Is Flash Attention stable? Meta and Harvard found that their model weight deviations fluctuated by orders of magnitude

May 30, 2024 pm 01:24 PM

MetaFAIR teamed up with Harvard to provide a new research framework for optimizing the data bias generated when large-scale machine learning is performed. It is known that the training of large language models often takes months and uses hundreds or even thousands of GPUs. Taking the LLaMA270B model as an example, its training requires a total of 1,720,320 GPU hours. Training large models presents unique systemic challenges due to the scale and complexity of these workloads. Recently, many institutions have reported instability in the training process when training SOTA generative AI models. They usually appear in the form of loss spikes. For example, Google's PaLM model experienced up to 20 loss spikes during the training process. Numerical bias is the root cause of this training inaccuracy,