What are some classic cases where deep learning is not as effective as traditional methods?

As one of the most cutting-edge fields of technology, deep learning is often considered the key to technological progress. But are there cases where deep learning is less effective than traditional methods? This article collects some high-quality answers from Zhihu to that question.

Question link: https://www.zhihu.com/question/451498156

# Answer 1

Author: Jueleizai

Source link: https://www.zhihu.com/question/451498156/answer/1802577845

In fields that require interpretability, deep learning basically cannot compete with traditional methods. I have worked on risk-control and anti-money-laundering products for the past few years, and regulations require our decisions to be explainable. We tried deep learning, but explainability was hard to achieve and the results were not very good either. In risk-control scenarios, data cleaning is critical; otherwise it is simply garbage in, garbage out.

When writing the above content, I remembered an article I read two years ago: "You don’t need ML/AI, you need SQL"

https://www.php.cn/link/f0e1f0412f36e086dc5f596b84370e86

The author is Celestine Omin, a Nigerian software engineer who works at Konga, one of the largest e-commerce websites in Nigeria. Precision marketing and personalized recommendations for existing users are among the most common applications of AI. While others were using deep learning to make recommendations, his method was extremely simple. He queried the database, picked out all users who had not logged in for three months, and sent them coupons. He also went through the products in users' shopping carts and decided which related products to recommend based on those popular items.
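As a rough illustration of how little machinery the "inactive for three months" filter needs, here is a minimal sketch using Python's built-in `sqlite3` module. The `users` table, its columns, the sample rows, and the reference date are all assumptions made up for this example; they are not Omin's actual schema or queries.

```python
import sqlite3

# Hypothetical schema and data, invented for illustration only.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, last_login TEXT);
    INSERT INTO users VALUES
        (1, 'a@example.com', '2021-01-05'),
        (2, 'b@example.com', '2021-03-28'),
        (3, 'c@example.com', '2020-11-17');
""")

def inactive_users(conn, today, months=3):
    """Return (id, email) for users whose last login is more than
    `months` months before `today` (ISO date string)."""
    query = """
        SELECT id, email
        FROM users
        WHERE last_login < date(?, ?)
        ORDER BY id
    """
    # SQLite's date() accepts a modifier such as '-3 months'.
    return conn.execute(query, (today, f"-{months} months")).fetchall()

# Users who would receive a coupon if the campaign ran on 2021-04-01.
coupon_targets = inactive_users(conn, today="2021-04-01")
print(coupon_targets)
```

The whole "model" is a single `WHERE` clause, so it is cheap to run and easy to explain to anyone who asks why a given user received a coupon.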

The result of his simple SQL-based personalized recommendations: while the open rate of most marketing emails is 7-10%, his emails, when done well, reached open rates close to 25-30%, about three times the industry average.

Of course, the point of this example is not that recommendation algorithms are useless and everyone should use SQL. The point is that when applying deep learning, you need to consider constraints such as cost and the application scenario. In a previous answer ("What exactly does an algorithm engineer's implementation ability refer to?"), I mentioned that practical constraints must be considered when deploying algorithms.

https://www.php.cn/link/f0e1f0412f36e086dc5f596b84370e86

Nigeria's e-commerce environment is still very underdeveloped, and logistics cannot keep up. Even if a deep learning method improved the recommendation results, it would not have much impact on the company's overall profits.

Therefore, the algorithm must be "adapted to local conditions" when implemented. Otherwise, the situation of "the electric fan blowing the soap box" will occur again.

A large company introduced a soap packaging production line, but found that this production line had a flaw: there were often boxes without soap. They couldn't sell empty boxes to customers, so they had to hire a postdoc who studied automation to design a plan to sort empty soap boxes.

The postdoctoral fellow organized a scientific research team of more than a dozen people and used a combination of machinery, microelectronics, automation, X-ray detection and other technologies, spending 900,000 yuan to successfully solve the problem. Whenever an empty soap box passes through the production line, detectors on both sides will detect it and drive a robot to push the empty soap box away.

A township enterprise in southern China bought the same production line. When the boss found out about this problem, he got very angry, called over a junior worker, and said, "Fix this for me, or get out." The worker quickly figured out a way. He spent 190 yuan on a high-power electric fan, placed it next to the production line, and let it blow hard, so that all the empty soap boxes were blown away.

(Although it’s just a joke)

Deep learning is a hammer, but not everything in the world is a nail.

# Answer 2

Author: Mo Xiao Fourier

Source link: https://www.zhihu.com/question/451498156/answer/1802730183

There are two common scenarios:

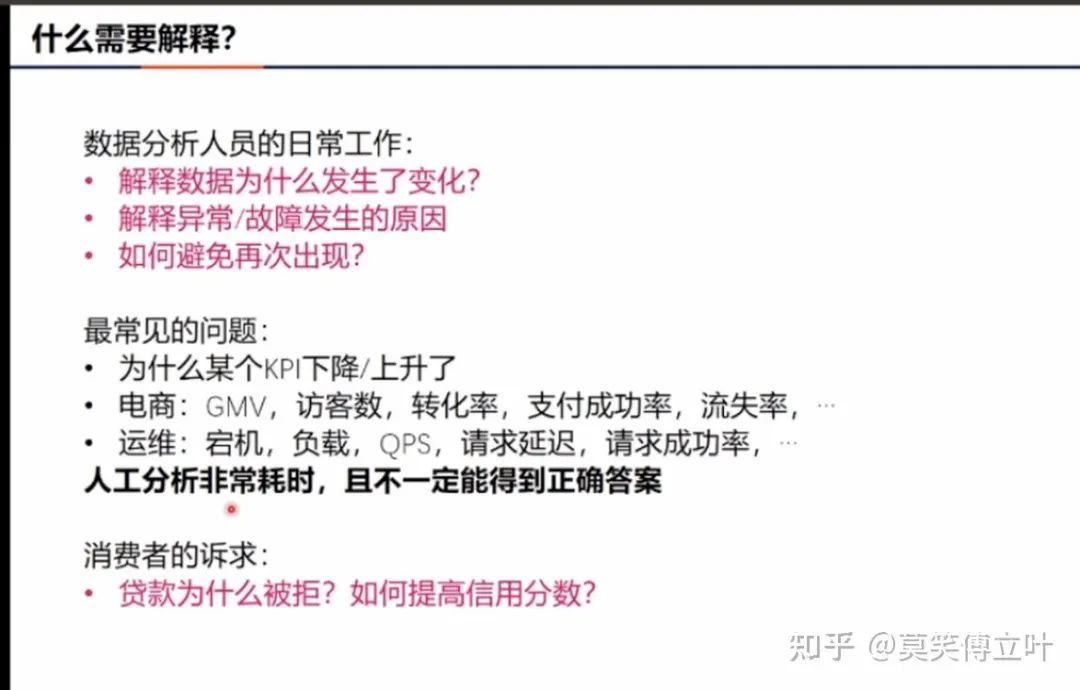

1. Scenarios that pursue explainability.

Deep learning is very good at solving classification and regression problems, but it is very weak at explaining what drives its results. In real business scenarios where the interpretability requirements are very high, deep learning often falls flat.

2. Many operations-optimization scenarios, such as scheduling, planning, and allocation problems.

Such problems often do not translate well into a supervised learning format, so optimization algorithms are typically used instead. In current research, deep learning is often integrated into the solution process to find better solutions, but in general the backbone of the model is not yet deep learning.
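To make the point concrete, here is a minimal sketch of an allocation problem solved by direct search rather than by learning from labeled examples. The cost matrix and the function name `best_assignment` are hypothetical, chosen only for illustration. The exhaustive search is a stand-in for a real optimization algorithm: it is only feasible for tiny instances, and practical systems would use something like the Hungarian algorithm or an integer-programming solver.

```python
from itertools import permutations

def best_assignment(cost):
    """Exhaustively search all one-to-one worker-to-task assignments
    and return (minimum total cost, task index chosen for each worker)."""
    n = len(cost)
    best_total, best_perm = None, None
    for perm in permutations(range(n)):
        # perm[w] is the task given to worker w in this candidate plan.
        total = sum(cost[w][perm[w]] for w in range(n))
        if best_total is None or total < best_total:
            best_total, best_perm = total, perm
    return best_total, list(best_perm)

# Hypothetical costs: cost[w][t] is the cost of worker w doing task t.
cost = [
    [4, 1, 3],
    [2, 0, 5],
    [3, 2, 2],
]
total, assignment = best_assignment(cost)
print(total, assignment)
```

There is no training set here: the problem is fully specified by the costs and the one-to-one constraint, which is why it fits an optimization formulation more naturally than a supervised one.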

In short, deep learning is a very good solution, but it is not the only one, and it still faces significant problems when deployed. Integrated into an optimization algorithm as one component of the solution, however, it can still be of great use.

# Answer 3

Author: LinT

Source link: https://www.zhihu.com/question/451498156/answer/1802516688

This question should be looked at based on scenarios. Although deep learning eliminates the trouble of feature engineering, it may be difficult to apply in some scenarios:

- The application has strict latency requirements but less strict accuracy requirements; a simple model may be the better choice in this case;

- Some data types, such as tabular data, may be better suited to statistical learning models such as tree-based models than to deep learning models;

- The model's decisions have significant consequences, as in safety-related and economic decision-making, and the model is required to be interpretable; linear models or tree-based models are then a better choice than deep learning;

- The application scenario makes data collection difficult, so there is a risk of over-fitting when using deep learning.
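The interpretability point in the list above can be sketched in a few lines. In a linear model, each feature's contribution to the score is simply its weight times its value, so every decision comes with a ranked list of reasons. The feature names, weights, and bias below are hypothetical values invented for illustration, not taken from any real risk model.

```python
def explain_score(weights, bias, features):
    """Score one case with a linear model and return the score together
    with each feature's contribution, most influential first."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    # Sort by absolute impact so the strongest drivers come first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked

# Hypothetical weights and features, invented for illustration only.
weights = {"transfers_last_24h": 2, "account_age_years": -1, "new_payees": 3}
bias = -5
case = {"transfers_last_24h": 4, "account_age_years": 2, "new_payees": 1}

score, reasons = explain_score(weights, bias, case)
print(score)
for name, contribution in reasons:
    print(f"{name}: {contribution:+d}")
```

A reviewer can read off directly which inputs pushed the score up or down. A deep network offers no comparably direct decomposition of its output.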

Real applications are all driven by requirements. It is unscientific to talk about performance without regard to requirements (accuracy, latency, computing power consumption). If "outperforming" in the question is limited to a single metric, the scope of the discussion may be narrowed.

Original link: https://mp.weixin.qq.com/s/tO2OD772qCntNytwqPjUsA
