Microsoft releases AI content moderation tool called Azure AI Content Safety

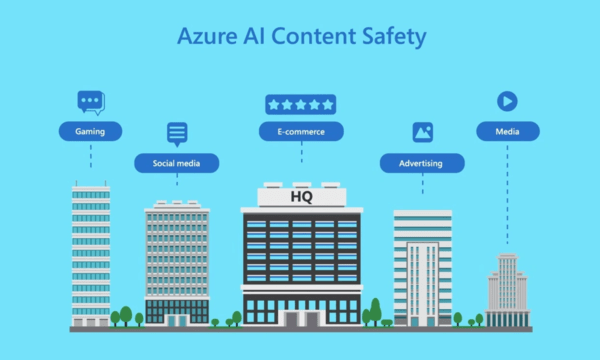

[CNMO News] After months of testing, Microsoft has officially released its AI content moderation tool, Azure AI Content Safety. The product, which Microsoft reportedly began testing in May this year, includes a set of trained AI models that detect negative content related to prejudice, hatred, violence, and similar categories in images or text, and it can understand images or text in eight languages.

Additionally, the moderation tool assigns a severity score to flagged content and guides human reviewers toward content that requires action. The tool was initially integrated into the Azure OpenAI service, but it is now being rolled out as a standalone system.
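As a rough illustration of how severity scores might guide human reviewers, the following minimal Python sketch routes content by its highest score. It does not use the actual Azure AI Content Safety API; the `CategoryResult` type, the `triage` function, and both thresholds are hypothetical, and the real severity scale is not given in this article.

```python
from dataclasses import dataclass

# Hypothetical thresholds: the article does not state the real severity scale.
REVIEW_THRESHOLD = 2
BLOCK_THRESHOLD = 6


@dataclass
class CategoryResult:
    category: str  # e.g. "hate" or "violence" (illustrative names)
    severity: int  # assumed: a higher number means more severe content


def triage(results: list[CategoryResult]) -> str:
    """Return "allow", "review", or "block" based on the worst severity."""
    worst = max((r.severity for r in results), default=0)
    if worst >= BLOCK_THRESHOLD:
        return "block"
    if worst >= REVIEW_THRESHOLD:
        return "review"  # send to a human reviewer
    return "allow"
```

In this sketch only mid-range scores go to a human reviewer, which reflects the article's point that flagged content is prioritized for people rather than decided entirely by the model.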

According to Microsoft's official blog post, this means that users can apply the tool to AI-generated content from open-source models and from other companies' models, as well as to user-generated content, which further broadens its usefulness.

Microsoft said that although the product has improved at handling data and content in order to understand context more impartially, it still relies on human reviewers to flag data and content. This means that fairness ultimately rests with humans. However, human reviewers may not be completely neutral or prudent when handling data and content, because of their own personal biases.

