AI interprets brain signals in real time and restores key visual features of images at 7x speed, forwarded by LeCun

Now, AI can interpret brain signals in real time!

This is not hype: a new study from Meta can guess, from brain signals alone, which picture you are looking at within 0.5 seconds, and use AI to reconstruct it in real time.

AI could already reconstruct images from brain signals fairly accurately, but with one drawback: it was not fast enough.

To address this, Meta developed a new decoding model that makes AI image retrieval 7 times faster. It can almost "instantly" read what a person is looking at and make a rough guess.

For example, when the picture on screen showed a standing man, the AI, after several rounds of reconstruction, did indeed decode a "standing man".

LeCun shared the work, saying that research on reconstructing visual and other inputs from MEG brain signals is indeed great.

So how does Meta get AI to "read brains quickly"?

How does the brain-activity decoding work?

There are currently two main brain-imaging techniques that AI can use to read brain signals and reconstruct images.

One is fMRI (functional magnetic resonance imaging), which images blood flow to specific regions of the brain; the other is MEG (magnetoencephalography), which measures the extremely weak biomagnetic signals produced by neural currents in the brain.

However, fMRI is slow, typically producing one brain image about every 2 seconds (≈0.5 Hz). MEG, in contrast, can record thousands of measurements of brain activity per second (≈5,000 Hz).
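To make the speed gap concrete, here is a back-of-the-envelope calculation using the approximate rates above and the 0.5-second display time per image in the THINGS-MEG data set. It only counts raw samples; it is an illustration of sampling rates, not a model of either technique.

```python
# Rough count of brain measurements captured while one image is on screen,
# using the approximate rates quoted in the article (fMRI ~0.5 Hz, MEG ~5000 Hz).

def samples_in_window(rate_hz: float, window_s: float) -> float:
    """Number of samples recorded at rate_hz during window_s seconds."""
    return rate_hz * window_s

STIMULUS_S = 0.5  # each image is displayed for 0.5 seconds

fmri = samples_in_window(0.5, STIMULUS_S)   # a quarter of one brain volume
meg = samples_in_window(5000, STIMULUS_S)   # readings per MEG sensor channel

print(f"fMRI: {fmri} volumes, MEG: {meg:.0f} samples per channel")
```

In other words, fMRI does not even complete one image of the brain while a picture is on screen, whereas MEG collects thousands of readings per sensor.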

So why not use MEG instead of fMRI to try to reconstruct the "image a person sees"?

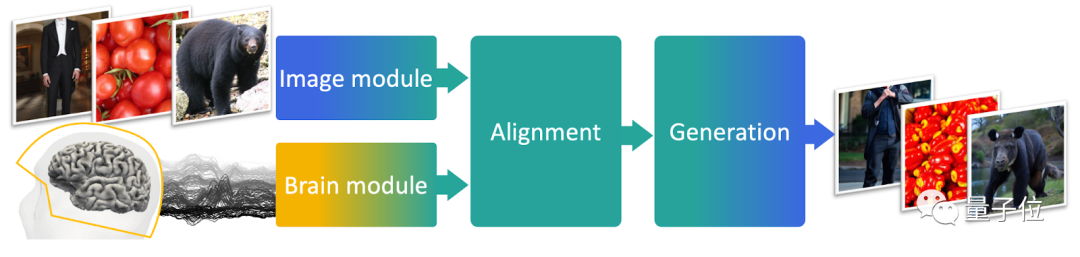

Based on this idea, the authors designed an MEG decoding model consisting of three parts.

The first part is a pre-trained model that extracts embeddings from images;

The second part is a module trained end to end that aligns MEG data with those image embeddings;

The third part is a pre-trained image generator that reconstructs the final image.
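The article does not say how the second part aligns MEG data with image embeddings. A common choice for this kind of alignment is a CLIP-style contrastive (InfoNCE) loss, so the sketch below assumes one: within a batch, each MEG embedding should be most similar to the embedding of the image shown on that trial. It is a one-directional (MEG-to-image) variant in pure Python, not the authors' implementation, and the temperature value is an assumption.

```python
import math

# ASSUMED alignment objective: CLIP-style contrastive (InfoNCE) loss.
# Row i of meg_emb and row i of img_emb come from the same trial;
# every other image in the batch acts as a negative example.

def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def contrastive_loss(meg_emb, img_emb, temperature=0.07):
    meg = [normalize(v) for v in meg_emb]
    img = [normalize(v) for v in img_emb]
    total = 0.0
    for i, m in enumerate(meg):
        # Cosine similarity of MEG embedding i with every image embedding.
        logits = [sum(a * b for a, b in zip(m, v)) / temperature for v in img]
        # Cross-entropy with the matching image (index i) as the target,
        # using the log-sum-exp trick for numerical stability.
        mx = max(logits)
        log_z = mx + math.log(sum(math.exp(l - mx) for l in logits))
        total += log_z - logits[i]
    return total / len(meg)

images = [[1.0, 0.0], [0.0, 1.0]]
aligned = [[0.9, 0.1], [0.1, 0.9]]   # MEG embeddings close to the right images
swapped = [[0.1, 0.9], [0.9, 0.1]]   # MEG embeddings close to the wrong images
print(contrastive_loss(aligned, images) < contrastive_loss(swapped, images))  # True
```

Training pushes the loss down, which means each MEG embedding ends up closest to the embedding of the image actually seen; the generator in the third part can then work from that predicted embedding.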

For training, the researchers used a data set called THINGS-MEG, which contains MEG recordings from four young adults (two men and two women, mean age 23.25 years) made while they viewed images.

Together, the participants viewed 22,448 images from 1,854 object categories. Each image was shown for 0.5 seconds, followed by an interval of 0.8 to 1.2 seconds, and 200 of the images were shown repeatedly.

In addition, 3,659 images that were never shown to the participants were also used as candidates for image retrieval.
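Retrieval here means ranking a pool of candidate images (including ones no participant saw) by how close their embeddings are to the embedding decoded from MEG. The minimal sketch below assumes cosine similarity and a top-k accuracy metric; the function names and toy vectors are hypothetical, chosen only to show the mechanics.

```python
import math

# Sketch of embedding-based image retrieval. ASSUMPTIONS: the decoder has
# already produced a predicted embedding from MEG, and every candidate image
# has a precomputed embedding of the same dimension.

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def retrieve(pred, candidates, k=5):
    """Return indices of the k candidates most similar to pred, best first."""
    ranked = sorted(range(len(candidates)),
                    key=lambda j: cosine(pred, candidates[j]), reverse=True)
    return ranked[:k]

def top_k_accuracy(preds, candidates, true_idx, k=5):
    """Fraction of trials whose true image is among the top-k retrieved."""
    hits = sum(t in retrieve(p, candidates, k) for p, t in zip(preds, true_idx))
    return hits / len(preds)

candidates = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7], [-1.0, 0.0]]
preds = [[0.9, 0.2], [0.1, 1.0]]                  # decoded embeddings, two trials
print(retrieve(preds[0], candidates, k=2))        # [0, 2]
print(top_k_accuracy(preds, candidates, [0, 1], k=1))  # 1.0
```

Adding unseen images to the candidate pool makes the task harder, because the decoder must single out the correct image among more distractors.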

So how well does an AI trained this way perform?

Image retrieval 7 times faster

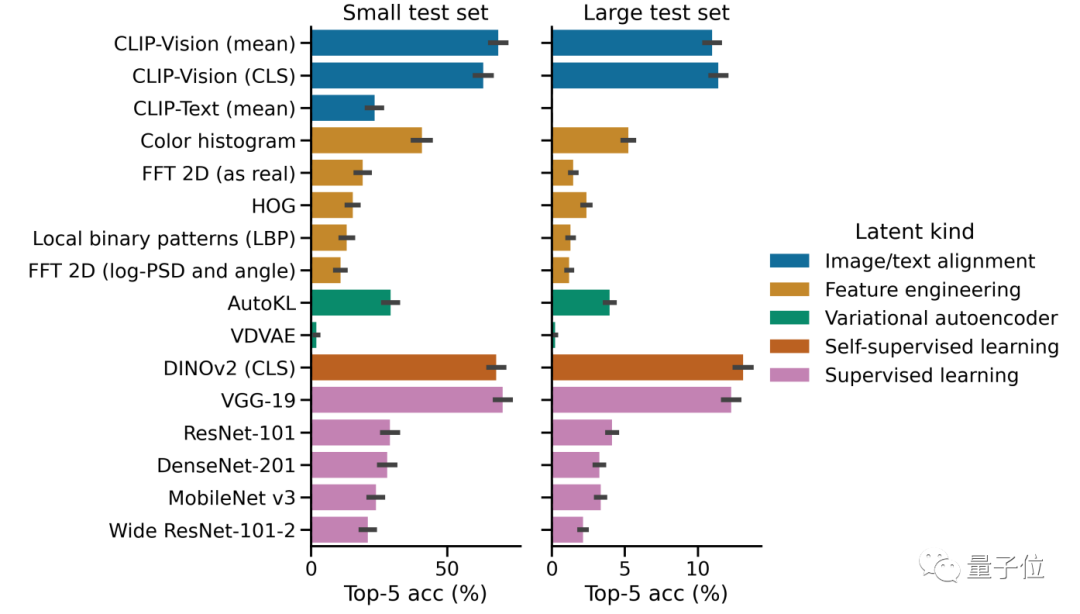

Overall, the MEG decoding model designed in this study retrieves images 7 times faster than a linear decoder.

Among the image models tested, Meta's vision Transformer DINOv2 extracted image features better than CLIP and the other models, and its embeddings aligned best with the MEG data.

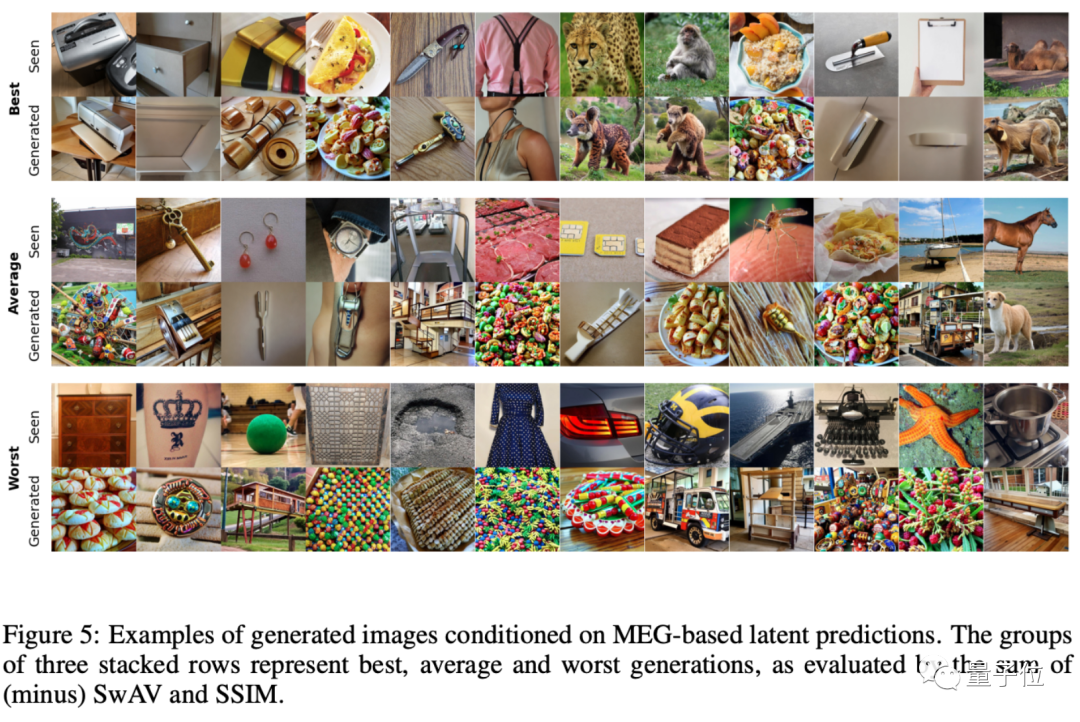

The authors sorted the generated images into three groups according to how well they match the original: best, medium, and worst.
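The article does not say how the three groups were formed. As a hypothetical illustration only, the sketch below assumes each reconstruction has a similarity score against the image actually seen (higher is better) and splits the ranked list into thirds.

```python
# Hypothetical sketch: rank reconstructions by an ASSUMED similarity score
# (higher = closer to the image actually seen) and split them into
# best, medium, and worst thirds.

def split_into_thirds(scores):
    """Return (best, medium, worst) lists of indices, ranked by score."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    n = len(order)
    cut1, cut2 = n // 3, 2 * n // 3
    return order[:cut1], order[cut1:cut2], order[cut2:]

scores = [0.82, 0.15, 0.47, 0.91, 0.33, 0.60]
best, medium, worst = split_into_thirds(scores)
print(best, medium, worst)  # [3, 0] [5, 2] [4, 1]
```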

However, judging from the generated examples, the reconstruction quality is admittedly not very good.

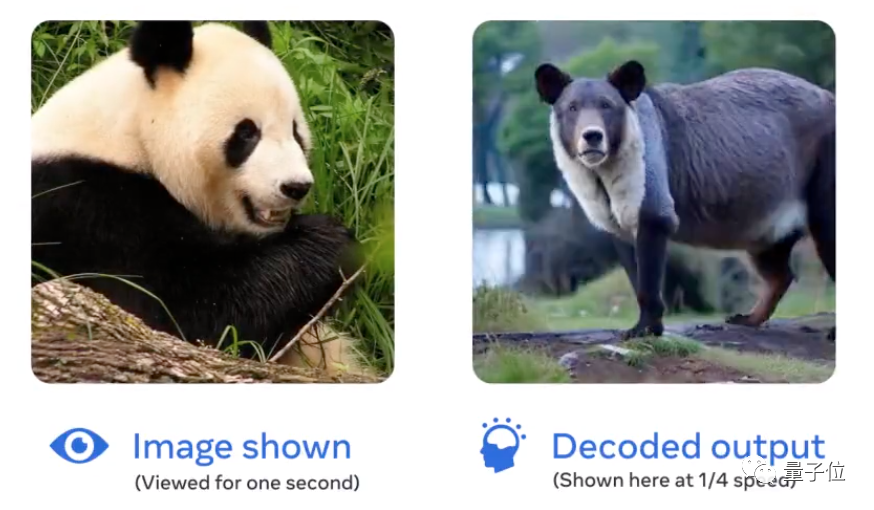

Even the best reconstructions drew questions from some netizens: why does the panda look nothing like a panda?

The author replied: at least it looks like a black-and-white bear. (The panda is furious!)

Of course, the researchers also acknowledge that images reconstructed from MEG data are not yet very good; the main advantage is still speed.

For comparison, earlier work using 7T (7-tesla) fMRI data from the University of Minnesota and other institutions can reconstruct what a person saw from fMRI data with high fidelity.

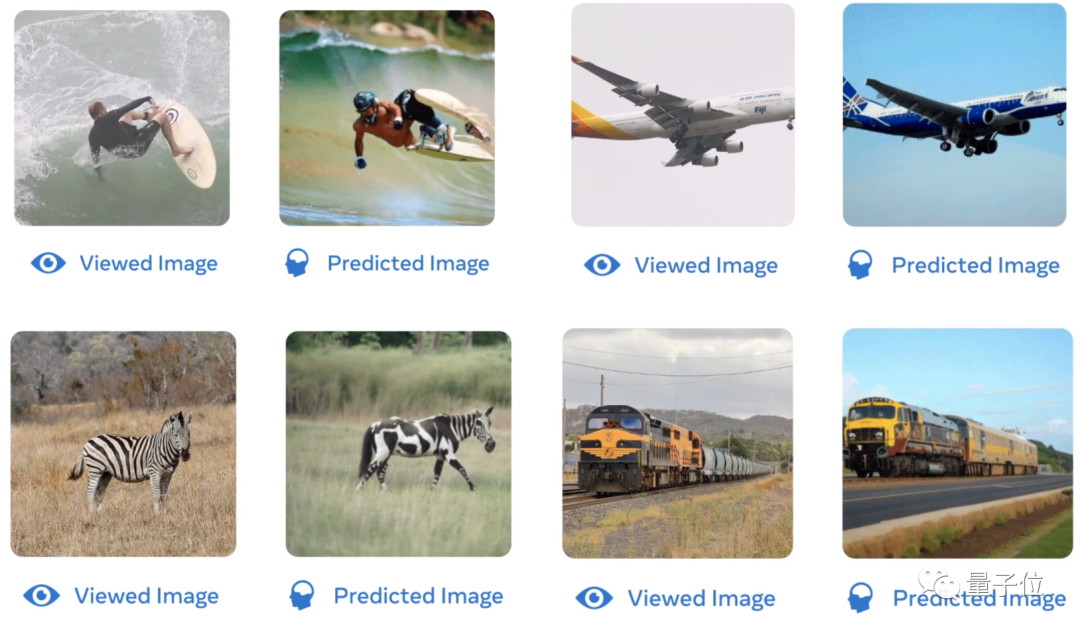

Whether it is a person surfing, the shape of an airplane, the color of zebras, or the background behind a train, AI trained on fMRI data reconstructs the image more faithfully.

The authors explain that this is because the visual features recovered from MEG are biased toward high-level features.

By contrast, 7T fMRI can capture and recover lower-level visual features of the image, so the generated images match the originals more closely overall.

Where do you think this type of research can be used?

Paper address:

https://www.php.cn/link/f40723ed94042ea9ea36bfb5ad4157b2

The above is the detailed content of AI interprets brain signals in real time and restores key visual features of images at 7x speed, forwarded by LeCun. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1378

1378

52

52

Use ddrescue to recover data on Linux

Mar 20, 2024 pm 01:37 PM

Use ddrescue to recover data on Linux

Mar 20, 2024 pm 01:37 PM

DDREASE is a tool for recovering data from file or block devices such as hard drives, SSDs, RAM disks, CDs, DVDs and USB storage devices. It copies data from one block device to another, leaving corrupted data blocks behind and moving only good data blocks. ddreasue is a powerful recovery tool that is fully automated as it does not require any interference during recovery operations. Additionally, thanks to the ddasue map file, it can be stopped and resumed at any time. Other key features of DDREASE are as follows: It does not overwrite recovered data but fills the gaps in case of iterative recovery. However, it can be truncated if the tool is instructed to do so explicitly. Recover data from multiple files or blocks to a single

Open source! Beyond ZoeDepth! DepthFM: Fast and accurate monocular depth estimation!

Apr 03, 2024 pm 12:04 PM

Open source! Beyond ZoeDepth! DepthFM: Fast and accurate monocular depth estimation!

Apr 03, 2024 pm 12:04 PM

0.What does this article do? We propose DepthFM: a versatile and fast state-of-the-art generative monocular depth estimation model. In addition to traditional depth estimation tasks, DepthFM also demonstrates state-of-the-art capabilities in downstream tasks such as depth inpainting. DepthFM is efficient and can synthesize depth maps within a few inference steps. Let’s read about this work together ~ 1. Paper information title: DepthFM: FastMonocularDepthEstimationwithFlowMatching Author: MingGui, JohannesS.Fischer, UlrichPrestel, PingchuanMa, Dmytr

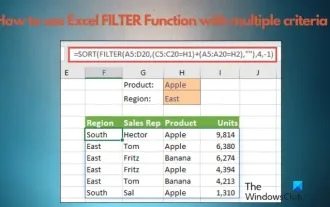

How to use Excel filter function with multiple conditions

Feb 26, 2024 am 10:19 AM

How to use Excel filter function with multiple conditions

Feb 26, 2024 am 10:19 AM

If you need to know how to use filtering with multiple criteria in Excel, the following tutorial will guide you through the steps to ensure you can filter and sort your data effectively. Excel's filtering function is very powerful and can help you extract the information you need from large amounts of data. This function can filter data according to the conditions you set and display only the parts that meet the conditions, making data management more efficient. By using the filter function, you can quickly find target data, saving time in finding and organizing data. This function can not only be applied to simple data lists, but can also be filtered based on multiple conditions to help you locate the information you need more accurately. Overall, Excel’s filtering function is a very practical

Google is ecstatic: JAX performance surpasses Pytorch and TensorFlow! It may become the fastest choice for GPU inference training

Apr 01, 2024 pm 07:46 PM

Google is ecstatic: JAX performance surpasses Pytorch and TensorFlow! It may become the fastest choice for GPU inference training

Apr 01, 2024 pm 07:46 PM

The performance of JAX, promoted by Google, has surpassed that of Pytorch and TensorFlow in recent benchmark tests, ranking first in 7 indicators. And the test was not done on the TPU with the best JAX performance. Although among developers, Pytorch is still more popular than Tensorflow. But in the future, perhaps more large models will be trained and run based on the JAX platform. Models Recently, the Keras team benchmarked three backends (TensorFlow, JAX, PyTorch) with the native PyTorch implementation and Keras2 with TensorFlow. First, they select a set of mainstream

Slow Cellular Data Internet Speeds on iPhone: Fixes

May 03, 2024 pm 09:01 PM

Slow Cellular Data Internet Speeds on iPhone: Fixes

May 03, 2024 pm 09:01 PM

Facing lag, slow mobile data connection on iPhone? Typically, the strength of cellular internet on your phone depends on several factors such as region, cellular network type, roaming type, etc. There are some things you can do to get a faster, more reliable cellular Internet connection. Fix 1 – Force Restart iPhone Sometimes, force restarting your device just resets a lot of things, including the cellular connection. Step 1 – Just press the volume up key once and release. Next, press the Volume Down key and release it again. Step 2 – The next part of the process is to hold the button on the right side. Let the iPhone finish restarting. Enable cellular data and check network speed. Check again Fix 2 – Change data mode While 5G offers better network speeds, it works better when the signal is weaker

The vitality of super intelligence awakens! But with the arrival of self-updating AI, mothers no longer have to worry about data bottlenecks

Apr 29, 2024 pm 06:55 PM

The vitality of super intelligence awakens! But with the arrival of self-updating AI, mothers no longer have to worry about data bottlenecks

Apr 29, 2024 pm 06:55 PM

I cry to death. The world is madly building big models. The data on the Internet is not enough. It is not enough at all. The training model looks like "The Hunger Games", and AI researchers around the world are worrying about how to feed these data voracious eaters. This problem is particularly prominent in multi-modal tasks. At a time when nothing could be done, a start-up team from the Department of Renmin University of China used its own new model to become the first in China to make "model-generated data feed itself" a reality. Moreover, it is a two-pronged approach on the understanding side and the generation side. Both sides can generate high-quality, multi-modal new data and provide data feedback to the model itself. What is a model? Awaker 1.0, a large multi-modal model that just appeared on the Zhongguancun Forum. Who is the team? Sophon engine. Founded by Gao Yizhao, a doctoral student at Renmin University’s Hillhouse School of Artificial Intelligence.

The first robot to autonomously complete human tasks appears, with five fingers that are flexible and fast, and large models support virtual space training

Mar 11, 2024 pm 12:10 PM

The first robot to autonomously complete human tasks appears, with five fingers that are flexible and fast, and large models support virtual space training

Mar 11, 2024 pm 12:10 PM

This week, FigureAI, a robotics company invested by OpenAI, Microsoft, Bezos, and Nvidia, announced that it has received nearly $700 million in financing and plans to develop a humanoid robot that can walk independently within the next year. And Tesla’s Optimus Prime has repeatedly received good news. No one doubts that this year will be the year when humanoid robots explode. SanctuaryAI, a Canadian-based robotics company, recently released a new humanoid robot, Phoenix. Officials claim that it can complete many tasks autonomously at the same speed as humans. Pheonix, the world's first robot that can autonomously complete tasks at human speeds, can gently grab, move and elegantly place each object to its left and right sides. It can autonomously identify objects

The U.S. Air Force showcases its first AI fighter jet with high profile! The minister personally conducted the test drive without interfering during the whole process, and 100,000 lines of code were tested for 21 times.

May 07, 2024 pm 05:00 PM

The U.S. Air Force showcases its first AI fighter jet with high profile! The minister personally conducted the test drive without interfering during the whole process, and 100,000 lines of code were tested for 21 times.

May 07, 2024 pm 05:00 PM

Recently, the military circle has been overwhelmed by the news: US military fighter jets can now complete fully automatic air combat using AI. Yes, just recently, the US military’s AI fighter jet was made public for the first time and the mystery was unveiled. The full name of this fighter is the Variable Stability Simulator Test Aircraft (VISTA). It was personally flown by the Secretary of the US Air Force to simulate a one-on-one air battle. On May 2, U.S. Air Force Secretary Frank Kendall took off in an X-62AVISTA at Edwards Air Force Base. Note that during the one-hour flight, all flight actions were completed autonomously by AI! Kendall said - "For the past few decades, we have been thinking about the unlimited potential of autonomous air-to-air combat, but it has always seemed out of reach." However now,