OpenAI's image detection tool revealed; CTO: it can recognize 99% of AI-generated images

OpenAI is about to launch a detector for AI-generated images.

The latest news is that the company is developing a detection tool.

According to Chief Technology Officer Mira Murati:

The tool has very high accuracy, with an accuracy rate of up to 99%.

It is currently in the internal testing process and will be released to the public soon.

This accuracy figure is rather exciting. After all, OpenAI’s previous attempt at AI text detection ended in failure, with an “accuracy rate of 26%”.

AI content detection is not simple

OpenAI already has a track record in AI content detection.

In January this year, it released an AI text detector meant to distinguish AI-written from human-written content and keep AI text from being abused.

However, the tool was quietly retired in July: without any announcement, its page simply began returning a 404.

The reason was that its accuracy was too low, "almost like guessing."

According to data published by OpenAI itself:

It correctly identified only 26% of AI-generated text, while mislabeling 9% of human-written text as AI-generated.
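To see why figures like these feel "almost like guessing", the two published rates can be turned into the share of flagged texts that really are AI-written. The sketch below is only a back-of-the-envelope calculation with Bayes' rule; the 26% and 9% come from the figures above, while the proportion of AI text in the input (`ai_share`) is an assumption chosen purely for illustration.

```python
def precision(tpr: float, fpr: float, ai_share: float) -> float:
    """Share of flagged texts that are truly AI-written (Bayes' rule)."""
    flagged_ai = tpr * ai_share            # AI texts correctly flagged
    flagged_human = fpr * (1 - ai_share)   # human texts wrongly flagged
    return flagged_ai / (flagged_ai + flagged_human)


TPR = 0.26  # AI-generated text correctly identified (published figure)
FPR = 0.09  # human-written text wrongly flagged (published figure)

# ai_share is a hypothetical mix of AI text in the input, not a published number.
for ai_share in (0.1, 0.5):
    p = precision(TPR, FPR, ai_share)
    print(f"AI share {ai_share:.0%}: {p:.1%} of flagged texts are really AI-written")

print(f"AI text that slips through undetected: {1 - TPR:.0%}")
```

Whatever mix is assumed, 74% of AI-written text passes unflagged, and when AI text is rare most of the flags land on human authors.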

After this abrupt ending, OpenAI said it would take user feedback on board, work on improvements, and research more effective text provenance techniques.

At the same time, it announced that it would also develop tools to determine whether images, audio and video are AI-generated.

Now, with the arrival of DALL-E 3 and the continuous iteration of similar tools such as Midjourney, AI image generation is becoming more and more capable.

The biggest concern is that it will be used to fabricate fake news images around the world.

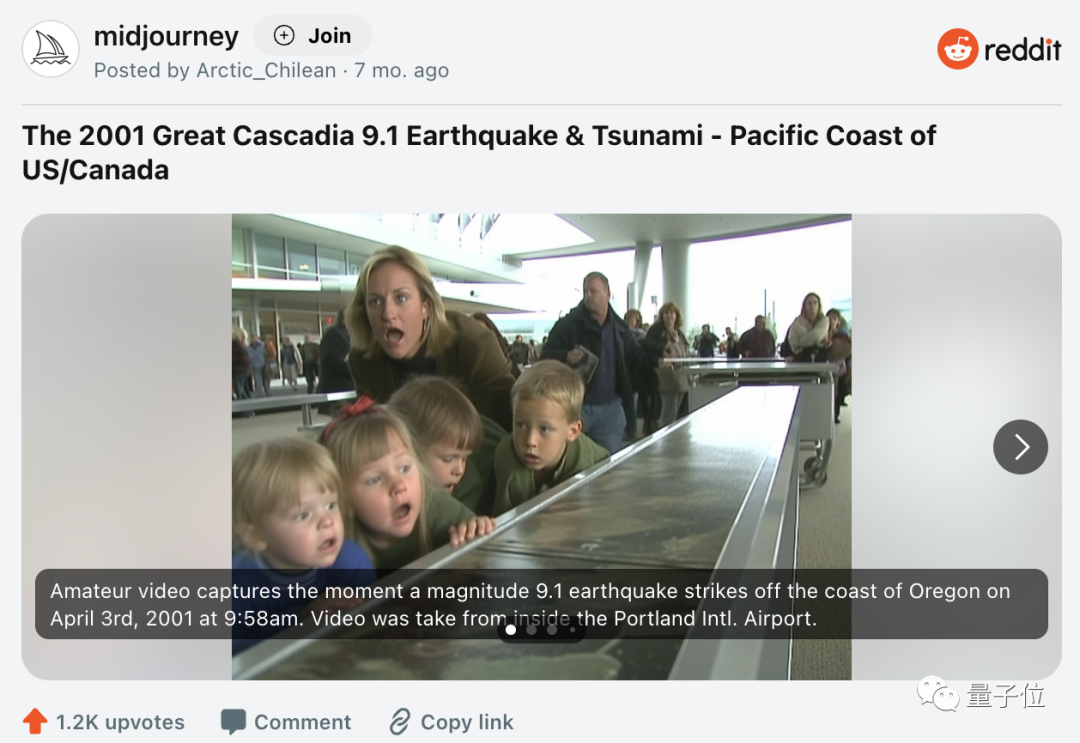

For example, an AI-forged "scene" of the "2001 Cascadia 9.1 earthquake and tsunami" was posted on Reddit and received more than 1.2k upvotes.

Compared with AI text detection tools, AI image detection tools are clearly the more urgent need (probably because "no one cares whether a speech was written by the speaker or by a secretary", whereas some people find it hard not to believe content that comes with a picture as "proof").

However, when OpenAI’s AI text detection tool went offline, some netizens pointed out:

It is contradictory to develop generation and detection tools at the same time.

If one side is doing well, it means the other side is not doing well, and there may also be a conflict of interest.

A more straightforward idea is to leave detection to a third party.

But third-party detectors have not performed well on AI text so far.

On the technical side, another feasible approach is to embed a hidden watermark when the AI generates the content.

That’s what Google does.

Recently (at the end of August this year), Google launched an AI image detection technology ahead of OpenAI:

SynthID.

It currently works with Google's text-to-image model Imagen, so that every image the model generates carries an embedded, invisible mark identifying it as AI-generated.

Even if the image goes through a series of modifications such as cropping, adding filters, changing colors or even lossy compression, the mark can still be recognized.

In internal testing, SynthID accurately identified a large number of edited AI images, but the specific accuracy rate was not disclosed.
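How SynthID works internally has not been disclosed here, so the following is not Google's method. It is only a toy sketch of the general idea of a hidden watermark, using a classic correlation-based scheme: a secret key generates a pseudo-random +1/-1 pattern, the pattern is added faintly to the pixel values, and detection checks whether the pixels still correlate with that pattern. All names (`key_pattern`, `embed`, `score`, `is_watermarked`), the strength, the threshold and the flat list of grayscale pixels are assumptions made for illustration.

```python
import random


def key_pattern(key: int, n: int) -> list[int]:
    """Pseudo-random +1/-1 pattern that only the key holder can regenerate."""
    rng = random.Random(key)
    return [rng.choice((-1, 1)) for _ in range(n)]


def embed(pixels: list[int], key: int, strength: int = 4) -> list[int]:
    """Nudge each pixel up or down by `strength`, following the key pattern."""
    pat = key_pattern(key, len(pixels))
    return [min(255, max(0, v + strength * s)) for v, s in zip(pixels, pat)]


def score(pixels: list[int], key: int) -> float:
    """Average correlation between mean-centred pixels and the key pattern."""
    pat = key_pattern(key, len(pixels))
    mean = sum(pixels) / len(pixels)
    return sum((v - mean) * s for v, s in zip(pixels, pat)) / len(pixels)


def is_watermarked(pixels: list[int], key: int, threshold: float = 2.0) -> bool:
    """Flag the image if the correlation clearly exceeds chance level."""
    return score(pixels, key) > threshold


# Stand-in "image": 40,000 random grayscale values (hypothetical data).
img_rng = random.Random(1)
image = [img_rng.randint(60, 190) for _ in range(40_000)]
marked = embed(image, key=42)

# Simulated edit: brighten the whole image and add a little noise.
edited = [min(255, v + 10 + img_rng.randint(-3, 3)) for v in marked]

print(is_watermarked(image, 42), is_watermarked(marked, 42), is_watermarked(edited, 42))
```

This toy version only tolerates global brightness shifts and mild noise. Cropping or resizing would break the alignment between pixels and pattern, which is precisely the kind of edit a production system has to survive.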

It is not yet known what technology OpenAI’s upcoming tool will use, or whether it will be the most accurate one on the market.

Altman responds to the "chip-making plan"

The above news comes from remarks made by OpenAI's CTO and Altman at the Tech Live conference held by The Wall Street Journal this week.

At the conference, the two also revealed more news about OpenAI.

For example, the next generation of its large model may be launched soon.

The name was not disclosed, but OpenAI did file a trademark application for GPT-5 in July this year.

Some people were concerned about the accuracy of "GPT-5" and asked whether it would stop producing errors or false content.

On this point the CTO was cautious and said only "maybe".

She explained:

We have made great progress on the hallucination problem of GPT-4, but it is not yet where we need to be.

Altman talked about the "chip-making plan".

His exact words offer no hard confirmation, but they leave plenty of room for imagination:

On the default path we would certainly not do this. But I would never rule it out.

Compared with the "chip-making plan", Altman's reply to the rumours about building a phone was quite straightforward.

In September this year, Apple’s former chief design officer Jony Ive (who worked at Apple for 27 years) was reported to be in contact with OpenAI. Sources said that Altman wanted to develop a hardware device offering a more natural and intuitive way to interact with AI, something that could be called the "iPhone of AI".

Now he has told the audience:

I am not sure yet what I want to do, I just have some vague ideas.

And:

No AI device will overshadow the popularity of the iPhone, and I have no interest in competing with any smartphone.

Reference link:

[1]https://finance.yahoo.com/news/openai-claims-tool-detect-ai-051511179.html?guccounter=1.

[2]https://gizmodo.com/openais-sam-altman-says-he-has-no-interest-in-competing-1850937333.
