Major release: 'brain-like science' may be the optimal solution to the computing power consumption and context length problems of large language models!


At a major event where science fiction met science, science fiction suddenly crossed into reality.

Recently, at the Shenzhen Advanced Institute, the Shenzhen University of Technology Education Foundation and the Science and Fantasy Growth Fund held an event centered on science fiction and the emergence of AI. There, a Shenzhen team called Luxi Technology publicly released its large language model for the first time: NLM (Neuromorphic Generative Pre-trained Language Model), a large language model that is not based on the Transformer.

Unlike many large models at home and abroad, this team takes brain-like science and brain-inspired intelligence as its core, incorporates characteristics of recurrent neural networks, and develops large language models inspired by the brain's efficient computation.

What’s more striking is that, according to the team, the computing power consumption of this model is 1/22 that of the Transformer architecture at the same parameter scale. On context length, NLM also offers an answer: the context window can grow without limit, so neither the 2k limit of open-source LLMs nor other limits such as 32k or 100k apply.

What is brain-inspired computing?

Brain-inspired computing is a computing model that imitates the structure and function of the human brain. It models the brain's neural connections in its architecture, design principles, and information processing methods. Rather than merely imitating the surface characteristics of biological neural networks, it aims to reproduce their basic construction: processing and storing sequence information through large-scale interconnections of neurons and synapses.

Unlike traditional rule-based algorithms, brain-inspired computing relies on a large number of interconnected neural networks to learn and extract information autonomously, just like the human brain. This approach allows computing systems to learn from experience, adapt to new situations, understand complex patterns, and make advanced decisions and predictions.

Due to their high adaptability and parallel processing capabilities, brain-inspired computing systems have shown extremely high efficiency and accuracy in processing big data, image and speech recognition, natural language processing, and other fields. These systems can quickly process complex and changing information, and they also consume far less energy and computing resources than traditional computing architectures because they do not require extensive pre-programming and data input.

In general, brain-inspired computing opens up a new computing paradigm. It transcends traditional artificial neural networks and moves towards advanced intelligent systems that can self-learn, self-organize, and even have a certain degree of self-awareness.

Advantages of large brain-inspired models

At the event, Dr. Zhou Peng of the Luxi team explained in detail how the large brain-like model is implemented.

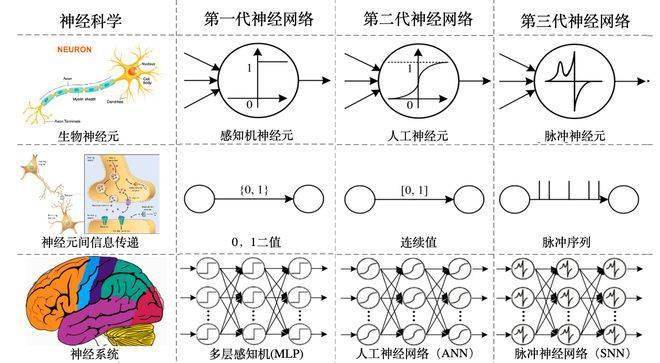

The brain-like neural network is a new generation of neural network model that overcomes the shortcomings of the previous two generations.

- The first generation of neural networks (also known as the MLP, or multi-layer perceptron) transmits signals as 0 and 1. It requires little computing power but cannot handle overly complex tasks.

- The second generation, also known as the artificial neural network, turns the transmitted signal into a continuous value in the interval [0, 1]. This gives it sufficient complexity, but its computing power overhead has soared.

- The third generation, also known as the brain-like neural network, turns signals into pulse (spike) sequences. It has sufficient complexity while keeping the computing power cost controllable. The pulse sequence is produced by mimicking the dynamics of neural structures. Because a sequence implies time, the third generation can also effectively integrate and output the temporal information carried by a signal.

- Compared with the previous two generations, it processes sequence information with a time dimension more effectively and understands the real world more effectively.
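To make the idea of a pulse sequence concrete, here is a minimal sketch of a leaky integrate-and-fire neuron, a textbook spiking-neuron model. It is an illustration only: the article does not say which neuron model NLM uses, and the function name and the `leak` and `threshold` values are assumptions chosen for the example.

```python
def lif_spike_train(inputs, leak=0.9, threshold=1.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    Returns a list of 0/1 spikes, one per input value.
    The parameters are illustrative assumptions, not values from NLM.
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        # Leak part of the stored potential, then integrate the new input.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # reset the membrane potential after firing
        else:
            spikes.append(0)
    return spikes


# A constant weak input and a constant strong input over 10 time steps.
weak = lif_spike_train([0.3] * 10)
strong = lif_spike_train([0.6] * 10)
print(weak)    # [0, 0, 0, 1, 0, 0, 0, 1, 0, 0]
print(strong)  # [0, 1, 0, 1, 0, 1, 0, 1, 0, 1]
```

The stronger input produces more spikes in the same time window, so the information is carried by when and how often the neuron fires rather than by a single continuous value.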

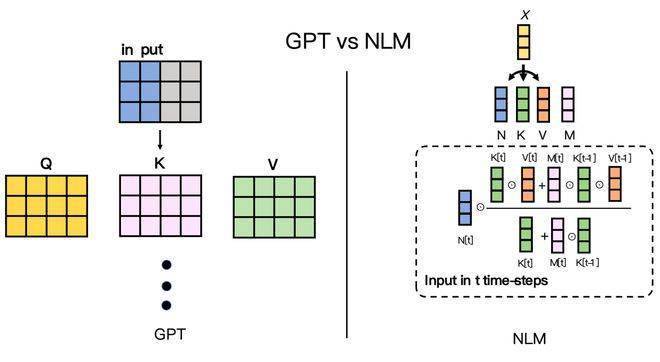

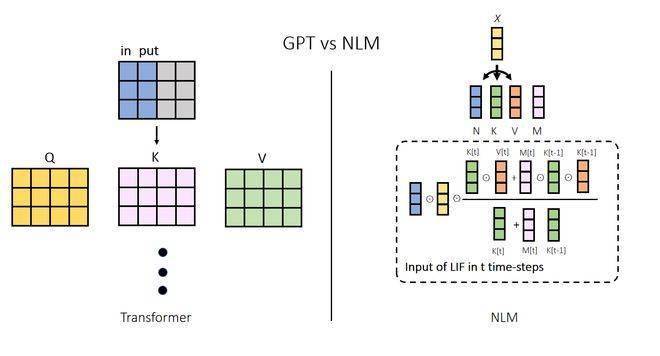

The inference principle of large models based on brain-like algorithms is also completely different from that of the Transformer. Whenever a Transformer model performs inference, it considers all of the contextual information to generate the next token. This is like having to recall everything experienced that day before saying each word in a conversation. It is also a main reason why the computing costs of large models keep rising as their parameter counts keep growing.

By contrast, the brain-like model needs only its internal state and a single token at inference time. This is like blurting out the next word while speaking: we do not deliberately recall everything that came before, yet what we say is still intrinsically tied to previous experience. According to the team, this mechanism is the key to NLM's significantly lower computing power overhead; it brings the model closer to the way the human brain operates and thus significantly improves its performance.
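The contrast between the two inference styles can be sketched with a toy example. The code below is not NLM's algorithm, which the article does not describe; `attention_step` is a plain single-head softmax attention step over a cache of earlier tokens, and `recurrent_step` is a simple exponential moving average standing in for any update that needs only a state and one token. Both function names, the `decay` value, and the analytic multiplication counts are assumptions made for illustration.

```python
import math


def attention_step(query, cached_keys, cached_values):
    """One decoding step of single-head attention over every cached token.

    Returns the output vector and an analytic count of multiplications.
    """
    dim = len(query)
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
        for key in cached_keys
    ]
    peak = max(scores)  # subtract the max for a numerically stable softmax
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    output = [
        sum(w * value[i] for w, value in zip(weights, cached_values)) / total
        for i in range(dim)
    ]
    # dim multiplications per cached token for the scores,
    # and dim more per cached token for the weighted sum.
    multiplies = 2 * len(cached_keys) * dim
    return output, multiplies


def recurrent_step(state, token_vec, decay=0.5):
    """One decoding step that uses only the previous state and the new token."""
    new_state = [decay * s + (1.0 - decay) * x for s, x in zip(state, token_vec)]
    multiplies = 2 * len(state)  # two multiplications per state element
    return new_state, multiplies


dim = 4
token = [1.0, 0.0, 0.5, -0.5]
attention_cost = {}
recurrent_cost = {}
for context_len in (8, 64, 512):
    cache = [token] * context_len  # pretend this many tokens came before
    attended, attention_cost[context_len] = attention_step(token, cache, cache)
    state, recurrent_cost[context_len] = recurrent_step([0.0] * dim, token)

print(attention_cost)  # {8: 64, 64: 512, 512: 4096}
print(recurrent_cost)  # {8: 8, 64: 8, 512: 8}
```

In this toy setting the attention step does work proportional to the number of cached tokens, while the recurrent step does the same amount of work no matter how long the preceding context is.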

Because of this brain-inspired design, limited context length is no longer a troubling problem. The NLM large model, which uses the third-generation neural network, has no context length bottleneck because the computing power required to process the next token does not depend on the context length. By comparison, the context length of publicly available Transformer-based large language models is only 100k. Increasing it is not just a matter of computing power overhead but a question of whether it can be done at all.
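One reason longer contexts are hard for Transformer models is memory: the cached keys and values grow with every token. The back-of-the-envelope sketch below compares that growth with a fixed-size recurrent state. All dimensions are hypothetical (32 layers, width 4096, 2-byte values) and do not describe NLM or any specific model; the formula assumes standard multi-head attention with an unquantized cache, and the recurrent state size is likewise an assumption.

```python
def kv_cache_bytes(context_len, layers, d_model, bytes_per_value=2):
    """Memory for cached keys and values in a standard Transformer decoder."""
    # Factor 2: one key vector and one value vector per layer per token.
    return 2 * layers * context_len * d_model * bytes_per_value


def recurrent_state_bytes(layers, d_state, bytes_per_value=2):
    """Memory for a fixed-size recurrent state, independent of context length."""
    return layers * d_state * bytes_per_value


GIB = 2 ** 30
LAYERS, WIDTH = 32, 4096  # hypothetical model dimensions

for context_len in (2_048, 32_768, 100_000):
    cache_gib = kv_cache_bytes(context_len, LAYERS, WIDTH) / GIB
    print(f"{context_len:>7} tokens: KV cache = {cache_gib:.1f} GiB")

state_mib = recurrent_state_bytes(LAYERS, WIDTH) / 2 ** 20
print(f"recurrent state at any length = {state_mib:.2f} MiB")
```

Under these assumptions the cache needs 1 GiB at 2,048 tokens and 16 GiB at 32,768 tokens, while the recurrent state stays at a quarter of a mebibyte however long the input grows.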

The unlimited context length of NLM opens the door to new applications of large language models: studying complex financial reports, reading novels hundreds of thousands of words long, or having a large model "understand you better" through unlimited-length context can all become a reality.

AI in the eyes of the Luxi team

At this event, Dr. Zhou Peng, the founder and CTO of Luxi Technology, explained the team’s current mission - to empower all things with wisdom.

In the era of artificial intelligence, AI needs to become as ubiquitous as the Internet and electricity already are. Although current artificial intelligence is impressive in its capabilities, its operating costs place a huge burden on businesses and consumers. With current technology, the vast majority of mobile phones, watches, tablets, and laptops cannot run generative large language models in a complete, systematic, efficient, and high-quality manner. The threshold for developing large model applications has also intimidated many outstanding developers who are interested in the field.

At the event, Luxi Technology showed the audience how to use the "NLM-GPT" large model in offline mode on an ordinary Android phone to complete various common tasks in work and life, pushing the event to a climax.

- The phones used in the demonstration carry chip architectures common on the market, and their performance is similar to that of typical Android models in the consumer market. With the phone in airplane mode and disconnected from the Internet, Luxi Technology demonstrated the "NLM-GPT" large model talking with users in real time on the phone, answering their questions, and completing instructions such as poetry creation, recipe writing, knowledge retrieval, and file interpretation. These tasks are highly complex, place high demands on phone hardware, and traditionally require a network connection to complete.

- During the entire demonstration, the phone's energy consumption was stable, with minimal impact on normal standby time and no impact on the phone's overall performance.

- The demonstration showed that the "NLM-GPT" large model has the potential to run in all scenarios, with high efficiency, low power consumption, and zero data traffic, on small consumer devices such as smartphones and tablets. This means that, empowered by the "NLM-GPT" large model, mobile phones, watches, tablets, laptops, and other devices can understand people's true intentions more accurately and efficiently. They can then complete the instructions and tasks people give them with higher quality in application scenarios such as office work, study, social networking, and entertainment, greatly improving the efficiency and quality of social production and human life.

Luxi Technology believes that the "generative artificial intelligence large language model" driven by "brain-like technology" will comprehensively expand human thinking, perception, and action in fields such as learning, work, and life, and will enhance humanity's overall capability and wisdom. Thanks to the empowerment of brain-like technology, artificial intelligence will no longer be a new agent that replaces humans, but will become an efficient intelligent tool for humans to change the world and create a better future.

Just as the ancients trained hounds and falcons, the profession of hunter will not disappear because of the emergence of hounds and falcons. On the contrary, hunters have benefited from this and have mastered the power possessed by hounds and falcons but not possessed by humans themselves. They can obtain prey more efficiently, providing power and nutrients for the growth of human groups and the development of human civilization.

In the future, applying large language models in daily work and life will no longer be a complex, multi-step systems engineering project. It will be as simple, natural, and smooth as "opening the payment code when checking out", "pressing the shutter when taking a photo", or the "one-tap triple action" when watching short videos. The Luxi team will continue to work in the field of brain-like computing, conduct in-depth research on the brain, nature's most precious gift to mankind, and bring brain-like intelligence into daily life.

Perhaps, in the near future, humans will have more new artificial intelligence partners. There is no blood flowing in their bodies, and their intelligence will not replace humans. With the support of brain-inspired technology, they will work with us to explore the mysteries of the universe, expand the boundaries of society, and create a better future.

Source: Life Daily


