With nearly half the parameters, performance close to Google Minerva: another large mathematical model goes open source

Language models trained on diverse mixtures of text exhibit broadly general language understanding and generation capabilities, and can serve as base models that are adapted to a wide range of applications. Applications such as open-ended dialogue or instruction following require balanced performance across the entire distribution of natural text, and therefore favor general-purpose models.

However, to maximize performance within a particular domain (such as medicine, finance, or science), a domain-specific language model may offer superior capabilities for a given computational cost, or provide a given level of capability at a lower computational cost.

Researchers from Princeton University, EleutherAI, and other institutions have trained a domain-specific language model for solving mathematical problems. Their motivation is threefold: first, solving mathematical problems requires pattern matching against a large body of specialized prior knowledge, which makes it an ideal setting for domain adaptation; second, mathematical reasoning is itself a core AI task; finally, language models capable of strong mathematical reasoning are upstream of many research topics, such as reward modeling, reinforcement learning for reasoning, and algorithmic reasoning.

They therefore propose adapting language models to mathematics through continued pretraining on Proof-Pile-2, a mixture of mathematics-related text and code. Applying this approach to Code Llama yields LLEMMA: 7B and 34B base language models with greatly improved mathematical capabilities.

Paper address: https://arxiv.org/pdf/2310.10631.pdf

Project address: https://github.com/EleutherAI/math-lm

LLEMMA 7B's 4-shot MATH performance far exceeds that of Google's Minerva 8B, and LLEMMA 34B comes close to Minerva 62B with nearly half the parameters.

Specifically, the contributions of this article are as follows:

- 1. Trained and released the LLEMMA models: 7B and 34B language models specialized for mathematics. The LLEMMA models are the state of the art among publicly released base models on MATH.

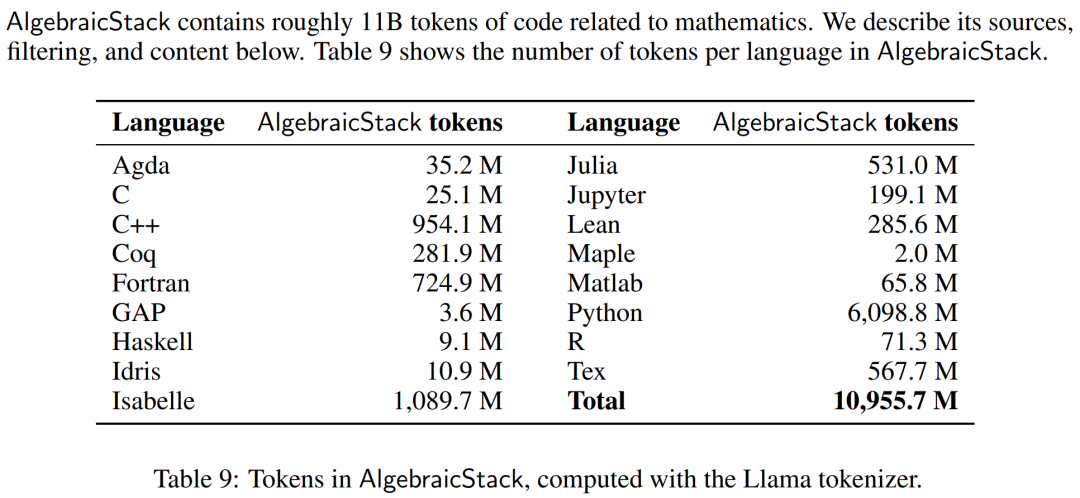

- 2. Released AlgebraicStack, a dataset containing 11B code tokens specifically related to mathematics.

- 3. Demonstrated that LLEMMA can solve mathematical problems using computational tools, namely a Python interpreter and formal theorem provers.

- 4. Unlike previous mathematical language models such as Minerva, the LLEMMA models are openly released. The researchers made the training data and code publicly available, making LLEMMA a platform for future research in mathematical reasoning.

Method Overview

LLEMMA comprises 7B and 34B language models specialized for mathematics. They are obtained by continuing to pretrain Code Llama on Proof-Pile-2.
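Continued pretraining does not change Code Llama's training objective, only its data: the model keeps training with the standard autoregressive (next-token) language-modeling loss, now on Proof-Pile-2 text. Written out in our own illustrative notation, for a token sequence $x_1, \dots, x_T$ and parameters $\theta$ initialized from Code Llama rather than at random:

$$
\mathcal{L}(\theta) = -\frac{1}{T} \sum_{t=1}^{T} \log p_\theta\left(x_t \mid x_{<t}\right)
$$

Averaging over tokens is a common convention rather than something the article specifies; with this form, $\exp(\mathcal{L})$ is the per-token perplexity.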

The researchers created Proof-Pile-2, a 55B-token mixture of scientific papers, web data containing mathematics, and mathematical code. The knowledge cutoff of Proof-Pile-2 is April 2023, except for the Lean proofsteps subset.

The researchers used OpenWebMath, a 15B-token dataset of high-quality web pages filtered for mathematical content. OpenWebMath filters CommonCrawl web pages using math-related keywords and a classifier-based math score, preserves mathematical formatting (e.g., LaTeX, AsciiMath), and applies additional quality filters (e.g., perplexity, domain, length) as well as near-deduplication. In addition, the researchers used the ArXiv subset of RedPajama, an open reproduction of the LLaMA training dataset; the ArXiv subset contains 29B tokens. The training mixture also includes a small amount of general-domain data, which acts as a regularizer. Since the pretraining dataset for Llama 2 is not publicly available, the researchers used the Pile as a substitute general-domain dataset.

Models and Training

Each model is initialized from Code Llama. Code Llama models are decoder-only transformers, themselves initialized from Llama 2 and further trained on 500B tokens of code. The researchers continued training the Code Llama models on Proof-Pile-2 using the standard autoregressive language modeling objective: LLEMMA 7B was trained on 200B tokens and LLEMMA 34B on 50B tokens.

Both models were trained with the GPT-NeoX library in bfloat16 mixed precision on 256 A100 40GB GPUs. The researchers used tensor parallelism with a world size of 2 for LLEMMA 7B and a world size of 8 for LLEMMA 34B, together with ZeRO Stage 1 to shard optimizer states across data-parallel replicas. Flash Attention 2 was also used to increase throughput and further reduce memory requirements.

LLEMMA 7B was trained for 42,000 steps with a global batch size of 4 million tokens and a context length of 4096 tokens, equivalent to 23,000 A100-hours. The learning rate is warmed up to 1·10^−4 over 500 steps and then follows a cosine decay to 1/30 of the maximum learning rate over 48,000 steps. LLEMMA 34B was trained for 12,000 steps.
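The 7B learning-rate schedule described above can be sketched as a function of the optimizer step. This is a minimal illustration under assumptions the article does not state: the warmup is taken to be linear from zero, and the 48,000-step horizon is counted from step 0, so the cosine phase runs from step 500 to step 48,000. The name `warmup_cosine_lr` is hypothetical and is not a GPT-NeoX API.

```python
import math


def warmup_cosine_lr(step: int,
                     peak_lr: float = 1e-4,
                     warmup_steps: int = 500,
                     total_steps: int = 48_000,
                     final_ratio: float = 1 / 30) -> float:
    """Learning rate at `step` for a warmup + cosine-decay schedule.

    Defaults mirror the LLEMMA 7B numbers quoted in the article; the linear
    warmup shape and the step counting are assumptions of this sketch.
    """
    min_lr = peak_lr * final_ratio
    if step < warmup_steps:
        # Assumed linear warmup from 0 up to peak_lr.
        return peak_lr * step / warmup_steps
    # Fraction of the cosine phase completed, clamped so the LR stays at the floor afterwards.
    progress = min(1.0, (step - warmup_steps) / (total_steps - warmup_steps))
    # Cosine factor falls from 1 (start of decay) to 0 (end of decay).
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))
    return min_lr + (peak_lr - min_lr) * cosine


for s in (0, 250, 500, 42_000, 48_000):
    print(s, f"{warmup_cosine_lr(s):.3e}")
```

Evaluating the schedule at step 42,000, where LLEMMA 7B's training ended, gives a value still above the 1/30 floor, because under these assumptions the cosine phase has not yet reached its 48,000-step end point.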
For LLEMMA 34B, the global batch size is likewise 4 million tokens and the context length 4096, equivalent to 47,000 A100-hours. The learning rate is warmed up to 5·10^−5 over 500 steps and then decayed to 1/30 of the peak learning rate.

In the experimental section, the researchers aim to evaluate whether LLEMMA can serve as a base model for mathematical text. They compare LLEMMA models using few-shot evaluation, focusing primarily on state-of-the-art models that have not been fine-tuned on supervised examples of mathematical tasks. They first evaluate LLEMMA's ability to solve mathematical problems using chain-of-thought reasoning and majority voting, on benchmarks including MATH and GSM8k. They then explore few-shot tool use and theorem proving. Finally, they study the effects of memorization and the data mixture.

Solving Math Problems with Chain-of-Thought (CoT)

These tasks involve problems stated in LaTeX or natural language, for which the model generates a self-contained text answer without using external tools. The benchmarks used include MATH, GSM8k, OCWCourses, SAT, and MMLU-STEM. The results are shown in Table 1. Continued pretraining on Proof-Pile-2 improves LLEMMA's few-shot performance on all five mathematical benchmarks: LLEMMA 34B outperforms Code Llama by 20 percentage points on GSM8k and by 13 percentage points on MATH, while LLEMMA 7B outperforms the proprietary Minerva model. The researchers therefore conclude that continued pretraining on Proof-Pile-2 improves a pretrained model's ability to solve mathematical problems.

Solving Math Problems with Tools

These tasks involve solving problems with the help of computational tools. The benchmarks used include MATH+Python and GSM8k+Python. As shown in Table 3, LLEMMA outperforms Code Llama on both tasks.
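In the tool-use setting, the model writes a program and a Python interpreter runs it to produce the answer. The execution step can be sketched as below. This is a hypothetical helper, not the paper's evaluation harness: it assumes the answer is the last non-empty line the program prints, and a real harness would need stronger sandboxing than a subprocess with a timeout.

```python
import subprocess
import sys
from typing import Optional


def run_generated_program(program: str, timeout_s: float = 5.0) -> Optional[str]:
    """Run a model-written Python program and return its printed answer.

    Returns the last non-empty line of stdout, or None if the program
    raises, times out, or prints nothing.
    """
    try:
        result = subprocess.run(
            [sys.executable, "-c", program],  # fresh interpreter for each program
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return None
    if result.returncode != 0:
        return None
    lines = [line.strip() for line in result.stdout.splitlines() if line.strip()]
    return lines[-1] if lines else None


# Hypothetical model output for "What is the sum of the first 100 positive integers?"
generated = "print(sum(range(1, 101)))"
print(run_generated_program(generated))  # 5050
```

Returning None on any failure lets a scoring loop simply count such cases as wrong answers.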
LLEMMA's performance on MATH and GSM8k with tools is also higher than its performance without tools.

Formal Mathematics

The AlgebraicStack dataset in Proof-Pile-2 contains 1.5 billion tokens of formal mathematics data, including formal proofs extracted from Lean and Isabelle. While a full study of formal mathematics is beyond the scope of the paper, the researchers evaluate LLEMMA's few-shot performance on two tasks:

- Informal-to-formal proving: given a formal statement, an informal LaTeX statement, and an informal LaTeX proof, generate a formal proof.
- Formal-to-formal proving: prove a formal statement by generating a sequence of proof steps (tactics).

The results are shown in Table 4. Continued pretraining on Proof-Pile-2 improves LLEMMA's few-shot performance on both formal theorem-proving tasks.

Impact of Data Mixing

When training a language model, a common practice is to upsample high-quality subsets of the data according to mixture weights. The researchers chose the mixture weights by running short training runs with several hand-picked candidate mixtures, then selecting the weights that minimized perplexity on a set of high-quality held-out text (here, the MATH training set). Table 5 shows the MATH training-set perplexity of models trained with different mixtures of arXiv, web, and code data.

For more technical details and evaluation results, please refer to the original paper.
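The data-mixture selection rule described above (short runs with a few candidate mixtures, keeping the one with the lowest held-out perplexity) can be sketched as follows. The function names and the toy log-probabilities are hypothetical placeholders, not values from the paper or Table 5; in practice the log-probabilities would come from evaluating each short-trained model on the held-out MATH training set.

```python
import math
from typing import Dict, List, Sequence


def perplexity(token_logprobs: Sequence[float]) -> float:
    """Perplexity = exp(mean negative log-likelihood per token, natural log)."""
    if not token_logprobs:
        raise ValueError("need at least one token")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def pick_mixture(heldout_logprobs: Dict[str, List[float]]) -> str:
    """Return the candidate mixture whose model has the lowest held-out perplexity.

    Keys are (hypothetical) mixture labels; values are the log-probabilities
    a short-trained model assigned to each held-out token.
    """
    return min(heldout_logprobs, key=lambda name: perplexity(heldout_logprobs[name]))


# Made-up log-probabilities for two hypothetical candidate mixtures.
toy_scores = {
    "mix_a": [-1.2, -0.8, -1.0],  # mean NLL 1.0
    "mix_b": [-1.5, -1.1, -1.3],  # mean NLL 1.3
}
print(pick_mixture(toy_scores))  # mix_a
```

Because perplexity is a monotone function of mean negative log-likelihood, ranking by either quantity picks the same mixture.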
