A large model with a context window of only 4k tokens can still read long passages of text!

A recent result from a Chinese doctoral student at Princeton has successfully "broken through" the window-length limit of large models.

Not only can it answer a variety of questions, but the whole process is carried out purely through prompting, without any additional training.

The research team created a tree memory strategy called MemWalker that can break through the window length limit of the model itself.

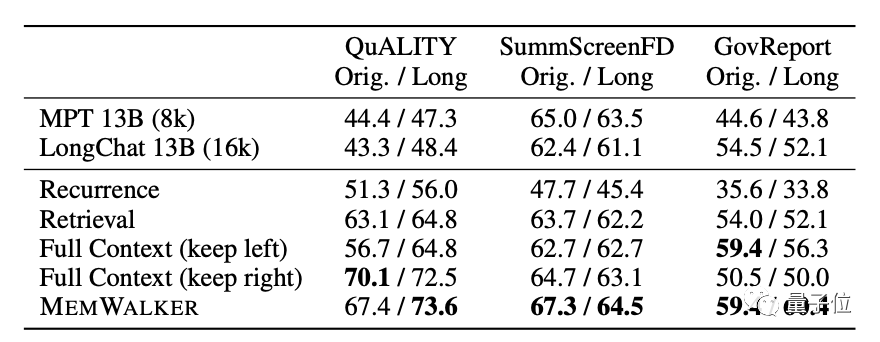

In testing, the longest text the model read contained 12,000 tokens, and the results improved significantly over LongChat.

Compared with the similar TreeIndex, MemWalker can reason about and answer arbitrary questions rather than only producing summaries.

MemWalker was developed around the idea of "divide and conquer". One commenter remarked:

"Every time we make the thinking process of large models more like that of humans, their performance gets better."

So, what exactly is the tree memory strategy, and how does it read long text with a limited window length?

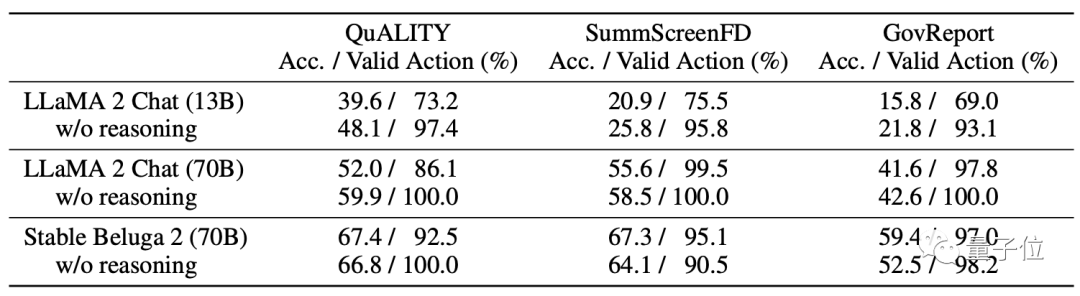

For its base model, MemWalker uses Stable Beluga 2, which is obtained from Llama 2-70B through instruction tuning.

Before settling on this model, the developers compared its performance with that of the original Llama 2.

As the name MemWalker suggests, it works like walking through a stream of memory.

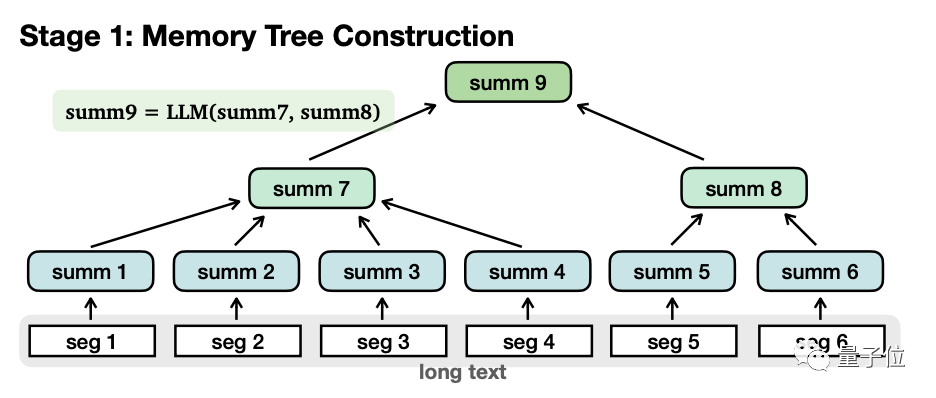

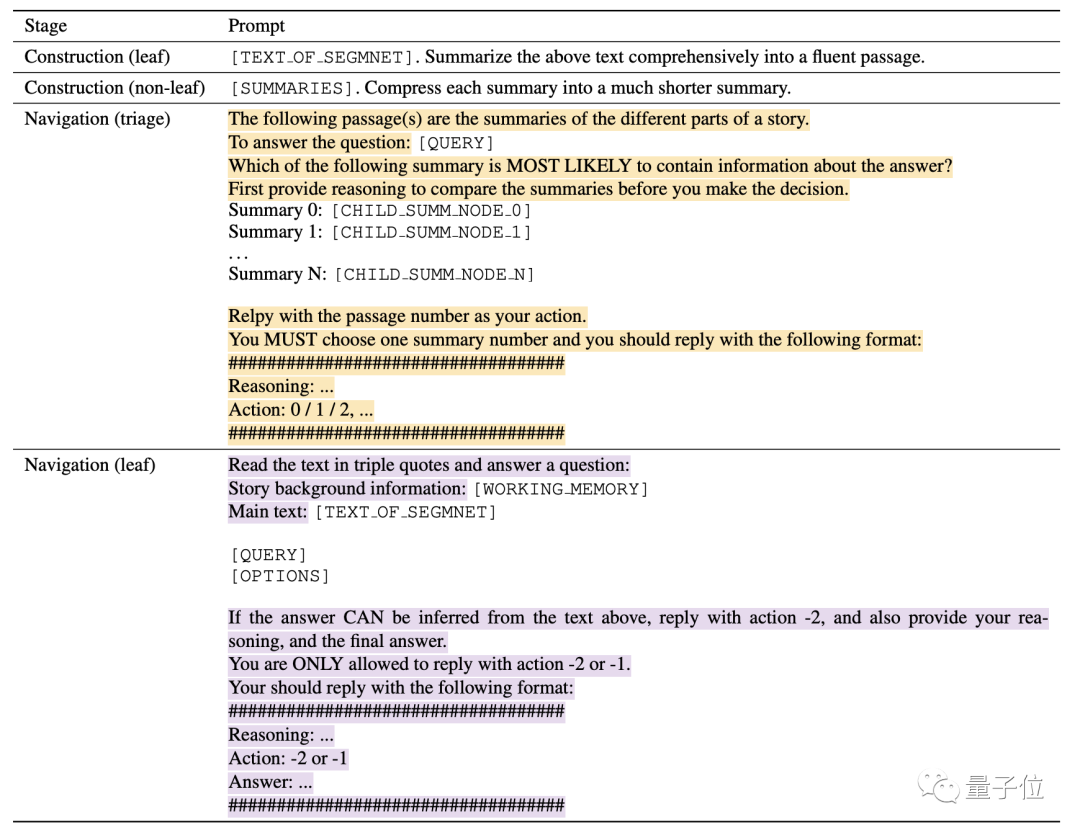

Specifically, the process is divided into two stages: memory tree construction and navigation retrieval.

When building the memory tree, the long text is divided into multiple small segments (seg1-6), and the large model summarizes each segment separately to obtain the "leaf nodes" (summ1-6).

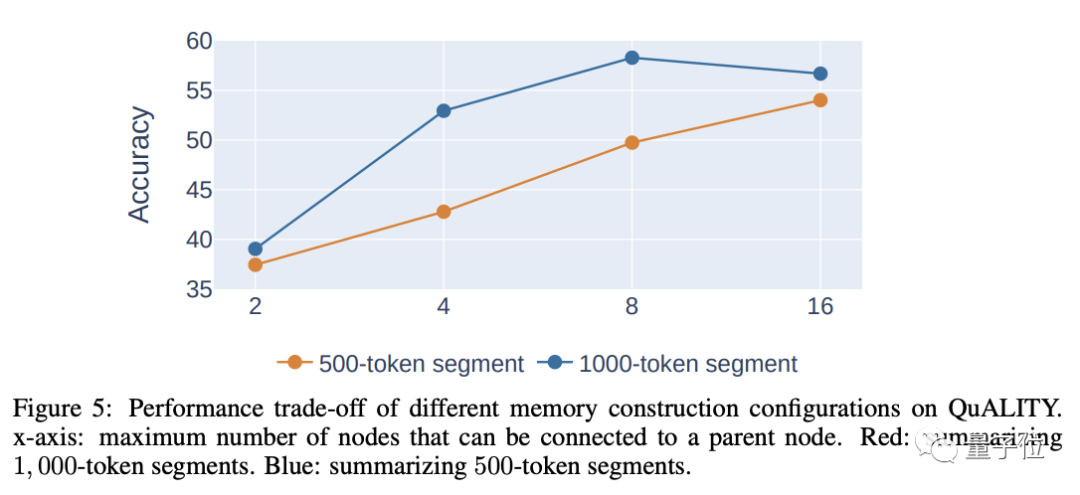

When segmenting, the longer each segment is, the fewer levels the tree has, which helps subsequent retrieval. However, segments that are too long reduce accuracy, so the segment length has to balance the two.

The authors consider 500-2000 tokens a reasonable length for each segment, and the experiments use 1000 tokens.
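The trade-off between segment length and tree depth can be illustrated with a little arithmetic. The sketch below is only an illustration: the function name `tree_shape` and the fan-out of 4 summaries per parent node are assumptions made for this example, not values taken from the paper.

```python
import math
from typing import List


def tree_shape(total_tokens: int, segment_tokens: int, fan_out: int) -> List[int]:
    """Return the number of nodes at each level, from the leaves up to the root.

    fan_out (how many child summaries are grouped under one parent)
    is a hypothetical parameter chosen for illustration.
    """
    if total_tokens <= 0 or segment_tokens <= 0 or fan_out < 2:
        raise ValueError("need positive sizes and a fan-out of at least 2")
    levels = [math.ceil(total_tokens / segment_tokens)]  # one leaf per segment
    while levels[-1] > 1:
        levels.append(math.ceil(levels[-1] / fan_out))   # group nodes under parents
    return levels


# A 12,000-token text split at the three segment lengths mentioned above.
for seg in (500, 1000, 2000):
    print(seg, tree_shape(12_000, seg, fan_out=4))
```

With these assumed numbers, 500-token segments give a four-level tree, while 1000-token and 2000-token segments both give three levels with fewer nodes to navigate.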

Then, the model recursively summarizes the contents of these leaf nodes to form "non-leaf nodes" (summ7-8).

Another difference between the two is that leaf nodes contain original information, while non-leaf nodes only have summarized secondary information.

Functionally, non-leaf nodes are used to navigate and locate the leaf nodes where the answer is located, while leaf nodes are used to reason about the answer.

The non-leaf nodes can span multiple levels: the model keeps summarizing level by level until the "root node" is obtained, forming a complete tree structure.

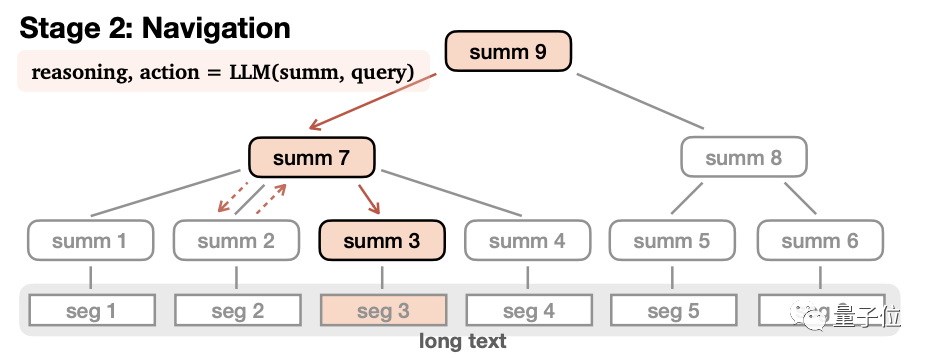
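The construction stage described above can be sketched roughly as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: `Node`, `build_memory_tree`, `fake_summarize` and the `fan_out` parameter are hypothetical names, whitespace-separated words stand in for real tokens, and `summarize` stands in for a prompted call to the large model.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Node:
    summary: str                      # summary text used for navigation
    text: Optional[str] = None        # original segment; only leaf nodes keep it
    children: List["Node"] = field(default_factory=list)

    @property
    def is_leaf(self) -> bool:
        return not self.children


def build_memory_tree(
    text: str,
    summarize: Callable[[str], str],  # stand-in for a prompted model call
    segment_tokens: int = 1000,
    fan_out: int = 4,                 # assumed number of children per parent
) -> Node:
    words = text.split()              # whitespace words approximate tokens here
    if not words:
        raise ValueError("text is empty")
    # Stage 1: cut the text into segments and summarize each one into a leaf.
    segments = [
        " ".join(words[i:i + segment_tokens])
        for i in range(0, len(words), segment_tokens)
    ]
    level = [Node(summary=summarize(seg), text=seg) for seg in segments]
    # Stage 2: summarize groups of summaries until a single root remains.
    while len(level) > 1:
        groups = [level[i:i + fan_out] for i in range(0, len(level), fan_out)]
        level = [
            Node(summary=summarize("\n".join(c.summary for c in g)), children=g)
            for g in groups
        ]
    return level[0]


def fake_summarize(passage: str) -> str:
    """Toy summarizer for demonstration: keep the first three words."""
    return " ".join(passage.split()[:3])


root = build_memory_tree(
    " ".join(f"w{i}" for i in range(10)), fake_summarize,
    segment_tokens=2, fan_out=2,
)
print(root.summary, len(root.children))
```

Only the leaves keep the original segment text, which mirrors the distinction drawn above between leaf nodes and non-leaf nodes.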

After the memory tree is established, you can enter the navigation retrieval stage to generate answers.

In this process, the model starts from the root node, reads the contents of its child nodes one by one, and then reasons about whether to enter one of them or return.

After deciding to enter a node, the model repeats this process until it reaches a leaf node. If the content of the leaf node is suitable, it generates the answer; otherwise it returns to the parent node.

To ensure the answer is complete, the process does not end as soon as a suitable leaf node is found. It ends when the model believes it has a complete answer, or when the maximum number of steps is reached. If the model finds during navigation that it has taken the wrong path, it can also navigate back.

In addition, MemWalker introduces a working memory mechanism to improve accuracy.
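The basic navigation loop, leaving working memory aside, might look something like the sketch below. Everything here is an assumption made for illustration: `navigate`, `choose_child` and `answer_from_leaf` are hypothetical names, the two callables stand in for prompted model calls, and remembering rejected nodes so they are not re-entered is a design choice of this sketch rather than something the article specifies.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Node:
    summary: str
    text: Optional[str] = None
    children: List["Node"] = field(default_factory=list)


def navigate(
    root: Node,
    query: str,
    choose_child: Callable[[str, List[str]], Optional[int]],  # index, or None to go back
    answer_from_leaf: Callable[[str, str], Optional[str]],    # answer, or None if unhelpful
    max_steps: int = 20,
) -> Optional[str]:
    path = [root]     # stack of nodes from the root to the current node
    rejected = set()  # ids of nodes already explored without success (assumption)
    for _ in range(max_steps):
        node = path[-1]
        if not node.children:  # leaf node: try to answer from the original text
            answer = answer_from_leaf(query, node.text or node.summary)
            if answer is not None:
                return answer
            choice = None      # unsuitable leaf, so go back
        else:                  # non-leaf node: read child summaries and pick one
            candidates = [c for c in node.children if id(c) not in rejected]
            choice = (
                choose_child(query, [c.summary for c in candidates])
                if candidates else None
            )
        if choice is None:     # navigate back to the parent
            rejected.add(id(node))
            path.pop()
            if not path:
                return None    # backed out of the root: nothing found
        else:
            path.append(candidates[choice])
    return None                # step budget exhausted


# Toy stand-ins: always enter the first candidate; answer if the query word appears.
def choose_first(query: str, summaries: List[str]) -> Optional[int]:
    return 0 if summaries else None


def answer_if_mentioned(query: str, text: str) -> Optional[str]:
    return text if query in text else None


tree = Node("pets", children=[Node("cats", "cats purr"), Node("dogs", "dogs bark")])
print(navigate(tree, "bark", choose_first, answer_if_mentioned))
```

In the toy run the walker first enters the wrong leaf, backs out, and then finds the answer in the second leaf, which is the "navigate back" behaviour described above.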

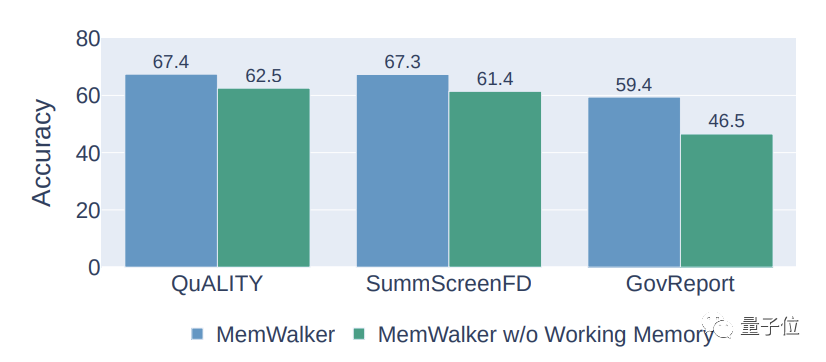

The working memory mechanism adds the content of visited nodes to the current context.

When the model enters a new node, the current node content will be added to the memory.

This mechanism allows the model to utilize the content of visited nodes at each step to avoid the loss of important information.
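One way to picture working memory is as a prompt builder that prepends the contents of previously visited nodes to the current node. The sketch below is an assumption-laden illustration: `build_context`, the section labels, the word-count budget `max_words`, and the policy of dropping the oldest entries first are all hypothetical and not taken from the paper.

```python
from typing import List


def build_context(
    visited: List[str],      # contents of nodes visited so far, oldest first
    current: str,            # content of the node the model has just entered
    query: str,
    max_words: int = 3000,   # assumed budget; words approximate tokens here
) -> str:
    """Assemble a prompt: working memory, then the current node, then the query."""

    def size(parts: List[str]) -> int:
        return sum(len(p.split()) for p in parts)

    memory = list(visited)
    # Drop the oldest entries first if the prompt would not fit (assumption).
    while memory and size(memory) + size([current, query]) > max_words:
        memory.pop(0)

    sections = []
    if memory:
        sections.append("Previously visited:\n" + "\n".join(memory))
    sections.append("Current node:\n" + current)
    sections.append("Question: " + query)
    return "\n\n".join(sections)


print(build_context(["root summary", "chapter 2 summary"], "segment text", "Who arrived first?"))
```

Because the window is limited, some truncation rule is needed once the path gets long; dropping the oldest entries is just one simple option.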

Experimental results show that the working memory mechanism can increase the accuracy of MemWalker by about 10%.

Moreover, the entire process described above is carried out through prompting alone, with no additional training required.

Theoretically, MemWalker can read infinitely long text as long as it has enough computing power.

However, the time and space complexity when constructing the memory tree becomes exponential as the length of the text increases.

The first author of the paper is Howard Chen, a Chinese doctoral student in the NLP Laboratory of Princeton University.

Chen Danqi, a Tsinghua Yao Class alumna, is Howard's advisor, and her talk at ACL this year was also related to retrieval.

Howard completed this work during his internship at Meta. Three researchers from the Meta AI Laboratory, Ramakanth Pasunuru, Jason Weston and Asli Celikyilmaz, also participated in the project.

Paper address: https://arxiv.org/abs/2310.05029
