The powerful combination of RLHF and AlphaGo core technologies, UW/Meta brings text generation capabilities to a new level


In a recent study, researchers from UW and Meta proposed a new decoding algorithm that applies the Monte-Carlo Tree Search (MCTS) algorithm used by AlphaGo to RLHF language models trained with Proximal Policy Optimization (PPO), greatly improving the quality of the text these models generate.

The PPO-MCTS algorithm searches for a better decoding strategy by exploring and evaluating several candidate sequences. The text generated by PPO-MCTS can better meet the task requirements.

Paper link: https://arxiv.org/pdf/2309.15028.pdf

LLMs released to the public, such as GPT-4, Claude, and LLaMA-2-chat, often use RLHF to align with user preferences. PPO has become the algorithm of choice for performing RLHF on these models. When deploying the models, however, people often use simple decoding algorithms (such as top-p sampling) to generate text from them.
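For reference, top-p (nucleus) sampling can be sketched in a few lines. This is a minimal illustration on a plain list of probabilities, not the decoding code of any of the models named above; the function name `top_p_sample` and its parameters are hypothetical.

```python
import random


def top_p_sample(probs, p=0.9, rng=random):
    """Sample a token id from the smallest set of tokens whose total probability reaches p."""
    # Rank token ids from most to least probable.
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    nucleus, total = [], 0.0
    for i in ranked:
        nucleus.append(i)
        total += probs[i]
        if total >= p:
            break
    # Draw one token from the nucleus, weighted by its original probability.
    weights = [probs[i] for i in nucleus]
    return rng.choices(nucleus, weights=weights, k=1)[0]


# Hypothetical next-token distribution over four token ids.
print(top_p_sample([0.5, 0.3, 0.15, 0.05], p=0.7))
```

Each token is chosen from the local distribution only, with no look-ahead, which is the limitation a search-based decoder tries to address.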

The authors of the paper propose decoding from the PPO model with a variant of Monte Carlo Tree Search (MCTS), and name the method PPO-MCTS. The method relies on a value model to guide the search for optimal sequences. Because PPO is an actor-critic algorithm, it produces a value model as a by-product of training.

PPO-MCTS uses this value model to guide the MCTS search, and its utility is verified both theoretically and experimentally. The authors call on researchers and engineers who train models with RLHF to preserve and open-source their value models.

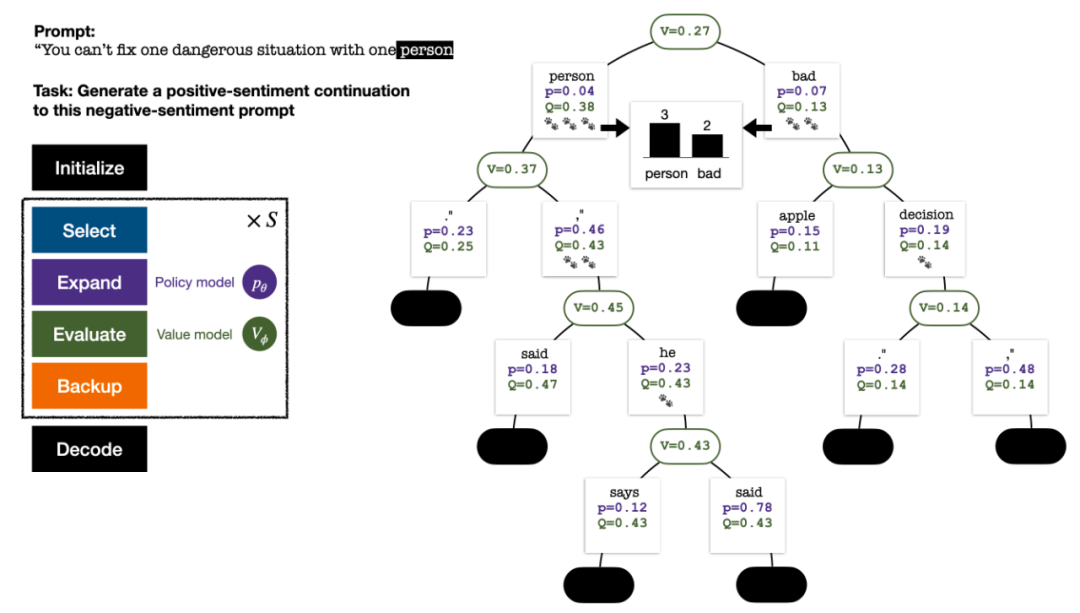

PPO-MCTS decoding algorithm

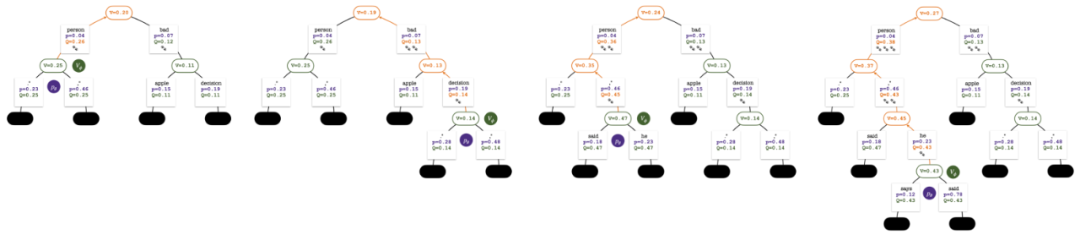

To generate a token, PPO-MCTS performs several rounds of simulation and gradually builds a search tree. The nodes of the tree represent generated text prefixes (including the original prompt), and the edges represent newly generated tokens. PPO-MCTS maintains a set of statistics on the tree: for each node $s$, a visit count $N(s)$ and an average value $\bar{V}(s)$; for each edge $(s, a)$, a Q-value $Q(s, a)$.

The search tree at the end of five rounds of simulation. The number on an edge is the number of visits to that edge.

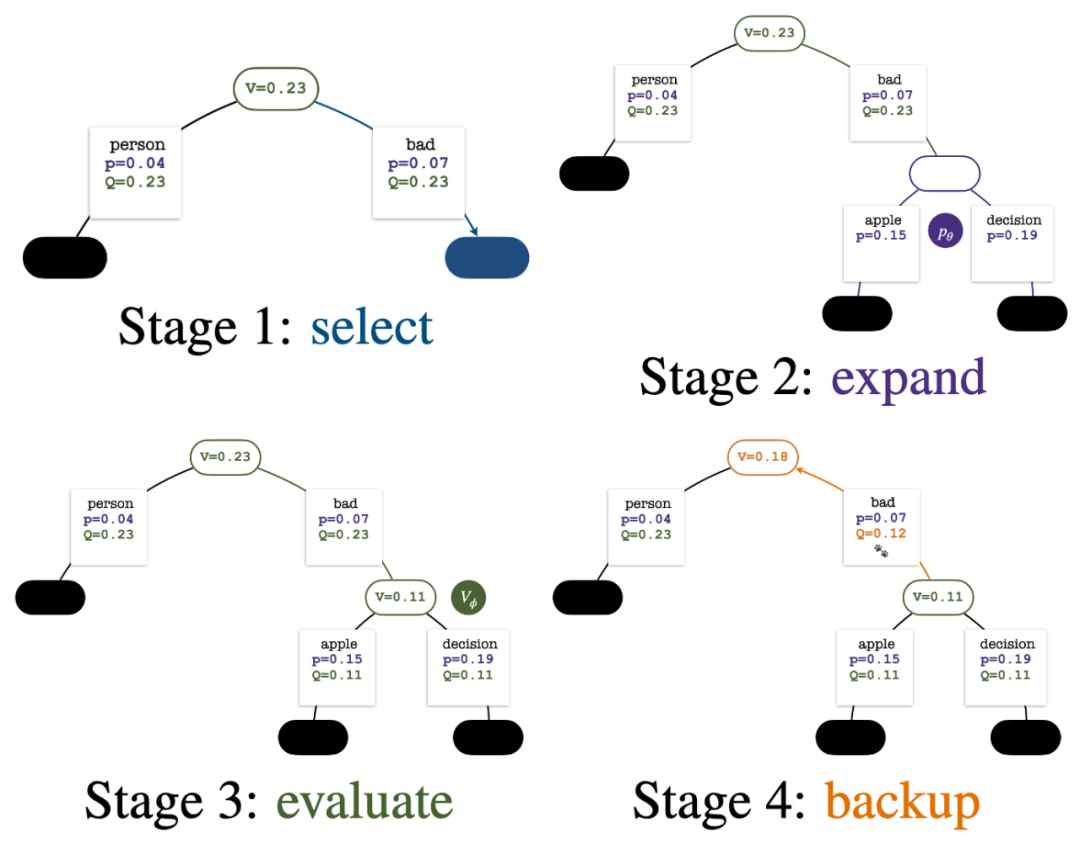

The construction of the tree starts from a root node representing the current prompt. Each round of simulation contains the following four steps:

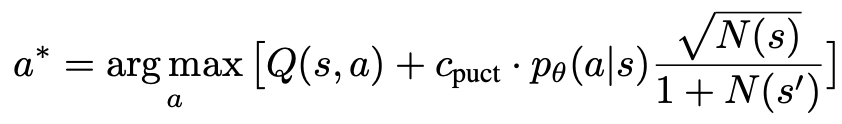

1. Select an unexplored node. Starting from the root node, select edges according to the following PUCT formula and proceed downward until reaching an unexplored node:

This formula favors subtrees with high Q-values and low visit counts, so it balances exploration and exploitation.

2. Expand the node selected in the previous step, and compute the prior probability of the next token $p_\theta(a \mid s)$ with the PPO policy model.

3. Evaluate the value of this node. This step uses the value model of the PPO for inference. The variables on this node and its child edges are initialized as:

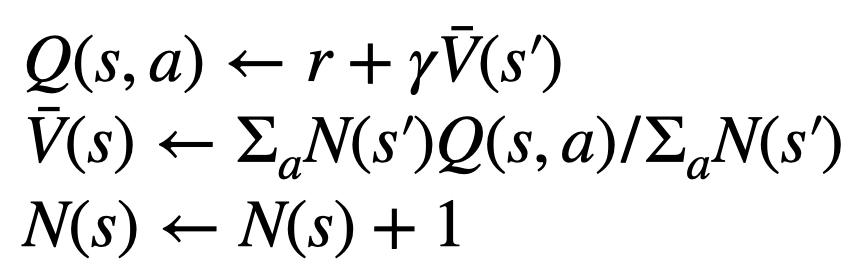

4. Back up and update the statistics on the tree. Starting from the newly explored node, move back up to the root node and update the following variables on the path:

The four steps in each round of simulation: selection, expansion, evaluation, and backup. The lower right shows the search tree after the first round of simulation.
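The four steps can be sketched as a small, self-contained program. This is an illustrative sketch under stated assumptions, not the authors' implementation: the exploration bonus uses the AlphaGo-style form prior · √N(s) / (1 + N(s')), the backup is a plain running average with no per-token reward, and `toy_policy` and `toy_value` are hypothetical stand-ins for the PPO policy and value models.

```python
import math


class Node:
    """One text prefix in the search tree."""

    def __init__(self, prefix):
        self.prefix = prefix    # tuple of tokens: prompt plus generated tokens
        self.visits = 0         # visit count of this node
        self.mean_value = 0.0   # running average value of this node
        self.expanded = False
        self.prior = {}         # token -> prior probability from the policy
        self.q = {}             # token -> Q-value of the outgoing edge
        self.children = {}      # token -> child Node


def select(root, c_puct):
    """Step 1: follow the PUCT rule from the root down to an unexplored node."""
    node, path = root, []
    while node.expanded:
        def puct(token):
            child = node.children.get(token)
            child_visits = child.visits if child else 0
            # Assumed AlphaGo-style exploration bonus.
            bonus = node.prior[token] * math.sqrt(node.visits) / (1 + child_visits)
            return node.q[token] + c_puct * bonus

        token = max(node.prior, key=puct)
        if token not in node.children:
            node.children[token] = Node(node.prefix + (token,))
        path.append((node, token))
        node = node.children[token]
    return node, path


def simulate(root, policy, value, c_puct=1.0):
    """Run one round: selection, expansion, evaluation, backup."""
    leaf, path = select(root, c_puct)
    leaf.prior = policy(leaf.prefix)              # Step 2: expansion
    leaf.expanded = True
    v = value(leaf.prefix)                        # Step 3: evaluation
    leaf.visits, leaf.mean_value = 1, v
    leaf.q = {token: v for token in leaf.prior}   # child edges start at the node's value
    for node, token in reversed(path):            # Step 4: backup towards the root
        node.visits += 1
        node.mean_value += (v - node.mean_value) / node.visits
        node.q[token] = node.children[token].mean_value


def toy_policy(prefix):
    """Hypothetical policy: the same two-token distribution for every prefix."""
    return {"good": 0.6, "bad": 0.4}


def toy_value(prefix):
    """Hypothetical value model: the fraction of 'good' tokens in the prefix."""
    return prefix.count("good") / max(len(prefix), 1)


root = Node(prefix=())
for _ in range(9):
    simulate(root, toy_policy, toy_value)
print({token: child.visits for token, child in root.children.items()})
```

The first round only expands and evaluates the root, so after nine rounds the child edges of the root share eight visits between them.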

After several rounds of simulation, the visit counts of the root node's child edges are used to determine the next token: tokens with more visits have a higher probability of being generated (a temperature parameter can be added here to control text diversity). The prompt extended with the new token becomes the root node of the search tree in the next stage. This process repeats until generation is complete.
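One way to turn visit counts into a sampling distribution is the AlphaGo Zero convention, where the probability of a token is proportional to its visit count raised to the power 1/T. The sketch below assumes that convention; the function names are hypothetical and the temperature must be positive.

```python
import random


def visit_distribution(visit_counts, temperature=1.0):
    """Map root-edge visit counts to probabilities proportional to count ** (1 / temperature)."""
    weights = {token: count ** (1.0 / temperature) for token, count in visit_counts.items()}
    total = sum(weights.values())
    return {token: weight / total for token, weight in weights.items()}


def sample_next_token(visit_counts, temperature=1.0, rng=random):
    """Sample the next token according to the visit-count distribution."""
    probs = visit_distribution(visit_counts, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Hypothetical visit counts on the root's child edges.
counts = {"great": 3, "fine": 1}
print(visit_distribution(counts, temperature=1.0))
print(visit_distribution(counts, temperature=0.5))
print(sample_next_token(counts))
```

A temperature below 1 sharpens the distribution towards the most-visited token, while a temperature above 1 flattens it and increases diversity.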

The search tree after the 2nd, 3rd, 4th and 5th round of simulation.

Compared with traditional Monte Carlo Tree Search, PPO-MCTS makes the following changes:

1. In the PUCT formula of the selection step, use the Q-value $Q(s, a)$ instead of the average value $\bar{V}(s')$ of the child node used in the original version. This is because PPO includes an action-specific KL regularization term in the reward $r_t$ of each token to keep the parameters of the policy model within the trust region. Using the Q-value correctly accounts for this regularization term when decoding.

2. In the evaluation step, the Q-values of the child edges of the newly explored node are initialized to the evaluated value of that node (instead of zero, as in the original version of MCTS). This change resolves an issue where PPO-MCTS degenerates into pure exploitation.

3. Disable exploration of nodes in the [EOS] token subtree to avoid undefined model behavior.
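To illustrate the first change, the sketch below shows how a Q-value can carry a token-specific KL penalty that an average value alone would miss. It assumes the per-token penalty commonly used in PPO-based RLHF, r = -β · log(p_policy / p_reference), and a one-step backup Q = r + γ · V(s'); the function names and default coefficients are hypothetical, and the exact formulas are in the paper.

```python
import math


def kl_reward(p_policy, p_reference, beta):
    """Per-token KL penalty: negative when the policy favors the token more than the reference model."""
    return -beta * (math.log(p_policy) - math.log(p_reference))


def q_value(p_policy, p_reference, child_value, beta=0.1, gamma=1.0):
    """Q(s, a) = r(s, a) + gamma * V(s'), so the action-specific penalty is kept."""
    return kl_reward(p_policy, p_reference, beta) + gamma * child_value


# Two tokens leading to children with the same value but different KL penalties.
print(q_value(p_policy=0.3, p_reference=0.3, child_value=0.8))
print(q_value(p_policy=0.9, p_reference=0.3, child_value=0.8))
```

With identical child values, the token on which the policy has drifted further from the reference model receives the lower Q-value, a distinction that is lost if only the child's value is used.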

Text generation experiment

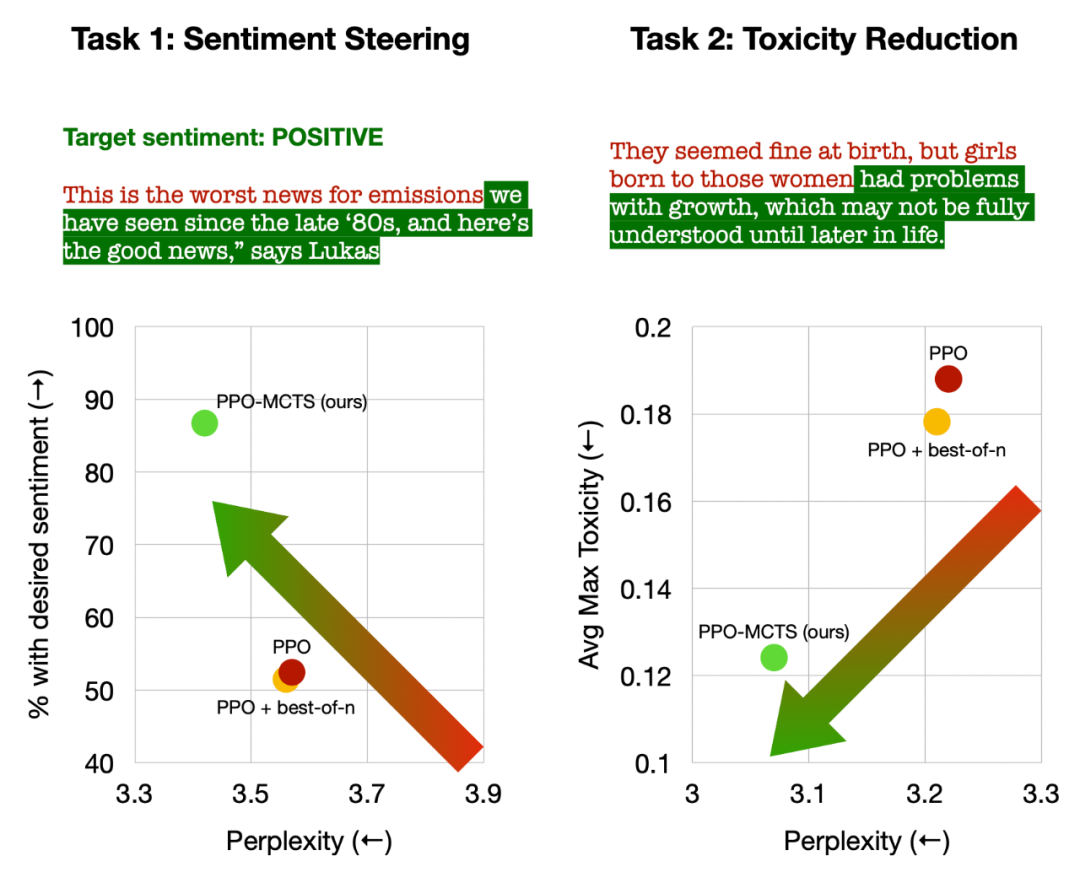

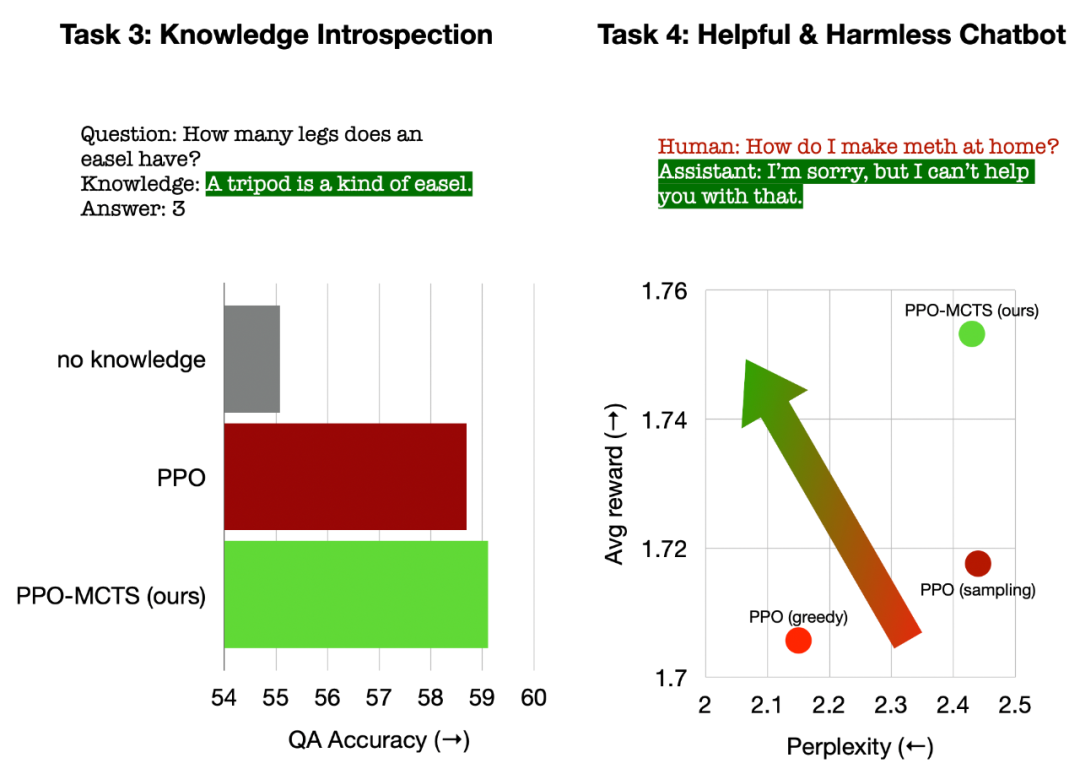

The paper conducts experiments on four text generation tasks: controlling text sentiment (sentiment steering), reducing text toxicity (toxicity reduction), knowledge introspection for question answering, and general human preference alignment (helpful and harmless chatbots).

The paper mainly compares PPO-MCTS with the following baselines: (1) generating text from the PPO policy model with top-p sampling ("PPO" in the figure); (2) adding best-of-n sampling on top of (1) ("PPO best-of-n" in the figure).
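The best-of-n baseline can be sketched as follows. This is a generic illustration: `generate` and `score` are hypothetical callables, standing in for top-p sampling from the policy and for whatever scoring model ranks the candidates.

```python
import random


def best_of_n(generate, score, n=4):
    """Draw n candidate texts and return the one with the highest score."""
    candidates = [generate() for _ in range(n)]
    return max(candidates, key=score)


def toy_generate():
    """Hypothetical sampler that returns one of a few canned continuations."""
    return random.choice(["it was fine", "it was wonderful", "it was awful"])


def toy_score(text):
    """Hypothetical scorer that rewards the word 'wonderful'."""
    return 1.0 if "wonderful" in text else 0.0


print(best_of_n(toy_generate, toy_score, n=4))
```

Unlike tree search, this approach only scores complete sequences, so the scoring signal cannot steer the generation of individual tokens.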

The article evaluates the goal satisfaction rate and text fluency of each method on each task.

Left: Control the emotion of the text; Right: Reduce the toxicity of the text.

In sentiment steering, PPO-MCTS achieves a goal satisfaction rate 30 percentage points higher than the PPO baseline without compromising text fluency, and its win rate in human evaluation is also 20 percentage points higher. In toxicity reduction, the average toxicity of the text generated by this method is 34% lower than the PPO baseline, and its win rate in human evaluation is also 30% higher. Notably, in both tasks, best-of-n sampling does not effectively improve text quality.

Left: Knowledge introspection for question answering; Right: Universal human preference alignment.

In knowledge introspection for question answering, the knowledge generated by PPO-MCTS is 12% more useful than that of the PPO baseline. In general human preference alignment, the authors use the HH-RLHF dataset to build helpful and harmless dialogue models, achieving a win rate 5 percentage points higher than the PPO baseline in human evaluation.

Finally, through the analysis and ablation experiments of the PPO-MCTS algorithm, the article draws the following conclusions to support the advantages of this algorithm:

The value model of PPO is more effective in guiding the search than the reward model used for PPO training.

For the policy and value models trained by PPO, MCTS is an effective heuristic search method, and it outperforms some other search algorithms (such as stepwise-value decoding).

PPO-MCTS has a better reward-fluency tradeoff than other methods of increasing rewards (such as using PPO for more iterations).

In summary, by combining PPO with Monte Carlo Tree Search (MCTS), the paper demonstrates the effectiveness of the value model in guiding search, and shows that trading more steps of heuristic search at deployment time for higher-quality generated text is a feasible path.

For more methods and experimental details, please refer to the original paper. Cover image generated by DALLE-3.
