On October 30, Kunlun Wanwei announced that it is open-sourcing its "Tiangong" Skywork-13B series of ten-billion-parameter-scale large language models. Unusually, it is also open-sourcing a matching, very large, high-quality Chinese dataset of 600GB and 150B tokens.

Kunlun Wanwei's "Tiangong" Skywork-13B series currently includes two models with 13 billion parameters: Skywork-13B-Base and Skywork-13B-Math. Both achieved the best results among models of the same size in multiple authoritative evaluations and benchmarks such as CEVAL and GSM8K. Their Chinese ability is particularly strong, and their performance in Chinese technology, finance, government affairs and other fields exceeds that of other open-source models.

Skywork-13B download address (ModelScope): https://modelscope.cn/organization/skywork

Skywork-13B download address (GitHub): https://github.com/SkyworkAI/Skywork

In addition to the open-source models, the Skywork-13B series is also open-sourcing Skypile/Chinese-Web-Text-150B, a high-quality Chinese corpus of 600GB and 150B tokens. It is one of the largest open-source Chinese datasets currently available.

At the same time, Kunlun Wanwei's "Tiangong" Skywork-13B series of large models will be fully open for commercial use: developers can use the models commercially without applying.

13 billion parameters, two models, one of the largest Chinese datasets, and fully open commercial use. Kunlun Wanwei's "Tiangong" Skywork-13B series can be called the most thoroughly open-sourced, high-quality, commercially usable ten-billion-scale model series in the industry.

The open-sourcing of the Skywork-13B series will provide strong technical support for large-model applications and the development of the open-source community. It lowers the commercial threshold for large models, promotes the adoption of artificial intelligence technology across industries, and contributes to building the artificial intelligence ecosystem. We will work together with the open-source community to explore the unknown and create a better future.

Strongest parameter performance: comprehensively surpassing large models of the same size

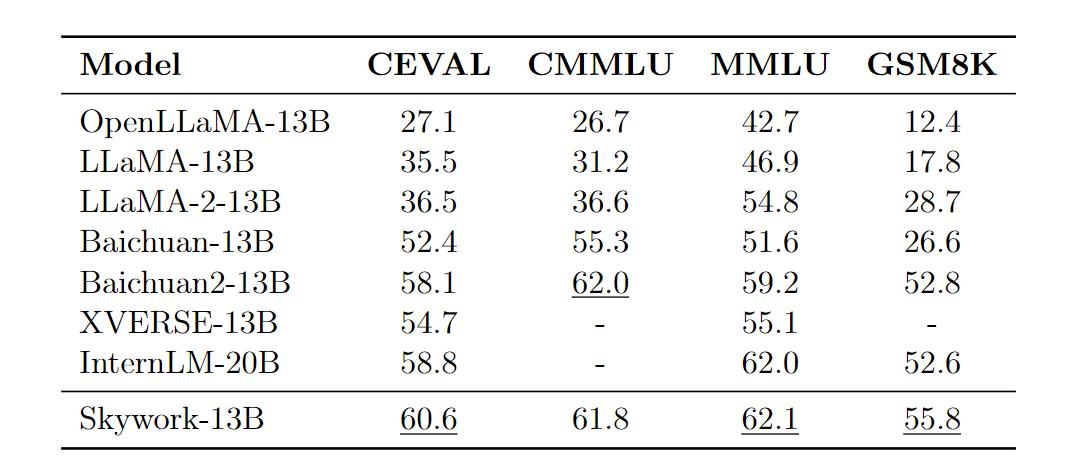

The newly open-sourced Skywork-13B series comprehensively surpasses open-source large models such as LLaMA2-13B on several authoritative evaluation benchmarks, including CEVAL, CMMLU, MMLU and GSM8K, and achieves the best results among large models of the same scale (data as of October 25).

Most training data: 3.2T tokens of high-quality multilingual training data

The Skywork-13B series models have 13 billion parameters and were trained on 3.2 trillion tokens of high-quality multilingual data. The models' generation, creative and mathematical reasoning abilities have improved significantly.

Strongest Chinese language modeling capability: surpassing all Chinese open-source models in Chinese language modeling perplexity evaluation

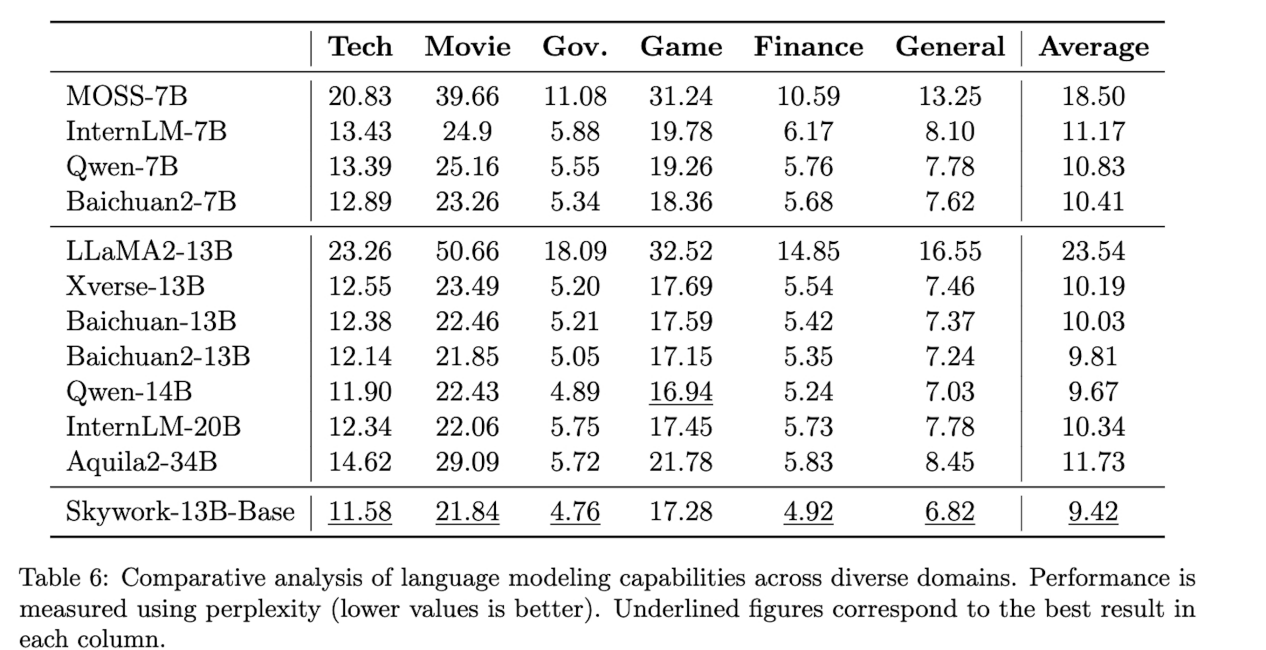

The Skywork-13B series models excel at Chinese language modeling and have strong Chinese cultural and creative-writing abilities. In evaluations of Chinese text creation, they demonstrated outstanding capabilities, especially in technology, finance, government affairs, corporate services, cultural creation and games, where they perform better than other open-source models.

The figure shows the perplexity of the evaluated models on data from different domains. The lower the value, the stronger the model's language modeling ability in that domain. The results show that Tiangong 13B performs well on technical articles, movies, government reports, games, finance and general-domain text.
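The article does not describe how the perplexity values in the figure were computed. As a minimal sketch, assuming the standard definition (the exponential of the negative mean log-probability per token), the per-domain comparison could look like the following. The function name `perplexity`, the domain names, and the log-probability values are all hypothetical and chosen only for illustration; they are not results from the evaluation.

```python
import math


def perplexity(token_log_probs):
    """Return exp(-mean(log p)) over a list of natural-log token probabilities."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    mean_log_prob = sum(token_log_probs) / len(token_log_probs)
    return math.exp(-mean_log_prob)


# Hypothetical per-token log-probabilities a model assigned to held-out text
# in two domains. Real values would come from running the model on test data.
domain_log_probs = {
    "finance": [math.log(0.5)] * 4,   # model gives each token probability 0.5
    "games": [math.log(0.25)] * 4,    # model gives each token probability 0.25
}

# Lower perplexity means the model predicts that domain's text better.
scores = {domain: perplexity(lps) for domain, lps in domain_log_probs.items()}
for domain, ppl in sorted(scores.items(), key=lambda item: item[1]):
    print(f"{domain}: {ppl:.2f}")
```

In this toy input the "finance" text gets a perplexity of about 2 and the "games" text about 4, so the hypothetical model would be judged stronger on finance, which matches the "lower is better" reading of the figure.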

One of the largest Chinese open-source datasets: 150B tokens of high-quality Chinese corpus

The Skywork-13B series is accompanied by Skypile/Chinese-Web-Text-150B, an open-source, high-quality Chinese corpus of 600GB and 150B tokens, which is currently one of the largest open-source Chinese datasets. Developers can draw as fully as possible on the large-model pre-training process and experience described in the technical report, deeply customize model parameters, and carry out targeted training and optimization.

Most sincere open-source commercial use: no application required

Currently, most Chinese models in the open-source community are not fully available for commercial use. Users normally have to go through a cumbersome commercial license application process, and in some cases the license explicitly restricts eligibility by company size, industry, number of users and so on. Kunlun Wanwei attaches great importance to the openness and accessibility of the Skywork-13B series: commercial use requires no license application, the authorization process is simplified, and restrictions on industry, company size and number of users are removed. The aim is to help more users and companies interested in Chinese large models continue to explore and progress in the industry.

The Skywork-13B series models are now fully licensed for commercial use. Users only need to download the model and agree to and abide by the "Skywork Model Community License Agreement". They do not need to apply for any further authorization to use the models for commercial purposes. We hope that users can more easily explore the technical capabilities of the Skywork-13B series and explore commercial applications in different scenarios.

We aim to promote the prosperity of the open-source ecosystem, allow more developers to take part in the technological development of AIGC, and advance the technology through co-creation and sharing. In the AI era, open-source ecosystem building is booming and has become an important link in integrating AI with applications. By lowering the threshold for model development and the cost of use, and by sharing technical capabilities and experience as widely as possible, more companies and developers will be able to take part in this AI-led technological change. Fang Han, chairman and CEO of Kunlun Wanwei, was among the earliest senior open-source experts to take part in building the open-source ecosystem, and is also one of the pioneers of Chinese Linux open source. The open-source spirit and the development of AIGC technology will be fully integrated in Kunlun Wanwei's strategy.

All in AGI

Kunlun Wanwei Group Introduction

Thanks to its forward-looking judgment of technology trends, Kunlun Wanwei began building its presence in the AIGC field as early as 2020. To date, the company has accumulated nearly three years of related engineering research and development experience and has built industry-leading capabilities for the deep processing of pre-training data. Kunlun Wanwei has also made major breakthroughs in artificial intelligence and has now formed six major AI business lines: AI large models, AI search, AI games, AI music, AI animation and AI social networking. It is one of the domestic companies with the strongest model technology and engineering capabilities and the most comprehensive layout, and it is fully committed to building open-source communities.
