Large models are the core technology of the AI industry, and the quality of the foundation model largely determines the prospects of AI industrialization. Training large models is a systems-engineering effort spanning complex technologies such as computing infrastructure, networking, storage, big data, AI frameworks, and AI models. Only a powerful cloud computing system can train high-quality large models.

In 2009, Zhou Jingren of Alibaba Cloud proposed the concept that "the data center is a computer". Today, in the AI era, such a technical system is needed more than ever. Acting as a supercomputer, the cloud can efficiently connect heterogeneous computing resources, overcome the performance bottleneck of any single chip, and collaboratively complete large-scale intelligent computing tasks.

To ensure stable interconnection and efficient parallel computing for large-scale model training, Alibaba Cloud has upgraded its artificial intelligence platform PAI. At its bottom layer, PAI adopts HPN 7.0, a new-generation AI cluster network architecture that supports clusters of up to 100,000 cards. The speedup ratio for ultra-large-scale distributed training reaches 96%, far above the industry level. In large-scale model training tasks, it can save more than 50% of computing resources, with world-leading performance.

Alibaba Cloud's Tongyi large model series is trained on the PAI platform. Besides Tongyi, many leading Chinese enterprises and institutions, including Baichuan Intelligence, Zhipu AI, 01.AI, Kunlun Wanwei, vivo, and Fudan University, are also training large models on Alibaba Cloud.

Wang Xiaochuan, founder and CEO of Baichuan Intelligence, said: "Baichuan released 7 large models only half a year after it was founded. This rapid iteration is inseparable from the support of cloud computing." Baichuan Intelligence and Alibaba Cloud have cooperated closely, and through their joint efforts Baichuan successfully completed large model training on a thousand-card cluster, effectively reduced the cost of model inference, and improved the efficiency of model deployment.

Alibaba Cloud has become the public AI computing infrastructure for China's large models. To date, many mainstream Chinese large models provide external API services through Alibaba Cloud, including the Tongyi series, the Baichuan series, Zhipu AI's ChatGLM series, and the Jiang Ziya general large model.

As the artificial intelligence industry develops, demand for large-scale intelligent computing power will explode. Alibaba Cloud has built 89 cloud computing data centers in 30 regions worldwide and provides more than 3,000 edge computing nodes, so the low latency and high elasticity of cloud computing can be fully exploited. This year, for example, Alibaba Cloud successfully absorbed a sudden, high-intensity traffic surge for Miaoya Camera within a short period of time.

Zhou Jingren said: "As large model technology becomes integrated with cloud computing itself, we hope the cloud of the future can drive itself like an autonomous car, greatly improving the experience of developers using the cloud."

Reportedly, more than 30 Alibaba Cloud products have already integrated large model capabilities. For example, DataWorks, Alibaba Cloud's big data governance platform, has added a new mode of interaction, Copilot: users simply describe what they want in natural language, and it generates SQL and automatically performs the corresponding data ETL operations. This raises the efficiency of the entire development and analysis process by more than 30%, comparable to "autonomous driving".

Alibaba Cloud's container, database, and other products offer a similar development experience, with functions such as NL2SQL and SQL comment generation, error correction, and optimization. In the future, these capabilities will also be integrated into other Alibaba Cloud products.

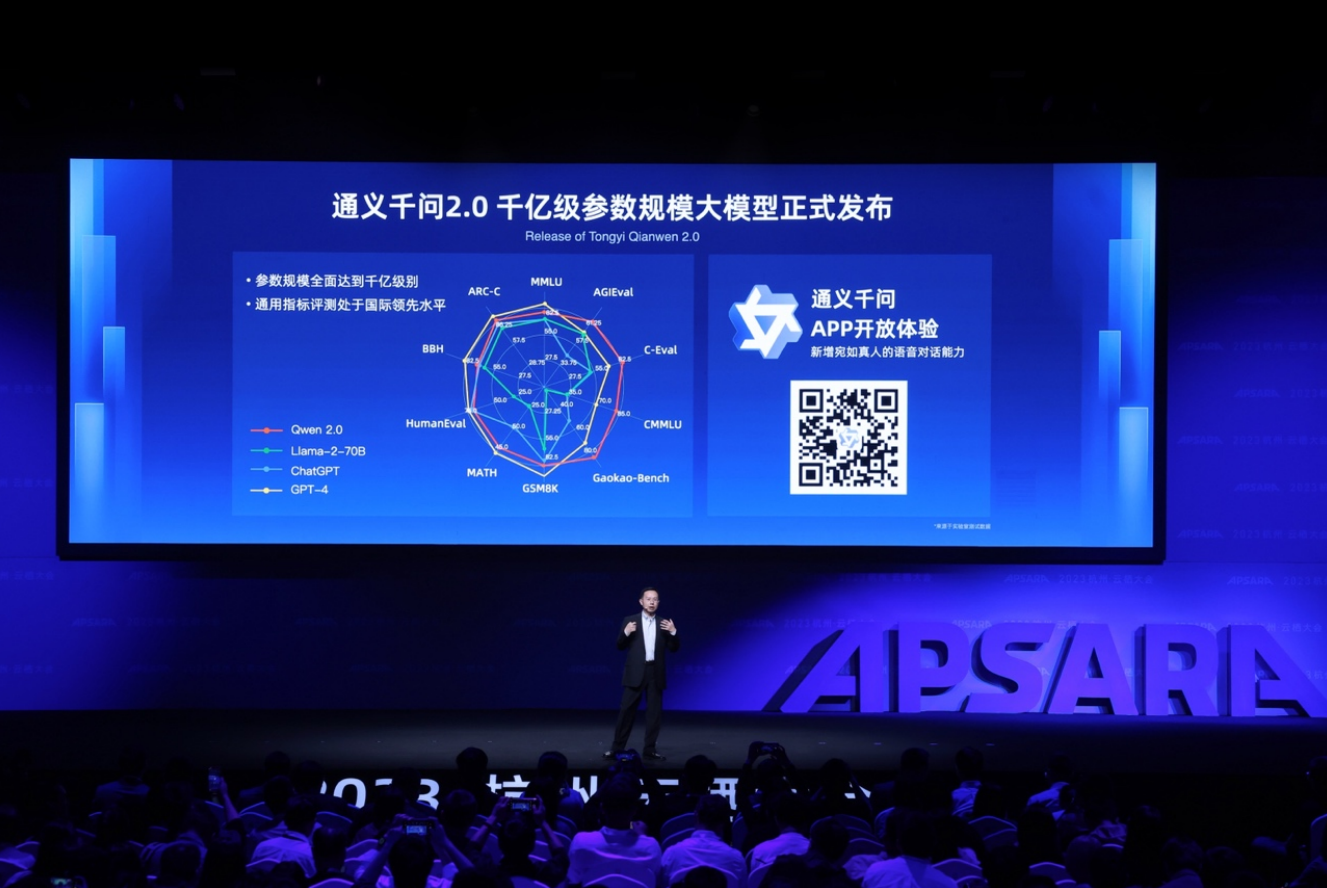

At the 2023 Yunqi Conference, Zhou Jingren announced the latest progress on Alibaba's self-developed large models and released Tongyi Qianwen 2.0, a model with hundreds of billions of parameters. Tongyi Qianwen 2.0 comprehensively outperformed GPT-3.5 and Llama 2 across 10 authoritative benchmarks and is rapidly catching up with GPT-4.

Countless industries want to use large models to transform how they produce and deliver services, but the high barrier to using large models keeps most people out of this technological wave. Whether customizing a dedicated large model or building innovative applications on top of one, the requirements for talent, technology, and funding are high.

At the Yunqi Conference, Zhou Jingren also released Alibaba Cloud Bailian, a one-stop large model application development platform. The platform integrates mainstream high-quality large models from China and abroad and provides model selection, fine-tuning, security suites, model deployment, and full-pipeline application development tools. This spares users complex work such as deploying underlying computing power, pre-training models, and developing tools. Developers can build a large model application in just 5 minutes and "refine" an enterprise-specific model within a few hours, leaving them free to focus on application innovation.

To promote the adoption of large models across industries, Alibaba Cloud has built eight industry large models based on Tongyi and announced their progress on site. The personalized character creation platform Tongyi Xingchen, the intelligent investment research assistant Tongyi Dianjin, and the AI reading assistant Tongyi Zhiwen made their debuts; the intelligent coding assistant Tongyi Lingma is widely used within Alibaba Cloud and has been well received; and the work-and-study AI assistant Tongyi Tingwu processes more than 50,000 audio and video files every day, with more than one million cumulative users.

The development of large models is triggering a new round of innovation across all industries. Enterprises such as CCTV.com, Langxin Technology, and AsiaInfo Technology have taken the lead in developing dedicated models and applications on the Alibaba Cloud Bailian platform. Langxin Technology trained an electric power industry model in the cloud and developed an "intelligent assistant for power bill interpretation" and a "power industry policy analysis/data analysis assistant". These applications have increased customer service efficiency by 50% and reduced complaint rates by 70%.

"Promoting a thriving AI ecosystem in China is Alibaba Cloud's primary goal. Alibaba Cloud is committed to building the most open large model platform of the AI era, and we welcome all large models to connect to Alibaba Cloud Bailian so that together we can provide AI services to developers," said Zhou Jingren.

Alibaba Cloud is the first Chinese technology company to open source its self-developed large models, and it leads the open source large model trend in China. Alibaba Cloud has so far open sourced the 7B and 14B versions of Tongyi Qianwen, with more than one million downloads. At the conference, Zhou Jingren announced that the Tongyi Qianwen 72B model will be open sourced, making it the largest open source model in China by parameter count.

Beyond sharing its own new technologies with developers, Alibaba Cloud also strongly supports third-party large model development. In Alibaba Cloud's ModelScope community, leading players such as Baichuan Intelligence, Zhipu AI, Shanghai Artificial Intelligence Laboratory, and IDEA Research Institute have open sourced their core large models for the first time, and Alibaba Cloud provides developers with free GPU computing power to try and use these models, totaling more than 30 million hours so far.

According to Zhou Jingren, the ModelScope community has gathered more than 2,300 AI models and attracted 2.8 million AI developers, and model downloads have exceeded 100 million, making it the largest AI community in China with the most active developers.

At the 2023 Yunqi Conference, Alibaba Cloud also announced a major initiative, the "Yun Gong Kaiwu Plan", which aims to provide a cloud server to every college student in China. In addition, Alibaba Cloud will provide larger-scale computing resources to partner universities to help China's young scholars and students reach the frontier of scientific research. Tsinghua University, Peking University, Zhejiang University, Shanghai Jiao Tong University, University of Science and Technology of China, South China University of Technology, and other universities have become the plan's first batch of partners.
