Machine Learning | PyTorch Concise Tutorial Part 1

The previous articles introduced feature normalization and tensors. Next, I will write two concise tutorials on PyTorch, mainly introducing simple practices of PyTorch.

1. Four arithmetic operations

import torcha = torch.tensor([2, 3, 4])b = torch.tensor([3, 4, 5])print("a + b: ", (a + b).numpy())print("a - b: ", (a - b).numpy())print("a * b: ", (a * b).numpy())print("a / b: ", (a / b).numpy())There is no need to explain addition, subtraction, multiplication and division. The output is:

a + b:[5 7 9]a - b:[-1 -1 -1]a * b:[ 6 12 20]a / b:[0.6666667 0.750.8]

2. Linear regression

Linear regression is found A straight line is as close as possible to the known point, as shown in the figure:

Figure 1

Figure 1

import torchfrom torch import optimdef build_model1():return torch.nn.Sequential(torch.nn.Linear(1, 1, bias=False))def build_model2():model = torch.nn.Sequential()model.add_module("linear", torch.nn.Linear(1, 1, bias=False))return modeldef train(model, loss, optimizer, x, y):model.train()optimizer.zero_grad()fx = model.forward(x.view(len(x), 1)).squeeze()output = loss.forward(fx, y)output.backward()optimizer.step()return output.item()def main():torch.manual_seed(42)X = torch.linspace(-1, 1, 101, requires_grad=False)Y = 2 * X + torch.randn(X.size()) * 0.33print("X: ", X.numpy(), ", Y: ", Y.numpy())model = build_model1()loss = torch.nn.MSELoss(reductinotallow='mean')optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.9)batch_size = 10for i in range(100):cost = 0.num_batches = len(X) // batch_sizefor k in range(num_batches):start, end = k * batch_size, (k + 1) * batch_sizecost += train(model, loss, optimizer, X[start:end], Y[start:end])print("Epoch = %d, cost = %s" % (i + 1, cost / num_batches))w = next(model.parameters()).dataprint("w = %.2f" % w.numpy())if __name__ == "__main__":main()(1) Start with the main function, torch.manual_seed(42 ) is used to set the seed of the random number generator to ensure that the random number sequence generated is the same every time it is run. This function accepts an integer parameter as a seed and can be used in scenarios that require random numbers such as training neural networks to ensure Repeatability of results;

(2) torch.linspace(-1, 1, 101, requires_grad=False) is used to generate a set of equally spaced values within a specified interval. This function accepts three Parameters: starting value, ending value and number of elements, returning a tensor containing the specified number of equally spaced values;

(3) Internal implementation of build_model1:

- torch.nn.Sequential(torch.nn.Linear(1, 1, bias=False)) uses the constructor of the nn.Sequential class, passes the linear layer to it as a parameter, and then returns a Neural network model;

- build_model2 has the same function as build_model1, using the add_module() method to add a submodule named linear to it;

(4) torch.nn.MSELoss (reductinotallow='mean') defines the loss function;

Use optim.SGD(model.parameters(), lr=0.01, momentum=0.9) to implement the Stochastic Gradient Descent (SGD) optimization algorithm

Split the training set by batch size and loop 100 times

(7) Next is the training function train, which is used to train a neural network model. Specifically, this function accepts the following Parameters:

- model: neural network model, usually an instance of a class inherited from nn.Module;

- loss: loss function, used to calculate the predicted value of the model and the true value The difference between values;

- optimizer: optimizer, used to update the parameters of the model;

- x: input data, which is a tensor of torch.Tensor type;

- y: The target data is a tensor of torch.Tensor type;

(8) train is a commonly used method in the PyTorch training process. The steps are as follows:

- Set the model to training mode, that is, enable special operations used during training such as dropout and batch normalization;

- Clear the gradient cache of the optimizer for a new round of gradient calculation;

- Pass the input data to the model, calculate the predicted value of the model, and pass the predicted value and target data to the loss function to calculate the loss value;

- Backpropagate the loss value and calculate the gradient of the model parameters ;

- Use the optimizer to update the model parameters to minimize the loss value;

- Return the scalar value of the loss value;

(9)print("Round times = %d, loss value = %s" % (i 1, cost / num_batches)) Finally, print the current training round and loss value. The above code output is as follows:

...Epoch = 95, cost = 0.10514946877956391Epoch = 96, cost = 0.10514946877956391Epoch = 97, cost = 0.10514946877956391Epoch = 98, cost = 0.10514946877956391Epoch = 99, cost = 0.10514946877956391Epoch = 100, cost = 0.10514946877956391w = 1.98

3. Logistic regression

Logistic regression uses a curve to approximately represent the trajectory of a bunch of discrete points. As shown in the figure:

Figure 2

Figure 2

import numpy as npimport torchfrom torch import optimfrom data_util import load_mnistdef build_model(input_dim, output_dim):return torch.nn.Sequential(torch.nn.Linear(input_dim, output_dim, bias=False))def train(model, loss, optimizer, x_val, y_val):model.train()optimizer.zero_grad()fx = model.forward(x_val)output = loss.forward(fx, y_val)output.backward()optimizer.step()return output.item()def predict(model, x_val):model.eval()output = model.forward(x_val)return output.data.numpy().argmax(axis=1)def main():torch.manual_seed(42)trX, teX, trY, teY = load_mnist(notallow=False)trX = torch.from_numpy(trX).float()teX = torch.from_numpy(teX).float()trY = torch.tensor(trY)n_examples, n_features = trX.size()n_classes = 10model = build_model(n_features, n_classes)loss = torch.nn.CrossEntropyLoss(reductinotallow='mean')optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.9)batch_size = 100for i in range(100):cost = 0.num_batches = n_examples // batch_sizefor k in range(num_batches):start, end = k * batch_size, (k + 1) * batch_sizecost += train(model, loss, optimizer,trX[start:end], trY[start:end])predY = predict(model, teX)print("Epoch %d, cost = %f, acc = %.2f%%"% (i + 1, cost / num_batches, 100. * np.mean(predY == teY)))if __name__ == "__main__":main()(1) Start with the main function, which is introduced above torch.manual_seed(42), skip it here ;

(2) load_mnist is its own implementation of downloading the mnist data set, returning trX and teX as input data, trY and teY as label data;

(3) internal implementation of build_model: torch.nn .Sequential(torch.nn.Linear(input_dim, output_dim, bias=False)) is used to build a neural network model containing a linear layer. The number of input features of the model is input_dim, the number of output features is output_dim, and the linear layer has no Bias term, where n_classes=10 means outputting 10 categories; After rewriting: (3) Internal implementation of build_model: Use torch.nn.Sequential(torch.nn.Linear(input_dim, output_dim, bias=False)) to build a neural network model containing a linear layer. The number of input features of the model is input_dim. The number of output features is output_dim, and the linear layer has no bias term. Among them, n_classes=10 means outputting 10 categories;

(4) The other steps are to define the loss function, gradient descent optimizer, split the training set through batch_size, and loop 100 times for train;

Use optim.SGD(model.parameters(), lr=0.01, momentum=0.9) to implement the Stochastic Gradient Descent (SGD) optimization algorithm

(6) At the end of each round of training Finally, the predict function needs to be executed to make predictions. This function accepts two parameters model (the trained model) and teX (the data that needs to be predicted). Specific steps are as follows:

- model.eval()模型设置为评估模式,这意味着模型将不会进行训练,而是仅用于推理;

- 将output转换为NumPy数组,并使用argmax()方法获取每个样本的预测类别;

(7)print("Epoch %d, cost = %f, acc = %.2f%%" % (i + 1, cost / num_batches, 100. * np.mean(predY == teY)))最后打印当前训练的轮次,损失值和acc,上述的代码输出如下(执行很快,但是准确率偏低):

...Epoch 91, cost = 0.252863, acc = 92.52%Epoch 92, cost = 0.252717, acc = 92.51%Epoch 93, cost = 0.252573, acc = 92.50%Epoch 94, cost = 0.252431, acc = 92.50%Epoch 95, cost = 0.252291, acc = 92.52%Epoch 96, cost = 0.252153, acc = 92.52%Epoch 97, cost = 0.252016, acc = 92.51%Epoch 98, cost = 0.251882, acc = 92.51%Epoch 99, cost = 0.251749, acc = 92.51%Epoch 100, cost = 0.251617, acc = 92.51%

4、神经网络

一个经典的LeNet网络,用于对字符进行分类,如图:

图3

图3

- 定义一个多层的神经网络

- 对数据集的预处理并准备作为网络的输入

- 将数据输入到网络

- 计算网络的损失

- 反向传播,计算梯度

import numpy as npimport torchfrom torch import optimfrom data_util import load_mnistdef build_model(input_dim, output_dim):return torch.nn.Sequential(torch.nn.Linear(input_dim, 512, bias=False),torch.nn.Sigmoid(),torch.nn.Linear(512, output_dim, bias=False))def train(model, loss, optimizer, x_val, y_val):model.train()optimizer.zero_grad()fx = model.forward(x_val)output = loss.forward(fx, y_val)output.backward()optimizer.step()return output.item()def predict(model, x_val):model.eval()output = model.forward(x_val)return output.data.numpy().argmax(axis=1)def main():torch.manual_seed(42)trX, teX, trY, teY = load_mnist(notallow=False)trX = torch.from_numpy(trX).float()teX = torch.from_numpy(teX).float()trY = torch.tensor(trY)n_examples, n_features = trX.size()n_classes = 10model = build_model(n_features, n_classes)loss = torch.nn.CrossEntropyLoss(reductinotallow='mean')optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.9)batch_size = 100for i in range(100):cost = 0.num_batches = n_examples // batch_sizefor k in range(num_batches):start, end = k * batch_size, (k + 1) * batch_sizecost += train(model, loss, optimizer,trX[start:end], trY[start:end])predY = predict(model, teX)print("Epoch %d, cost = %f, acc = %.2f%%"% (i + 1, cost / num_batches, 100. * np.mean(predY == teY)))if __name__ == "__main__":main()(1)以上这段神经网络的代码与逻辑回归没有太多的差异,区别的地方是build_model,这里是构建一个包含两个线性层和一个Sigmoid激活函数的神经网络模型,该模型包含一个输入特征数量为input_dim,输出特征数量为output_dim的线性层,一个Sigmoid激活函数,以及一个输入特征数量为512,输出特征数量为output_dim的线性层;

(2)print("Epoch %d, cost = %f, acc = %.2f%%" % (i + 1, cost / num_batches, 100. * np.mean(predY == teY)))最后打印当前训练的轮次,损失值和acc,上述的代码输入如下(执行时间比逻辑回归要长,但是准确率要高很多):

第91个时期,费用= 0.054484,准确率= 97.58%第92个时期,费用= 0.053753,准确率= 97.56%第93个时期,费用= 0.053036,准确率= 97.60%第94个时期,费用= 0.052332,准确率= 97.61%第95个时期,费用= 0.051641,准确率= 97.63%第96个时期,费用= 0.050964,准确率= 97.66%第97个时期,费用= 0.050298,准确率= 97.66%第98个时期,费用= 0.049645,准确率= 97.67%第99个时期,费用= 0.049003,准确率= 97.67%第100个时期,费用= 0.048373,准确率= 97.68%

The above is the detailed content of Machine Learning | PyTorch Concise Tutorial Part 1. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1387

1387

52

52

This article will take you to understand SHAP: model explanation for machine learning

Jun 01, 2024 am 10:58 AM

This article will take you to understand SHAP: model explanation for machine learning

Jun 01, 2024 am 10:58 AM

In the fields of machine learning and data science, model interpretability has always been a focus of researchers and practitioners. With the widespread application of complex models such as deep learning and ensemble methods, understanding the model's decision-making process has become particularly important. Explainable AI|XAI helps build trust and confidence in machine learning models by increasing the transparency of the model. Improving model transparency can be achieved through methods such as the widespread use of multiple complex models, as well as the decision-making processes used to explain the models. These methods include feature importance analysis, model prediction interval estimation, local interpretability algorithms, etc. Feature importance analysis can explain the decision-making process of a model by evaluating the degree of influence of the model on the input features. Model prediction interval estimate

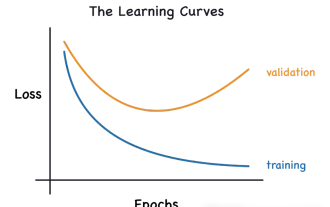

Identify overfitting and underfitting through learning curves

Apr 29, 2024 pm 06:50 PM

Identify overfitting and underfitting through learning curves

Apr 29, 2024 pm 06:50 PM

This article will introduce how to effectively identify overfitting and underfitting in machine learning models through learning curves. Underfitting and overfitting 1. Overfitting If a model is overtrained on the data so that it learns noise from it, then the model is said to be overfitting. An overfitted model learns every example so perfectly that it will misclassify an unseen/new example. For an overfitted model, we will get a perfect/near-perfect training set score and a terrible validation set/test score. Slightly modified: "Cause of overfitting: Use a complex model to solve a simple problem and extract noise from the data. Because a small data set as a training set may not represent the correct representation of all data." 2. Underfitting Heru

Transparent! An in-depth analysis of the principles of major machine learning models!

Apr 12, 2024 pm 05:55 PM

Transparent! An in-depth analysis of the principles of major machine learning models!

Apr 12, 2024 pm 05:55 PM

In layman’s terms, a machine learning model is a mathematical function that maps input data to a predicted output. More specifically, a machine learning model is a mathematical function that adjusts model parameters by learning from training data to minimize the error between the predicted output and the true label. There are many models in machine learning, such as logistic regression models, decision tree models, support vector machine models, etc. Each model has its applicable data types and problem types. At the same time, there are many commonalities between different models, or there is a hidden path for model evolution. Taking the connectionist perceptron as an example, by increasing the number of hidden layers of the perceptron, we can transform it into a deep neural network. If a kernel function is added to the perceptron, it can be converted into an SVM. this one

The evolution of artificial intelligence in space exploration and human settlement engineering

Apr 29, 2024 pm 03:25 PM

The evolution of artificial intelligence in space exploration and human settlement engineering

Apr 29, 2024 pm 03:25 PM

In the 1950s, artificial intelligence (AI) was born. That's when researchers discovered that machines could perform human-like tasks, such as thinking. Later, in the 1960s, the U.S. Department of Defense funded artificial intelligence and established laboratories for further development. Researchers are finding applications for artificial intelligence in many areas, such as space exploration and survival in extreme environments. Space exploration is the study of the universe, which covers the entire universe beyond the earth. Space is classified as an extreme environment because its conditions are different from those on Earth. To survive in space, many factors must be considered and precautions must be taken. Scientists and researchers believe that exploring space and understanding the current state of everything can help understand how the universe works and prepare for potential environmental crises

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Common challenges faced by machine learning algorithms in C++ include memory management, multi-threading, performance optimization, and maintainability. Solutions include using smart pointers, modern threading libraries, SIMD instructions and third-party libraries, as well as following coding style guidelines and using automation tools. Practical cases show how to use the Eigen library to implement linear regression algorithms, effectively manage memory and use high-performance matrix operations.

Five schools of machine learning you don't know about

Jun 05, 2024 pm 08:51 PM

Five schools of machine learning you don't know about

Jun 05, 2024 pm 08:51 PM

Machine learning is an important branch of artificial intelligence that gives computers the ability to learn from data and improve their capabilities without being explicitly programmed. Machine learning has a wide range of applications in various fields, from image recognition and natural language processing to recommendation systems and fraud detection, and it is changing the way we live. There are many different methods and theories in the field of machine learning, among which the five most influential methods are called the "Five Schools of Machine Learning". The five major schools are the symbolic school, the connectionist school, the evolutionary school, the Bayesian school and the analogy school. 1. Symbolism, also known as symbolism, emphasizes the use of symbols for logical reasoning and expression of knowledge. This school of thought believes that learning is a process of reverse deduction, through existing

Explainable AI: Explaining complex AI/ML models

Jun 03, 2024 pm 10:08 PM

Explainable AI: Explaining complex AI/ML models

Jun 03, 2024 pm 10:08 PM

Translator | Reviewed by Li Rui | Chonglou Artificial intelligence (AI) and machine learning (ML) models are becoming increasingly complex today, and the output produced by these models is a black box – unable to be explained to stakeholders. Explainable AI (XAI) aims to solve this problem by enabling stakeholders to understand how these models work, ensuring they understand how these models actually make decisions, and ensuring transparency in AI systems, Trust and accountability to address this issue. This article explores various explainable artificial intelligence (XAI) techniques to illustrate their underlying principles. Several reasons why explainable AI is crucial Trust and transparency: For AI systems to be widely accepted and trusted, users need to understand how decisions are made

Is Flash Attention stable? Meta and Harvard found that their model weight deviations fluctuated by orders of magnitude

May 30, 2024 pm 01:24 PM

Is Flash Attention stable? Meta and Harvard found that their model weight deviations fluctuated by orders of magnitude

May 30, 2024 pm 01:24 PM

MetaFAIR teamed up with Harvard to provide a new research framework for optimizing the data bias generated when large-scale machine learning is performed. It is known that the training of large language models often takes months and uses hundreds or even thousands of GPUs. Taking the LLaMA270B model as an example, its training requires a total of 1,720,320 GPU hours. Training large models presents unique systemic challenges due to the scale and complexity of these workloads. Recently, many institutions have reported instability in the training process when training SOTA generative AI models. They usually appear in the form of loss spikes. For example, Google's PaLM model experienced up to 20 loss spikes during the training process. Numerical bias is the root cause of this training inaccuracy,