GPT-4 serves as a 'world model', allowing LLMs to learn from their mistakes and significantly improve their reasoning ability

Recently, large language models (LLMs) have made significant progress on a wide range of natural language processing tasks, especially mathematical problems that require complex chain-of-thought (CoT) reasoning.

For example, on difficult mathematical benchmarks such as GSM8K and MATH, proprietary models including GPT-4 and PaLM-2 have achieved remarkable results, while open-source large models still have considerable room for improvement. To further improve the CoT reasoning of open-source models on mathematical tasks, a common approach is to fine-tune them on annotated or generated question-rationale pairs (CoT data), which directly teach the model how to perform CoT reasoning on these tasks.

Recently, researchers from Xi'an Jiaotong University, Microsoft and Peking University explored a complementary idea in a paper: further improving an LLM's reasoning ability through a reverse learning process, that is, by learning from the mistakes LLMs make.

Consider a student who is starting to learn mathematics. The student first builds understanding by studying the knowledge points and worked examples in the textbook, and also does exercises to consolidate what has been learned. On getting stuck or failing to solve a problem, the student works out what went wrong and how to correct it, gradually building up a "wrong-problem notebook". Learning from these mistakes further improves the student's reasoning ability.

Inspired by this process, the work explores how an LLM's reasoning ability can benefit from understanding and correcting mistakes.

Paper address: https://arxiv.org/pdf/2310.20689.pdf

Specifically, the researchers first generated error-correction data pairs (called correction data) and then fine-tuned the LLM on them. To generate the correction data, they used multiple LLMs (including LLaMA and the GPT family of models) to collect inaccurate reasoning paths (i.e., paths whose final answer was incorrect), and then used GPT-4 as the "corrector" to generate corrections for these inaccurate reasoning paths.

Each generated correction contains three pieces of information: (1) the incorrect step in the original solution; (2) an explanation of why that step is incorrect; (3) how to revise the original solution to arrive at the correct final answer. After corrections with incorrect final answers were filtered out, a manual evaluation showed that the correction data was of sufficient quality for the subsequent fine-tuning stage. The researchers then used QLoRA to fine-tune the LLM on both the CoT data and the correction data, thereby performing "Learning from Mistakes" (LEMA).

Research shows that current LLMs can solve problems step by step, but this multi-step generation process does not by itself mean that LLMs have strong reasoning capabilities: they may only imitate the surface behavior of human reasoning without truly understanding the underlying logic and rules it requires.

This lack of understanding leads to errors in the reasoning process, so the help of a "world model" is needed, one that has prior knowledge of the logic and rules of the real world. From this perspective, the LEMA framework can be seen as using GPT-4 as a "world model" that teaches smaller models to follow this logic and these rules, rather than merely imitating step-by-step behavior.

Now, let's take a look at how the study implements this.

Method Overview

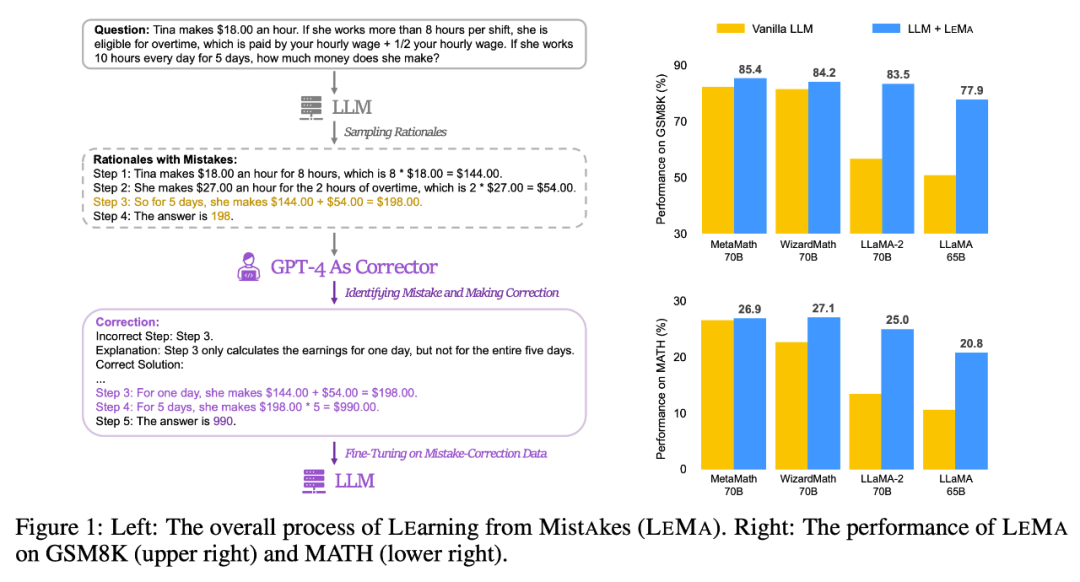

Figure 1 (left) shows the overall LEMA process, which has two main stages: generating correction data and fine-tuning the LLM. Figure 1 (right) shows the performance of LEMA on the GSM8K and MATH datasets.

Generating correction data

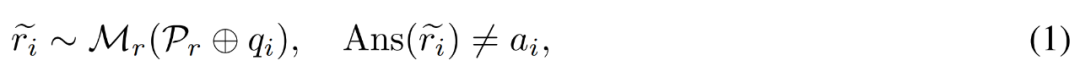

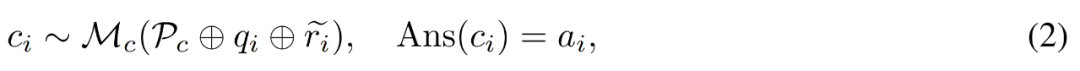

Given a question-answer example (q_i, a_i), a corrector model M_c and a reasoning model M_r, the researchers generate error-correction data pairs (q_i, r̃_i, c_i), where r̃_i denotes an inaccurate reasoning path for question q_i and c_i denotes the correction to r̃_i.

Collecting inaccurate reasoning paths. The researchers first use the reasoning model M_r to sample multiple reasoning paths for each question q_i, and then keep only the paths that do not reach the correct answer a_i; this is Equation (1) in the paper.
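The sample-then-filter step can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `toy_sampler` is a hypothetical stand-in for the reasoning model M_r (no real LLM is called), and `extract_answer` uses a simplified "last number in the text" rule that the paper does not necessarily use.

```python
import re
from typing import Callable, List, Optional


def extract_answer(path: str) -> Optional[str]:
    """Return the last number mentioned in a reasoning path, or None.

    Simplifying assumption: the final answer is the last number in the text.
    """
    numbers = re.findall(r"\d+(?:\.\d+)?", path.replace(",", ""))
    return numbers[-1] if numbers else None


def collect_inaccurate_paths(
    question: str,
    gold_answer: str,
    sample_paths: Callable[[str, int], List[str]],
    num_samples: int = 4,
) -> List[str]:
    """Sample several paths and keep only those whose final answer is wrong."""
    paths = sample_paths(question, num_samples)
    return [p for p in paths if extract_answer(p) != gold_answer]


def toy_sampler(question: str, k: int) -> List[str]:
    """Hypothetical stand-in for M_r: returns canned paths instead of LLM samples."""
    canned = [
        "3 apples plus 4 apples is 7 apples. The answer is 7",
        "3 times 4 is 12. The answer is 12",
        "3 + 4 = 8. The answer is 8",
        "Adding 3 and 4 gives 7. The answer is 7",
    ]
    return canned[:k]


question = "Tom has 3 apples and buys 4 more. How many apples does he have?"
wrong_paths = collect_inaccurate_paths(question, "7", toy_sampler)
for path in wrong_paths:
    print(path)
```

Answers are compared as strings here, so a real pipeline would need to normalize formats such as "7" versus "7.0" before filtering.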

Generating corrections for mistakes. For a question q_i and an inaccurate reasoning path r̃_i, the researchers use the corrector model M_c to generate a correction, and then check that the final answer in the correction is correct; this is Equation (2) in the paper.
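A minimal sketch of this generate-then-verify step follows. The prompt layout, the "The answer is" marker, and `toy_corrector` (a hypothetical stand-in for the corrector model M_c) are all assumptions made for illustration and are not taken from the paper.

```python
from typing import Callable, Optional


def build_corrector_prompt(few_shot_prompt: str, question: str, wrong_path: str) -> str:
    """Concatenate the few-shot prompt, the question and the wrong solution."""
    return (
        f"{few_shot_prompt}\n\n"
        f"Question: {question}\n"
        f"Original solution: {wrong_path}\n"
        f"Correction:"
    )


def final_answer(text: str) -> Optional[str]:
    """Extract what follows the last 'The answer is' marker (assumed format)."""
    marker = "The answer is"
    if marker not in text:
        return None
    return text.rsplit(marker, 1)[1].strip().rstrip(".")


def generate_verified_correction(
    question: str,
    gold_answer: str,
    wrong_path: str,
    corrector: Callable[[str], str],
    few_shot_prompt: str,
) -> Optional[str]:
    """Ask the corrector for a correction; keep it only if its answer is right."""
    prompt = build_corrector_prompt(few_shot_prompt, question, wrong_path)
    correction = corrector(prompt)
    return correction if final_answer(correction) == gold_answer else None


def toy_corrector(prompt: str) -> str:
    """Hypothetical stand-in for M_c: always returns the same canned correction."""
    return (
        "Incorrect step: Step 1.\n"
        "Explanation: The problem asks for a sum, not a product.\n"
        "Correct solution: 3 + 4 = 7. The answer is 7."
    )


question = "Tom has 3 apples and buys 4 more. How many apples does he have?"
wrong_path = "3 times 4 is 12. The answer is 12"
kept = generate_verified_correction(question, "7", wrong_path, toy_corrector, "<examples>")
rejected = generate_verified_correction(question, "8", wrong_path, toy_corrector, "<examples>")
print(kept is not None, rejected is None)
```

The check only validates the final answer, which matches the filtering described in the article; it does not by itself confirm that the explanation inside the correction is sound.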

The prompt P_c contains four annotated error-correction examples that show the corrector model which types of information to include in the corrections it generates.

Specifically, each annotated correction includes the following three types of information:

- Incorrect step: which step in the original reasoning path went wrong.

- Explanation: what kind of mistake was made in that step.

- Correct solution: how to correct the inaccurate reasoning path to better solve the original problem.
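To make the three-part structure concrete, the sketch below models a correction as a small record and checks that a piece of text contains all three parts. The field labels and the line-based text layout are assumptions for illustration; the paper's actual prompt format may differ.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Assumed labels for the three parts of a correction (hypothetical layout).
FIELDS = ("Incorrect step", "Explanation", "Correct solution")


@dataclass(frozen=True)
class Correction:
    incorrect_step: str
    explanation: str
    correct_solution: str

    def render(self) -> str:
        """Serialize the correction as one labeled line per field."""
        return (
            f"{FIELDS[0]}: {self.incorrect_step}\n"
            f"{FIELDS[1]}: {self.explanation}\n"
            f"{FIELDS[2]}: {self.correct_solution}"
        )


def parse_correction(text: str) -> Optional[Correction]:
    """Parse labeled text back into a Correction; None if a field is missing."""
    values: Dict[str, str] = {}
    current: Optional[str] = None
    for line in text.splitlines():
        for name in FIELDS:
            if line.startswith(name + ":"):
                current = name
                values[name] = line[len(name) + 1:].strip()
                break
        else:
            # Continuation line: append it to the field currently being read.
            if current is not None and line.strip():
                values[current] += " " + line.strip()
    if set(values) != set(FIELDS):
        return None
    return Correction(values[FIELDS[0]], values[FIELDS[1]], values[FIELDS[2]])


example = Correction(
    incorrect_step="Step 2",
    explanation="The two quantities were multiplied instead of added.",
    correct_solution="3 + 4 = 7. The answer is 7.",
)
round_trip = parse_correction(example.render())
incomplete = parse_correction("Explanation: only one field is present")
print(round_trip == example, incomplete is None)
```

A structural check like this could be used to discard corrector outputs that omit one of the three parts before the answer-based filtering is applied.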

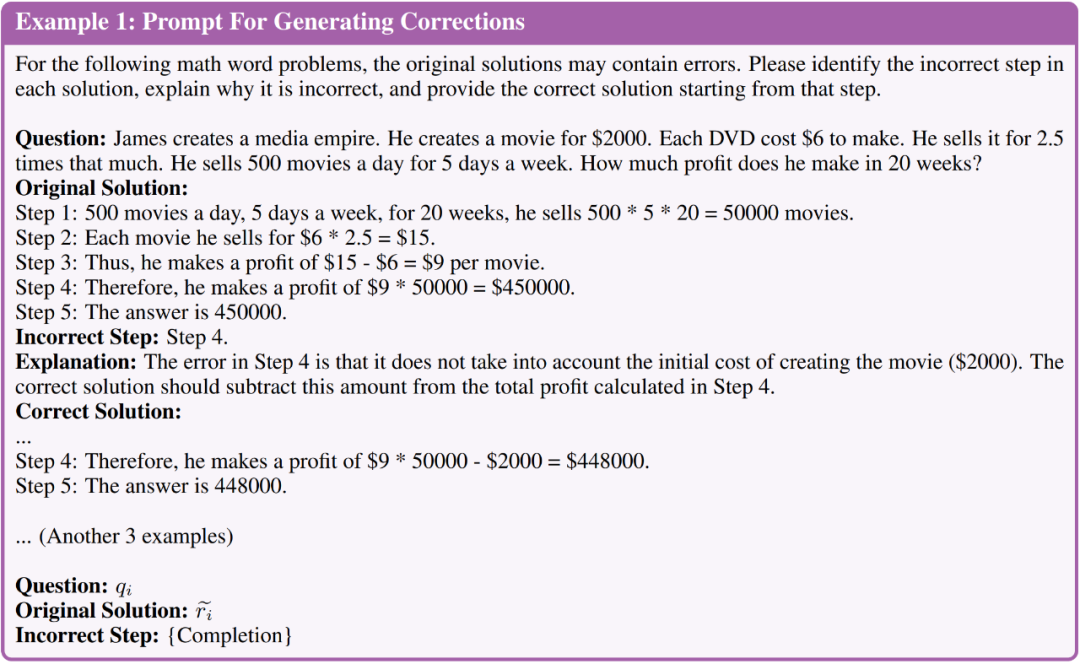

The accompanying figure briefly shows the prompt used to generate corrections.

Human evaluation of generated corrections. Before generating data at a larger scale, the researchers first manually evaluated the quality of the generated corrections. They used LLaMA-2-70B as M_r and GPT-4 as M_c, and generated 50 error-correction data pairs from the GSM8K training set.

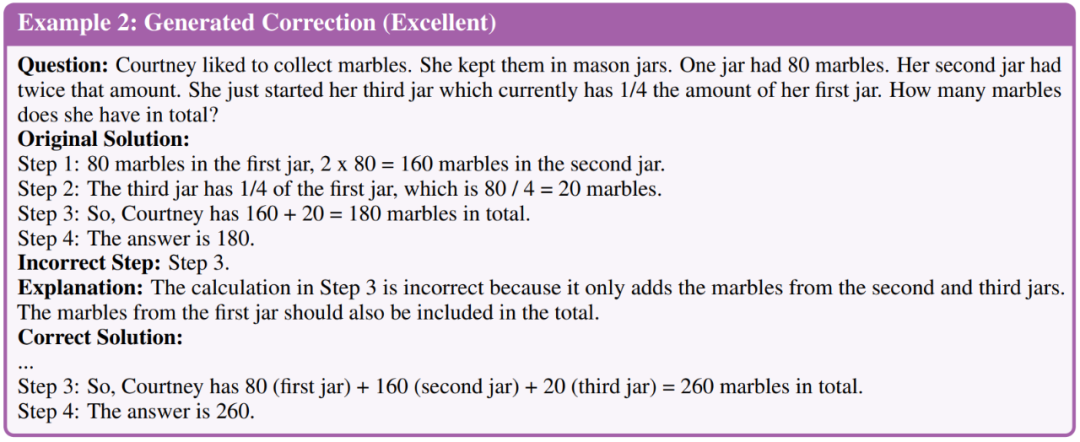

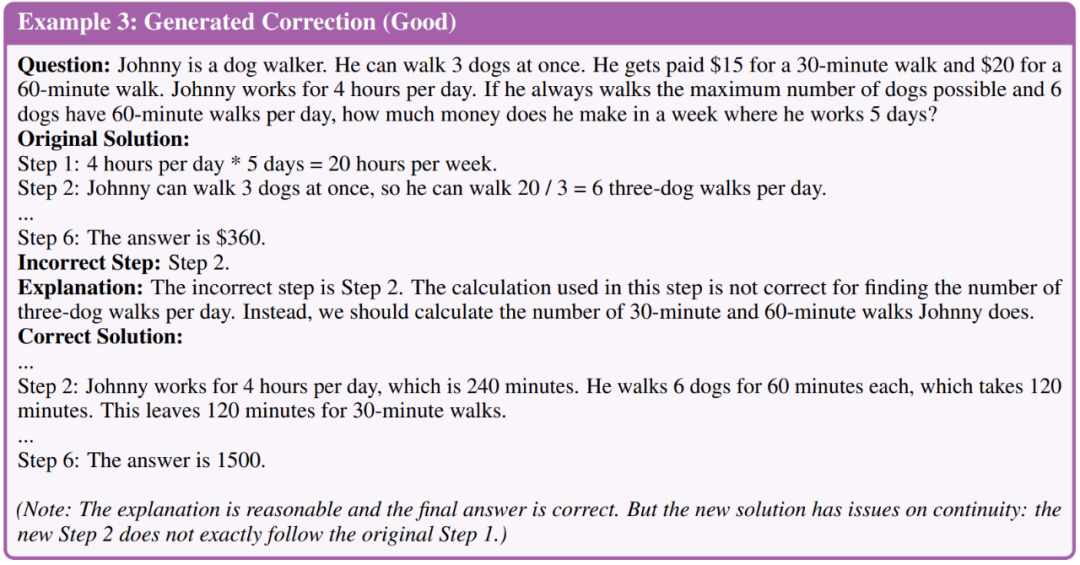

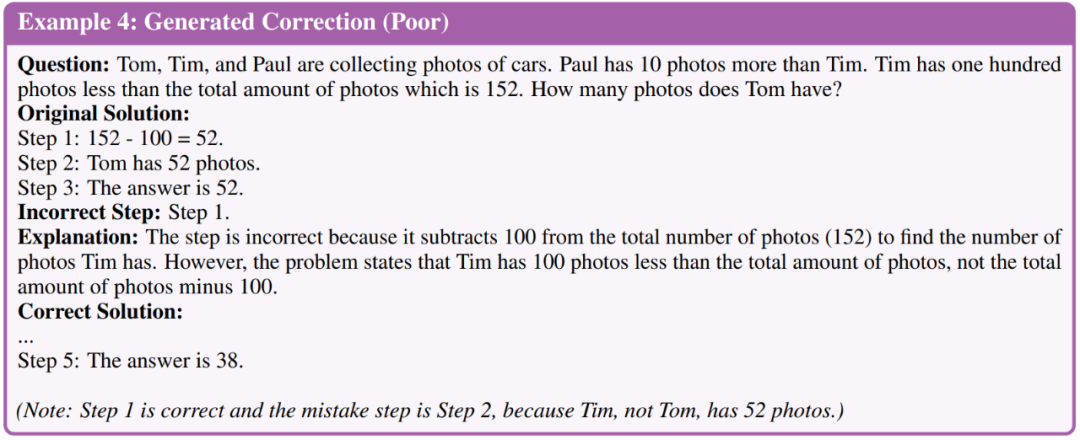

The researchers classified the corrections into three quality levels: excellent, good and poor. The accompanying figures show an example of each level.

The evaluation found that, of the 50 generated corrections, 35 were of excellent quality, 11 were good, and 4 were poor. Based on this evaluation, the researchers concluded that the overall quality of corrections generated with GPT-4 was sufficient for the fine-tuning stage. They therefore generated corrections at a larger scale and used all corrections that ultimately reached the correct answer to fine-tune the LLM.
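As a quick check on these counts, the shares of each quality level can be computed directly. Grouping "excellent" and "good" together as "usable" is an interpretation added here, not a category from the paper.

```python
# Counts reported for the 50 manually evaluated corrections.
counts = {"excellent": 35, "good": 11, "poor": 4}

total = sum(counts.values())
shares = {level: 100 * n / total for level, n in counts.items()}

# Assumption: "usable" means excellent or good (a grouping introduced here).
usable_share = shares["excellent"] + shares["good"]

for level, share in shares.items():
    print(f"{level}: {share:.0f}%")
print(f"excellent or good: {usable_share:.0f}%")
```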

Fine-tuning the LLM

After generating the correction data, the researchers fine-tuned LLMs to evaluate whether the models could learn from mistakes. They mainly compared performance under the following two fine-tuning settings.

The first is fine-tuning on chain-of-thought (CoT) data alone, in which the model is fine-tuned only on question-rationale data. Although each task already has annotated data, the researchers additionally applied CoT data augmentation: they used GPT-4 to generate more reasoning paths for each question in the training set and filtered out paths with incorrect final answers. This augmentation builds a strong fine-tuning baseline that uses only CoT data, and it makes possible ablation studies that control the size of the fine-tuning data.
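The augmentation filter is the mirror image of mistake collection: here only paths with the correct final answer are kept. The sketch below assumes candidate paths end with a "The answer is X" sentence and also drops duplicates; both the format and the deduplication are assumptions added for illustration.

```python
from typing import Dict, List


def augment_cot(
    question: str, gold_answer: str, candidate_paths: List[str]
) -> List[Dict[str, str]]:
    """Keep distinct candidate paths whose final answer matches the gold answer."""
    seen = set()
    kept = []
    for path in candidate_paths:
        # Collapse whitespace and drop a trailing period before comparing.
        normalized = " ".join(path.split()).rstrip(".")
        if normalized in seen:
            continue
        seen.add(normalized)
        if normalized.endswith(f"The answer is {gold_answer}"):
            kept.append({"question": question, "rationale": normalized})
    return kept


# Hypothetical generated paths standing in for GPT-4 outputs.
candidates = [
    "3 + 4 = 7. The answer is 7.",
    "3 + 4  = 7. The answer is 7.",  # duplicate after whitespace normalization
    "3 * 4 = 12. The answer is 12.",  # wrong final answer, filtered out
    "Start with 3, add 4 to get 7. The answer is 7",
]
augmented = augment_cot("Tom has 3 apples and buys 4 more. How many?", "7", candidates)
print(len(augmented))
```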

The second is fine-tuning on CoT data plus correction data. In addition to the CoT data, the researchers also used the generated error-correction data for fine-tuning (i.e., LEMA). They likewise ran ablation experiments with controlled data size, to rule out the possibility that the gains come simply from having more data.
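One way to picture the size-controlled comparison is to build both training sets under the same example budget. In the sketch below, the 50/50 split (`correction_share`) and the example pools are hypothetical; the article does not state the mixing ratio the researchers used.

```python
import random
from typing import Dict, List, Tuple

Example = Dict[str, str]


def build_training_sets(
    cot: List[Example],
    corrections: List[Example],
    budget: int,
    correction_share: float = 0.5,  # assumed ratio, not taken from the paper
    seed: int = 0,
) -> Tuple[List[Example], List[Example]]:
    """Return (cot_only, cot_plus_corrections), each with exactly `budget` items."""
    n_corr = int(budget * correction_share)
    n_cot = budget - n_corr
    if budget > len(cot) or n_corr > len(corrections):
        raise ValueError("not enough examples for the requested budget")
    rng = random.Random(seed)
    cot_only = rng.sample(cot, budget)
    mixed = rng.sample(cot, n_cot) + rng.sample(corrections, n_corr)
    rng.shuffle(mixed)
    return cot_only, mixed


# Hypothetical pools of examples, tagged by data source.
cot_pool = [{"type": "cot", "id": str(i)} for i in range(20)]
correction_pool = [{"type": "correction", "id": str(i)} for i in range(8)]

cot_only, cot_plus_corrections = build_training_sets(cot_pool, correction_pool, budget=10)
print(len(cot_only), len(cot_plus_corrections))
```

Because both sets contain the same number of examples, any difference in downstream accuracy can be attributed to the data source rather than to data volume.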

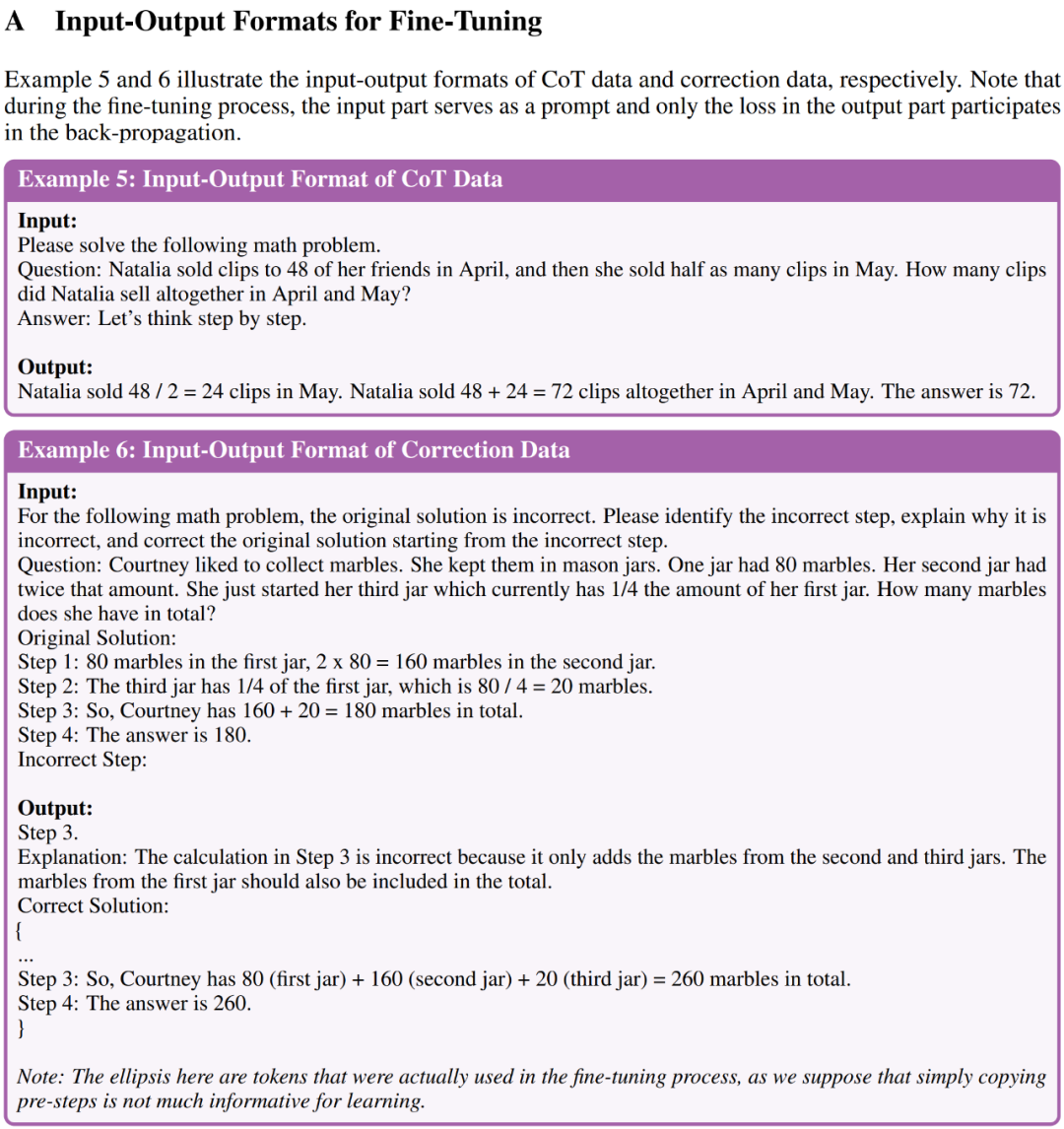

Example 5 and Example 6 in Appendix A of the paper show the input-output formats of the CoT data and the correction data used for fine-tuning, respectively.

Experimental results

The experimental results demonstrate the effectiveness of LEMA on five open-source LLMs and two challenging mathematical reasoning tasks.

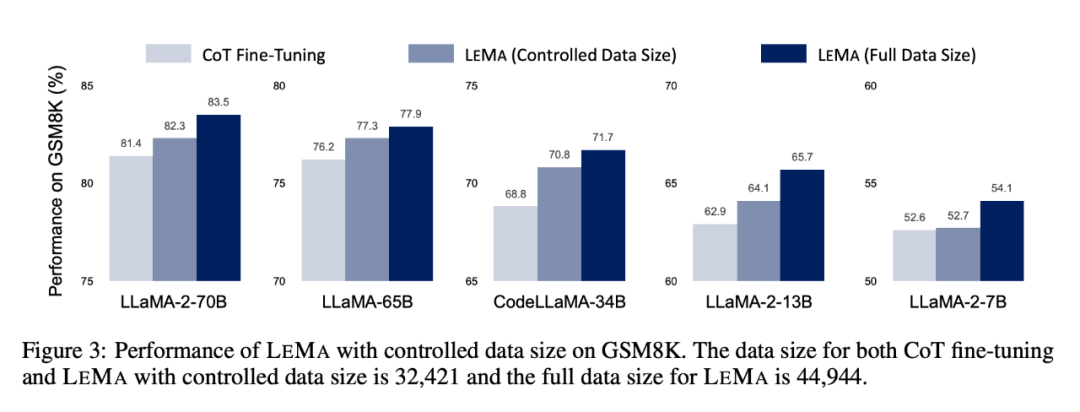

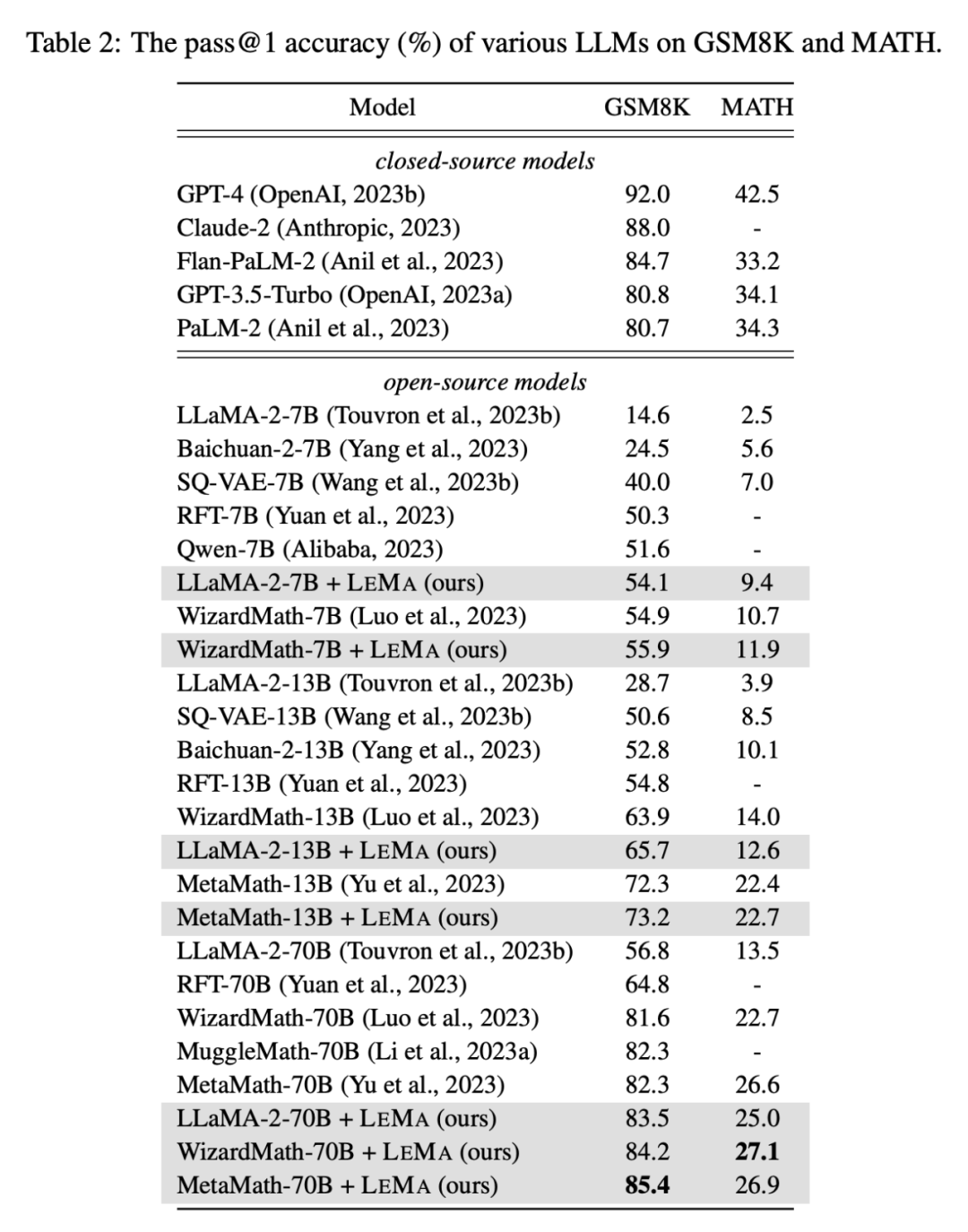

LEMA consistently improves performance across a variety of LLMs and tasks compared with fine-tuning on CoT data alone. For example, LEMA with LLaMA-2-70B achieved 83.5% on GSM8K and 25.0% on MATH, while fine-tuning on CoT data alone achieved 81.4% and 23.6%, respectively.
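The size of these gains follows directly from the reported LLaMA-2-70B numbers; the short calculation below uses only the figures quoted above.

```python
# Reported LLaMA-2-70B accuracies (%), as quoted in the article.
cot_only = {"GSM8K": 81.4, "MATH": 23.6}
lema = {"GSM8K": 83.5, "MATH": 25.0}

# Absolute gain in percentage points, rounded to one decimal place.
gains = {task: round(lema[task] - cot_only[task], 1) for task in cot_only}

for task, gain in gains.items():
    print(f"{task}: +{gain} points")
```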

Additionally, LEMA is compatible with specialized LLMs: LEMA with WizardMath-70B/MetaMath-70B achieves 84.2%/85.4% pass@1 accuracy on GSM8K and 27.1%/26.9% pass@1 accuracy on MATH, exceeding the results reported by many open-source models on these challenging tasks.

Subsequent ablation studies show that LEMA still outperforms fine-tuning on CoT data alone when the amount of data is the same. This suggests that CoT data and correction data contribute differently, since combining the two data sources yields more improvement than using a single source. These experimental results and analyses highlight the potential of learning from mistakes to enhance LLM reasoning capabilities.

For more research details, please see the original paper.
