Summary of real-time data analysis and prediction experience based on MongoDB

Introduction:

With the rapid development of information technology, data analysis and prediction have become key factors in corporate decision-making and growth. As a non-relational database, MongoDB is well suited to real-time data analysis and prediction. This article summarizes experience with real-time data analysis and prediction based on MongoDB and offers some practical guidance.

1. Introduction to MongoDB

MongoDB is a source-available document database (the server has been licensed under the SSPL since 2018) that stores data in BSON (Binary JSON), a JSON-like format. Compared with traditional relational databases, it trades a fixed schema for a flexible document model and is designed to scale horizontally. It supports ad hoc queries, indexing, aggregation, and distribution of data across sharded clusters, which makes it a good fit for real-time data analysis and prediction.
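To make the flexible document model concrete, here is a minimal sketch in Python. The two events and all of their field names (`device_id`, `reading`, `user_id`, and so on) are hypothetical and are not taken from any real system. The point is only that documents with different shapes can sit side by side and still share a few common fields to query on.

```python
from datetime import datetime, timezone

# Hypothetical events from two different sources; every field name here is
# illustrative only.
events = [
    {
        "source": "sensor",
        "ts": datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc),
        "device_id": "th-17",
        "reading": {"temp_c": 21.4, "humidity": 0.43},  # nested sub-document
    },
    {
        "source": "web",
        "ts": datetime(2024, 5, 1, 12, 0, 5, tzinfo=timezone.utc),
        "user_id": 42,
        "action": "click",
        "tags": ["promo", "mobile"],  # array field
    },
]


def shared_fields(docs):
    """Return the top-level field names that every document has."""
    key_sets = [set(doc) for doc in docs]
    return set.intersection(*key_sets) if key_sets else set()


print(sorted(shared_fields(events)))  # prints ['source', 'ts']
```

With the PyMongo driver, a list like this could be stored as-is with `collection.insert_many(events)`, assuming a reachable MongoDB server. The driver handles the conversion to BSON.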

2. Challenges of real-time data analysis and prediction

Real-time data analysis and prediction face some challenges. First of all, the amount of data is huge and the real-time requirements are high. Therefore, the system needs to have the ability to process large-scale data and provide accurate analysis and prediction results in a short time. Secondly, data sources are diverse and have complex structures. Data may come from multiple channels and have different formats and structures, which requires the system to have good data integration and cleaning capabilities. Finally, the results need to be displayed in real time and support multiple forms of visualization. This places higher requirements on system response speed and user experience.

3. Real-time data analysis process based on MongoDB

The real-time data analysis process based on MongoDB mainly includes data collection and transmission, data integration and cleaning, data analysis and prediction, and result display.

- Data collection and transmission: Data can be collected from many sources, such as application logs, sensor readings, and social media feeds. MongoDB's import tools (for example, mongoimport) and language drivers make loading this data straightforward.

- Data integration and cleaning: MongoDB’s flexibility makes it possible to process diverse data. Data from different sources and formats can be integrated into MongoDB by using data integration tools, ETL tools, or programming languages. At the same time, data can be cleaned and processed to ensure data quality and accuracy.

- Data analysis and prediction: MongoDB provides rich query and aggregation functions to support real-time data analysis. You can use MongoDB's query language and aggregation pipeline for on-the-fly analysis. Map-reduce is also available for custom calculations, but it has been deprecated since MongoDB 5.0 in favor of the aggregation pipeline. In addition, the results can be combined with machine learning algorithms for further prediction and modeling.

- Result display: Analysis results can be visualized with MongoDB's own tools, such as MongoDB Charts, or with third-party tools. This lets users observe and understand the results intuitively and make decisions accordingly.
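The cleaning and analysis steps above can be sketched in Python as follows. This is an illustration under stated assumptions, not a reference implementation. The event fields (`source`, `ts`, `value`) and the helper names `clean_event` and `per_minute_pipeline` are hypothetical. The pipeline uses the `$dateTrunc` operator, which requires MongoDB 5.0 or later.

```python
from datetime import datetime, timezone


def clean_event(raw):
    """Normalize one raw record, or return None if it is unusable.

    Assumes hypothetical fields: 'source', 'ts' (ISO 8601 string), 'value'.
    """
    try:
        value = float(raw["value"])
        ts = datetime.fromisoformat(raw["ts"])
    except (KeyError, TypeError, ValueError):
        return None  # drop records with missing or malformed fields
    if ts.tzinfo is None:
        ts = ts.replace(tzinfo=timezone.utc)  # assumption: naive times are UTC
    source = str(raw.get("source", "unknown")).strip().lower()
    return {"source": source, "ts": ts, "value": value}


def per_minute_pipeline(since):
    """Build an aggregation pipeline: per-source, per-minute count and mean."""
    return [
        {"$match": {"ts": {"$gte": since}}},
        {"$group": {
            "_id": {
                "source": "$source",
                "minute": {"$dateTrunc": {"date": "$ts", "unit": "minute"}},
            },
            "count": {"$sum": 1},
            "avg_value": {"$avg": "$value"},
        }},
        {"$sort": {"_id.minute": 1}},
    ]


raw_records = [
    {"source": " Web ", "ts": "2024-05-01T12:00:30", "value": "3.5"},
    {"source": "web", "ts": "not a date", "value": "1"},  # will be dropped
]
cleaned = [doc for doc in map(clean_event, raw_records) if doc is not None]
pipeline = per_minute_pipeline(datetime(2024, 5, 1, tzinfo=timezone.utc))

# With PyMongo and a running server (both assumed), the next steps would be:
#   events.insert_many(cleaned)
#   for row in events.aggregate(pipeline): ...
```

Placing `$match` first lets the server use an index on `ts`, if one exists, before grouping. This matters when the collection is large and only a recent window is of interest.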

4. Advantages and applications of real-time data analysis and prediction based on MongoDB

- Advantages:

(1) Efficient data storage and processing: MongoDB scales horizontally through sharding, which lets it handle massive data volumes and highly concurrent requests.

(2) Flexible data model: MongoDB’s document data model is suitable for different types and structures of data, and can meet the needs of real-time data analysis and prediction.

(3) Rich query and aggregation support: MongoDB provides a powerful query language and aggregation pipeline to meet complex analysis needs.

- Applications:

(1) Real-time log analysis: Using MongoDB’s fast insertion and query performance, large-scale log data can be analyzed in real time to discover potential problems or abnormal conditions.

(2) User behavior analysis: By collecting user behavior data and combining it with the aggregation and calculation functions of MongoDB, the user's preferences and needs can be understood, and corresponding responses and recommendations can be made.

(3) Prediction and modeling: Data stored in MongoDB can be fed to machine learning and data mining algorithms for prediction and modeling, supporting more accurate forecasts and decisions.
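As a rough illustration of the prediction use case, the sketch below fits a straight-line trend by ordinary least squares and extrapolates one step ahead. This baseline is a stand-in chosen for brevity. The article does not prescribe a model, and a real system would likely use something more capable. The input rows are assumed to look like the output of a per-minute `$group` aggregation with a hypothetical `count` field, already sorted by time.

```python
def fit_line(ys):
    """Least-squares line through the points (0, ys[0]), (1, ys[1]), ..."""
    n = len(ys)
    if n < 2:
        raise ValueError("need at least two points to fit a trend")
    x_mean = (n - 1) / 2
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(ys))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return slope, intercept


def forecast_next(rows, field="count"):
    """Predict the value of `field` for the interval after the last row."""
    ys = [row[field] for row in rows]
    slope, intercept = fit_line(ys)
    return slope * len(ys) + intercept


# Hypothetical aggregation output, one row per minute in time order.
rows = [{"count": 10}, {"count": 12}, {"count": 14}, {"count": 16}]
print(forecast_next(rows))  # prints 18.0
```

Comparing each new observation against such a forecast is one simple way to flag unusual spikes in log volume, although the threshold would need tuning for real data.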

Conclusion:

Real-time data analysis and prediction based on MongoDB can help enterprises quickly obtain useful information, optimize decisions, and improve efficiency and competitiveness. However, in practical applications, it is also necessary to pay attention to issues such as data security and privacy protection, and to flexibly choose appropriate tools and technologies based on actual needs. In short, MongoDB provides a new choice for real-time data analysis and prediction, with broad application prospects.

The above is the detailed content of Summary of real-time data analysis and prediction experience based on MongoDB. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1378

1378

52

52

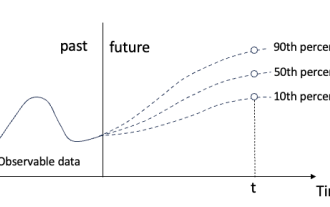

Quantile regression for time series probabilistic forecasting

May 07, 2024 pm 05:04 PM

Quantile regression for time series probabilistic forecasting

May 07, 2024 pm 05:04 PM

Do not change the meaning of the original content, fine-tune the content, rewrite the content, and do not continue. "Quantile regression meets this need, providing prediction intervals with quantified chances. It is a statistical technique used to model the relationship between a predictor variable and a response variable, especially when the conditional distribution of the response variable is of interest When. Unlike traditional regression methods, quantile regression focuses on estimating the conditional magnitude of the response variable rather than the conditional mean. "Figure (A): Quantile regression Quantile regression is an estimate. A modeling method for the linear relationship between a set of regressors X and the quantiles of the explained variables Y. The existing regression model is actually a method to study the relationship between the explained variable and the explanatory variable. They focus on the relationship between explanatory variables and explained variables

What is the use of net4.0

May 10, 2024 am 01:09 AM

What is the use of net4.0

May 10, 2024 am 01:09 AM

.NET 4.0 is used to create a variety of applications and it provides application developers with rich features including: object-oriented programming, flexibility, powerful architecture, cloud computing integration, performance optimization, extensive libraries, security, Scalability, data access, and mobile development support.

How to use C++ for time series analysis and forecasting?

Jun 02, 2024 am 09:37 AM

How to use C++ for time series analysis and forecasting?

Jun 02, 2024 am 09:37 AM

Time series analysis and forecasting using C++ involves the following steps: Installing the necessary libraries Preprocessing Data Extracting features (ACF, CCF, SDF) Fitting models (ARIMA, SARIMA, exponential smoothing) Forecasting future values

How does Golang promote innovation in data analysis?

May 09, 2024 am 08:09 AM

How does Golang promote innovation in data analysis?

May 09, 2024 am 08:09 AM

Go language empowers data analysis innovation with its concurrent processing, low latency and powerful standard library. Through concurrent processing, the Go language can perform multiple analysis tasks at the same time, significantly improving performance. Its low-latency nature enables analytics applications to process data in real-time, enabling rapid response and insights. In addition, the Go language's rich standard library provides libraries for data processing, concurrency control, and network connections, making it easier for analysts to build robust and scalable analysis applications.

How to configure MongoDB automatic expansion on Debian

Apr 02, 2025 am 07:36 AM

How to configure MongoDB automatic expansion on Debian

Apr 02, 2025 am 07:36 AM

This article introduces how to configure MongoDB on Debian system to achieve automatic expansion. The main steps include setting up the MongoDB replica set and disk space monitoring. 1. MongoDB installation First, make sure that MongoDB is installed on the Debian system. Install using the following command: sudoaptupdatesudoaptinstall-ymongodb-org 2. Configuring MongoDB replica set MongoDB replica set ensures high availability and data redundancy, which is the basis for achieving automatic capacity expansion. Start MongoDB service: sudosystemctlstartmongodsudosys

How to ensure high availability of MongoDB on Debian

Apr 02, 2025 am 07:21 AM

How to ensure high availability of MongoDB on Debian

Apr 02, 2025 am 07:21 AM

This article describes how to build a highly available MongoDB database on a Debian system. We will explore multiple ways to ensure data security and services continue to operate. Key strategy: ReplicaSet: ReplicaSet: Use replicasets to achieve data redundancy and automatic failover. When a master node fails, the replica set will automatically elect a new master node to ensure the continuous availability of the service. Data backup and recovery: Regularly use the mongodump command to backup the database and formulate effective recovery strategies to deal with the risk of data loss. Monitoring and Alarms: Deploy monitoring tools (such as Prometheus, Grafana) to monitor the running status of MongoDB in real time, and

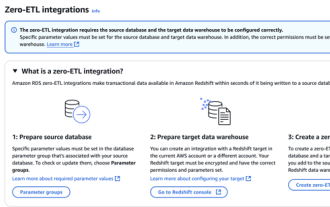

RDS MySQL integration with Redshift zero ETL

Apr 08, 2025 pm 07:06 PM

RDS MySQL integration with Redshift zero ETL

Apr 08, 2025 pm 07:06 PM

Data Integration Simplification: AmazonRDSMySQL and Redshift's zero ETL integration Efficient data integration is at the heart of a data-driven organization. Traditional ETL (extract, convert, load) processes are complex and time-consuming, especially when integrating databases (such as AmazonRDSMySQL) with data warehouses (such as Redshift). However, AWS provides zero ETL integration solutions that have completely changed this situation, providing a simplified, near-real-time solution for data migration from RDSMySQL to Redshift. This article will dive into RDSMySQL zero ETL integration with Redshift, explaining how it works and the advantages it brings to data engineers and developers.

2025 100-fold potential coin prediction: The three major dark horse currency types that subvert cognition!

Mar 04, 2025 am 07:18 AM

2025 100-fold potential coin prediction: The three major dark horse currency types that subvert cognition!

Mar 04, 2025 am 07:18 AM

The cryptocurrency market is ready to go in 2025, and the prediction that Bitcoin has exceeded $100,000 has ignited investors' enthusiasm. However, real wealth opportunities are often hidden in projects that are underestimated or have great potential. Based on current market trends and technological trends, the following three cryptocurrencies, relying on their unique advantages, market narrative and ecological potential, are expected to achieve significant growth in the next two years and become a dark horse in the market! Ripple (XRP) - The core advantage of returning to the king after legal challenges: The dawn of SEC litigation begins: XRP prices have continued to be sluggish since the SEC sued Ripple in 2020. However, there have been frequent positive signals recently. If the lawsuit results are beneficial to Ripple, XRP will get rid of the shadow of regulation and return to mainstream transactions