Technology peripherals

Technology peripherals

AI

AI

Transformer Revisited: Inversion is more effective, a new SOTA for real-world prediction emerges

Transformer Revisited: Inversion is more effective, a new SOTA for real-world prediction emerges

Transformer Revisited: Inversion is more effective, a new SOTA for real-world prediction emerges

In time series forecasting, Transformer has demonstrated its powerful ability to describe dependencies and extract multi-level representations. However, some researchers have questioned the effectiveness of Transformer-based predictors. Such predictors typically embed multiple variables of the same timestamp into indistinguishable channels and focus on these timestamps to capture temporal dependencies. The researchers found that simple linear layers that consider numerical relationships rather than semantic relationships outperformed complex Transformers in both performance and efficiency. At the same time, the importance of ensuring the independence of variables and exploiting mutual information has received increasing attention from recent research. These studies explicitly model multivariate correlations to achieve precise predictions. However, it is still difficult to achieve this goal without subverting the common Transformer architecture

When considering the controversy caused by Transformer-based predictors, the researchers Thinking about why Transformer performs even worse than linear models in time series forecasting, while dominating in many other fields

Recently, a new paper from Tsinghua University proposed a Different perspective - Transformer performance is not intrinsic, but is caused by improper application of the schema to time series data.

The link to the paper is: https://arxiv.org/pdf/2310.06625.pdf

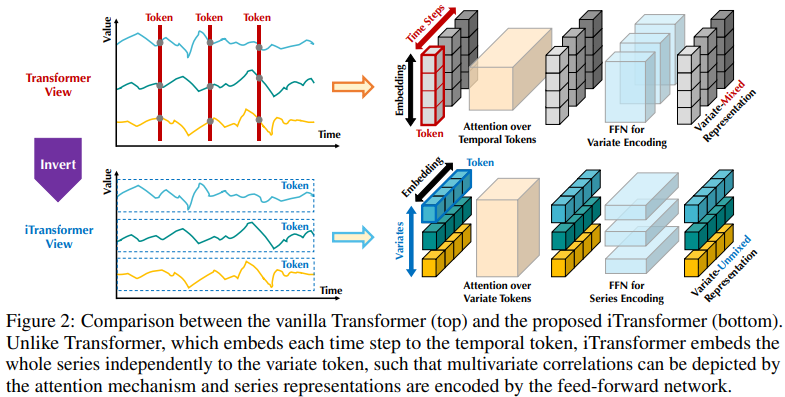

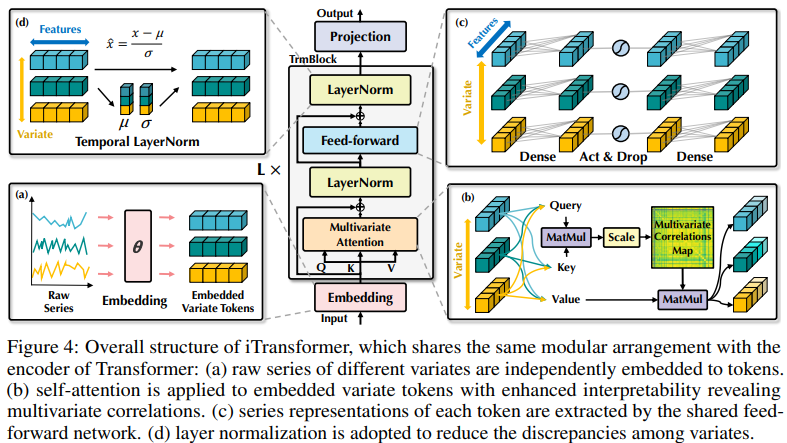

The existing structure of Transformer-based predictors may not be suitable for multivariate time series forecasting. The left side of Figure 2 shows that points at the same time step represent different physical meanings, but the measurement results are inconsistent. These points are embedded into a token, and multivariate correlations are ignored. Furthermore, in the real world, individual time steps are rarely labeled with useful information due to misalignment of local receptive fields and timestamps at multivariate time points. In addition, although sequence variation is significantly affected by sequence order, the variant attention mechanism in the temporal dimension has not been fully adopted. Therefore, Transformer's ability to capture basic sequence representation and describe multivariate correlations is weakened, limiting its ability and generalization ability on different time series data

Regarding the irrationality of embedding the multi-variable points of each time step into a (time) token, the researcher started from the reverse perspective of the time series and independently embedded the entire time series of each variable into a (variable ) token, this is an extreme case of patching that expands the local receptive field. Through inversion, the embedded token aggregates the global representation of the sequence, which can be more variable-centered and better utilize the attention mechanism for multi-variable association. At the same time, feedforward networks can skillfully learn generalized representations of different variables encoded by any lookback sequence and decode them to predict future sequences.

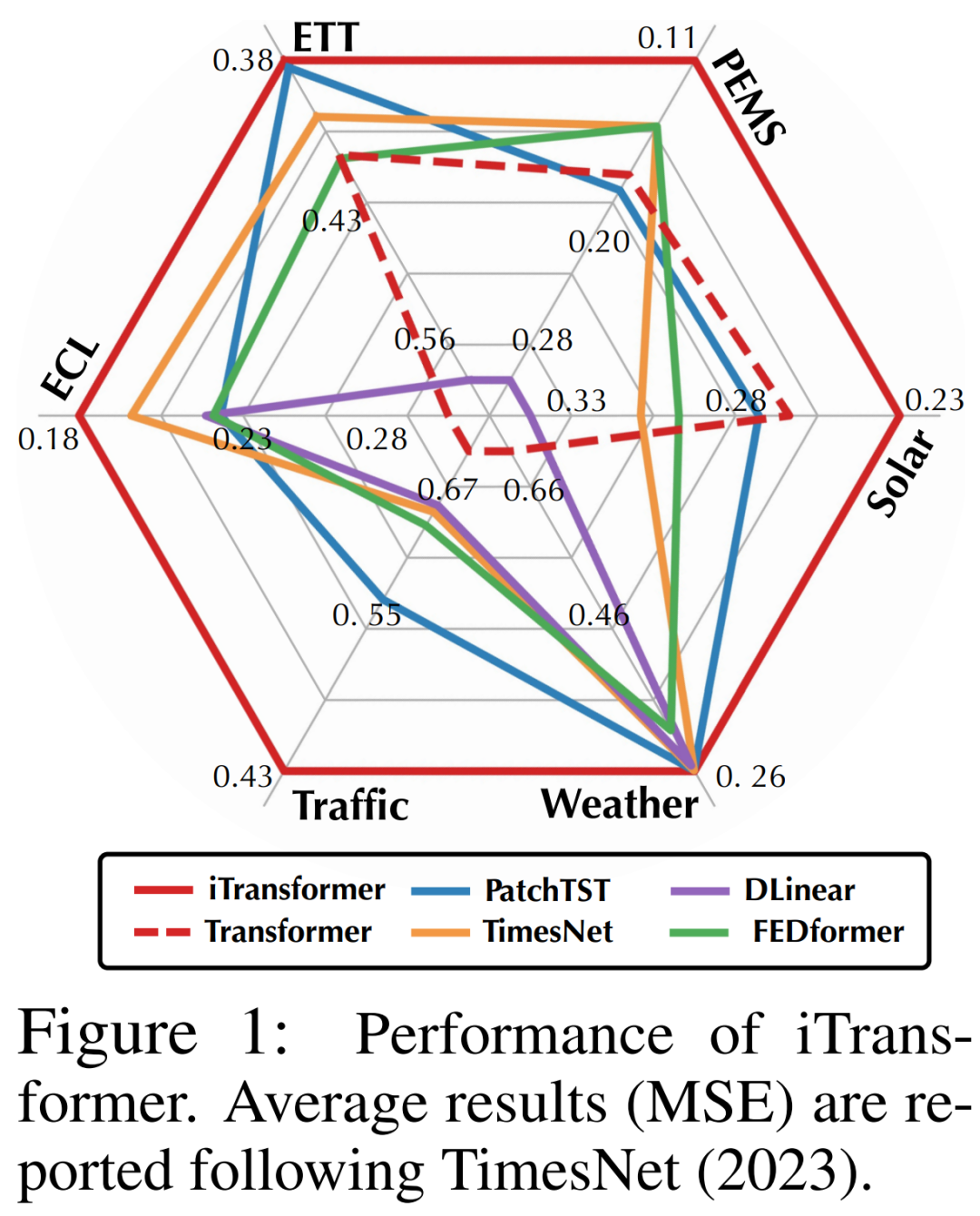

Researchers pointed out that for time series prediction, Transformer is not invalid, but its use is inappropriate. In this paper, the researchers re-examined the structure of Transformer and recommended iTransformer as the basic pillar of time series prediction. They embed each time series as a variable token, adopt a multi-variable correlation attention mechanism, and use a feed-forward network to encode the sequence. Experimental results show that the proposed iTransformer reaches the state-of-the-art level in the actual prediction benchmark Figure 1 and unexpectedly solves the problems faced by Transformer-based predictors

# In summary, the contributions of this article have the following three points:

- The researcher reflected on the architecture of Transformer and found that The capabilities of the native Transformer component on time series have not yet been fully exploited.

- The iTransformer proposed in this article treats independent time series as tokens, captures multi-variable correlations through self-attention, and uses layer normalization and feed-forward network modules to learn better sequences Global representation,for time series forecasting.

- Through experiments, iTransformer reaches SOTA on real-world prediction benchmarks. The researchers analyzed the inversion module and architectural choices, pointing out the direction for future improvements of Transformer-based predictors.

iTransformer

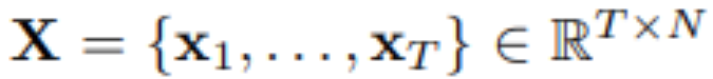

In multivariate time series forecasting, given historical observations:

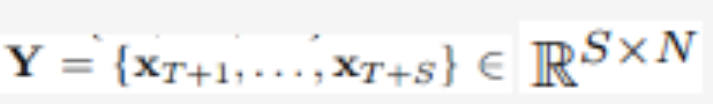

Using T time steps and N variables, the researcher predicts S time steps in the future:  . For convenience, it is expressed as

. For convenience, it is expressed as  is the multivariate variables recorded simultaneously at time step t, and

is the multivariate variables recorded simultaneously at time step t, and  is the entire time series indexed by n for each variable. It is worth noting that in the real world,

is the entire time series indexed by n for each variable. It is worth noting that in the real world,  may not contain time points with essentially the same timestamp due to system latency of monitors and loosely organized datasets. Elements of

may not contain time points with essentially the same timestamp due to system latency of monitors and loosely organized datasets. Elements of

can differ from each other in physical measurements and statistical distributions, and variables

can differ from each other in physical measurements and statistical distributions, and variables  often share these data.

often share these data.

The Transformer variant equipped with the architecture proposed in this article is called iTransformer. Basically, there are no more specific requirements for the Transformer variant, just note that The force mechanism should be suitable for multivariate correlation modeling. Therefore, an effective set of attention mechanisms can serve as a plug-in to reduce the complexity of associations when the number of variables increases.

iTransformer is shown in the fourth picture, using a simpler Transformer encoder architecture, including embedding, projection and Transformer blocks

Experiments and Results

The researchers conducted a comprehensive evaluation of iTransformer in various time series forecasting applications, confirming the versatility of the framework and Further studied the effect of inverting the responsibilities of the Transformer component for specific time series dimensions

The researchers extensively included 6 real-world data sets in the experiment, including ETT, weather, electricity, and transportation data set, solar data set and PEMS data set. For detailed data set information, please refer to the original text

##The rewritten content is: Prediction results

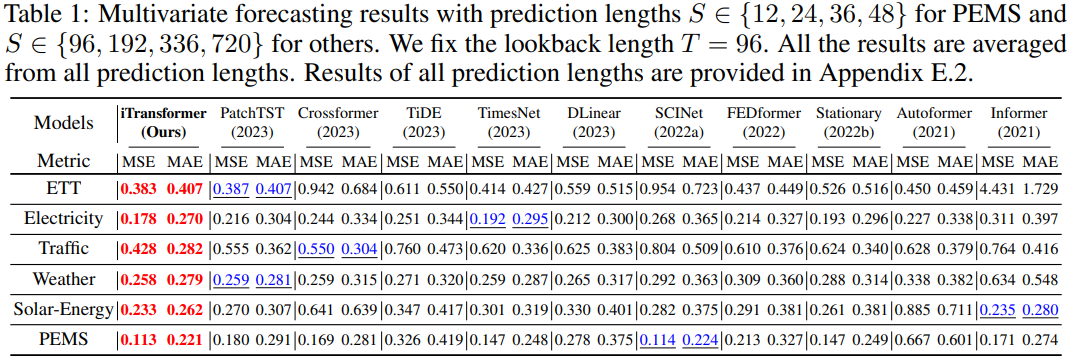

are shown in Table 1 shown, with red indicating the best and underline indicating the best. The lower the MSE/MAE, the rewritten content is: the more accurate the prediction results are. The iTransformer proposed in this article achieves SOTA performance. The native Transformer component is capable of time modeling and multivariate correlation, and the proposed inverted architecture can effectively solve real-world time series prediction scenarios.

The content that needs to be rewritten is: Universality of iTransformer

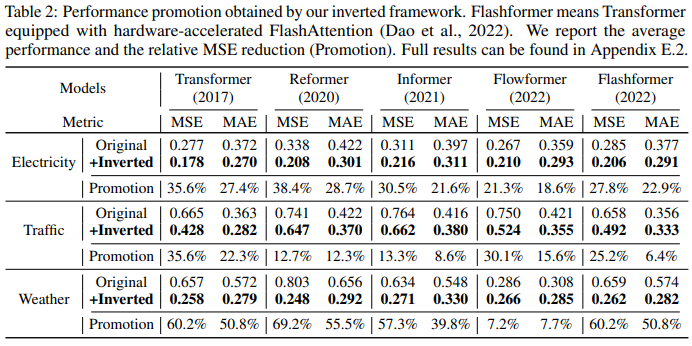

Researchers who have applied this framework to Transformer and its variants to evaluate iTransformers have found that these variants generally address the quadratic complexity of the self-attention mechanism, including Reformer, Informer, Flowformer, and FlashAttention. The researchers also found that simply inverting the perspective can improve the performance of Transformer-based predictors, improve efficiency, generalize to unseen variables, and make better use of historical observational data

Table 2 Transformers and corresponding iTransformers were evaluated. It is worth noting that the framework continues to improve various Transformers. Overall, Transformers improved by an average of 38.9%, Reformers by an average of 36.1%, Informers by an average of 28.5%, Flowformers by an average of 16.8%, and Flashformers by an average of 32.2%.

Another factor is that iTransformer can be widely used in Transformer-based predictors because it adopts the inverted structure of the attention mechanism in the variable dimension, introducing efficient attention with linear complexity, from Fundamentally solve the efficiency problem caused by 6 variables. This problem is common in real-world applications, but can be resource-consuming for Channel Independent

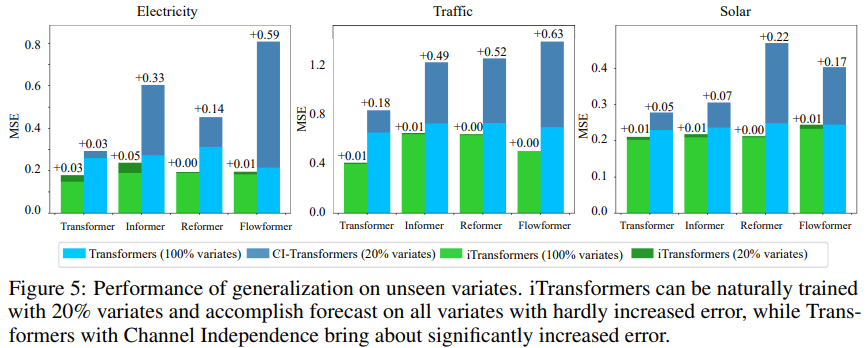

To test the hypothesis, the researchers used iTransformer Comparisons are made with another generalization strategy: Channel Independent, which enforces a shared Transformer to learn the pattern for all variants. As shown in Figure 5, the generalization error of Channel Independent (CI-Transformers) can increase significantly, while the increase in iTransformer prediction error is much smaller.

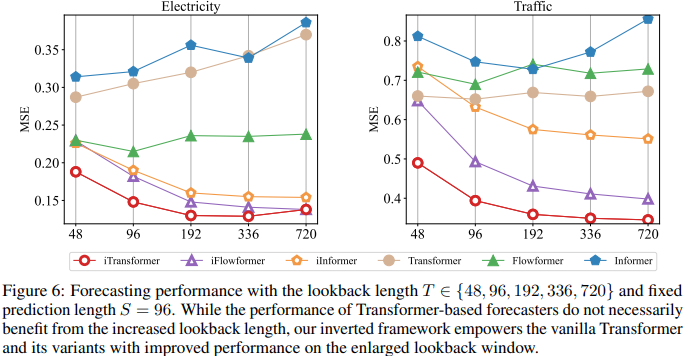

Since the responsibilities of the attention and feedforward networks are inverted, Figure 6 evaluates the Transformers and iTransformers as the lookback length increases. performance. It validates the rationale of leveraging MLP in the temporal dimension, i.e. Transformers can benefit from extended look-back windows, resulting in more accurate predictions.

Model analysis

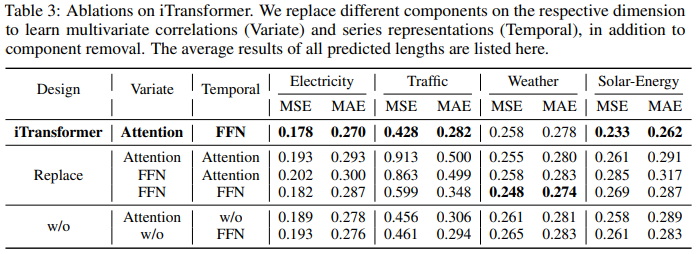

In order to verify the rationality of the Transformer component, research The authors conducted detailed ablation experiments, including component replacement (Replace) and component removal (w/o) experiments. Table 3 lists the experimental results.

For more details, please refer to the original article.

The above is the detailed content of Transformer Revisited: Inversion is more effective, a new SOTA for real-world prediction emerges. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1381

1381

52

52

Debian mail server firewall configuration tips

Apr 13, 2025 am 11:42 AM

Debian mail server firewall configuration tips

Apr 13, 2025 am 11:42 AM

Configuring a Debian mail server's firewall is an important step in ensuring server security. The following are several commonly used firewall configuration methods, including the use of iptables and firewalld. Use iptables to configure firewall to install iptables (if not already installed): sudoapt-getupdatesudoapt-getinstalliptablesView current iptables rules: sudoiptables-L configuration

How debian readdir integrates with other tools

Apr 13, 2025 am 09:42 AM

How debian readdir integrates with other tools

Apr 13, 2025 am 09:42 AM

The readdir function in the Debian system is a system call used to read directory contents and is often used in C programming. This article will explain how to integrate readdir with other tools to enhance its functionality. Method 1: Combining C language program and pipeline First, write a C program to call the readdir function and output the result: #include#include#include#includeintmain(intargc,char*argv[]){DIR*dir;structdirent*entry;if(argc!=2){

How to implement file sorting by debian readdir

Apr 13, 2025 am 09:06 AM

How to implement file sorting by debian readdir

Apr 13, 2025 am 09:06 AM

In Debian systems, the readdir function is used to read directory contents, but the order in which it returns is not predefined. To sort files in a directory, you need to read all files first, and then sort them using the qsort function. The following code demonstrates how to sort directory files using readdir and qsort in Debian system: #include#include#include#include#include//Custom comparison function, used for qsortintcompare(constvoid*a,constvoid*b){returnstrcmp(*(

How to perform digital signature verification with Debian OpenSSL

Apr 13, 2025 am 11:09 AM

How to perform digital signature verification with Debian OpenSSL

Apr 13, 2025 am 11:09 AM

Using OpenSSL for digital signature verification on Debian systems, you can follow these steps: Preparation to install OpenSSL: Make sure your Debian system has OpenSSL installed. If not installed, you can use the following command to install it: sudoaptupdatesudoaptininstallopenssl to obtain the public key: digital signature verification requires the signer's public key. Typically, the public key will be provided in the form of a file, such as public_key.pe

Debian mail server SSL certificate installation method

Apr 13, 2025 am 11:39 AM

Debian mail server SSL certificate installation method

Apr 13, 2025 am 11:39 AM

The steps to install an SSL certificate on the Debian mail server are as follows: 1. Install the OpenSSL toolkit First, make sure that the OpenSSL toolkit is already installed on your system. If not installed, you can use the following command to install: sudoapt-getupdatesudoapt-getinstallopenssl2. Generate private key and certificate request Next, use OpenSSL to generate a 2048-bit RSA private key and a certificate request (CSR): openss

How Debian OpenSSL prevents man-in-the-middle attacks

Apr 13, 2025 am 10:30 AM

How Debian OpenSSL prevents man-in-the-middle attacks

Apr 13, 2025 am 10:30 AM

In Debian systems, OpenSSL is an important library for encryption, decryption and certificate management. To prevent a man-in-the-middle attack (MITM), the following measures can be taken: Use HTTPS: Ensure that all network requests use the HTTPS protocol instead of HTTP. HTTPS uses TLS (Transport Layer Security Protocol) to encrypt communication data to ensure that the data is not stolen or tampered during transmission. Verify server certificate: Manually verify the server certificate on the client to ensure it is trustworthy. The server can be manually verified through the delegate method of URLSession

How to do Debian Hadoop log management

Apr 13, 2025 am 10:45 AM

How to do Debian Hadoop log management

Apr 13, 2025 am 10:45 AM

Managing Hadoop logs on Debian, you can follow the following steps and best practices: Log Aggregation Enable log aggregation: Set yarn.log-aggregation-enable to true in the yarn-site.xml file to enable log aggregation. Configure log retention policy: Set yarn.log-aggregation.retain-seconds to define the retention time of the log, such as 172800 seconds (2 days). Specify log storage path: via yarn.n

Centos shutdown command line

Apr 14, 2025 pm 09:12 PM

Centos shutdown command line

Apr 14, 2025 pm 09:12 PM

The CentOS shutdown command is shutdown, and the syntax is shutdown [Options] Time [Information]. Options include: -h Stop the system immediately; -P Turn off the power after shutdown; -r restart; -t Waiting time. Times can be specified as immediate (now), minutes ( minutes), or a specific time (hh:mm). Added information can be displayed in system messages.