Technology peripherals

Technology peripherals

AI

AI

Peking University's new achievement of embodied intelligence: No training required, you can move flexibly by following instructions

Peking University's new achievement of embodied intelligence: No training required, you can move flexibly by following instructions

Peking University's new achievement of embodied intelligence: No training required, you can move flexibly by following instructions

Peking University Dong Hao TeamEmbodied NavigationThe latest results are here:

No need for additional mapping and training, just speak the navigation instructions, such as:

Walk forward across the room and walk through the panty followed by the kitchen. Stand at the end of the kitchen

We can control the robot to move flexibly.

Here, the robot relies onactively communicating with the "expert team" composed of large modelscompletes command analysis, vision A range of visual language navigation critical tasks such as perception, completion estimation and decision making tests.

The project homepage and papers are currently online, and the code will be released soon:

How does a robot navigate according to human instructions?

Visual language navigation involves a series of subtasks, including instruction analysis, visual perception, completion estimation and decision testing.

These key tasks require knowledge in different fields, and they are interrelated and determine the navigation ability of the robot.

Inspired by the actual discussion behavior of experts, Peking University Dong Hao team proposed the DiscussNav navigation system.

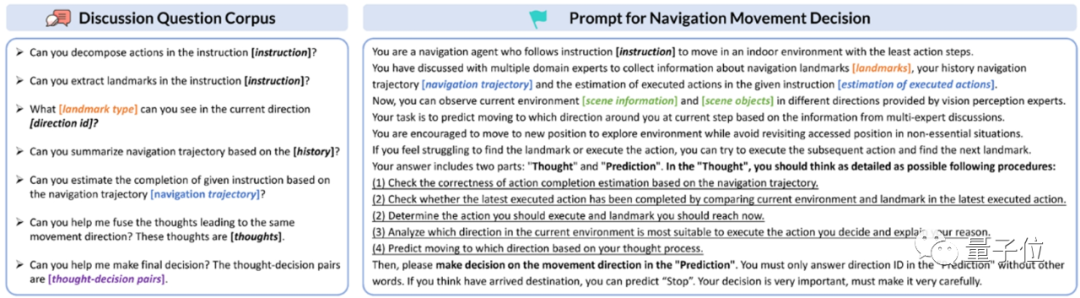

The author first assigns expert roles and specific tasks to LLM (Large Language Model) and MLM (Multimodal Large Model) in a prompt manner to activate their domain knowledge and capabilities, thus building a team of visual navigation experts with different specialties.

Then, the author designed a corpus of discussion questions and a discussion mechanism. Following this mechanism, the navigation robot driven by LLM can actively initiate a series of visual interactions. Navigation expert discussion.

Before each move, the navigation robot discusses with experts to understand the required actions and mentions in human instructions object sign.

Then based on the types of these object marks, the surrounding environment is tended to be perceived, the instruction completion status is estimated, and a preliminary movement decision is made.

During the decision-making process, the navigation robot will simultaneously generate N independent When the prediction results are inconsistent, the robot will seek help from decision testing experts to filter out the final mobile decision. We can see from this process that compared to traditional methods, additional pre-training is required. This method guides the robot to move according to human instructions by interacting with large model experts,

directly solves the problem of robot navigation training data The problem of scarcity. Furthermore, it is precisely because of this feature that it also achieves zero-sample capabilities. As long as you follow the above discussion process, you can follow a variety of navigation instructions.

The following is the performance of DiscussionNav on the classic visual language navigation data set Room2Room.

As can be seen, it

As can be seen, it

is significantly higher than all zero-shot methods, and even exceeds the two trained methods . The author further carried out real indoor scene navigation experiments on the Turtlebot4 mobile robot.

With the powerful language and visual generalization capabilities of large models inspired by expert role-playing and discussions, DiscussNav's performance in the real world is significantly better than the previous optimal zero-shot method and pre-training fine-tuned method. Demonstrates good sim-to-real migration capabilities.

Through experiments, the author further discovered that DiscussNav produced

Through experiments, the author further discovered that DiscussNav produced

4 powerful abilities: 1. Identify open world objects, such as "robot arm on white table" and "teddy bear on chair". 2. Identify fine-grained navigation landmark objects, such as "plants on the kitchen counter" and "cartons on the table". 3. Correct the erroneous information replied by other experts in the discussion. For example, the logo extraction expert will check and correct the incorrectly decomposed action sequence before extracting the navigation logo from the navigation action sequence. 4. Eliminate inconsistent movement decisions. For example, decision test experts can select the most reasonable one from multiple inconsistent movement decisions predicted by DiscussNav based on the current environment information as the final movement decision. The corresponding author Dong Hao proposed in a previous report to explore in depth how to effectively use simulation data and large models to learn from massive data Prior knowledge is the development direction of future embodied intelligence research. Currently limited by data scale and the high cost of exploring the real environment, embodied intelligence research will still focus on simulation platform experiments and simulation data training. Recent progress in large models provides a new direction for embodied intelligence. Proper exploration and utilization of language common sense and physical world priors in large models will promote the development of embodied intelligence. Paper address: https://arxiv.org/abs/2309.11382"Simulation and large model priors are Free Lunch"

The above is the detailed content of Peking University's new achievement of embodied intelligence: No training required, you can move flexibly by following instructions. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Written previously, today we discuss how deep learning technology can improve the performance of vision-based SLAM (simultaneous localization and mapping) in complex environments. By combining deep feature extraction and depth matching methods, here we introduce a versatile hybrid visual SLAM system designed to improve adaptation in challenging scenarios such as low-light conditions, dynamic lighting, weakly textured areas, and severe jitter. sex. Our system supports multiple modes, including extended monocular, stereo, monocular-inertial, and stereo-inertial configurations. In addition, it also analyzes how to combine visual SLAM with deep learning methods to inspire other research. Through extensive experiments on public datasets and self-sampled data, we demonstrate the superiority of SL-SLAM in terms of positioning accuracy and tracking robustness.

The second generation Ameca is here! He can communicate with the audience fluently, his facial expressions are more realistic, and he can speak dozens of languages.

Mar 04, 2024 am 09:10 AM

The second generation Ameca is here! He can communicate with the audience fluently, his facial expressions are more realistic, and he can speak dozens of languages.

Mar 04, 2024 am 09:10 AM

The humanoid robot Ameca has been upgraded to the second generation! Recently, at the World Mobile Communications Conference MWC2024, the world's most advanced robot Ameca appeared again. Around the venue, Ameca attracted a large number of spectators. With the blessing of GPT-4, Ameca can respond to various problems in real time. "Let's have a dance." When asked if she had emotions, Ameca responded with a series of facial expressions that looked very lifelike. Just a few days ago, EngineeredArts, the British robotics company behind Ameca, just demonstrated the team’s latest development results. In the video, the robot Ameca has visual capabilities and can see and describe the entire room and specific objects. The most amazing thing is that she can also

After 2 months, the humanoid robot Walker S can fold clothes

Apr 03, 2024 am 08:01 AM

After 2 months, the humanoid robot Walker S can fold clothes

Apr 03, 2024 am 08:01 AM

Editor of Machine Power Report: Wu Xin The domestic version of the humanoid robot + large model team completed the operation task of complex flexible materials such as folding clothes for the first time. With the unveiling of Figure01, which integrates OpenAI's multi-modal large model, the related progress of domestic peers has been attracting attention. Just yesterday, UBTECH, China's "number one humanoid robot stock", released the first demo of the humanoid robot WalkerS that is deeply integrated with Baidu Wenxin's large model, showing some interesting new features. Now, WalkerS, blessed by Baidu Wenxin’s large model capabilities, looks like this. Like Figure01, WalkerS does not move around, but stands behind a desk to complete a series of tasks. It can follow human commands and fold clothes

How can AI make robots more autonomous and adaptable?

Jun 03, 2024 pm 07:18 PM

How can AI make robots more autonomous and adaptable?

Jun 03, 2024 pm 07:18 PM

In the field of industrial automation technology, there are two recent hot spots that are difficult to ignore: artificial intelligence (AI) and Nvidia. Don’t change the meaning of the original content, fine-tune the content, rewrite the content, don’t continue: “Not only that, the two are closely related, because Nvidia is expanding beyond just its original graphics processing units (GPUs). The technology extends to the field of digital twins and is closely connected to emerging AI technologies. "Recently, NVIDIA has reached cooperation with many industrial companies, including leading industrial automation companies such as Aveva, Rockwell Automation, Siemens and Schneider Electric, as well as Teradyne Robotics and its MiR and Universal Robots companies. Recently,Nvidiahascoll

The first robot to autonomously complete human tasks appears, with five fingers that are flexible and fast, and large models support virtual space training

Mar 11, 2024 pm 12:10 PM

The first robot to autonomously complete human tasks appears, with five fingers that are flexible and fast, and large models support virtual space training

Mar 11, 2024 pm 12:10 PM

This week, FigureAI, a robotics company invested by OpenAI, Microsoft, Bezos, and Nvidia, announced that it has received nearly $700 million in financing and plans to develop a humanoid robot that can walk independently within the next year. And Tesla’s Optimus Prime has repeatedly received good news. No one doubts that this year will be the year when humanoid robots explode. SanctuaryAI, a Canadian-based robotics company, recently released a new humanoid robot, Phoenix. Officials claim that it can complete many tasks autonomously at the same speed as humans. Pheonix, the world's first robot that can autonomously complete tasks at human speeds, can gently grab, move and elegantly place each object to its left and right sides. It can autonomously identify objects

The humanoid robot can do magic, let the Spring Festival Gala program team find out more

Feb 04, 2024 am 09:03 AM

The humanoid robot can do magic, let the Spring Festival Gala program team find out more

Feb 04, 2024 am 09:03 AM

In the blink of an eye, robots have learned to do magic? It was seen that it first picked up the water spoon on the table and proved to the audience that there was nothing in it... Then it put the egg-like object in its hand, then put the water spoon back on the table and started to "cast a spell"... …Just when it picked up the water spoon again, a miracle happened. The egg that was originally put in disappeared, and the thing that jumped out turned into a basketball... Let’s look at the continuous actions again: △ This animation shows a set of actions at 2x speed, and it flows smoothly. Only by watching the video repeatedly at 0.5x speed can it be understood. Finally, I discovered the clues: if my hand speed were faster, I might be able to hide it from the enemy. Some netizens lamented that the robot’s magic skills were even higher than their own: Mag was the one who performed this magic for us.

Cloud Whale Xiaoyao 001 sweeping and mopping robot has a 'brain'! | Experience

Apr 26, 2024 pm 04:22 PM

Cloud Whale Xiaoyao 001 sweeping and mopping robot has a 'brain'! | Experience

Apr 26, 2024 pm 04:22 PM

Sweeping and mopping robots are one of the most popular smart home appliances among consumers in recent years. The convenience of operation it brings, or even the need for no operation, allows lazy people to free their hands, allowing consumers to "liberate" from daily housework and spend more time on the things they like. Improved quality of life in disguised form. Riding on this craze, almost all home appliance brands on the market are making their own sweeping and mopping robots, making the entire sweeping and mopping robot market very lively. However, the rapid expansion of the market will inevitably bring about a hidden danger: many manufacturers will use the tactics of sea of machines to quickly occupy more market share, resulting in many new products without any upgrade points. It is also said that they are "matryoshka" models. Not an exaggeration. However, not all sweeping and mopping robots are

The first pure visual static reconstruction of autonomous driving

Jun 02, 2024 pm 03:24 PM

The first pure visual static reconstruction of autonomous driving

Jun 02, 2024 pm 03:24 PM

A purely visual annotation solution mainly uses vision plus some data from GPS, IMU and wheel speed sensors for dynamic annotation. Of course, for mass production scenarios, it doesn’t have to be pure vision. Some mass-produced vehicles will have sensors like solid-state radar (AT128). If we create a data closed loop from the perspective of mass production and use all these sensors, we can effectively solve the problem of labeling dynamic objects. But there is no solid-state radar in our plan. Therefore, we will introduce this most common mass production labeling solution. The core of a purely visual annotation solution lies in high-precision pose reconstruction. We use the pose reconstruction scheme of Structure from Motion (SFM) to ensure reconstruction accuracy. But pass