Dimensity 9300, the first generative AI mobile chip: capable of running large models with 33 billion parameters

Nov 10, 2023, 08:38 AM

AI images are generated in one second, and the large language model runs at 20 tokens per second.

2023 is the first year of generative AI, and the mobile devices in our hands are also accelerating into the era of large models.

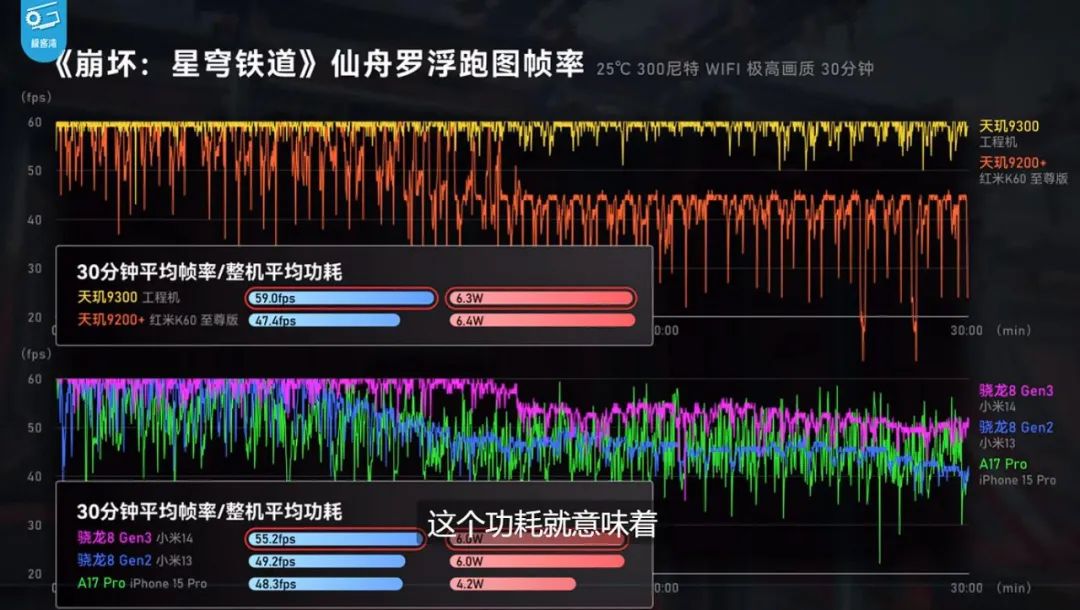

On the evening of November 6, MediaTek officially released its annual flagship SoC, the Dimensity 9300. The chip uses a 4+4 all-big-core design and is said to surpass its Android competitors across the board in performance and energy efficiency, putting it in competition with Apple.

More notably, MediaTek defines this year's flagship as a 5G generative AI mobile chip, offering higher intelligence, higher performance, better energy efficiency, and lower power consumption than its predecessors.

Dimensity 9300 is built on TSMC's new-generation 4nm process and has 22.7 billion transistors. To meet the computing needs of the generative AI era, it pioneers an "all-big-core" CPU architecture: four Cortex-X4 ultra-large cores with a maximum frequency of 3.25GHz and four Cortex-A720 large cores clocked at 2.0GHz. Peak performance is 40% higher than the previous generation, and power consumption is 33% lower at the same performance.

This architecture delivers high speed and efficiency while still saving power. It reduces power consumption in both light-load and heavy-load scenarios and extends battery life. MediaTek said that the Dimensity 9300 has been optimized for common workloads such as video, live streaming, and games, and is better suited than its predecessors to the multitasking mode of foldable phones.

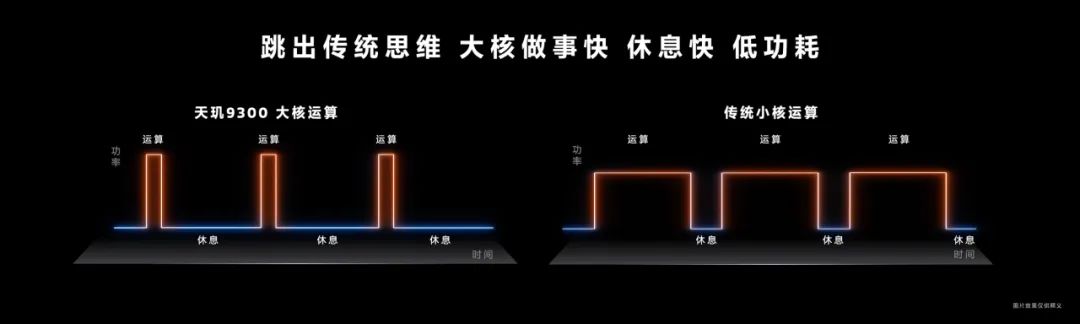

With the improvement of chip manufacturing processes, transistors are continuously miniaturized, and various leakage problems have become a major obstacle to the development of Moore's Law. Leakage means a significant increase in energy consumption, and the chip will also face problems of overheating or even failure. In this case, the power consumption gap between the small core and the large core has become smaller and smaller.

According to reports, MediaTek began exploring all-big-core chips as early as three years ago. By letting the big cores finish tasks quickly and then sleep for longer, an all-big-core processor can, counterintuitively, be more power-efficient than one that relies on small cores. MediaTek has also added out-of-order execution to further improve the efficiency of running applications.

MediaTek believes that by next year, the design of all large cores will become the consensus in the industry.
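The "finish fast, then sleep" argument above comes down to simple energy arithmetic (energy = power × time). The sketch below is illustrative only: the power and timing figures are hypothetical, not MediaTek measurements, and `energy_joules` is a helper invented for this example.

```python
def energy_joules(active_power_w, active_time_s, idle_power_w, window_s):
    """Energy used over a fixed time window: run the task, then sleep."""
    if active_time_s > window_s:
        raise ValueError("task does not finish inside the window")
    idle_time_s = window_s - active_time_s
    return active_power_w * active_time_s + idle_power_w * idle_time_s


# Hypothetical figures, chosen only to illustrate the reasoning.
WINDOW_S = 1.0   # length of the observation window
IDLE_W = 0.05    # assumed sleep power, same for both cores

# A big core draws more power but finishes the task much sooner.
big_core = energy_joules(2.0, 0.2, IDLE_W, WINDOW_S)
# A small core draws less power but stays awake far longer.
small_core = energy_joules(0.6, 0.9, IDLE_W, WINDOW_S)

print(f"big core:   {big_core:.3f} J")
print(f"small core: {small_core:.3f} J")
```

With these assumed numbers the big core uses less total energy, because the extra sleep time outweighs its higher active power. Whether that holds on real silicon depends on the actual power curves and workloads.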

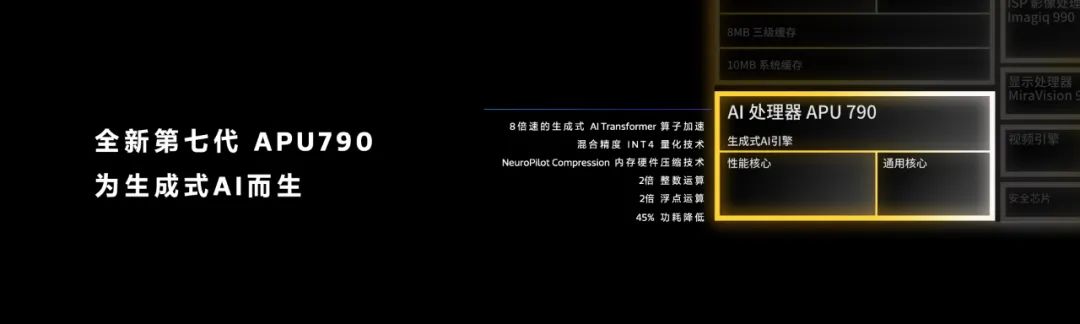

Beyond conventional capabilities, MediaTek has focused this time on upgrading the chip's AI performance. Dimensity 9300 integrates MediaTek's seventh-generation AI processor, the APU 790, which is designed specifically for generative AI. Compared with the previous generation, integer and floating-point performance are both doubled, and power consumption is reduced by 45%.

APU 790 has a built-in hardware-level generative AI engine that enables faster and more secure edge AI computing. It adds operator acceleration specifically for the Transformer models commonly used in large language models, so large models are processed 8 times faster than on the previous generation.

The backbone of today's popular large language models (LLMs) is mostly built from transformer blocks. Unlike the CNNs common in computer vision, transformer networks rely heavily on the Softmax and LayerNorm operators and use few convolution operators, so the acceleration mechanisms of earlier AI cores do not apply. On the seventh-generation APU, MediaTek has focused on optimizing the Softmax and LayerNorm operators to increase computing power.
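For readers unfamiliar with the two operators named above, here is a minimal pure-Python sketch of their standard textbook definitions. It says nothing about how the APU 790 implements them in hardware, and the LayerNorm shown omits the learned gain and bias that real models add.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def layer_norm(values, eps=1e-5):
    """Normalize a vector to zero mean and (approximately) unit variance."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    inv_std = 1.0 / math.sqrt(variance + eps)
    return [(v - mean) * inv_std for v in values]


probs = softmax([2.0, 1.0, 0.1])
normed = layer_norm([1.0, 2.0, 3.0, 4.0])
print(probs)
print(normed)
```

Both operators need a reduction over the whole vector (a max and a sum, or a mean and a variance) before any element can be produced, which is a different access pattern from the multiply-accumulate loops of convolution.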

Quantization is currently one of the most effective ways to optimize AI inference. Given that large language models have hundreds of millions of parameters or more, MediaTek has developed mixed-precision INT4 quantization and combined it with NeuroPilot Compression, its own memory hardware compression technology, to use memory bandwidth more efficiently and significantly reduce the amount of device memory that large AI models occupy.
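To make the idea of INT4 quantization concrete, the sketch below shows plain symmetric 4-bit quantization of one group of weights, with two values packed per byte. This is a generic textbook scheme, not MediaTek's mixed-precision method or NeuroPilot Compression, whose details the article does not give; the function names are invented for this example.

```python
def quantize_int4(weights):
    """Map floats to integers in [-8, 7] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    quantized = [max(-8, min(7, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the 4-bit integers."""
    return [q * scale for q in quantized]


def pack_int4(quantized):
    """Store two 4-bit values in each byte (two's-complement nibbles)."""
    if len(quantized) % 2:
        quantized = quantized + [0]  # pad to an even count
    pairs = zip(quantized[0::2], quantized[1::2])
    return bytes(((hi & 0xF) << 4) | (lo & 0xF) for hi, lo in pairs)


weights = [0.70, -0.35, 0.10, 0.00]
q, scale = quantize_int4(weights)
restored = dequantize(q, scale)
packed = pack_int4(q)
print(q, scale, restored, len(packed))
```

Four weights fit in two bytes here, compared with eight bytes at FP16, at the cost of a rounding error of at most half the scale per weight.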

MediaTek engineers said that although large models can bring better productivity, running a 13B model locally takes about 13GB of memory. Add roughly 4GB for Android itself and 6GB for other apps, and the total exceeds the 16GB of memory found in most phones. The memory hardware compression technology in Dimensity 9300 reduces the large model's memory footprint to 5GB through quantization and compression. Only then can most users afford to run large-model applications in daily use.
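The memory budget quoted above can be reproduced with a few lines of arithmetic. The 13GB, 4GB, 6GB, 16GB, and 5GB figures come from the article; reading "13B takes about 13GB" as roughly one byte (8 bits) per parameter is an assumption made here, as is the helper name `model_memory_gb`.

```python
def model_memory_gb(params_billion, bits_per_weight):
    """Approximate weight storage in GB for a given precision."""
    return params_billion * bits_per_weight / 8


PHONE_RAM_GB = 16   # from the article
ANDROID_GB = 4      # from the article
OTHER_APPS_GB = 6   # from the article
COMPRESSED_GB = 5   # article's figure after quantization and compression

# Assumption: about one byte per parameter gives the quoted 13GB.
uncompressed_gb = model_memory_gb(13, 8)
# Plain 4-bit weights alone, before any further compression.
int4_only_gb = model_memory_gb(13, 4)

total_before = uncompressed_gb + ANDROID_GB + OTHER_APPS_GB
total_after = COMPRESSED_GB + ANDROID_GB + OTHER_APPS_GB

print(f"before: {total_before} GB, fits: {total_before <= PHONE_RAM_GB}")
print(f"INT4 weights alone: {int4_only_gb} GB")
print(f"after:  {total_after} GB, fits: {total_after <= PHONE_RAM_GB}")
```

Under these assumptions, 4-bit weights alone would still need about 6.5GB, so the quoted 5GB implies additional savings from compression on top of quantization.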

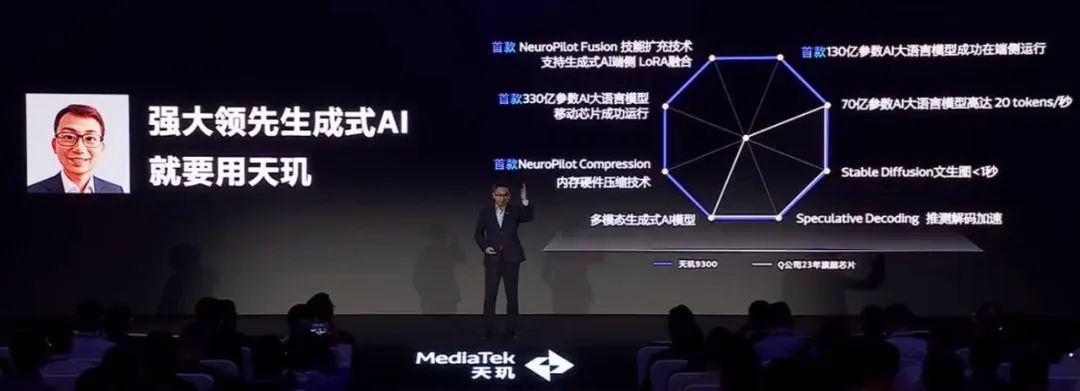

APU 790 also supports NeuroPilot Fusion, an on-device "skill expansion" technology for generative AI models. It can continuously fuse low-rank adaptations (LoRA, Low-Rank Adaptation) into a base large model on the device, giving the base model more comprehensive capabilities.
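As background on what fusing a LoRA adapter into a base model means, the sketch below applies the standard LoRA merge, W' = W + (alpha / r) · B · A, to a tiny weight matrix in pure Python. It illustrates the general technique only; how NeuroPilot Fusion performs the fusion is not described in the article, and `matmul` and `merge_lora` are names invented for this example.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def merge_lora(base, lora_b, lora_a, alpha, rank):
    """Fold a low-rank update into the base weights: W + (alpha/rank) * B @ A."""
    scale = alpha / rank
    delta = matmul(lora_b, lora_a)
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(base, delta)
    ]


base = [[1.0, 0.0], [0.0, 1.0]]   # 2x2 base weight matrix
lora_b = [[1.0], [2.0]]           # 2x1 adapter factor (rank 1)
lora_a = [[0.5, 0.0]]             # 1x2 adapter factor
merged = merge_lora(base, lora_b, lora_a, alpha=2, rank=1)
print(merged)
```

Because the adapter is stored as two thin matrices, it is far smaller than the base weights, which is what makes shipping and swapping several "skills" on a phone plausible.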

Based on such hardware and optimization, Dimensity 9300 can reach 2019 points on the latest version of the AI Benchmark proposed by ETH Zurich, which is a new high for mobile chips.

With Dimensity 9300, on-device AI image generation such as Stable Diffusion can produce an image within one second, and on-device inference of a 7-billion-parameter large language model can reach 20 tokens per second.

MediaTek said that, working with vivo, it has been the first to run inference of 7B and 13B large models on a mobile device based on Dimensity 9300, and that products with these capabilities are expected to reach the market soon. In more extreme cases, MediaTek has also successfully run large models of up to 33B parameters.

At the pre-launch briefing and at the launch event, MediaTek demonstrated LoRA-based text-to-image generation and large-model text generation on a Dimensity 9300 engineering device.

We can foresee that on the latest generation of flagship phones, we will be able to use smarter assistants, reply to chats quickly based on suggestions from large models, and use AI-generated stickers in meme battles...

The first of these that everyone will soon be able to try is Lanxin Xiao V, the AI assistant in the OriginOS 4 system on vivo X100 series phones.

At the beginning of this month, vivo introduced its BlueLM (Lanxin) large model and its applications at its developer conference. This set of capabilities is clearly aimed at mobile phones. Powered by large models, Lanxin Xiao V offers industry-leading intelligence. It can take input through voice, text, file drag-and-drop, and more. For simple questions, Xiao V replies with text or pictures. For complex questions, it can also present answers as a SWOT analysis or a mind map.

In addition, the AI capabilities of Dimensity 9300 also cover everything from search to shooting.

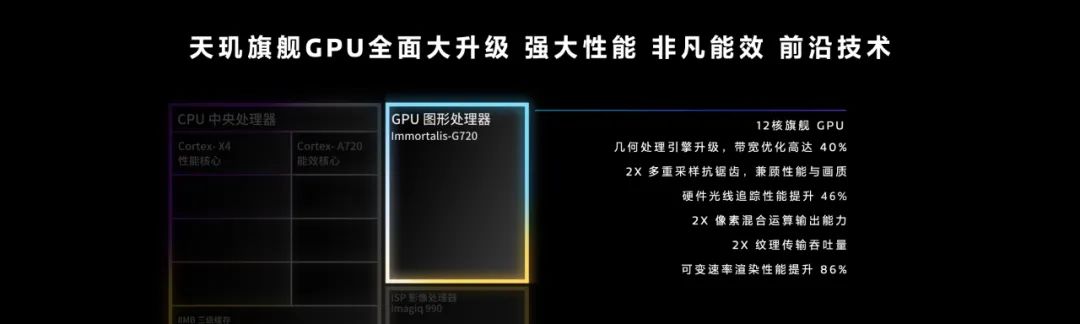

As for the Imagiq 990, it supports an AI semantic segmentation video engine, 16-layer image semantic segmentation, dual depth-of-field and bokeh engines, full-pixel focus combined with 2x lossless zoom, an OIS optical image stabilization core, and 3-microphone high-dynamic-range recording with noise reduction, which can filter out more than 99% of wind noise at wind speeds of 25km/h.

The Dimensity 9300 also comes with a new secure boot chip, an isolated secure computing environment, and Armv9's Memory Tagging Extension (MTE) to help developers avoid memory exploits.

On the network side, Dimensity 9300 integrates a 5G modem that supports Sub-6GHz four-carrier aggregation (4CC-CA) and multi-mode dual-SIM dual-active. It also improves signal quality through AI algorithms and supports 5G situational awareness. Dimensity 9300 supports Wi-Fi 7 and 5G Sub-6GHz bands, with a downlink rate of 7Gbps. For Bluetooth, it supports 3 Bluetooth antennas and a proprietary dual-channel Bluetooth flash connection technology, which delivers ultra-low-latency Bluetooth audio.

According to reports, the first phones using the Dimensity 9300 will come from vivo, OPPO, Xiaomi, Transsion, and others. After MediaTek's launch event, vivo announced that its X100 series, to be released on November 13, will be the first to carry the new flagship chip and the first to ship with LPDDR5T-9600 memory.

We look forward to the advent of a new generation of products.
