Nvidia releases AI chip H200: performance soars by 90%, Llama 2 inference speed doubles


DoNews, November 14: On November 13 (Beijing time), NVIDIA unveiled its next generation of AI supercomputing chips. These chips are expected to play an important role in deep learning and in large language models (LLMs) such as OpenAI's GPT-4.

The new chips are a significant step up from the previous generation and are expected to be widely used in data centers and supercomputers for complex workloads such as weather and climate prediction, drug research and development, and quantum computing.

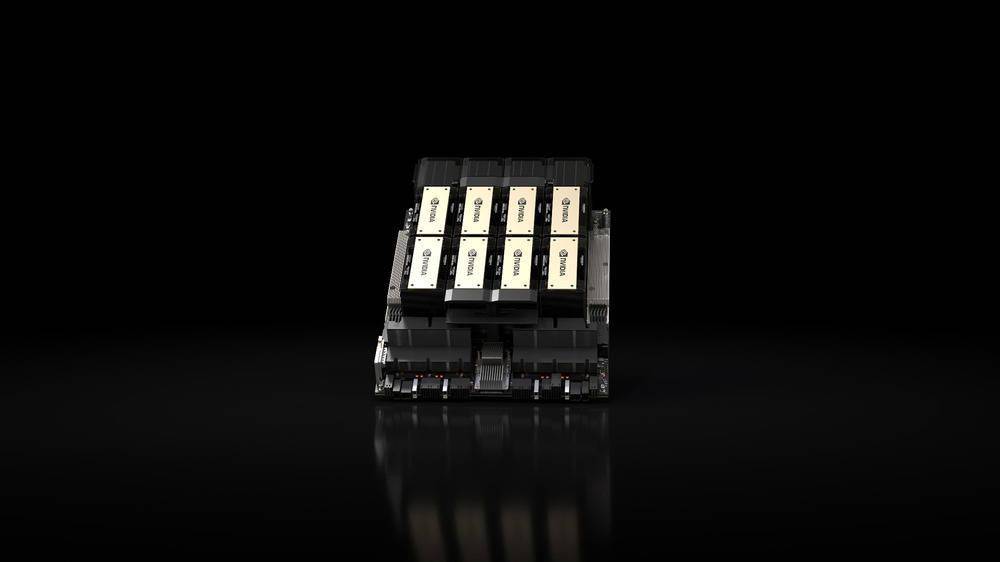

The headline product is the HGX H200, built on NVIDIA's "Hopper" architecture. Its H200 GPU succeeds the H100 and is the company's first chip to use HBM3e memory. HBM3e is both faster and higher-capacity than earlier memory, which makes it well suited to large language models.

NVIDIA said: "With HBM3e, the NVIDIA H200 delivers 141GB of memory at 4.8 terabytes per second, nearly double the capacity and 2.4 times the bandwidth of the A100."
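The two ratios in that statement can be checked with simple arithmetic. The sketch below is illustrative only: it assumes the baseline is the 80 GB A100 (SXM) with about 2.039 TB/s of memory bandwidth, because the article does not say which A100 variant NVIDIA compared against.

```python
# Memory specs in decimal units (GB and TB/s).
# H200 figures are from NVIDIA's announcement; the A100 baseline
# (80 GB SXM part, ~2.039 TB/s) is an ASSUMPTION not stated in the article.
H200 = {"capacity_gb": 141, "bandwidth_tb_s": 4.8}
A100_80GB = {"capacity_gb": 80, "bandwidth_tb_s": 2.039}


def ratio(new: dict, old: dict, key: str) -> float:
    """Return how many times larger new[key] is than old[key]."""
    return new[key] / old[key]


capacity_x = ratio(H200, A100_80GB, "capacity_gb")
bandwidth_x = ratio(H200, A100_80GB, "bandwidth_tb_s")

print(f"capacity:  {capacity_x:.2f}x")   # about 1.76x, i.e. "nearly double"
print(f"bandwidth: {bandwidth_x:.2f}x")  # about 2.35x, which rounds to 2.4x
```

Under that assumption, capacity grows by roughly 1.76 times and bandwidth by roughly 2.35 times, consistent with the "nearly double" and "2.4 times" figures in the quote.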

For AI workloads, NVIDIA claims that the HGX H200 runs inference on Llama 2, a 70-billion-parameter LLM, twice as fast as the H100. The HGX H200 will be offered in four-way and eight-way configurations and is compatible with both the hardware and software of HGX H100 systems.

It can be deployed in every type of data center, including on-premises, cloud, hybrid-cloud, and edge environments. Amazon Web Services, Google Cloud, Microsoft Azure, Oracle Cloud Infrastructure, and other cloud providers will deploy it, and it will begin rolling out in the second quarter of 2024.

The other key product NVIDIA announced is the GH200 Grace Hopper "superchip," which combines the H200 GPU with the Arm-based NVIDIA Grace CPU over the company's NVLink-C2C interconnect. According to NVIDIA, it is designed for supercomputers and allows "scientists and researchers to solve the world's most challenging problems by accelerating complex AI and HPC applications running terabytes of data."

The GH200 will be used in "more than 40 AI supercomputers at research centers, system manufacturers and cloud providers around the world," with system makers including Dell, Eviden, Hewlett Packard Enterprise (HPE), Lenovo, QCT, and Supermicro.

Notably, HPE's Cray EX2500 supercomputers will use four-way GH200 configurations and can scale to tens of thousands of Grace Hopper superchip nodes.
