NVIDIA releases H200, the world's most powerful AI chip: performance nearly doubled compared to H100

Quick Technology reported on November 14 that at the 2023 Global Supercomputing Conference (SC2023), chip giant NVIDIA unveiled the H200. It is the successor to the H100 and currently the world's most powerful AI chip.

The H200 delivers 60% to 90% higher performance than the H100.

In addition, the H200 and H100 are both based on NVIDIA's Hopper architecture, so the two chips are compatible with each other. Companies already using the H100 can swap in the new H200 seamlessly.

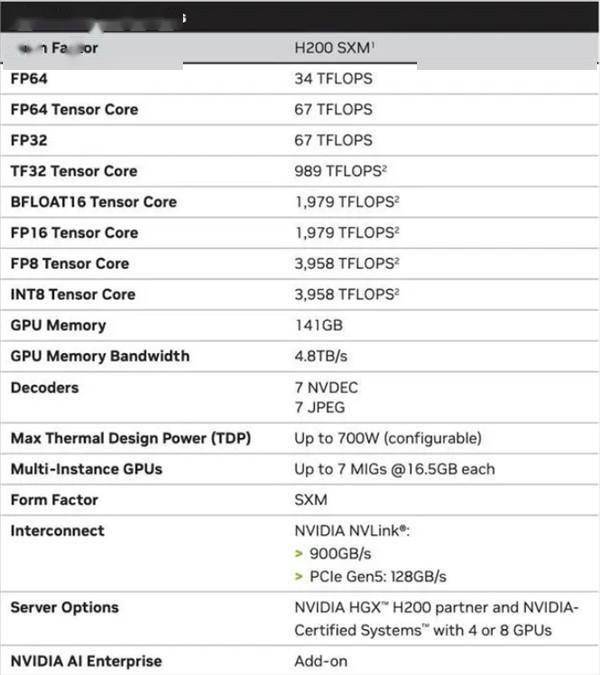

The H200 is NVIDIA's first chip to use HBM3e memory. HBM3e is faster and offers larger capacity, which makes the chip well suited to training and inference of large language models.

With HBM3e, the H200's memory capacity reaches 141GB. Its memory bandwidth rises from the H100's 3.35TB/s to 4.8TB/s.
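The following is a quick back-of-the-envelope check of the bandwidth figures quoted above. It uses only the 3.35TB/s and 4.8TB/s numbers from this article. The helper name `relative_increase` is hypothetical and exists only for illustration.

```python
# Memory bandwidth figures as quoted in this article, in TB/s.
H100_BANDWIDTH_TBPS = 3.35
H200_BANDWIDTH_TBPS = 4.8


def relative_increase(old: float, new: float) -> float:
    """Return the fractional increase from old to new (0.5 means +50%)."""
    return (new - old) / old


bw_gain = relative_increase(H100_BANDWIDTH_TBPS, H200_BANDWIDTH_TBPS)
print(f"Bandwidth gain: {bw_gain:.1%}")  # prints "Bandwidth gain: 43.3%"
```

By this arithmetic, the bandwidth increase on its own is roughly 1.43x.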

The H200's performance gains show up mainly in large-model inference. On the 70-billion-parameter Llama 2 model, the H200 runs inference twice as fast as the H100 and cuts inference energy consumption in half.

The H200's higher memory bandwidth means that memory-intensive high-performance computing applications can access working data more efficiently. Compared with a central processing unit (CPU), the H200 can deliver results up to 110 times faster.
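The link between bandwidth and memory-intensive workloads can be illustrated with a simple bandwidth-bound estimate. If a workload must read a fixed amount of data from memory, the minimum time for one pass is data size divided by bandwidth. In the sketch below, the 70GB working set is a hypothetical figure chosen only for illustration. The code also assumes decimal units (1TB = 1000GB). Real run times additionally depend on compute throughput, caching and software, so treat this as a lower bound.

```python
def min_read_time_ms(data_gb: float, bandwidth_tbps: float) -> float:
    """Lower bound (ms) on streaming data_gb gigabytes once at bandwidth_tbps TB/s.

    Assumes decimal units: 1 TB = 1000 GB.
    """
    seconds = data_gb / (bandwidth_tbps * 1000.0)
    return seconds * 1000.0


WORKING_SET_GB = 70.0  # hypothetical working set, for illustration only

for name, bw in (("H100", 3.35), ("H200", 4.8)):
    # Bandwidth figures are the ones quoted in this article.
    print(f"{name}: {min_read_time_ms(WORKING_SET_GB, bw):.1f} ms per full pass")
# H100: 20.9 ms per full pass
# H200: 14.6 ms per full pass
```

Under this model, the time saved per pass scales directly with the bandwidth ratio. That is why the article stresses bandwidth for memory-intensive workloads.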

NVIDIA said the H200 is expected to ship in the second quarter of 2024, and pricing has not yet been announced. Even so, with computing power in short supply, major tech companies are still expected to stock up aggressively.


NVIDIA launches RTX HDR function: unsupported games use AI filters to achieve HDR gorgeous visual effects

Feb 24, 2024 pm 06:37 PM

NVIDIA launches RTX HDR function: unsupported games use AI filters to achieve HDR gorgeous visual effects

Feb 24, 2024 pm 06:37 PM

According to news from this website on February 23, NVIDIA updated and launched the NVIDIA application last night, providing players with a new unified GPU control center, allowing players to capture wonderful moments through the powerful recording tool provided by the in-game floating window. In this update, NVIDIA also introduced the RTXHDR function. The official introduction is attached to this site: RTXHDR is a new AI-empowered Freestyle filter that can seamlessly introduce the gorgeous visual effects of high dynamic range (HDR) into In games that do not originally support HDR. All you need is an HDR-compatible monitor to use this feature with a wide range of DirectX and Vulkan-based games. After the player enables the RTXHDR function, the game will run even if it does not support HD

Is the performance of RTX5090 significantly improved?

Mar 05, 2024 pm 06:16 PM

Is the performance of RTX5090 significantly improved?

Mar 05, 2024 pm 06:16 PM

Many users are curious about the next-generation brand new RTX5090 graphics card. They don’t know how much the performance of this graphics card has been improved compared to the previous generation. Judging from the current information, the overall performance of this graphics card is still very good. Is the performance improvement of RTX5090 obvious? Answer: It is still very obvious. 1. This graphics card has an acceleration frequency beyond the limit, up to 3GHz, and is also equipped with 192 streaming multiprocessors (SM), which may even generate up to 520W of power. 2. According to the latest news from RedGamingTech, NVIDIARTX5090 is expected to exceed the 3GHz clock frequency, which will undoubtedly play a greater role in performing difficult graphics operations and calculations, providing smoother and more realistic games.

It is reported that NVIDIA RTX 50 series graphics cards are natively equipped with a 16-Pin PCIe Gen 6 power supply interface

Feb 20, 2024 pm 12:00 PM

It is reported that NVIDIA RTX 50 series graphics cards are natively equipped with a 16-Pin PCIe Gen 6 power supply interface

Feb 20, 2024 pm 12:00 PM

According to news from this website on February 19, in the latest video of Moore's LawisDead channel, anchor Tom revealed that Nvidia GeForce RTX50 series graphics cards will be natively equipped with PCIeGen6 16-Pin power supply interface. Tom said that in addition to the high-end GeForceRTX5080 and GeForceRTX5090 series, the mid-range GeForceRTX5060 will also enable new power supply interfaces. It is reported that Nvidia has set clear requirements that in the future, each GeForce RTX50 series will be equipped with a PCIeGen6 16-Pin power supply interface to simplify the supply chain. The screenshots attached to this site are as follows: Tom also said that GeForceRTX5090

NVIDIA RTX 4070 and 4060 Ti FE graphics cards have dropped below the recommended retail price, 4599/2999 yuan respectively

Feb 22, 2024 pm 09:43 PM

NVIDIA RTX 4070 and 4060 Ti FE graphics cards have dropped below the recommended retail price, 4599/2999 yuan respectively

Feb 22, 2024 pm 09:43 PM

According to news from this site on February 22, generally speaking, NVIDIA and AMD have restrictions on channel pricing, and some dealers who privately reduce prices significantly will also be punished. For example, AMD recently punished dealers who sold 6750GRE graphics cards at prices below the minimum price. The merchant was punished. This site has noticed that NVIDIA GeForce RTX 4070 and 4060 Ti have dropped to record lows. Their founder's version, that is, the public version of the graphics card, can currently receive a 200 yuan coupon at JD.com's self-operated store, with prices of 4,599 yuan and 2,999 yuan. Of course, if you consider third-party stores, there will be lower prices. In terms of parameters, the RTX4070 graphics card has a 5888CUDA core, uses 12GBGDDR6X memory, and a bit width of 192bi

NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length mentioned at 128K

Jul 26, 2024 am 08:40 AM

NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length mentioned at 128K

Jul 26, 2024 am 08:40 AM

The open LLM community is an era when a hundred flowers bloom and compete. You can see Llama-3-70B-Instruct, QWen2-72B-Instruct, Nemotron-4-340B-Instruct, Mixtral-8x22BInstruct-v0.1 and many other excellent performers. Model. However, compared with proprietary large models represented by GPT-4-Turbo, open models still have significant gaps in many fields. In addition to general models, some open models that specialize in key areas have been developed, such as DeepSeek-Coder-V2 for programming and mathematics, and InternVL for visual-language tasks.

'AI Factory” will promote the reshaping of the entire software stack, and NVIDIA provides Llama3 NIM containers for users to deploy

Jun 08, 2024 pm 07:25 PM

'AI Factory” will promote the reshaping of the entire software stack, and NVIDIA provides Llama3 NIM containers for users to deploy

Jun 08, 2024 pm 07:25 PM

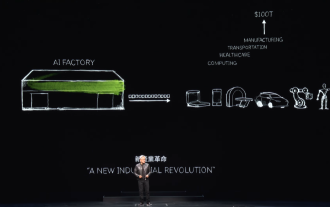

According to news from this site on June 2, at the ongoing Huang Renxun 2024 Taipei Computex keynote speech, Huang Renxun introduced that generative artificial intelligence will promote the reshaping of the full stack of software and demonstrated its NIM (Nvidia Inference Microservices) cloud-native microservices. Nvidia believes that the "AI factory" will set off a new industrial revolution: taking the software industry pioneered by Microsoft as an example, Huang Renxun believes that generative artificial intelligence will promote its full-stack reshaping. To facilitate the deployment of AI services by enterprises of all sizes, NVIDIA launched NIM (Nvidia Inference Microservices) cloud-native microservices in March this year. NIM+ is a suite of cloud-native microservices optimized to reduce time to market

After multiple transformations and cooperation with AI giant Nvidia, why did Vanar Chain surge 4.6 times in 30 days?

Mar 14, 2024 pm 05:31 PM

After multiple transformations and cooperation with AI giant Nvidia, why did Vanar Chain surge 4.6 times in 30 days?

Mar 14, 2024 pm 05:31 PM

Recently, Layer1 blockchain VanarChain has attracted market attention due to its high growth rate and cooperation with AI giant NVIDIA. Behind VanarChain's popularity, in addition to undergoing multiple brand transformations, popular concepts such as main games, metaverse and AI have also earned the project plenty of popularity and topics. Prior to its transformation, Vanar, formerly TerraVirtua, was founded in 2018 as a platform that supported paid subscriptions, provided virtual reality (VR) and augmented reality (AR) content, and accepted cryptocurrency payments. The platform was created by co-founders Gary Bracey and Jawad Ashraf, with Gary Bracey having extensive experience involved in video game production and development.

TrendForce: Nvidia's Blackwell platform products drive TSMC's CoWoS production capacity to increase by 150% this year

Apr 17, 2024 pm 08:00 PM

TrendForce: Nvidia's Blackwell platform products drive TSMC's CoWoS production capacity to increase by 150% this year

Apr 17, 2024 pm 08:00 PM

According to news from this site on April 17, TrendForce recently released a report, believing that demand for Nvidia's new Blackwell platform products is bullish, and is expected to drive TSMC's total CoWoS packaging production capacity to increase by more than 150% in 2024. NVIDIA Blackwell's new platform products include B-series GPUs and GB200 accelerator cards integrating NVIDIA's own GraceArm CPU. TrendForce confirms that the supply chain is currently very optimistic about GB200. It is estimated that shipments in 2025 are expected to exceed one million units, accounting for 40-50% of Nvidia's high-end GPUs. Nvidia plans to deliver products such as GB200 and B100 in the second half of the year, but upstream wafer packaging must further adopt more complex products.