Large model hallucination rate ranking: GPT-4 is the lowest at 3%, and Google Palm is as high as 27.2%

Artificial intelligence is advancing rapidly, but problems keep surfacing. OpenAI's new GPT vision API impresses people one moment and draws complaints the next because of hallucination issues.

Hallucination has always been the fatal flaw of large models. Because training data sets are large and complex, they inevitably contain outdated and erroneous information, which seriously undermines output quality. Heavily repeated information can also bias a large model, which is itself a form of hallucination. But hallucination is not an unsolvable problem. During development, careful use of data sets, strict filtering, construction of high-quality data sets, and optimization of model structure and training methods can alleviate the problem to a certain extent.

With so many popular large models available, how well does each one keep hallucinations in check? The following leaderboard makes the differences clear.

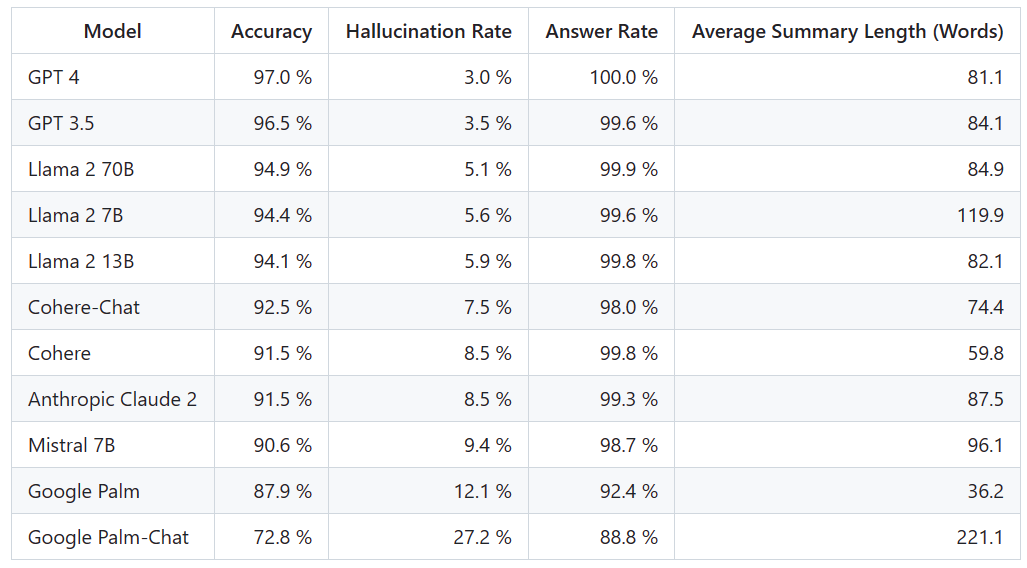

The leaderboard was released by Vectara, a platform focused on artificial intelligence. It was last updated on November 1, 2023. Vectara says it will keep running the hallucination evaluation and update the leaderboard as the models are updated.

Project address: https://github.com/vectara/hallucination-leaderboard

To build the leaderboard, Vectara carried out a factual consistency study and trained a model to detect hallucinations in LLM output. Using this detection model, which is comparable to state-of-the-art models, they gave each LLM 1,000 short documents through its public API and asked it to summarize each document using only the facts presented in the document. Of these 1,000 documents, only 831 were summarized by every model; the rest were rejected by at least one model because of content restrictions. Vectara calculated each model's overall accuracy and hallucination rate on these 831 documents. The rate at which each model responds to prompts rather than refusing is listed in the "Answer Rate" column. None of the content sent to the models is illegal or unsafe, but it contains enough trigger words to set off certain content filters. The documents come mainly from the CNN/Daily Mail corpus.
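The arithmetic behind the procedure above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Vectara's actual code: the input layout (model name mapped to per-document consistency scores, with `None` marking a refusal), the `evaluate` function name, and the 0.5 decision threshold are all hypothetical. It shows how the answer rate is measured on all documents, while accuracy and hallucination rate are measured only on documents that every model summarized.

```python
from typing import Dict, Optional

# Hypothetical input shape: model name -> {document id -> consistency score}.
# A score of None means the model refused to summarize that document.
Scores = Dict[str, Dict[int, Optional[float]]]


def evaluate(scores_by_model: Scores, threshold: float = 0.5) -> Dict[str, Dict[str, float]]:
    """Compute answer rate, accuracy and hallucination rate for each model."""
    # Keep only the documents that every model actually summarized.
    answered_sets = [
        {doc for doc, score in scores.items() if score is not None}
        for scores in scores_by_model.values()
    ]
    common = set.intersection(*answered_sets) if answered_sets else set()
    if not common:
        raise ValueError("no document was summarized by every model")

    report: Dict[str, Dict[str, float]] = {}
    for model, scores in scores_by_model.items():
        # Answer rate uses all documents sent to this model.
        answered = sum(score is not None for score in scores.values())
        # A summary counts as factually consistent when its score reaches
        # the threshold (the 0.5 default is an assumption of this sketch).
        consistent = sum(scores[doc] >= threshold for doc in common)
        report[model] = {
            "answer_rate": answered / len(scores),
            "accuracy": consistent / len(common),
            "hallucination_rate": (len(common) - consistent) / len(common),
        }
    return report


if __name__ == "__main__":
    # Toy data: model_b refuses document 2, so only documents 1, 3 and 4
    # are used for accuracy and hallucination rate.
    toy = {
        "model_a": {1: 0.9, 2: 0.2, 3: 0.8, 4: 0.7},
        "model_b": {1: 0.6, 2: None, 3: 0.1, 4: 0.9},
    }
    for model, metrics in evaluate(toy).items():
        print(model, metrics)
```

Restricting the comparison to the shared set of documents keeps the models comparable: a model that refuses difficult documents is not rewarded with a lower hallucination rate, and its refusals show up in the answer rate instead.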

The hallucination detection model is available at: https://huggingface.co/vectara/hallucination_evaluation_model

In addition, LLMs are increasingly used in RAG (Retrieval Augmented Generation) pipelines to answer user queries, as in Bing Chat and Google's chat integration. In a RAG system, the model is deployed as a summarizer of search results, so this leaderboard is also a good indicator of a model's accuracy when used in RAG.

Given GPT-4's consistently stellar performance, it seems unsurprising that it has the lowest hallucination rate. However, some netizens said they were surprised that the gap between GPT-3.5 and GPT-4 is not large.

OpenAI has since launched GPT-4 Turbo, and some netizens immediately suggested adding it to the leaderboard. We will wait and see what the next leaderboard looks like and whether there are major changes.
