Master four commonly used rate limiting algorithms and you will definitely pass the interview

Under highly concurrent access, such as during e-commerce promotions, traffic keeps pouring in and the frequency of calls between services rises sharply, which can push the system load too high. The stability of the services a system depends on has a large impact on the system itself, and many uncertain factors, such as network interruptions and service outages, can trigger cascading failures (avalanches). Microservice fault-tolerance components typically provide rate limiting, isolation, degradation, circuit breaking, and similar mechanisms that effectively protect a microservice system. This article focuses on rate limiting.

Rate limiting caps traffic so that the rate of operations does not exceed a defined limit. A system can only support a limited amount of concurrency, so when too many requests arrive at the same time, as in flash sales and major promotions, it has to throttle them: when a large number of requests flood in at once and the server cannot serve them all, some must be limited. Rate limiting protects services from accidental or malicious overuse by limiting the number of requests that can reach an API in a given period of time. Without it, any user could bombard your server with requests and starve every other user.

Why rate limit?

- Preventing resource starvation: The most common reason for rate limiting is to increase the availability of API-based services by avoiding resource starvation. Rate limiting prevents load-based denial-of-service (DoS) attacks: even if one user bombards the API with a flood of requests, other users won't starve.

- Security: Rate limiting prevents brute-force attacks on security-sensitive features such as logins and promotional codes. Because the number of requests to these functions is limited per user, brute-force attempts become impractical.

- Controlling operational costs: When resources scale automatically under a pay-per-use model, rate limiting helps control operational costs by placing a virtual cap on scaling. Without it, resources can scale disproportionately and produce runaway bills.

Rate limiting policies

Rate limiting can be applied to the following parameters:

- User: Limits the number of requests a user is allowed within a given time period. User-based rate limiting is one of the most common and intuitive forms of rate limiting.

- Concurrency: Limits the number of parallel sessions a user may hold within a given time frame. Limiting the number of parallel connections also helps mitigate DDoS attacks.

- Location/ID: Useful for running location-based or demographic-focused campaigns. Requests from outside the target demographic can be throttled to increase availability in the target area.

- Server: Server-based rate limiting is a niche strategy. It is usually used when a specific server receives the majority of requests, that is, when the server is tightly coupled to a specific function.
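To make the concurrency policy concrete, here is a minimal Python sketch. It assumes a single process in which threads serve requests, and the names `ConcurrencyLimiter`, `try_enter`, and `leave` are hypothetical, chosen only for illustration. A request takes a slot when it starts and gives it back when it finishes. A user who already holds `max_in_flight` slots is rejected rather than queued.

```python
import threading
from collections import defaultdict


class ConcurrencyLimiter:
    """Hypothetical per-user cap on requests that are in flight at once."""

    def __init__(self, max_in_flight: int):
        self.max_in_flight = max_in_flight
        self._in_flight = defaultdict(int)   # user id -> currently open requests
        self._lock = threading.Lock()

    def try_enter(self, user_id: str) -> bool:
        """Take a slot for this user, or return False if all slots are busy."""
        with self._lock:
            if self._in_flight[user_id] >= self.max_in_flight:
                return False
            self._in_flight[user_id] += 1
            return True

    def leave(self, user_id: str) -> None:
        """Give the slot back when the request finishes."""
        with self._lock:
            if self._in_flight[user_id] > 0:
                self._in_flight[user_id] -= 1


# Usage: always release the slot, even if the handler raises.
limiter = ConcurrencyLimiter(max_in_flight=2)
if limiter.try_enter("alice"):
    try:
        pass  # handle the request here
    finally:
        limiter.leave("alice")
```

Calling `leave` in a `finally` block ensures that a failing handler does not leak a slot and lock the user out permanently.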

Next, we introduce four common rate limiting algorithms.

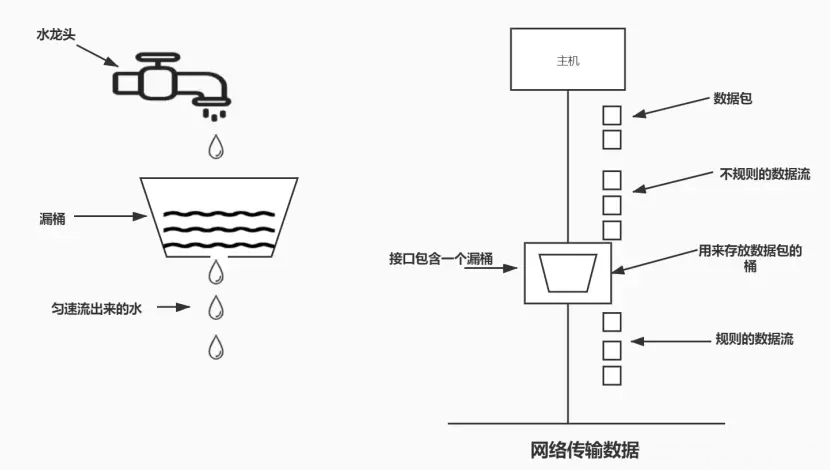

1. Leaky Bucket Algorithm

The leaky bucket algorithm is simple and intuitive: picture a fixed-capacity bucket that leaks water drops at a constant rate. If the bucket is empty, nothing flows out. Water can flow into the bucket at any rate. If the incoming water exceeds the bucket's capacity, the excess overflows (is discarded), while the bucket's capacity stays unchanged.
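The mechanism can be sketched in Python as a bounded queue that is drained at a fixed pace. This is a minimal illustration, not a production implementation. The class and method names are hypothetical, and time is passed in explicitly as seconds so the behaviour is deterministic. A real service would read a monotonic clock and call `leak` from a worker loop.

```python
from collections import deque


class LeakyBucket:
    """Leaky bucket sketch: queued requests leave at a constant rate."""

    def __init__(self, capacity: int, leak_rate: float):
        self.capacity = capacity            # max requests the bucket can hold
        self.interval = 1.0 / leak_rate     # seconds between two releases
        self.queue = deque()
        self.next_release = 0.0             # scheduled time of the next release

    def offer(self, request, now: float) -> bool:
        """Add a request; return False if the bucket is full (overflow)."""
        if len(self.queue) >= self.capacity:
            return False                    # water overflows: request discarded
        if not self.queue:
            # An idle bucket does not save up releases for a later burst.
            self.next_release = max(self.next_release, now)
        self.queue.append(request)
        return True

    def leak(self, now: float) -> list:
        """Return every queued request whose scheduled release time has passed."""
        released = []
        while self.queue and self.next_release <= now:
            released.append(self.queue.popleft())
            self.next_release += self.interval
        return released


bucket = LeakyBucket(capacity=3, leak_rate=1.0)
accepted = [bucket.offer(i, now=0.0) for i in range(5)]   # two overflow
```

Even with a full queue and an otherwise idle server, `leak` never releases more than one request per `interval`. This is the constant outflow that smooths traffic, and it is also why the algorithm cannot absorb bursts.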

The advantage of this algorithm is that it smooths out bursts of requests and processes them at a constant rate. It is also easy to implement on a load balancer and memory-efficient per user, and it keeps traffic to the server nearly uniform regardless of how many requests arrive.

The disadvantage is that a burst of requests can fill the bucket, so new requests are dropped. It also does not guarantee that a request will be completed within a given time.

Advantages:

- Smooth traffic. Because the leaky bucket algorithm processes requests at a fixed rate, it can effectively smooth and shape traffic and avoid traffic bursts and fluctuations (similar to the peak-shaving and valley-filling function of message queues).

- Prevent overloading. When the incoming requests exceed the capacity of the bucket, the requests can be discarded directly to prevent system overload.

Disadvantages:

- Unable to handle burst traffic: Since the leaky bucket's outflow rate is fixed, it cannot absorb bursts. For example, requests cannot be processed any faster even when overall load is light.

- Data may be lost: If the inflow is so large that it exceeds the bucket's capacity, some requests must be discarded. This can be a problem in scenarios that cannot tolerate lost requests.

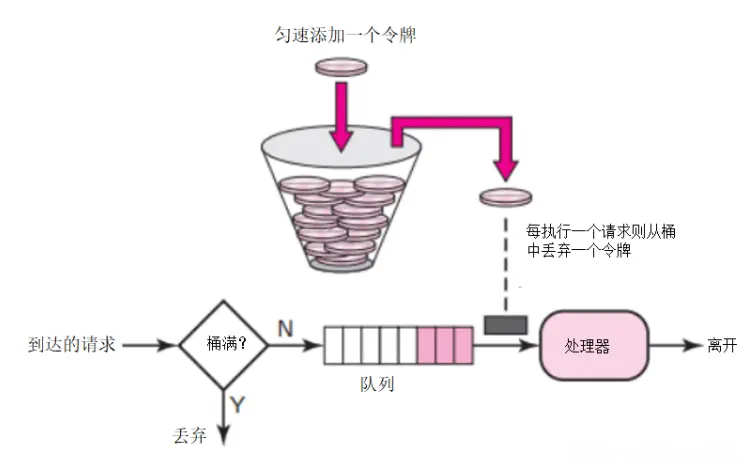

2. Token Bucket Algorithm

Token Bucket Algorithm: Assuming the limit is 2r/s (2 requests per second), a token is added to the bucket at a fixed rate of one every 500 milliseconds. The bucket can hold at most b tokens. When the bucket is full, newly added tokens are discarded. When a packet of n bytes arrives, n tokens are removed from the bucket and the packet is sent to the network. If there are fewer than n tokens in the bucket, no tokens are removed and the packet is rate-limited (either discarded or buffered). The token bucket principle is shown in the figure.

On the rate-limiting server side, the token generation rate and the bucket capacity can be tuned to actual service performance and to the time period. When a higher rate is needed, tokens can be added to the bucket faster.

Tokens are generated at a constant rate, but there is no limit on how quickly requests may take them. This means that under an instantaneous surge, the algorithm can hand out a large number of tokens (up to the number stored in the bucket) in a short time, and taking a token costs very little.
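A common way to implement this is lazy refill. Instead of a timer adding a token every 500 ms, the bucket computes how many tokens accrued since the last call. The Python sketch below uses the 2 r/s rate from the example and a hypothetical capacity b = 4. Starting the bucket full is an assumption, and all names are illustrative. `set_rate` shows the dynamic rate adjustment described above. Time is passed in as seconds to keep the example deterministic.

```python
class TokenBucket:
    """Token bucket sketch with lazy refill (time passed in as seconds)."""

    def __init__(self, rate: float, capacity: float, now: float = 0.0):
        self.rate = rate            # tokens generated per second
        self.capacity = capacity    # b: the most tokens the bucket can hold
        self.tokens = capacity      # assumption: the bucket starts full
        self.last = now             # time of the last refill

    def _refill(self, now: float) -> None:
        # Add tokens generated since the last refill; overflow is discarded.
        if now > self.last:
            self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
            self.last = now

    def set_rate(self, rate: float, now: float) -> None:
        # Settle tokens earned at the old rate before switching to the new one.
        self._refill(now)
        self.rate = rate

    def try_acquire(self, now: float, n: int = 1) -> bool:
        self._refill(now)
        if self.tokens >= n:
            self.tokens -= n        # remove n tokens and let the request through
            return True
        return False                # too few tokens: none removed, request limited


bucket = TokenBucket(rate=2.0, capacity=4.0)
burst = [bucket.try_acquire(0.0) for _ in range(5)]
```

With these parameters, four requests at t = 0 pass immediately because the bucket is full, and the fifth is refused. Half a second later exactly one new token is available. The burst size is bounded by `capacity`, and the long-run throughput is bounded by `rate`.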

When each new request reaches the server, two operations are performed:

- Fetch token: Fetch the current token count for this user. If it exceeds the defined limit, the request is dropped.

- Update token: If the fetched count is below the limit for duration d, accept the request and record the token.

This approach is memory-efficient because the application stores only a small amount of data per user. The drawback is that it can cause race conditions in a distributed environment. Two requests handled by two different application servers may fetch the token count at the same time, both see room under the limit, and both be accepted.
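The fetch and update steps are only safe if they run as one atomic operation. The minimal Python sketch below shows this inside a single process, with threads standing in for concurrent requests. `PerUserLimiter` and its parameters are hypothetical. The state is a per-user count for the current duration d (here `window` seconds). A process-local lock cannot coordinate several application servers. In that case the atomic check-and-update has to happen in the shared store itself, for example with Redis's atomic `INCR` or a Lua script.

```python
import threading


class PerUserLimiter:
    """Hypothetical per-user limiter: at most `limit` requests per `window` seconds.

    Fetch (read the user's count) and update (increment it) happen under one
    lock, so two concurrent requests cannot both pass on the same stale count.
    """

    def __init__(self, limit: int, window: float):
        self.limit = limit
        self.window = window
        self.state = {}                   # user id -> (window index, count)
        self.lock = threading.Lock()

    def allow(self, user_id: str, now: float) -> bool:
        window_id = int(now // self.window)
        with self.lock:
            # Fetch: the user's count for the current window.
            start, used = self.state.get(user_id, (window_id, 0))
            if start != window_id:
                used = 0                  # duration d has elapsed; start over
            if used >= self.limit:
                return False              # over the limit: drop the request
            # Update: record the accepted request.
            self.state[user_id] = (window_id, used + 1)
            return True
```

Without the lock, the read on the "Fetch" line and the write on the "Update" line could interleave between threads, which is the same race described above, on a smaller scale.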

Advantages:

- Can handle burst traffic: When tokens have accumulated (up to a full bucket), a burst of requests can be processed immediately at maximum speed. This is very useful for applications that must handle bursty traffic.

- Limits the average rate: Over the long run, the transmission rate is held to the predefined average rate, i.e. the token generation rate.

- Flexibility: Compared with the leaky bucket algorithm, the token bucket algorithm provides greater flexibility. For example, the rate at which tokens are generated can be dynamically adjusted.

Disadvantages:

- May cause overload: If tokens are generated too quickly, large bursts of traffic can pass and overload the network or service.

- Requires storage space: The token bucket needs some storage to keep track of its tokens, which may waste memory.

- The implementation is slightly complicated: Compared with the counter algorithm, the implementation of the token bucket algorithm is slightly more complicated.

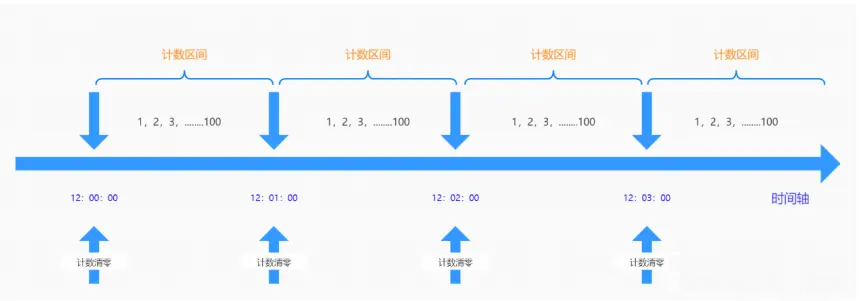

3. Fixed Time Window Algorithm

Within a fixed time window, a set number of requests are allowed in. Requests beyond that number are rejected or queued until the next window. This counter-based rate limiting enforces the limit within each time interval. Suppose a user sends requests just before the end of the previous interval (without exceeding the limit) and again at the start of the current interval (also without exceeding the limit). Each batch is then counted as normal within its own interval. However, the combined requests around the interval boundary can exceed what the system can handle, overloading it.

Because the counter algorithm has a defect at the window boundary (the critical point), it is vulnerable to bursts packed into a very short period around that point. Suppose an interface allows at most 100 requests per minute. No requests arrive during 12:00:00-12:00:59, but 100 requests suddenly arrive during 12:00:59-12:01:00. The next counting cycle then begins, the counter is cleared, and another 100 requests arrive during 12:01:00-12:01:01. In other words, around the boundary, twice the threshold can pass within about two seconds. This overloads backend request processing, exhausts the system's capacity, and can even crash the system.
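A fixed window counter fits in a few lines. In this hypothetical Python sketch, the window index is `int(now // window)`, and the count is cleared whenever the index changes. Times are plain seconds. Treating 59.5 as 12:00:59.5 and 60.5 as 12:01:00.5 reproduces the boundary scenario above.

```python
class FixedWindowCounter:
    """Fixed window counter: at most `limit` requests per `window` seconds."""

    def __init__(self, limit: int, window: float):
        self.limit = limit
        self.window = window
        self.current = None   # index of the window currently being counted
        self.count = 0

    def allow(self, now: float) -> bool:
        index = int(now // self.window)
        if index != self.current:
            # A new window has started: the counter is cleared.
            self.current = index
            self.count = 0
        if self.count >= self.limit:
            return False
        self.count += 1
        return True


limiter = FixedWindowCounter(limit=100, window=60.0)
before = sum(limiter.allow(59.5) for _ in range(100))   # end of the first minute
after = sum(limiter.allow(60.5) for _ in range(100))    # start of the next minute
```

Both batches are fully accepted, so 200 requests pass within about one second even though the limit is 100 per minute.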

Disadvantages:

- The limiting is not smooth enough. For example, with a limit of 3 requests per second, if 3 requests arrive in the first millisecond, the limit is reached immediately and every request for the rest of the window is rejected, resulting in a bad experience.

- Cannot handle window boundary issues. Because flow control is performed within a certain time window, a window boundary effect may occur, that is, a large number of requests may be allowed to pass at the boundary of the time window, resulting in burst traffic.

For example, with a limit of 3 requests per second, 3 requests arrive in the last millisecond of the first second and another 3 arrive in the first millisecond of the next second. Six requests are processed within these two milliseconds, yet the limit is never triggered. A burst like this can overwhelm the server.

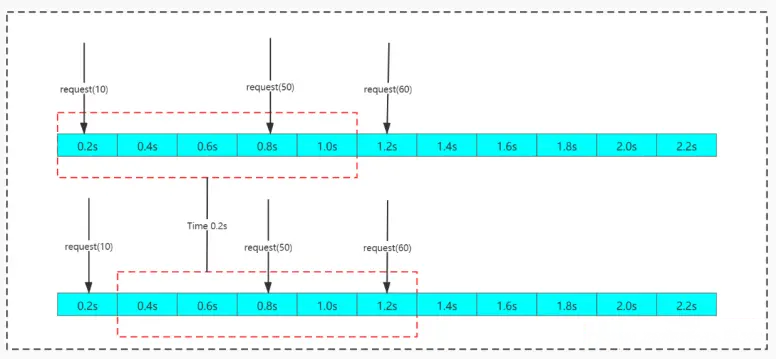

4. Sliding Time Window Algorithm

The sliding window algorithm divides a fixed time period into segments and moves the window forward as time passes. The start point becomes the second point in the time list and the end point advances by one point, and this repeats continuously. This neatly avoids the critical-point problem of the counter.

The sliding window algorithm effectively avoids the critical-point problem of the counter algorithm, but it still works in time segments. Its counting is also more time-consuming than that of the fixed time window algorithm.
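One way to realize the segmented window is to keep a count per segment and drop segments as they slide out of the window. This Python sketch is a minimal illustration under stated assumptions. The names are hypothetical, timestamps are assumed to be non-decreasing seconds, and `segments` sets the granularity: more segments give more accurate limiting at the cost of more bookkeeping. The example replays the boundary burst from the fixed window section with a 60-second window split into 60 one-second segments.

```python
from collections import deque


class SlidingWindowCounter:
    """Sliding window split into equal segments, each with its own count."""

    def __init__(self, limit: int, window: float, segments: int):
        self.limit = limit
        self.segments = segments
        self.segment_len = window / segments
        self.counts = deque()   # (segment index, count), oldest first
        self.total = 0          # requests counted across the whole window

    def allow(self, now: float) -> bool:
        seg = int(now // self.segment_len)
        # Slide: drop segments that are now older than the window.
        while self.counts and self.counts[0][0] <= seg - self.segments:
            _, expired = self.counts.popleft()
            self.total -= expired
        if self.total >= self.limit:
            return False
        # Count the request in the newest segment, creating it if needed.
        if self.counts and self.counts[-1][0] == seg:
            index, count = self.counts[-1]
            self.counts[-1] = (index, count + 1)
        else:
            self.counts.append((seg, 1))
        self.total += 1
        return True


limiter = SlidingWindowCounter(limit=100, window=60.0, segments=60)
before = sum(limiter.allow(59.5) for _ in range(100))
after = sum(limiter.allow(60.5) for _ in range(100))
```

Here the second batch is rejected entirely, because the first batch is still inside the 60-second window at t = 60.5. The extra cost mentioned in the text appears as the loop that expires old segments on each call.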

Disadvantages: The limiting is still not perfectly smooth. For example, with a limit of 3 requests per second, if 3 requests arrive in the first millisecond, the limit is reached and all requests for the remaining window time are rejected, resulting in a poor experience.

Summary

This article introduced four commonly used rate limiting algorithms: the fixed window algorithm, the sliding window algorithm, the leaky bucket algorithm, and the token bucket algorithm. Each has its own characteristics and suitable scenarios, briefly summarized and compared below.

- Token bucket algorithm: Can both smooth traffic and handle burst traffic, and is suitable for scenarios where bursts must be processed.

- Leaky bucket algorithm: Processes traffic more smoothly but cannot cope with burst traffic, and is suitable for scenarios that require smooth traffic.

- Fixed window algorithm: Simple to implement, but the limiting is not smooth enough and it suffers from window boundary problems. It is suitable for scenarios where a simple rate limit is enough.

- Sliding window algorithm: Solves the window boundary problem, but the limiting is still not perfectly smooth. It is suitable for applications that need to control the average request rate.

The above is the detailed content of "Master four commonly used rate limiting algorithms and you will definitely pass the interview". For more information, please follow other related articles on the PHP Chinese website!
