Technology peripherals

Technology peripherals

AI

AI

Real-time image speed increased by 5-10 times, Tsinghua LCM/LCM-LoRA became popular, with over one million views and over 200,000 downloads

Real-time image speed increased by 5-10 times, Tsinghua LCM/LCM-LoRA became popular, with over one million views and over 200,000 downloads

Real-time image speed increased by 5-10 times, Tsinghua LCM/LCM-LoRA became popular, with over one million views and over 200,000 downloads

Generative models enter the "real-time" era?

Using Vincentian diagrams and Tusheng diagrams is no longer a new thing. However, in the process of using these tools, we found that they often run slowly, causing us to wait for a while to get the generated results

But recently, a model called "LCM" changed this In some cases, it can even generate images continuously in real time.

# Source: https://twitter.com/javilopen/status/1724398666889224590

The full name of LCM is Latent Consistency Models (latent consistency model), which was built by researchers from the Interdisciplinary Information Institute of Tsinghua University. Before the release of this model, latent diffusion models (LDM) such as Stable Diffusion were very slow to generate due to the computational complexity of the iterative sampling process. Through some innovative methods, LCM can generate high-resolution images with only a few steps of inference. According to statistics, LCM can increase the efficiency of mainstream Vincentian graph models by 5-10 times, so it can show real-time effects.

- Please click the following link to view the paper: https://arxiv.org/pdf/2310.04378.pdf

- Project address: https://github.com/luosiallen/latent-consistency-model

Technical report link: https://arxiv.org/ pdf/2311.05556.pdf

## Picture source: https://twitter.com/javilopen/status/1724398666889224590

## Picture source: https://twitter.com/javilopen/status/1724398666889224590

##The content that needs to be rewritten is: Image source: https://twitter.com/javilopen/status/1724398708052414748

Our team has completely open sourced the code of LCM, and disclosed the model weight files and online demonstrations based on internal distillation of pre-trained models such as SD-v1.5 and SDXL. In addition, the Hugging Face team has integrated the potential consistency model into the diffusers official repository, and updated the relevant code frameworks of LCM and LCM-LoRA in two consecutive official versions v0.22.0 and v0.23.0, providing an understanding of the potential consistency model. Good support for consistency models. The model published on Hugging Face ranked first in today's popularity list, becoming the most popular Vincent graph model on all platforms and the third most popular model in all categories

Next, we will introduce the two research results of LCM and LCM-LoRA respectively.

LCM: Generate high-resolution images with only a few steps of inference

AIGC era, including diffusion model-based Vincentian maps such as Stable Diffusion and DALL-E 3 The model has received widespread attention. Diffusion models produce high-quality images by adding noise to training data and then reversing the process. However, the diffusion model requires multi-step sampling to generate images, which is a relatively slow process and increases the cost of inference. The slow multi-step sampling problem is a major bottleneck when deploying such models.

The Consistency Model (CM) proposed by Dr. Song Yang of OpenAI this year provides an idea to solve the above problems. It was pointed out that the consistency model is designed to have the ability to be generated in a single step, showing great potential to accelerate the generation of diffusion models. However, since the consistency model is limited to unconditional image generation, many practical applications including Vincentian images, graph-generated images, etc. are still unable to enjoy the potential advantages of this model.

Latent Consistency Model (LCM) was born to solve the above problems. The latent consistency model supports image generation tasks under given conditions, and combines latent coding, classifier-free guidance and many other technologies that are widely used in diffusion models, greatly speeding up the conditional denoising process and providing many practical applications. The task opens a pathway.

LCM Technical Details

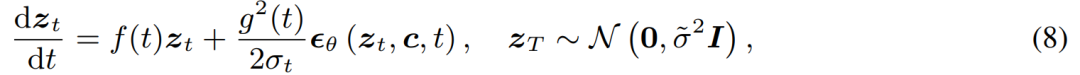

Specifically, the latent consistency model interprets the denoising problem of the diffusion model as solving the augmented probabilistic flow ordinary differential equation shown below the process of.

The solution efficiency can be improved by improving the traditional diffusion model. The traditional method uses numerical iteration to solve ordinary differential equations, but even with a more accurate solver, the accuracy of each step is limited, and about 10 iterations are needed to obtain satisfactory results

With traditional iterative solving Different from ordinary differential equations, the potential consistency model requires a single-step solution to the ordinary differential equation directly and predicts the final solution of the equation. Theoretically, the picture can be generated in a single step

In order to train a latent consistency model, this study proposes that the rapid generation of the model can be achieved with very little resource consumption by fine-tuning parameters of a pre-trained diffusion model (for example, stable diffusion). This distillation process is based on the optimization of the consistency loss function proposed by Dr. Song Yang. In order to obtain better performance and reduce computational overhead on the Vincentian graph task, this paper proposes three key technologies:

Rewritten content: (1) By using a pre-trained autoencoder, the original The image is encoded into a representation in the latent space to reduce redundant information when compressing the image and make the image more semantically consistent

(2) Classifier-free bootstrapping is used as an input parameter of the model to distill into the latent In the consistency model, while enjoying better picture-text consistency brought by classifier-free guidance, since the classifier-free guidance amplitude is distilled into the latent consistency model as an input parameter, it can reduce inference time. The required computational overhead;

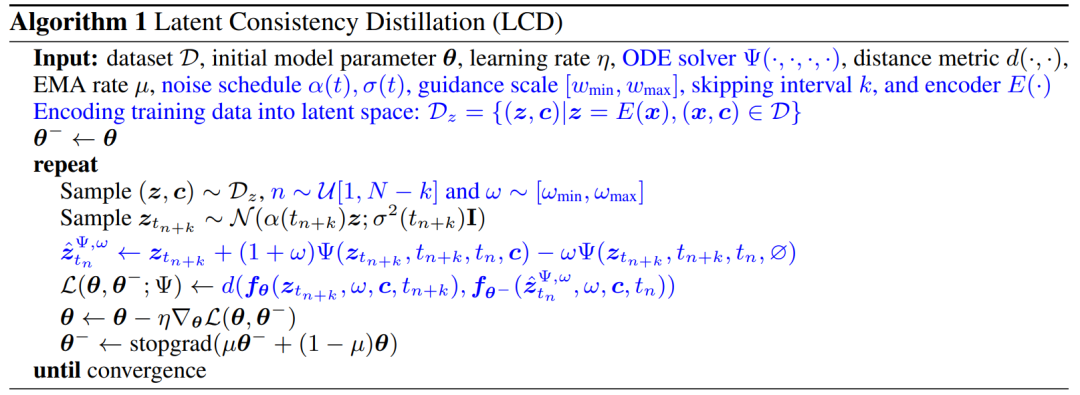

(3) Using the skip strategy to calculate the consistency loss greatly speeds up the distillation process of the potential consistency model. The pseudocode of the distillation algorithm of the latent consistency model is shown in the figure below.

# Qualitative and quantitative results show that the latent consistency model has the ability to quickly generate high-quality images. This model can generate high-quality images in 1 to 4 steps. By comparing the actual inference time and the generation quality indicator FID, it can be seen that compared to the DPM solver, one of the fastest existing samplers, the potential consistency model can accelerate the actual inference time by about 4 times while maintaining the same generation quality.

# Image generated by LCM

#LCM-LORA: A general stable transmission acceleration module

Based on the latent consistency model, the author team then further released their technical report on LCM-LoRA. Since the distillation process of the latent consistency model can be regarded as a fine-tuning process for the original pre-trained model, efficient fine-tuning techniques such as LoRA can be used to train the latent consistency model. Thanks to the resource savings brought by LoRA technology, the author's team conducted distillation on the SDXL model with the largest number of parameters in the Stable Diffusion series, and successfully obtained a potential consensus that can be generated in a very few steps that is comparable to dozens of SDXL steps. sexual model.In the introduction of the paper, the study points out that although the latent diffusion model (LDM) has been successful in generating text images and line drawing images, its slow reverse sampling process limits real-time applications and has an impact on user experience. Current open source models and acceleration technologies cannot yet achieve real-time generation on ordinary consumer-grade GPUs. Methods to accelerate LDM are generally divided into two categories: the first category involves advanced ODE solvers, such as DDIM, DPMSolver and DPM -Solver to speed up the generation process. The second category involves distilling LDM to simplify its functionality. ODE - Solver reduces the inference steps but still requires significant computational overhead, especially when employing classifier-free guidance. Meanwhile, distillation methods such as Guided-Distill, while promising, face practical limitations due to their intensive computational requirements. Finding a balance between the speed and quality of LDM-generated images remains a challenge in the field.

Recently, inspired by the Consistency Model (CM), the Latent Consistency Model (LCM) emerged as a solution to the slow sampling problem in image generation. LCM regards the back-diffusion process as an enhanced probability flow ODE (PF-ODE) problem. This type of model innovatively predicts solutions in the latent space without the need for iterative solutions via numerical ODE solvers. As a result, they enable efficient synthesis of high-resolution images with only 1 to 4 inference steps. In addition, LCM also performs well in terms of distillation efficiency. It only takes 32 hours of training with A100 to complete the minimum step inference

On this basis, a method called latent consistency fine-tuning ( LCF) method, which can fine-tune pre-trained LCM without starting from a teacher diffusion model. For specialized datasets, such as anime, real photos, or fantasy image datasets, additional steps are required, such as distilling pre-trained LDM into LCM using Latent Consistent Distillation (LCD), or fine-tuning LCM directly using LCF. However, this additional training may hinder the rapid deployment of LCM on different datasets, which raises a key question: whether fast, training-free inference can be achieved on custom datasets. To answer the above questions, researchers proposed LCM-LoRA. LCM-LoRA is a general training-free acceleration module that can be directly plugged into various Stable-Diffusion (SD) fine-tuned models or SD LoRA to support fast inference with minimal steps. Compared with early numerical probabilistic flow ODE (PF-ODE) solvers such as DDIM, DPM-Solver and DPM-Solver, LCM-LoRA represents a new class of PF-ODE solver modules based on neural networks. It demonstrates strong generalization capabilities across various fine-tuned SD models and LoRA

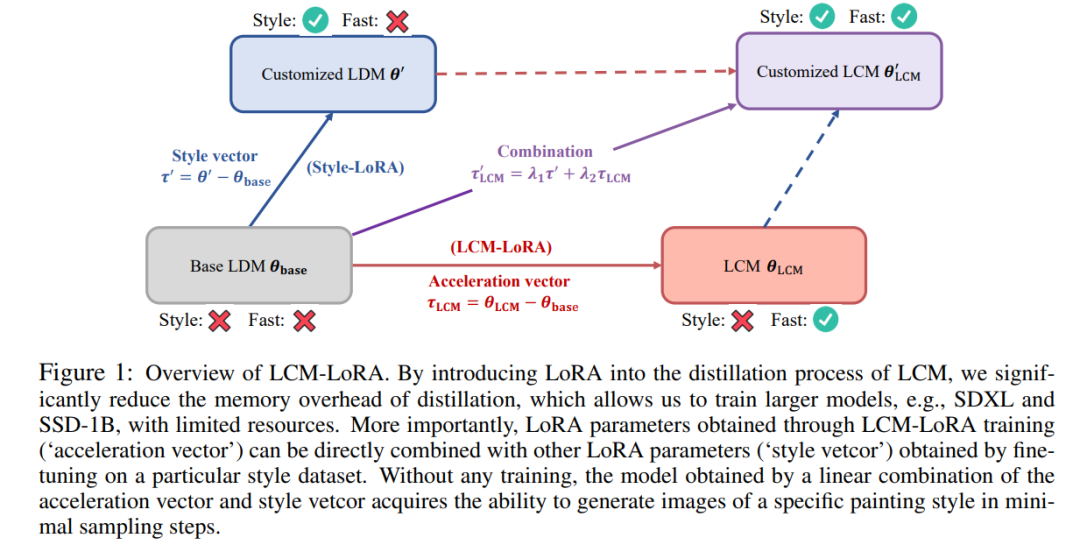

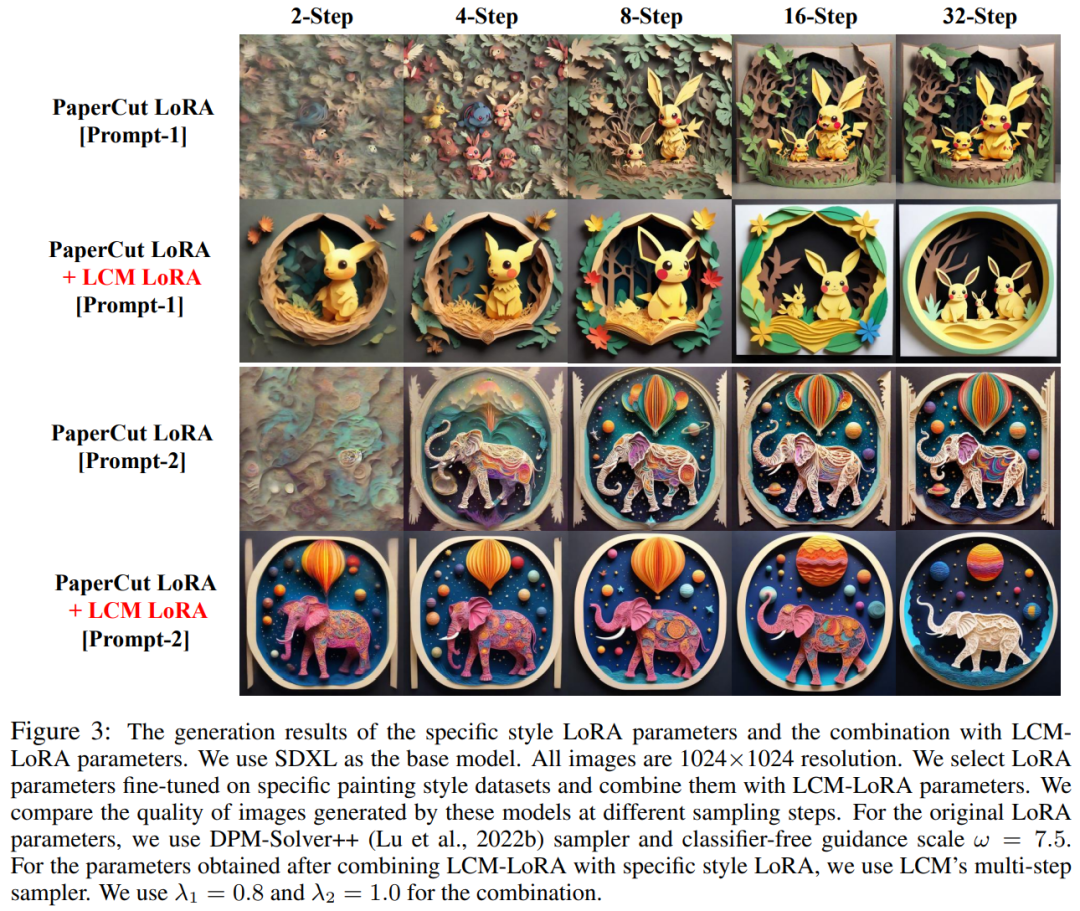

LCM-LoRA overview plot. By introducing LoRA into the distillation process of LCM, the study significantly reduced the memory overhead of distillation, which allowed them to utilize limited resources to train larger models such as SDXL and SSD-1B. More importantly, the LoRA parameters (acceleration vector) obtained through LCM-LoRA training can be directly combined with other LoRA parameters (style vetcor) obtained by fine-tuning on a specific style dataset. Without any training, the model obtained by the linear combination of acceleration vector and style vetcor can generate images of a specific painting style with minimal sampling steps.

LCM-LoRA overview plot. By introducing LoRA into the distillation process of LCM, the study significantly reduced the memory overhead of distillation, which allowed them to utilize limited resources to train larger models such as SDXL and SSD-1B. More importantly, the LoRA parameters (acceleration vector) obtained through LCM-LoRA training can be directly combined with other LoRA parameters (style vetcor) obtained by fine-tuning on a specific style dataset. Without any training, the model obtained by the linear combination of acceleration vector and style vetcor can generate images of a specific painting style with minimal sampling steps.

The technical details of LCM-LoRA can be rewritten as:

Generally speaking, the latent consistency model is trained using single-stage guided distillation Proceeding in this way, this method utilizes the pre-trained autoencoder latent space to distill the guidance diffusion model into LCM. This process involves enhancing the probabilistic flow ODE, which we can think of as a mathematical formula that ensures that the generated samples follow a trajectory that produces high-quality images. It is worth mentioning that the focus of distillation is to maintain the fidelity of these trajectories while significantly reducing the number of sampling steps required. Algorithm 1 provides the pseudocode for the LCD.

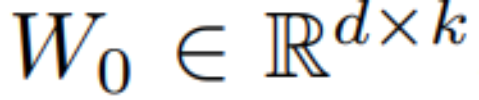

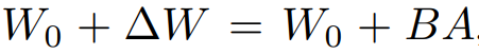

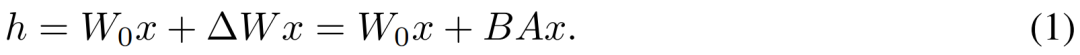

Since the distillation process of LCM is performed on the parameters of the pre-trained diffusion model, we can regard the latent consistency distillation as the fine-tuning process of the diffusion model, thus Some efficient parameter adjustment methods can be used, such as LoRA.LoRA обновляет предварительно обученную матрицу весов, применяя разложение низкого ранга. В частности, для весовой матрицы  ее метод обновления выражается как

ее метод обновления выражается как  , где

, где  во время процесса обучения W_0 остается неизменным, а обновление градиента применяется только к двум параметрам A и B. Следовательно, для входных данных как продукта матриц низкого ранга LoRA значительно уменьшает количество обучаемых параметров, тем самым уменьшая использование памяти.

во время процесса обучения W_0 остается неизменным, а обновление градиента применяется только к двум параметрам A и B. Следовательно, для входных данных как продукта матриц низкого ранга LoRA значительно уменьшает количество обучаемых параметров, тем самым уменьшая использование памяти.

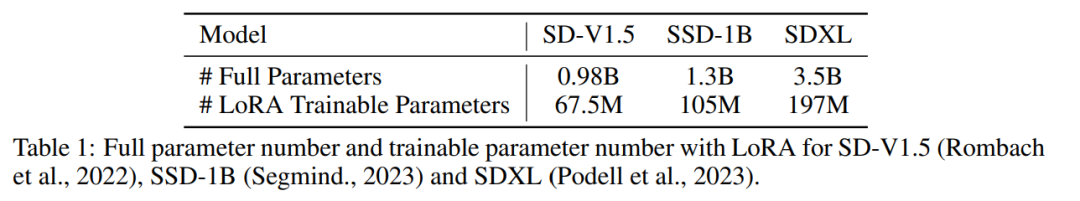

В таблице ниже общее количество параметров полной модели сравнивается с обучаемыми параметрами при использовании технологии LoRA. Очевидно, что за счет включения технологии LoRA в процесс дистилляции LCM количество обучаемых параметров значительно сокращается, что эффективно снижает требования к памяти для обучения.

С помощью серии экспериментов это исследование показывает, что парадигма ЖК-дисплея может быть хорошо адаптирована к более крупным моделям, таким как SDXL и SSD-1B. Показаны результаты генерации различных моделей. на рисунке 2 показано.

Автор обнаружил, что использование технологии LoRA может повысить эффективность процесса дистилляции, а также обнаружил, что параметры LoRA, полученные в результате обучения, можно использовать в качестве общего модуля ускорения, который может быть напрямую использован с другими LoRA. Комбинированное использование параметров

Как показано на рисунке 1 выше, команда авторов обнаружила, что достаточно просто объединить «параметры стиля», полученные путем точной настройки конкретных данных стиля. устанавливается с помощью «параметров ускорения», полученных путем дистилляции латентной консистенции. Путем линейной комбинации можно получить новую модель потенциальной консистенции как с возможностью быстрой генерации, так и с особым стилем. Это открытие дает мощный импульс большому количеству моделей с открытым исходным кодом, которые уже существуют в существующем сообществе открытого исходного кода, позволяя этим моделям даже наслаждаться эффектами ускорения, обеспечиваемыми моделью скрытой согласованности, без какого-либо дополнительного обучения.

Короче говоря, LCM-LoRA — это общий модуль ускорения, не требующий обучения, для моделей со стабильной диффузией (SD). Он функционирует как автономный и эффективный решающий модуль на основе нейронной сети для прогнозирования решений PF-ODE, обеспечивая быстрый вывод с минимальными шагами на различных точно настроенных моделях SD и SD LoRA. Большое количество экспериментов по преобразованию текста в изображение доказывает сильную способность к обобщению и превосходство LCM-LoRA.

Введение командыВсе авторы статьи из Университет Цинхуа, двое. Соавторы — Ло Симиан и Тан Ицинь.

Ло Симиан — студент второго курса магистратуры факультета компьютерных наук и технологий Университета Цинхуа, его научным руководителем является профессор Чжао Син. Он окончил Школу больших данных Фуданьского университета со степенью бакалавра. Направление его исследований — мультимодальные генеративные модели. Он интересуется моделями диффузии, моделями согласованности и ускорением AIGC, а также занимается разработкой генеративных моделей следующего поколения. Ранее он опубликовал множество статей в качестве первого автора на ведущих конференциях, таких как ICCV и NeurIPS.

Тань Ицинь — студент второго курса магистратуры филиала Университета Цинхуа, и его научным руководителем является г-н Хуан Лунбо. Будучи студентом, он учился на факультете электронной техники Университета Цинхуа. Его исследовательские интересы в основном охватывают модели глубокого обучения с подкреплением и диффузии. В ходе предыдущих исследований он опубликовал несколько громких статей в качестве первого автора на научных конференциях, таких как ICLR, и выступил с устными докладами.

Стоит отметить, что один из двух был на курсе продвинутой компьютерной теории преподавателем колледжа Ли Цзянь, идея LCM была предложена и наконец представлена как итоговый курсовой проект. Среди трех преподавателей Ли Цзянь и Хуан Лунбо — доценты Института междисциплинарной информации Цинхуа, а Чжао Син — доцент Института междисциплинарной информации Цинхуа.

Первый ряд (слева направо): Ло Сымянь, Тан Ицинь. Второй ряд (слева направо): Хуан Лунбо, Ли Цзянь, Чжао Син.

The above is the detailed content of Real-time image speed increased by 5-10 times, Tsinghua LCM/LCM-LoRA became popular, with over one million views and over 200,000 downloads. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1377

1377

52

52

The author of ControlNet has another hit! The whole process of generating a painting from a picture, earning 1.4k stars in two days

Jul 17, 2024 am 01:56 AM

The author of ControlNet has another hit! The whole process of generating a painting from a picture, earning 1.4k stars in two days

Jul 17, 2024 am 01:56 AM

It is also a Tusheng video, but PaintsUndo has taken a different route. ControlNet author LvminZhang started to live again! This time I aim at the field of painting. The new project PaintsUndo has received 1.4kstar (still rising crazily) not long after it was launched. Project address: https://github.com/lllyasviel/Paints-UNDO Through this project, the user inputs a static image, and PaintsUndo can automatically help you generate a video of the entire painting process, from line draft to finished product. follow. During the drawing process, the line changes are amazing. The final video result is very similar to the original image: Let’s take a look at a complete drawing.

From RLHF to DPO to TDPO, large model alignment algorithms are already 'token-level'

Jun 24, 2024 pm 03:04 PM

From RLHF to DPO to TDPO, large model alignment algorithms are already 'token-level'

Jun 24, 2024 pm 03:04 PM

The AIxiv column is a column where this site publishes academic and technical content. In the past few years, the AIxiv column of this site has received more than 2,000 reports, covering top laboratories from major universities and companies around the world, effectively promoting academic exchanges and dissemination. If you have excellent work that you want to share, please feel free to contribute or contact us for reporting. Submission email: liyazhou@jiqizhixin.com; zhaoyunfeng@jiqizhixin.com In the development process of artificial intelligence, the control and guidance of large language models (LLM) has always been one of the core challenges, aiming to ensure that these models are both powerful and safe serve human society. Early efforts focused on reinforcement learning methods through human feedback (RL

Topping the list of open source AI software engineers, UIUC's agent-less solution easily solves SWE-bench real programming problems

Jul 17, 2024 pm 10:02 PM

Topping the list of open source AI software engineers, UIUC's agent-less solution easily solves SWE-bench real programming problems

Jul 17, 2024 pm 10:02 PM

The AIxiv column is a column where this site publishes academic and technical content. In the past few years, the AIxiv column of this site has received more than 2,000 reports, covering top laboratories from major universities and companies around the world, effectively promoting academic exchanges and dissemination. If you have excellent work that you want to share, please feel free to contribute or contact us for reporting. Submission email: liyazhou@jiqizhixin.com; zhaoyunfeng@jiqizhixin.com The authors of this paper are all from the team of teacher Zhang Lingming at the University of Illinois at Urbana-Champaign (UIUC), including: Steven Code repair; Deng Yinlin, fourth-year doctoral student, researcher

Posthumous work of the OpenAI Super Alignment Team: Two large models play a game, and the output becomes more understandable

Jul 19, 2024 am 01:29 AM

Posthumous work of the OpenAI Super Alignment Team: Two large models play a game, and the output becomes more understandable

Jul 19, 2024 am 01:29 AM

If the answer given by the AI model is incomprehensible at all, would you dare to use it? As machine learning systems are used in more important areas, it becomes increasingly important to demonstrate why we can trust their output, and when not to trust them. One possible way to gain trust in the output of a complex system is to require the system to produce an interpretation of its output that is readable to a human or another trusted system, that is, fully understandable to the point that any possible errors can be found. For example, to build trust in the judicial system, we require courts to provide clear and readable written opinions that explain and support their decisions. For large language models, we can also adopt a similar approach. However, when taking this approach, ensure that the language model generates

Axiomatic training allows LLM to learn causal reasoning: the 67 million parameter model is comparable to the trillion parameter level GPT-4

Jul 17, 2024 am 10:14 AM

Axiomatic training allows LLM to learn causal reasoning: the 67 million parameter model is comparable to the trillion parameter level GPT-4

Jul 17, 2024 am 10:14 AM

Show the causal chain to LLM and it learns the axioms. AI is already helping mathematicians and scientists conduct research. For example, the famous mathematician Terence Tao has repeatedly shared his research and exploration experience with the help of AI tools such as GPT. For AI to compete in these fields, strong and reliable causal reasoning capabilities are essential. The research to be introduced in this article found that a Transformer model trained on the demonstration of the causal transitivity axiom on small graphs can generalize to the transitive axiom on large graphs. In other words, if the Transformer learns to perform simple causal reasoning, it may be used for more complex causal reasoning. The axiomatic training framework proposed by the team is a new paradigm for learning causal reasoning based on passive data, with only demonstrations

arXiv papers can be posted as 'barrage', Stanford alphaXiv discussion platform is online, LeCun likes it

Aug 01, 2024 pm 05:18 PM

arXiv papers can be posted as 'barrage', Stanford alphaXiv discussion platform is online, LeCun likes it

Aug 01, 2024 pm 05:18 PM

cheers! What is it like when a paper discussion is down to words? Recently, students at Stanford University created alphaXiv, an open discussion forum for arXiv papers that allows questions and comments to be posted directly on any arXiv paper. Website link: https://alphaxiv.org/ In fact, there is no need to visit this website specifically. Just change arXiv in any URL to alphaXiv to directly open the corresponding paper on the alphaXiv forum: you can accurately locate the paragraphs in the paper, Sentence: In the discussion area on the right, users can post questions to ask the author about the ideas and details of the paper. For example, they can also comment on the content of the paper, such as: "Given to

A significant breakthrough in the Riemann Hypothesis! Tao Zhexuan strongly recommends new papers from MIT and Oxford, and the 37-year-old Fields Medal winner participated

Aug 05, 2024 pm 03:32 PM

A significant breakthrough in the Riemann Hypothesis! Tao Zhexuan strongly recommends new papers from MIT and Oxford, and the 37-year-old Fields Medal winner participated

Aug 05, 2024 pm 03:32 PM

Recently, the Riemann Hypothesis, known as one of the seven major problems of the millennium, has achieved a new breakthrough. The Riemann Hypothesis is a very important unsolved problem in mathematics, related to the precise properties of the distribution of prime numbers (primes are those numbers that are only divisible by 1 and themselves, and they play a fundamental role in number theory). In today's mathematical literature, there are more than a thousand mathematical propositions based on the establishment of the Riemann Hypothesis (or its generalized form). In other words, once the Riemann Hypothesis and its generalized form are proven, these more than a thousand propositions will be established as theorems, which will have a profound impact on the field of mathematics; and if the Riemann Hypothesis is proven wrong, then among these propositions part of it will also lose its effectiveness. New breakthrough comes from MIT mathematics professor Larry Guth and Oxford University

LLM is really not good for time series prediction. It doesn't even use its reasoning ability.

Jul 15, 2024 pm 03:59 PM

LLM is really not good for time series prediction. It doesn't even use its reasoning ability.

Jul 15, 2024 pm 03:59 PM

Can language models really be used for time series prediction? According to Betteridge's Law of Headlines (any news headline ending with a question mark can be answered with "no"), the answer should be no. The fact seems to be true: such a powerful LLM cannot handle time series data well. Time series, that is, time series, as the name suggests, refers to a set of data point sequences arranged in the order of time. Time series analysis is critical in many areas, including disease spread prediction, retail analytics, healthcare, and finance. In the field of time series analysis, many researchers have recently been studying how to use large language models (LLM) to classify, predict, and detect anomalies in time series. These papers assume that language models that are good at handling sequential dependencies in text can also generalize to time series.