A new step towards high-quality image generation: Google's UFOGen ultra-fast sampling method

Over the past year, text-to-image diffusion models, with Stable Diffusion as the best-known example, have transformed visual creation. Countless users have improved their productivity with images produced by diffusion models. However, slow generation is a common problem for diffusion models. Because a diffusion model relies on multi-step denoising to gradually turn the initial Gaussian noise into an image, it requires many evaluations of the network, which makes generation very slow. As a result, large-scale text-to-image diffusion models are poorly suited to applications that depend on real-time response and interactivity. With the introduction of a series of techniques, the number of steps required to sample from a diffusion model has dropped from the initial few hundred to a few dozen, or even to only 4-8.
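To make the cost concrete, here is a minimal Python sketch of a multi-step sampling loop. The `CountingDenoiser` class and its shrink-toward-zero update are hypothetical stand-ins for a real denoising network; the only point is that each sampling step costs one network evaluation.

```python
import random


class CountingDenoiser:
    """Toy stand-in for a denoising network; counts how often it is called."""

    def __init__(self):
        self.calls = 0

    def __call__(self, x, t):
        self.calls += 1
        # Hypothetical behaviour: pull the sample slightly towards 0.
        return 0.9 * x


def sample(denoiser, num_steps, seed=0):
    """Run a schematic multi-step denoising loop on a scalar sample."""
    rng = random.Random(seed)
    x = rng.gauss(0.0, 1.0)              # start from Gaussian noise
    for t in reversed(range(num_steps)):
        x = denoiser(x, t)               # one network evaluation per step
    return x


def network_evaluations(num_steps):
    """Return how many network calls a sampling run with num_steps needs."""
    denoiser = CountingDenoiser()
    sample(denoiser, num_steps)
    return denoiser.calls
```

In this sketch a 50-step sampler calls the network 50 times and a one-step sampler calls it once, which is why reducing the step count translates almost directly into faster generation.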

Recently, a research team from Google proposed UFOGen, a diffusion model variant that can sample extremely quickly. By fine-tuning Stable Diffusion with the method proposed in the paper, UFOGen can generate high-quality images in just one step. At the same time, Stable Diffusion's downstream applications, such as image-to-image generation and ControlNet, are retained.

Paper: https://arxiv.org/abs/2311.09257

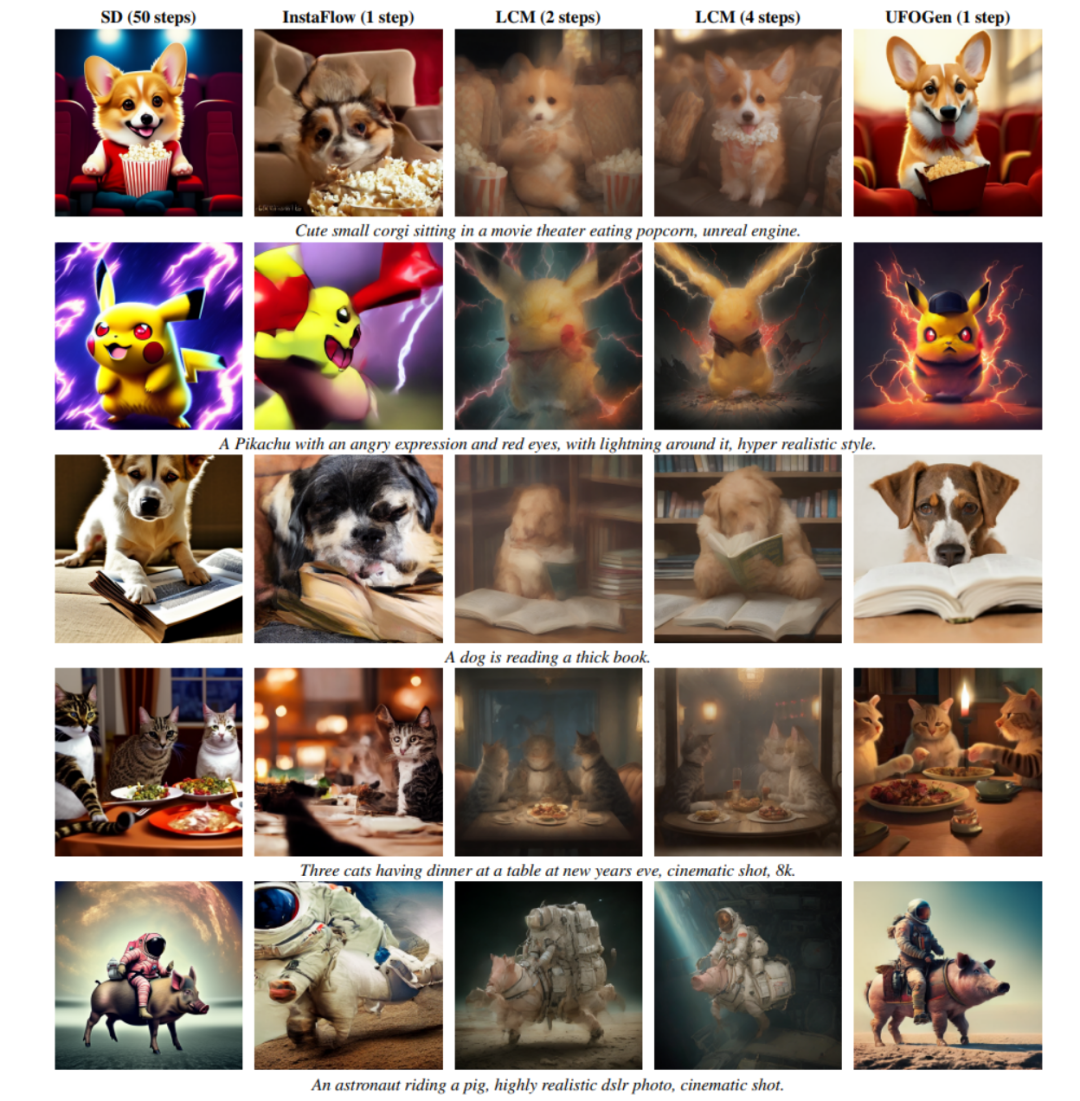

As the sample images show, UFOGen can generate high-quality, diverse pictures in just one step.

Improving the generation speed of diffusion models is not a new research direction. Previous work has mainly followed two directions. The first is to design more efficient numerical methods, so that the sampling ODE of the diffusion model can be solved with fewer discrete steps. For example, the DPM-Solver series of numerical solvers proposed by Zhu Jun's team at Tsinghua University has proven very effective on Stable Diffusion, reducing the number of solver steps from DDIM's default of 50 to fewer than 20. The second is to use knowledge distillation to compress the model's ODE-based sampling path into a smaller number of steps. Examples include guided distillation, one of the best paper candidates at CVPR 2023, and the recently popular Latent Consistency Model (LCM). LCM in particular can reduce the number of sampling steps to only 4 by distilling a consistency objective, and it has spawned many real-time generation applications.
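As a rough illustration of the first direction, the sketch below applies the deterministic DDIM update over a sub-sampled set of timesteps. The linear beta schedule, the function names, and the toy noise predictor (which is exact for data concentrated on a single point `x0_true`) are assumptions made for this example. DPM-Solver uses higher-order updates that are not shown here.

```python
import math


def make_alpha_bars(T=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta) for an assumed linear beta schedule.

    Returns a list of length T + 1 with alpha_bars[0] == 1 (no noise).
    """
    bars = [1.0]
    for s in range(T):
        beta = beta_start + (beta_end - beta_start) * s / (T - 1)
        bars.append(bars[-1] * (1.0 - beta))
    return bars


def make_point_mass_eps_model(x0_true, alpha_bars):
    """Toy noise predictor: exact when all data sits at the point x0_true."""
    def eps_model(x, t):
        a_t = alpha_bars[t]
        return (x - math.sqrt(a_t) * x0_true) / math.sqrt(1.0 - a_t)
    return eps_model


def ddim_sample(eps_model, x_T, alpha_bars, num_steps):
    """Deterministic DDIM sampling using num_steps evenly spaced timesteps."""
    T = len(alpha_bars) - 1
    ts = [round(T * i / num_steps) for i in range(num_steps, -1, -1)]
    x = x_T
    for t, t_prev in zip(ts[:-1], ts[1:]):
        a_t, a_prev = alpha_bars[t], alpha_bars[t_prev]
        eps = eps_model(x, t)                                  # network call
        x0_pred = (x - math.sqrt(1.0 - a_t) * eps) / math.sqrt(a_t)
        x = math.sqrt(a_prev) * x0_pred + math.sqrt(1.0 - a_prev) * eps
    return x


alpha_bars = make_alpha_bars()
eps_model = make_point_mass_eps_model(2.0, alpha_bars)
x_50 = ddim_sample(eps_model, 0.5, alpha_bars, num_steps=50)
x_20 = ddim_sample(eps_model, 0.5, alpha_bars, num_steps=20)
```

Because the toy predictor is exact, both runs land on the data point regardless of step count. With a learned network the prediction is only approximate, so the error introduced by taking fewer, larger steps is what better solvers try to control.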

However, Google's research team did not follow either of these directions with UFOGen. Instead, it took a different approach and built on the idea of a hybrid of diffusion models and GANs, proposed more than a year earlier. The team argues that ODE-based sampling and distillation have fundamental limitations that make it difficult to compress the number of sampling steps to the minimum. Achieving one-step generation therefore requires a new idea.

A hybrid model here means a method that combines a diffusion model with a generative adversarial network (GAN). The approach was first proposed by NVIDIA's research team at ICLR 2022 under the name DDGAN ("Tackling the Generative Learning Trilemma with Denoising Diffusion GANs"). DDGAN is motivated by a weakness of ordinary diffusion models: they make a Gaussian assumption about the denoising distribution. Simply put, a diffusion model assumes that the denoising distribution (the conditional distribution that, given a noisy sample, produces a less noisy sample) is a simple Gaussian. However, the theory of stochastic differential equations shows that this assumption holds only as the denoising step size approaches 0. A diffusion model therefore needs a large number of repeated denoising steps to keep each step small, which makes generation slow.
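The following toy calculation illustrates why the Gaussian assumption breaks down for large steps. It assumes, purely for illustration, that the less noisy sample takes only the two values +1 and -1 with equal probability, and that one noising step with variance `beta` is applied; the function name and the chosen numbers are hypothetical.

```python
import math


def posterior_weights(x_t, beta):
    """Posterior probability of the two modes (+1, -1) given a noisy x_t.

    Forward step assumed here: x_t = sqrt(1 - beta) * x_prev + sqrt(beta) * noise,
    with x_prev equal to +1 or -1 with probability 1/2 each.
    """
    s = math.sqrt(1.0 - beta)
    log_plus = -(x_t - s) ** 2 / (2.0 * beta)
    log_minus = -(x_t + s) ** 2 / (2.0 * beta)
    m = max(log_plus, log_minus)          # subtract the max for numerical stability
    w_plus = math.exp(log_plus - m)
    w_minus = math.exp(log_minus - m)
    z = w_plus + w_minus
    return w_plus / z, w_minus / z


small_step = posterior_weights(0.3, beta=0.01)   # tiny noise step
large_step = posterior_weights(0.3, beta=0.9)    # very large noise step
```

With the small step, essentially all posterior mass sits on one mode, so a single Gaussian is a fair approximation. With the large step, both modes keep substantial weight, and the denoising distribution is clearly bimodal, which a single Gaussian cannot represent.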

DDGAN proposes abandoning the Gaussian assumption for the denoising distribution and instead using a conditional GAN to model it. Because GANs have strong representational power and can model complex distributions, a larger denoising step size can be used, which reduces the number of steps. However, DDGAN replaces the stable reconstruction training objective of the diffusion model with a GAN training objective, which can easily make training unstable and makes it hard to extend to more complex tasks. At NeurIPS 2023, the same Google research team that created UFOGen proposed SIDDM (paper title: Semi-Implicit Denoising Diffusion Models), which reintroduces the reconstruction objective into DDGAN's training objective, making training more stable and greatly improving generation quality over DDGAN.
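The idea of pairing an adversarial term with a reconstruction term can be sketched schematically as follows. This is not the exact objective from the DDGAN or SIDDM papers: the non-saturating GAN loss, the mean squared error, and the `recon_weight` parameter are assumptions chosen to keep the example small.

```python
import math


def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def mean_squared_error(pred, target):
    """Average squared difference between two equal-length sequences."""
    return sum((p - q) ** 2 for p, q in zip(pred, target)) / len(pred)


def generator_loss(disc_logit_fake, x_pred, x_target, recon_weight=1.0):
    """Schematic generator loss: adversarial term plus reconstruction term.

    disc_logit_fake: discriminator logit for the generated sample.
    x_pred, x_target: generated and reference samples as flat lists.
    recon_weight: hypothetical weight balancing the two terms.
    """
    adversarial = softplus(-disc_logit_fake)      # equals -log(sigmoid(logit))
    reconstruction = mean_squared_error(x_pred, x_target)
    return adversarial + recon_weight * reconstruction


loss_perfect = generator_loss(0.0, [1.0, 2.0], [1.0, 2.0])
loss_off = generator_loss(0.0, [1.0, 2.0], [0.0, 0.0])
```

The reconstruction term gives the generator a dense, stable signal even when the discriminator is uninformative, which is the intuition behind adding it back to a purely adversarial objective.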

SIDDM, the predecessor of UFOGen, can generate high-quality images on research datasets such as CIFAR-10 and ImageNet in only 4 steps. But SIDDM leaves two problems unsolved: first, it cannot reach the ideal of one-step generation; second, it is not straightforward to extend it to text-to-image generation, the area that attracts the most attention. Google's research team proposed UFOGen to solve these two problems.

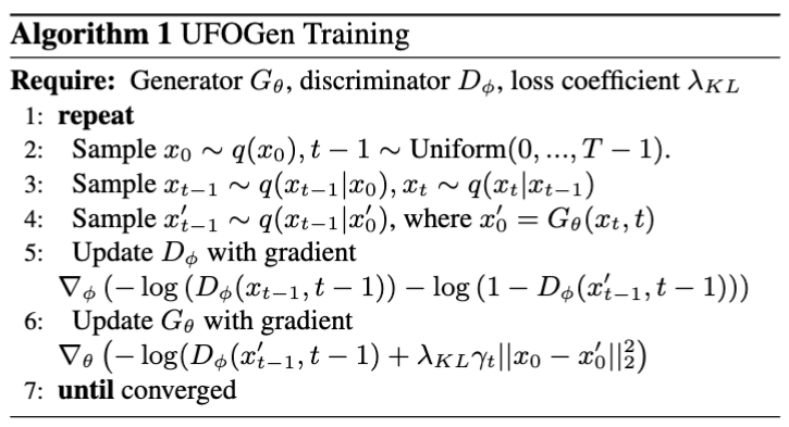

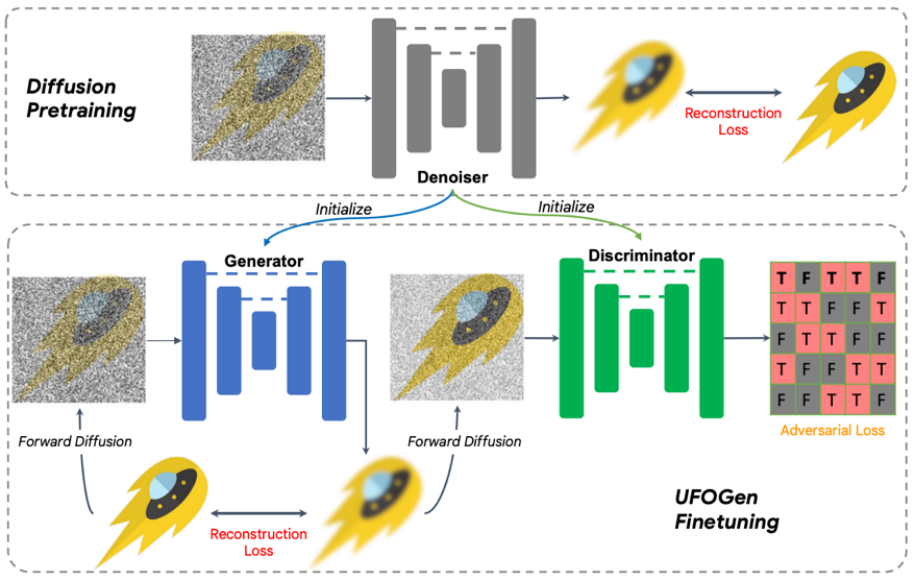

Specifically, for the first problem, the team found through simple mathematical analysis that changing the parameterization of the generator and the way the reconstruction loss is computed allows the model, in theory, to generate in one step. For the second problem, the team proposed initializing from the existing Stable Diffusion model so that UFOGen can be extended to text-to-image tasks faster and more effectively. Notably, SIDDM had already proposed that both the generator and the discriminator use the UNet architecture. Building on this design, both the generator and the discriminator of UFOGen are initialized from the Stable Diffusion model. This makes the most of the knowledge inside Stable Diffusion, especially about the relationship between images and text, which is difficult to learn through adversarial training alone. The accompanying figures show the training algorithm and a diagram of the model.
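A minimal sketch of what one-step sampling looks like at inference time is given below. It assumes the generator predicts a clean sample directly from a noisy input, and that a few-step variant re-noises that prediction with the forward process before denoising again. The `ToyGenerator` class, the `alpha_bars` values, and the timestep choices are hypothetical and are not taken from the paper.

```python
import math
import random


class ToyGenerator:
    """Hypothetical generator: always predicts the clean sample 2.0."""

    def __init__(self):
        self.calls = 0

    def __call__(self, x, t):
        self.calls += 1
        return 2.0


def sample(generator, alpha_bars, timesteps, seed=0):
    """Sample with a generator that predicts the clean sample x0.

    timesteps is a decreasing list such as [1000, 500]; a single entry
    corresponds to one-step generation.
    """
    rng = random.Random(seed)
    x = rng.gauss(0.0, 1.0)                      # start from pure Gaussian noise
    for i, t in enumerate(timesteps):
        x0 = generator(x, t)                     # one network evaluation
        if i == len(timesteps) - 1:
            return x0                            # final step: return the clean prediction
        a = alpha_bars[timesteps[i + 1]]
        # Re-noise the prediction to the next, lower noise level, then repeat.
        x = math.sqrt(a) * x0 + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0)


alpha_bars = {1000: 0.0, 500: 0.3}               # assumed signal levels per timestep

one_step_gen = ToyGenerator()
one_step = sample(one_step_gen, alpha_bars, [1000])

two_step_gen = ToyGenerator()
two_step = sample(two_step_gen, alpha_bars, [1000, 500])
```

With a single timestep the loop makes exactly one generator call, which is the setting the article describes as one-step generation; extra timesteps trade more network evaluations for a chance to refine the sample.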

It is worth noting that earlier work also used GANs for text-to-image generation: NVIDIA's StyleGAN-T and Adobe's GigaGAN both scale up the basic StyleGAN architecture, which also lets them generate images in one step. The UFOGen authors point out that, beyond generation quality, UFOGen has several advantages over this earlier GAN-based work:

1. In text-to-image tasks, pure GAN training is very unstable. The discriminator must not only judge the texture of the image but also understand how well the image matches the text, which is a very difficult task, especially in the early stages of training. Previous GAN models such as GigaGAN therefore introduced a large number of auxiliary losses to help training, which made training and hyperparameter tuning extremely difficult. UFOGen, by introducing a reconstruction loss, lets the GAN play only a supporting role, which makes training very stable.

2. Training a GAN from scratch is not only unstable but also extremely expensive, especially for tasks like text-to-image generation that require large amounts of data and many training steps. Because two sets of parameters must be updated at the same time, GAN training consumes more time and memory than training a diffusion model. UFOGen's design allows its parameters to be initialized from Stable Diffusion, which greatly reduces training time. Convergence usually takes only tens of thousands of training steps.

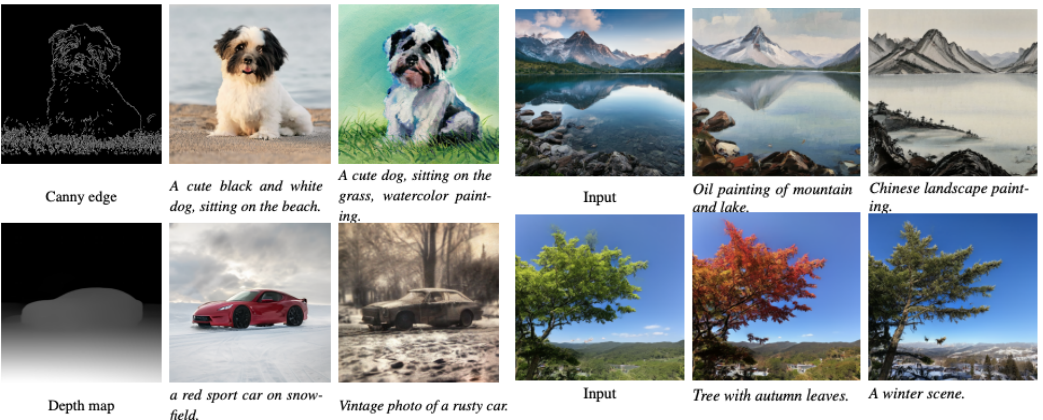

3. One of the attractions of text-to-image diffusion models is that they can be applied to other tasks, including applications that need no fine-tuning, such as image-to-image generation, and applications that do need fine-tuning, such as controllable generation. Previous GAN models have been hard to extend to these downstream tasks because fine-tuning GANs is difficult. In contrast, UFOGen keeps the framework of a diffusion model and can therefore be applied to these tasks more easily. The figure shows examples of UFOGen's image-to-image and controllable generation. Note that these generations require only one sampling step.

Experiments show that UFOGen needs only one sampling step to generate high-quality images that match their text descriptions. Compared with recently proposed fast sampling methods for diffusion models, such as InstaFlow and LCM, UFOGen is highly competitive. Even compared with Stable Diffusion using 50 sampling steps, the samples generated by UFOGen are not visibly inferior. Here are some comparison results:

Summary

The Google team proposed a powerful model called UFOGen, built by improving an existing hybrid of diffusion models and GANs. The model is fine-tuned from Stable Diffusion and, while retaining the ability to generate images in one step, is also suitable for various downstream applications. As one of the early works to achieve ultra-fast text-to-image synthesis, UFOGen opens a new path in the field of efficient generative models.
