Five major models of generative AI: VAEs, GANs, Diffusion, Transformers, NeRFs

Choosing the right GenAI model for a task requires understanding the technology behind each model and its specific capabilities. Read on to learn about five GenAI models: VAEs, GANs, diffusion models, transformers and NeRFs.

Until recently, most AI models focused on better processing, analyzing and interpreting data. Recent breakthroughs in so-called generative neural network models have led to a range of new tools for creating all kinds of content, from photos and paintings to poetry, code, screenplays and films.

Overview of Top Generative AI Models

In the mid-2010s, researchers saw new prospects for generative AI models with the development of variational autoencoders (VAEs), generative adversarial networks (GANs) and diffusion models. Transformers, introduced in 2017, are groundbreaking neural networks that can analyze large data sets at scale and made it possible to build large language models (LLMs). In 2020, researchers introduced Neural Radiance Fields (NeRF), a technique that can generate 3D content from 2D images.

The development of these generative models is ongoing: researchers' tweaks often lead to big improvements, and the remarkable progress shows no sign of slowing down. "Model architectures are constantly changing, and new model architectures will continue to be developed," said White, a professor at the University of California, Berkeley.

Each model has its own strengths. Currently, diffusion models perform exceptionally well in image and video synthesis, transformers perform well with text, and GANs are good at expanding small data sets with plausible synthetic samples. But the best choice of model always depends on the specific use case.

All models are different, and AI researchers and machine learning (ML) engineers must choose the right one for the use case and the required performance, taking into account the model's computational cost and possible constraints on memory and budget.

Transformer models in particular have driven the recent progress and excitement in generative models. "The latest breakthroughs in AI models come from pre-training on large amounts of data and using self-supervised learning to train models without explicit labels," said Adnan Masood, chief AI architect at the digital transformation consultancy UST.

For example, OpenAI's generative pre-trained transformer (GPT) family is among the largest and most powerful models in this category. One of them, GPT-3, contains 175 billion parameters.
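To make the memory constraints mentioned above more concrete, here is a back-of-the-envelope sketch. It assumes GPT-3's published size of 175 billion parameters and counts only the bytes needed to hold the weights; activations, optimizer state and other runtime overhead, which add considerably more, are deliberately ignored.

```python
def weight_memory_gb(num_params: int, bytes_per_param: int) -> float:
    """Memory needed just to hold the weights, in decimal gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


GPT3_PARAMS = 175_000_000_000  # GPT-3's published parameter count

# 16-bit floats use 2 bytes per parameter, 32-bit floats use 4
for precision, nbytes in [("fp16", 2), ("fp32", 4)]:
    print(f"{precision}: {weight_memory_gb(GPT3_PARAMS, nbytes):.0f} GB")
# fp16: 350 GB
# fp32: 700 GB
```

Even at 16-bit precision, the weights alone far exceed the memory of a typical single GPU, which is one reason model size weighs so heavily in model selection.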

Main applications of top generative AI models

Masood explained that the top generative AI models use a variety of techniques and methods to generate entirely new data. The main features and uses of these models include:

- VAEs use an encoder-decoder architecture to generate new data and are often used for image and video generation, such as synthetic faces for privacy protection.

- GANs use a generator and a discriminator to generate new data and are often used in video game development to create realistic game characters.

- Diffusion models add and then remove noise to produce high-quality images with a high level of detail, creating near-lifelike images of natural scenes.

- Transformers efficiently process sequential data in parallel for machine translation, text summarization, and image creation.

- NeRFs provide a new approach to 3D scene reconstruction using neural representations.

Let's go over each method in more detail.

VAE

VAEs were developed in 2014 to encode data more efficiently using neural networks.

VAEs learn to represent information more efficiently, said Yael Lev, head of AI at Sisense, an AI-driven analytics platform. A VAE consists of two parts: an encoder that compresses the data and a decoder that restores the data to its original form. VAEs are well suited to generating new instances from smaller pieces of information, repairing noisy images or data, detecting anomalies in data and filling in missing information.

However, VAEs also tend to produce blurry or low-quality images, according to UST's Masood. Another problem is that the low-dimensional latent space used to capture the data's structure can be intricate and difficult to work with. These shortcomings may limit the effectiveness of VAEs in applications that require high-quality images or a clear understanding of the latent space. The next iteration of VAEs will likely focus on improving the quality of generated data, speeding up training and exploring their applicability to sequential data.
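The encoder-decoder idea can be sketched through the two terms a VAE is typically trained on: a reconstruction error and a penalty that keeps the latent code close to a standard normal distribution. The minimal sketch below assumes a diagonal Gaussian latent space and a squared-error reconstruction term (a common simplification). The encoder and decoder networks are left out, so `mu` and `log_var` are hypothetical stand-ins for encoder outputs.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Draw z = mu + sigma * eps with eps ~ N(0, 1).

    In an autodiff framework, writing the sample this way keeps it
    differentiable with respect to mu and log_var, so the encoder can be trained.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


def reconstruction_error(x, x_hat):
    """Squared error between the input and the decoder's reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))


def vae_loss(x, x_hat, mu, log_var):
    """Simplified negative ELBO: reconstruct well, but keep the latent code near N(0, 1)."""
    return reconstruction_error(x, x_hat) + kl_to_standard_normal(mu, log_var)


# Hypothetical encoder outputs for a 2-dimensional latent space
mu, log_var = [0.5, -0.2], [0.0, -1.0]
z = reparameterize(mu, log_var, random.Random(0))  # this z would be fed to the decoder
print(z, kl_to_standard_normal(mu, log_var))
```

Decoding different `z` values drawn near the learned distribution is how a trained VAE generates new instances; the KL term is what keeps the latent space smooth enough to sample from.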

GANs

GANs were developed in 2014 and are used to generate realistic faces and printed numbers. GANs pit a neural network that generates realistic content against a neural network that detects fake content. "Gradually, the two networks converge to produce generated images that are indistinguishable from the original data," said Anand Rao, global AI leader at PwC.

GANs are commonly used for image generation, image editing, super-resolution, data augmentation, style transfer, music generation and deepfake creation. One problem with GANs is that they can suffer from mode collapse, in which the generator produces limited and repetitive outputs, making them difficult to train. GANs are also difficult to optimize and stabilize, and there is no clear control over the samples they generate. Masood said the next generation of GANs will focus on improving the stability and convergence of the training process, extending their applicability to other fields and developing more effective evaluation metrics.
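The adversarial setup Rao describes comes down to two opposing objectives. This minimal sketch uses the standard binary cross-entropy formulation together with the commonly used "non-saturating" generator loss. The discriminator scores passed in are hypothetical probabilities, and the networks and gradient updates are omitted.

```python
import math


def bce(p, target, eps=1e-12):
    """Binary cross-entropy for one predicted probability p and a 0/1 target."""
    p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(d_real, d_fake):
    """Low when real samples score near 1 and generated samples score near 0."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: low when generated samples score near 1."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)


# Hypothetical discriminator scores (probability that each sample is real)
print(discriminator_loss([0.9, 0.8], [0.2, 0.1]))
print(generator_loss([0.2, 0.1]))
```

When the discriminator cannot tell real from fake (every score is 0.5), its loss equals 2 ln 2 ≈ 1.386. Training alternates between updating the discriminator to lower `discriminator_loss` and updating the generator to lower `generator_loss`, which is where the instability and mode collapse described above can arise.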

Diffusion

The diffusion model was developed in 2015 by a team of researchers at Stanford University to simulate and then reverse entropy and noise. Diffusion techniques provide a way to simulate a phenomenon, such as how a substance like salt diffuses into a liquid, and then reverse it. The same model also helps generate new content starting from a blank image.
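The "add noise" half of a diffusion model has a simple closed form. The sketch below follows the later DDPM-style formulation rather than the original 2015 paper, and its linear noise schedule (from 1e-4 to 0.02 over 1,000 steps) is an assumed, commonly used default. The learned reverse network, which removes the noise step by step to produce an image, is not shown.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_t, increasing linearly from beta_start to beta_end."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t: the share of the signal left at step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def noisy_sample(x0, t, alpha_bars, rng):
    """Jump straight to step t: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise."""
    a = alpha_bars[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in x0]


alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
rng = random.Random(0)
x0 = [0.8, -0.3, 0.5]                          # a tiny hypothetical "image"
print(noisy_sample(x0, 10, alpha_bars, rng))   # still close to x0
print(noisy_sample(x0, 999, alpha_bars, rng))  # essentially pure noise
```

A generative diffusion model is trained to undo this process, so at sampling time it can start from pure noise and denoise its way to a new image.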

Diffusion models are currently the first choice for image generation and are the foundation of popular image generation services such as DALL-E 2, Stable Diffusion, Midjourney and Imagen. They are also used in pipelines that generate speech, video and 3D content. Diffusion techniques can also be used for data imputation, in which missing data is predicted and generated. Many applications pair diffusion models with LLMs for text-to-image or text-to-video generation. For example, Stable Diffusion 2 uses a contrastive language-image pre-training (CLIP) model as its text encoder, and it also adds models for depth and upscaling.

Masood predicts that further improvements to models such as Stable Diffusion may focus on improving negative prompts, enhancing the ability to generate images in the style of a specific artist and improving images of celebrities.
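In Stable Diffusion-style pipelines, negative prompts are commonly implemented through classifier-free guidance. At each denoising step the model predicts the noise twice, once conditioned on the prompt and once on the negative prompt (or an empty prompt), and the result is pushed away from the negative prediction. A minimal sketch, in which the noise predictions are hypothetical plain lists standing in for model outputs and `guidance_scale` is a tunable assumption:

```python
def guided_noise(eps_cond, eps_neg, guidance_scale):
    """Combine two noise predictions with classifier-free guidance.

    eps_cond: noise predicted with the user's prompt
    eps_neg:  noise predicted with the negative (or empty) prompt
    A scale of 1 returns eps_cond; larger scales move further away from eps_neg.
    """
    return [n + guidance_scale * (c - n) for c, n in zip(eps_cond, eps_neg)]


# Hypothetical noise predictions for a tiny 3-value "image"
eps_prompt = [0.2, -0.1, 0.4]
eps_negative = [0.1, 0.1, 0.1]
print(guided_noise(eps_prompt, eps_negative, guidance_scale=7.5))
```

The denoiser then uses the guided prediction in place of a single conditioned one, which is why a better-handled negative prompt directly changes what ends up in the image.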

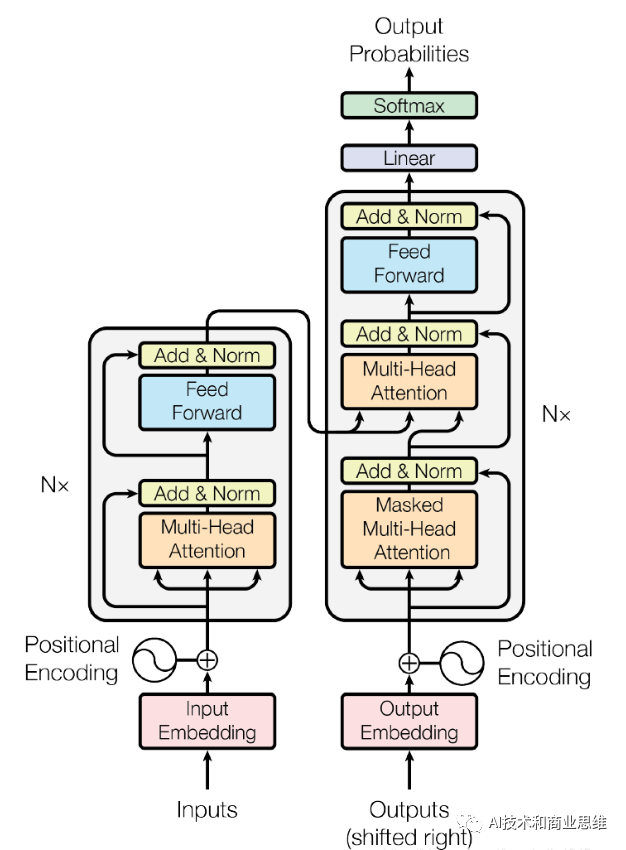

Transformers

The Transformer model was developed in 2017 by a team at Google Brain to improve language translation. Transformers are well suited to processing information in which order matters, can process data in parallel and can leverage unlabeled data to scale to very large models.
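The mechanism that lets transformers handle a whole sequence in parallel is attention. Below is a minimal, dependency-free sketch of scaled dot-product attention as described in the 2017 paper. Real implementations use batched matrix multiplications on GPUs, learned query/key/value projections, multiple heads and masking, all of which are omitted here; feeding the raw tokens in as queries, keys and values is a simplifying assumption.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtracting the max avoids overflow in exp
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Each output row depends only on its own query plus all keys and values,
    so every position can be computed independently (in parallel), unlike
    an RNN that must walk through the sequence one step at a time.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return outputs


# Hypothetical 3-token sequence of 2-dimensional vectors
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(attention(x, x, x))  # self-attention: Q, K and V all come from the same tokens
```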

Transformers can be applied to text summarization, chatbots, recommendation engines, language translation, knowledge bases, personalized recommendations (via preference models), sentiment analysis and named entity recognition for identifying people, places and things. They can also be used for speech recognition, as in OpenAI's Whisper, as well as for object detection in videos and images, image captioning, text classification and dialogue generation.

Although transformers are versatile, they do have limitations. They can be expensive to train and require large data sets. The resulting models are also quite large, which makes it challenging to identify the sources of bias or inaccurate results. "Their complexity also makes it difficult to explain their inner workings, hindering their interpretability and transparency," Masood said.

Figure: Transformer model architecture

NeRF

NeRF was developed in 2020 to capture 3D representations of light fields in neural networks. The first implementation was very slow and took several days to capture its first 3D image.
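A NeRF stores a scene as a neural network that returns a volume density and a color for any 3D point and viewing direction; an image is then formed by compositing samples along each camera ray. The sketch below shows only that compositing (volume rendering) step, following the usual NeRF formula in which each sample contributes T_i (1 - exp(-sigma_i * delta_i)) c_i. It assumes the densities and colors were already produced by a hypothetical trained network and that samples are ordered from near to far.

```python
import math


def render_ray(densities, colors, deltas):
    """Composite samples along one camera ray, front to back.

    densities: volume density sigma_i at each sample (>= 0)
    colors:    (r, g, b) color emitted at each sample
    deltas:    distance between consecutive samples
    """
    pixel = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet blocked by earlier samples
    for sigma, rgb, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha
        for c in range(3):
            pixel[c] += weight * rgb[c]
        transmittance *= 1.0 - alpha
    return pixel


# Hypothetical samples along one ray: empty space, then a red surface, then a green one behind it
densities = [0.0, 50.0, 50.0]
colors = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
deltas = [0.1, 0.1, 0.1]
print(render_ray(densities, colors, deltas))  # mostly red: the red sample occludes the green one
```

Because a full image needs this computation for many samples on every pixel's ray, each requiring a network query, rendering and training are computationally expensive.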

In 2022, however, researchers at Nvidia found a way to generate a new model in about 30 seconds. These models can represent 3D objects in a few megabytes with comparable quality, whereas other techniques may require gigabytes. They promise more efficient techniques for capturing and generating 3D objects in the metaverse. Alexander Keller, director of research at Nvidia, said NeRFs could eventually be as important to 3D graphics as digital cameras are to modern photography. Masood said NeRFs show great potential in robotics, urban mapping, autonomous navigation and virtual reality applications. However, NeRFs remain computationally expensive, and combining multiple NeRFs into larger scenes is challenging. Today, the only viable use case for NeRFs is converting images into 3D objects or scenes. Despite these limitations, Masood predicts that NeRFs will find new roles within the GenAI ecosystem in basic image processing tasks such as denoising, deblurring, upsampling, compression and image editing.

It is important to note that these models are a work in progress, and researchers are looking for ways to improve individual models and to combine them with other models and processing techniques. Lev predicts that generative models will become more general-purpose, that applications will expand beyond traditional domains, and that users will be able to guide AI models more effectively and better understand how they work.

There is also work underway on multimodal models that use retrieval methods to call on libraries of models optimized for specific tasks. Lev also hopes that generative models will develop other capabilities, such as making API calls and using external tools. For example, an LLM fine-tuned on a company's call center knowledge could answer questions and perform troubleshooting, such as resetting a customer's modem or sending an email once the problem is resolved.
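One common pattern for letting an LLM "make API calls" is to have the model emit a structured tool call that the surrounding application validates and executes. The sketch below is hypothetical throughout: the JSON shape, the tool names and the functions are illustrative, they do not reproduce any particular vendor's function-calling API, and the model itself is replaced by a hard-coded string.

```python
import json


# Hypothetical tools exposed to the model; a real system would call actual services here.
def reset_modem(customer_id: str) -> str:
    return f"modem reset requested for customer {customer_id}"


def send_email(to: str, subject: str) -> str:
    return f"email '{subject}' queued for {to}"


TOOLS = {"reset_modem": reset_modem, "send_email": send_email}


def dispatch(model_output: str) -> str:
    """Parse a JSON tool call produced by the model and run the matching function.

    Only names registered in TOOLS can be called, so the model cannot trigger arbitrary code.
    """
    call = json.loads(model_output)
    name = call.get("tool")
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name!r}")
    return TOOLS[name](**call.get("arguments", {}))


# Stand-in for what a fine-tuned call center model might emit
model_output = '{"tool": "reset_modem", "arguments": {"customer_id": "C-1001"}}'
print(dispatch(model_output))  # modem reset requested for customer C-1001
```

Keeping an explicit allowlist of tools is a deliberate design choice: the model only proposes actions, while the application decides what is actually allowed to run.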

In fact, something more efficient may eventually replace today's popular model architectures. "When new architectures emerge, diffusion and transformer models may no longer be useful," White said. We saw this with the introduction of transformers, whose approach to natural language applications made long short-term memory (LSTM) networks and recurrent neural networks (RNNs) less attractive.

Some people predict that the generative AI ecosystem will evolve into a three-layer model. The base layer is a set of foundation models for text, images, speech and code. These models ingest large amounts of data, are built on large deep learning models and incorporate human judgment. Next, industry- and function-specific domain models will improve the processing of healthcare, legal or other types of data. At the top layer, companies will build proprietary models using proprietary data and subject matter expertise. These three layers will disrupt the way teams develop models and usher in a new era of models as a service.

How to choose a generative AI model: top considerations

According to Sisense's Lev, the top considerations when choosing between models include the following:

The problem you are trying to solve.

Select a model known to be suitable for your specific task. For example, use transformers for language tasks and NeRF for 3D scenes.

Quantity and quality of data. Diffusion requires a lot of good data to work properly, while VAEs work better with less data.

Quality of results. GANs are better for sharp, detailed images, while VAEs are better for smoother results.

Difficulty of training. GANs can be difficult to train, while VAEs and diffusion models are easier.

Computing resource requirements. Both NeRF and diffusion models require a lot of computing power to work properly.

Need for control and understanding. If you want more control over the results or a better understanding of how the model works, VAEs may be a better choice than GANs.
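The considerations above can be condensed into a toy rule of thumb. This is purely illustrative: the `Requirements` fields and the order of the rules are assumptions that simplify Lev's list, and in practice a choice like this should be checked by trying candidate models on your own data.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    task: str                  # "text", "image" or "3d"
    plenty_of_good_data: bool  # large, clean training set available?
    need_sharp_detail: bool    # crisp, detailed images rather than smooth ones?
    need_control: bool         # want more control over, or insight into, the model?
    limited_compute: bool      # tight compute budget?


def suggest_model(req: Requirements) -> str:
    """Toy rule of thumb loosely following the considerations above."""
    if req.task == "text":
        return "Transformer"
    if req.task == "3d":
        return "NeRF"  # compute-hungry, but the natural fit for 3D scenes
    if req.task != "image":
        raise ValueError(f"unsupported task: {req.task!r}")
    if req.need_control or not req.plenty_of_good_data:
        return "VAE"  # copes better with less data and offers more control
    if req.limited_compute:
        return "GAN" if req.need_sharp_detail else "VAE"  # avoid compute-heavy diffusion
    return "Diffusion"  # plenty of good data and compute available


print(suggest_model(Requirements("image", True, True, False, True)))  # GAN
```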
