NVIDIA CEO Jensen Huang: The wave of artificial intelligence is emerging, and the industry is ushering in a new starting point

[CNMO News] NVIDIA CEO Jensen Huang recently said that the world is at the beginning of the artificial intelligence (AI) wave and that he is confident about it. He expects the growth momentum of data centers to continue into 2025, and emphasized that the company is expanding its chip supply chain to meet this growing demand.

Jensen Huang

According to CNMO, Huang shared his views on artificial intelligence in a university speech in May this year. He said that AI brings huge opportunities to enterprises: those that quickly adapt to and make use of AI will become more competitive, while those that fail to make good use of it will face decline. He likened the current moment to the early stages of personal computers, the internet, mobile devices and cloud technology, but argued that the impact of AI is more fundamental and that every layer of computing will be rewritten.

Huang pointed out that artificial intelligence has changed the way software is written and executed, and that it is an opportunity for renewal across every part of the computer industry. He predicted that within the next ten years, industries will replace trillions of dollars' worth of traditional computers with new AI computers.

Huang's views reflect the technology industry's optimism about the future of AI and point to its huge potential and influence. As the technology develops, AI is being applied ever more widely: from self-driving cars and smart homes to medical diagnosis and financial transactions, its influence keeps expanding. This also brings challenges, including how to ensure the safety and fairness of AI and how to deal with the resulting employment issues.

ByteDance's Jianying launches SVIP super membership: 499 yuan for continuous annual subscription, providing a variety of AI functions

Jun 28, 2024 am 03:51 AM

This site reported on June 27 that Jianying is a video editing application developed by FaceMeng Technology, a subsidiary of ByteDance. It relies on the Douyin platform, is mainly used to produce short-video content for that platform's users, and is compatible with iOS, Android, Windows, macOS and other operating systems. Jianying has officially announced an upgrade of its membership system and launched a new SVIP tier, which includes a variety of advanced AI features such as intelligent translation, intelligent highlighting, intelligent packaging and digital human synthesis. In terms of price, Jianying SVIP costs 79 yuan per month or 599 yuan per year (note on this site: equivalent to 49.9 yuan per month); the continuous monthly subscription is 59 yuan per month, and the continuous annual subscription is 499 yuan per year (equivalent to 41.6 yuan per month). In addition, Jianying also stated that in order to improve the user experience, those who have subscribed to the original VIP

Context-augmented AI coding assistant using RAG and SEM-RAG

Jun 10, 2024 am 11:08 AM

Improve developer productivity, efficiency, and accuracy by incorporating retrieval-augmented generation and semantic memory into AI coding assistants. Translated from "Enhancing AI Coding Assistants with Context Using RAG and SEM-RAG" by Janakiram MSV. While basic AI programming assistants are helpful, they often fail to provide the most relevant and correct code suggestions because they rely on a general understanding of the programming language and the most common patterns of writing software. The code these assistants generate is suitable for the problem they are asked to solve, but it often does not conform to the coding standards, conventions and styles of individual teams. This often results in suggestions that need to be modified or refined before the code can be accepted into the application

Can fine-tuning really allow LLMs to learn new things? Introducing new knowledge may make the model produce more hallucinations

Jun 11, 2024 pm 03:57 PM

Large language models (LLMs) are trained on huge text corpora, from which they acquire large amounts of real-world knowledge. This knowledge is embedded in their parameters and can then be used when needed. The knowledge of these models is "reified" at the end of training: once pre-training ends, the model effectively stops learning. The model is then aligned or fine-tuned to learn how to leverage this knowledge and respond more naturally to user questions. Sometimes, however, the model's knowledge is not enough, and although the model can access external content through RAG, adapting it to new domains through fine-tuning is considered beneficial. This fine-tuning is performed using input written by human annotators or generated by other LLMs, where the model encounters additional real-world knowledge and integrates it

Xiaomi Mi Watch S4 Sport officially announced to be released on July 19, using a one-piece titanium body

Jul 18, 2024 am 12:52 AM

On July 16, Xiaomi CEO Lei Jun issued an announcement: "At 7 pm on July 19, this Friday night, I will hold the 5th 'Lei Jun Annual Lecture' with the theme of 'Courage' and talk about the ins and outs of building a car and the story of more than three years of ups and downs." Xiaomi then began teasing a number of new products. According to CNMO, Xiaomi's first professional sports smartwatch, the Xiaomi Mi Watch S4 Sport, will also be officially released on July 19. According to the official introduction, the Xiaomi Mi Watch S4 Sport makes breakthrough innovations in design: a one-piece titanium body with sapphire glass on the front and back. This design not only ensures the durability of the watch, but also gives it a high-end texture and visual

NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length raised to 128K

Jul 26, 2024 am 08:40 AM

The open LLM community is in an era in which a hundred flowers bloom and compete. You can see Llama-3-70B-Instruct, Qwen2-72B-Instruct, Nemotron-4-340B-Instruct, Mixtral-8x22B-Instruct-v0.1 and many other excellent models. However, compared with proprietary large models represented by GPT-4-Turbo, open models still lag significantly in many areas. In addition to general-purpose models, some open models that specialize in key areas have been developed, such as DeepSeek-Coder-V2 for programming and mathematics, and InternVL for visual-language tasks.

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

Editor | ScienceAI

Question answering (QA) datasets play a vital role in promoting natural language processing (NLP) research. High-quality QA datasets can be used not only to fine-tune models, but also to effectively evaluate the capabilities of large language models (LLMs), especially their ability to understand and reason about scientific knowledge. Although there are currently many scientific QA datasets covering medicine, chemistry, biology and other fields, these datasets still have some shortcomings. First, the data format is relatively simple, and most items are multiple-choice questions. They are easy to evaluate, but they limit the range of answers the model can choose from and cannot fully test the model's ability to answer scientific questions. In contrast, open-ended Q&A

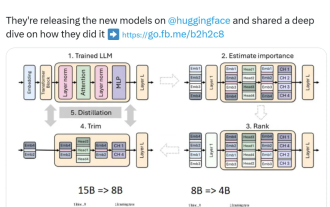

Nvidia plays with pruning and distillation: halving the parameters of Llama 3.1 8B to achieve better performance with the same size

Aug 16, 2024 pm 04:42 PM

The rise of small models. Last month, Meta released the Llama 3.1 series of models, which includes Meta's largest model to date, the 405B model, and two smaller models with 70 billion and 8 billion parameters respectively. Llama 3.1 is considered to usher in a new era of open source. However, although the new-generation models are powerful, they still require a large amount of computing resources when deployed. Another trend has therefore emerged in the industry: developing small language models (SLMs) that perform well enough on many language tasks and are also very cheap to deploy. Recently, NVIDIA research has shown that structured weight pruning combined with knowledge distillation can progressively obtain smaller language models from an initially larger model. Turing Award Winner, Meta Chief A

Compliant with NVIDIA SFF-Ready specification, ASUS launches Prime GeForce RTX 40 series graphics cards

Jun 15, 2024 pm 04:38 PM

According to news from this site on June 15, ASUS has recently launched the Prime series of GeForce RTX 40 series "Ada" graphics cards, whose size complies with NVIDIA's latest SFF-Ready specification. This specification requires that the size of the graphics card does not exceed 304 mm x 151 mm x 50 mm (length x height x thickness). The Prime series GeForce RTX 40 cards launched by ASUS this time include the RTX 4060 Ti, RTX 4070 and RTX 4070 SUPER, but currently do not include the RTX 4070 Ti SUPER or RTX 4080 SUPER. This series of RTX 40 graphics cards adopts a common circuit board design with dimensions of 269 mm x 120 mm x 50 mm. The main differences between the three graphics cards are