Tencent reveals the latest large model training method that saves 50% of computing power costs

Amid a shortage of computing power, improving the efficiency of large model training and inference while reducing costs has become a major focus of the industry.

On November 23, Tencent disclosed that Angel, the self-developed machine learning framework behind its Hunyuan large model, has been upgraded again. Large model training efficiency is now 2.6 times that of mainstream open-source frameworks, and training models with hundreds of billions of parameters can save 50% of computing power costs. The upgraded Angel supports ultra-large-scale training on 10,000 cards in a single task, further improving the performance and efficiency of Tencent Cloud's HCC dedicated computing cluster for large models.

Angel also provides a one-stop platform covering everything from model development to application deployment. Users can quickly call Tencent Hunyuan large model capabilities through APIs or fine-tuning, which speeds up building large model applications. More than 300 Tencent products and scenarios, including Tencent Meeting, Tencent News, and Tencent Video, have been connected to Tencent Hunyuan for internal testing.

These capabilities are now available externally through Tencent Cloud. Built on the upgraded Angel machine learning framework, the Tencent Cloud TI platform offers improved training and inference acceleration, lets customers train and fine-tune on their own data in one place, and supports building dedicated intelligent applications on top of Tencent's Hunyuan large model.

Self-developed machine learning framework upgraded, further improving large model training and inference efficiency

With the arrival of the large model era, model parameters have grown exponentially and now reach the trillion scale. Large models are also evolving from supporting a single modality and task to supporting multiple tasks across multiple modalities. Under this trend, large model training requires enormous computing power, far beyond what a single chip can deliver, and the communication overhead of multi-card distributed training is substantial. Improving hardware utilization has therefore become an important prerequisite for developing domestic large model technology and making it practical.
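The article does not say which collective communication algorithms Angel or HCC use. To make "communication overhead" concrete, here is a minimal sketch under a textbook assumption: gradients are synchronized with a ring all-reduce, in which each of N GPUs sends (and receives) 2(N-1)/N times the gradient size per synchronization. The 10-billion-parameter model and the 50 GB/s per-GPU bandwidth below are hypothetical inputs, not figures from the article.

```python
def ring_allreduce_bytes_per_gpu(grad_bytes: float, num_gpus: int) -> float:
    """Bytes each GPU sends (and receives) in one ring all-reduce.

    A ring all-reduce is a reduce-scatter followed by an all-gather; each
    phase takes N-1 steps that move grad_bytes / N per step.
    """
    if num_gpus < 1:
        raise ValueError("num_gpus must be >= 1")
    return 2 * (num_gpus - 1) / num_gpus * grad_bytes


def allreduce_time_seconds(grad_bytes: float, num_gpus: int, link_bytes_per_s: float) -> float:
    """Bandwidth-only lower bound on all-reduce time (ignores latency and overlap)."""
    return ring_allreduce_bytes_per_gpu(grad_bytes, num_gpus) / link_bytes_per_s


if __name__ == "__main__":
    # Hypothetical: 16-bit gradients (2 bytes each) of a 10-billion-parameter model
    grad_bytes = 10e9 * 2
    for n in (8, 64, 1024):
        gb = ring_allreduce_bytes_per_gpu(grad_bytes, n) / 1e9
        # 50 GB/s is an illustrative per-GPU bandwidth, not a measured figure
        t = allreduce_time_seconds(grad_bytes, n, 50e9)
        print(f"{n:5d} GPUs: {gb:6.2f} GB per GPU, >= {t:.2f} s per sync")
```

Under this model, per-GPU traffic approaches twice the gradient size no matter how many cards are added, so scaling out does not shrink each card's communication load. That is one reason network protocols and communication strategies get dedicated optimization at large scale.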

To train large models, Tencent developed a machine learning training framework called AngelPTM, which accelerates and optimizes the entire process of pre-training, fine-tuning, and reinforcement learning. AngelPTM adopts the latest FP8 mixed-precision training technology and combines deeply optimized 4D parallelism with a ZeROCache mechanism to optimize storage. It is compatible with a variety of domestic hardware and can train larger models faster with fewer resources.
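ZeROCache is Tencent's own mechanism and its internals are not described in the article. As background only, the sketch below reproduces the per-GPU memory accounting for model states from the original ZeRO (Zero Redundancy Optimizer) design, assuming mixed-precision Adam: 2 bytes of 16-bit weights, 2 bytes of 16-bit gradients, and 12 bytes of FP32 optimizer state (master weights, momentum, variance) per parameter. Partitioning these states across N data-parallel GPUs is what lets a larger model fit on the same hardware.

```python
# Bytes per parameter under mixed-precision Adam (ZeRO paper accounting)
PARAM_BYTES = 2   # 16-bit weights
GRAD_BYTES = 2    # 16-bit gradients
OPTIM_BYTES = 12  # FP32 master weights + Adam momentum + Adam variance


def model_state_bytes_per_gpu(num_params: float, num_gpus: int, stage: int) -> float:
    """Per-GPU memory for model states (excludes activations and buffers).

    stage 0: no partitioning (plain data parallelism)
    stage 1: optimizer states partitioned across GPUs
    stage 2: optimizer states + gradients partitioned
    stage 3: optimizer states + gradients + parameters partitioned
    """
    n = num_gpus
    p, g, o = PARAM_BYTES, GRAD_BYTES, OPTIM_BYTES
    if stage == 0:
        per_param = p + g + o
    elif stage == 1:
        per_param = p + g + o / n
    elif stage == 2:
        per_param = p + (g + o) / n
    elif stage == 3:
        per_param = (p + g + o) / n
    else:
        raise ValueError("stage must be 0, 1, 2 or 3")
    return num_params * per_param


if __name__ == "__main__":
    # Illustrative: a 100-billion-parameter model on 1,024 GPUs
    for stage in range(4):
        gb = model_state_bytes_per_gpu(100e9, 1024, stage) / 1e9
        print(f"stage {stage}: {gb:8.2f} GB per GPU")
```

For a 100-billion-parameter model, unpartitioned model states take 1,600 GB per GPU (16 bytes times 10^11), while stage 3 across 1,024 GPUs brings that to about 1.56 GB per GPU, before counting activations.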

In April 2023, Tencent Cloud released a new generation of HCC high-performance computing clusters for large models, with three times the performance of the previous generation. Beyond hardware upgrades, HCC includes system-level optimizations of network protocols, communication strategies, AI frameworks, and model compilation, greatly reducing training, tuning, and computing power costs. AngelPTM was already offered as a service through HCC, and this upgrade of the Angel machine learning framework will further improve the performance of HCC's dedicated large model cluster and help enterprises put large models into practical use faster.

To address the training challenges and rising inference costs that come with growing parameter counts, Tencent's self-developed large model inference framework AngelHCF improves performance by extending its parallelism capabilities and adopting multiple attention optimization strategies. The framework is also adapted to a variety of compression algorithms to raise throughput, delivering faster inference at lower cost for large model inference services.

Compared with mainstream frameworks in the industry, AngelHCF's inference speed has increased 1.3 times. In Tencent Hunyuan's text-to-image application, inference time was cut from 10 seconds to 3 to 4 seconds. AngelHCF also supports a variety of flexible compression and quantization strategies for large models, including automatic compression.
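The article does not specify which compression or quantization algorithms AngelHCF implements. As a generic illustration of the idea, the sketch below applies symmetric per-tensor INT8 weight quantization: each weight is divided by a single scale (the largest absolute value divided by 127), rounded to an integer in [-127, 127], and multiplied back by the scale at inference time. Storing 1 byte instead of 2 halves weight memory relative to FP16, at the cost of a rounding error of at most half a scale step per weight.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8 quantization: w is approximated by q * scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Use scale 1.0 for an all-zero (or empty) tensor to avoid dividing by zero
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp to the symmetric INT8 range
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate floating-point weights from INT8 codes."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.02, -0.5, 0.31, 1.27, -1.0]
    q, scale = quantize_int8(w)
    w_hat = dequantize_int8(q, scale)
    max_err = max(abs(a - b) for a, b in zip(w, w_hat))
    print("quantized:", q)
    print("scale:", scale, "max abs error:", max_err)
```

Production systems typically refine this with per-channel scales or calibration data; the single per-tensor scale here is the simplest variant.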

One-stop application construction, allowing large models to be used "out of the box"

As a practical large model, Tencent Hunyuan has been designed around application scenarios since the start of its development and has tackled the difficulties of deploying large models in practice. Tencent has many types of products and applications with a large amount of traffic, which makes actually putting a model to use very challenging. Based on Angel, Tencent has built a one-stop platform for large model access and application development, with services such as data processing, fine-tuning, model evaluation, one-click deployment, and prompt optimization, making it possible to use large models "out of the box".

For model access, Tencent Hunyuan offers models at several scales, with more than 100 billion, 10 billion, and 1 billion parameters, covering the needs of different application scenarios. With simple fine-tuning, these models can meet business needs while reducing the resource costs of training and inference services. In common scenarios such as Q&A and content classification, this approach is more cost-effective.
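One reason smaller model tiers reduce inference cost is weight memory. The sketch below gives a rough, weights-only estimate (it ignores the KV cache, activations, and framework overhead) of parameter count times bytes per parameter, using the article's round sizes of 1 billion, 10 billion, and 100 billion parameters as illustrative inputs rather than exact Hunyuan model sizes.

```python
# Bytes needed to store one parameter at common inference precisions
BYTES_PER_PARAM = {"fp16/bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Weights-only memory in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # Round sizes matching the three model tiers mentioned in the article
    for label, n in (("1B", 1e9), ("10B", 10e9), ("100B", 100e9)):
        row = ", ".join(f"{d}: {weight_memory_gb(n, d):.1f} GB" for d in BYTES_PER_PARAM)
        print(f"{label:>4} -> {row}")
```

At 16-bit precision, the 100-billion-parameter tier needs about 200 GB for weights alone, more than a single 80 GB accelerator holds, so it must be split across several cards, while the 1-billion-parameter tier needs about 2 GB.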

At the application development level, more than 300 businesses and application scenarios within Tencent have been connected to the Tencent Hunyuan large model for internal testing, double the number from the previous month, covering fields such as text summarization, abstract writing, content creation, translation, and coding.

In September 2023, Tencent Hunyuan, a practical large model developed in-house by Tencent, was officially unveiled and opened through Tencent Cloud. Tencent Hunyuan has more than 100 billion parameters, and its pre-training corpus contains more than 2 trillion tokens. It integrates Tencent's own accumulated technology in pre-training algorithms, machine learning platforms, and underlying computing resources, and continues to be iterated in real applications to keep improving its capabilities. Customers from many industries, including retail, education, finance, healthcare, media, transportation, and government affairs, have already accessed the Tencent Hunyuan large model through Tencent Cloud.

Big model app Tencent Yuanbao is online! Hunyuan is upgraded to create an all-round AI assistant that can be carried anywhere

Jun 09, 2024 pm 10:38 PM

Big model app Tencent Yuanbao is online! Hunyuan is upgraded to create an all-round AI assistant that can be carried anywhere

Jun 09, 2024 pm 10:38 PM

On May 30, Tencent announced a comprehensive upgrade of its Hunyuan model. The App "Tencent Yuanbao" based on the Hunyuan model was officially launched and can be downloaded from Apple and Android app stores. Compared with the Hunyuan applet version in the previous testing stage, Tencent Yuanbao provides core capabilities such as AI search, AI summary, and AI writing for work efficiency scenarios; for daily life scenarios, Yuanbao's gameplay is also richer and provides multiple features. AI application, and new gameplay methods such as creating personal agents are added. "Tencent does not strive to be the first to make large models." Liu Yuhong, vice president of Tencent Cloud and head of Tencent Hunyuan large model, said: "In the past year, we continued to promote the capabilities of Tencent Hunyuan large model. In the rich and massive Polish technology in business scenarios while gaining insights into users’ real needs

Bytedance Beanbao large model released, Volcano Engine full-stack AI service helps enterprises intelligently transform

Jun 05, 2024 pm 07:59 PM

Bytedance Beanbao large model released, Volcano Engine full-stack AI service helps enterprises intelligently transform

Jun 05, 2024 pm 07:59 PM

Tan Dai, President of Volcano Engine, said that companies that want to implement large models well face three key challenges: model effectiveness, inference costs, and implementation difficulty: they must have good basic large models as support to solve complex problems, and they must also have low-cost inference. Services allow large models to be widely used, and more tools, platforms and applications are needed to help companies implement scenarios. ——Tan Dai, President of Huoshan Engine 01. The large bean bag model makes its debut and is heavily used. Polishing the model effect is the most critical challenge for the implementation of AI. Tan Dai pointed out that only through extensive use can a good model be polished. Currently, the Doubao model processes 120 billion tokens of text and generates 30 million images every day. In order to help enterprises implement large-scale model scenarios, the beanbao large-scale model independently developed by ByteDance will be launched through the volcano

This article will take you to understand SHAP: model explanation for machine learning

Jun 01, 2024 am 10:58 AM

This article will take you to understand SHAP: model explanation for machine learning

Jun 01, 2024 am 10:58 AM

In the fields of machine learning and data science, model interpretability has always been a focus of researchers and practitioners. With the widespread application of complex models such as deep learning and ensemble methods, understanding the model's decision-making process has become particularly important. Explainable AI|XAI helps build trust and confidence in machine learning models by increasing the transparency of the model. Improving model transparency can be achieved through methods such as the widespread use of multiple complex models, as well as the decision-making processes used to explain the models. These methods include feature importance analysis, model prediction interval estimation, local interpretability algorithms, etc. Feature importance analysis can explain the decision-making process of a model by evaluating the degree of influence of the model on the input features. Model prediction interval estimate

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Common challenges faced by machine learning algorithms in C++ include memory management, multi-threading, performance optimization, and maintainability. Solutions include using smart pointers, modern threading libraries, SIMD instructions and third-party libraries, as well as following coding style guidelines and using automation tools. Practical cases show how to use the Eigen library to implement linear regression algorithms, effectively manage memory and use high-performance matrix operations.

Advanced practice of industrial knowledge graph

Jun 13, 2024 am 11:59 AM

Advanced practice of industrial knowledge graph

Jun 13, 2024 am 11:59 AM

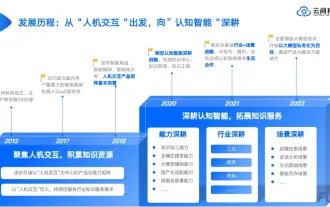

1. Background Introduction First, let’s introduce the development history of Yunwen Technology. Yunwen Technology Company...2023 is the period when large models are prevalent. Many companies believe that the importance of graphs has been greatly reduced after large models, and the preset information systems studied previously are no longer important. However, with the promotion of RAG and the prevalence of data governance, we have found that more efficient data governance and high-quality data are important prerequisites for improving the effectiveness of privatized large models. Therefore, more and more companies are beginning to pay attention to knowledge construction related content. This also promotes the construction and processing of knowledge to a higher level, where there are many techniques and methods that can be explored. It can be seen that the emergence of a new technology does not necessarily defeat all old technologies. It is also possible that the new technology and the old technology will be integrated with each other.

Five schools of machine learning you don't know about

Jun 05, 2024 pm 08:51 PM

Five schools of machine learning you don't know about

Jun 05, 2024 pm 08:51 PM

Machine learning is an important branch of artificial intelligence that gives computers the ability to learn from data and improve their capabilities without being explicitly programmed. Machine learning has a wide range of applications in various fields, from image recognition and natural language processing to recommendation systems and fraud detection, and it is changing the way we live. There are many different methods and theories in the field of machine learning, among which the five most influential methods are called the "Five Schools of Machine Learning". The five major schools are the symbolic school, the connectionist school, the evolutionary school, the Bayesian school and the analogy school. 1. Symbolism, also known as symbolism, emphasizes the use of symbols for logical reasoning and expression of knowledge. This school of thought believes that learning is a process of reverse deduction, through existing

Xiaomi Byte joins forces! A large model of Xiao Ai's access to Doubao: already installed on mobile phones and SU7

Jun 13, 2024 pm 05:11 PM

Xiaomi Byte joins forces! A large model of Xiao Ai's access to Doubao: already installed on mobile phones and SU7

Jun 13, 2024 pm 05:11 PM

According to news on June 13, according to Byte's "Volcano Engine" public account, Xiaomi's artificial intelligence assistant "Xiao Ai" has reached a cooperation with Volcano Engine. The two parties will achieve a more intelligent AI interactive experience based on the beanbao large model. It is reported that the large-scale beanbao model created by ByteDance can efficiently process up to 120 billion text tokens and generate 30 million pieces of content every day. Xiaomi used the beanbao large model to improve the learning and reasoning capabilities of its own model and create a new "Xiao Ai Classmate", which not only more accurately grasps user needs, but also provides faster response speed and more comprehensive content services. For example, when a user asks about a complex scientific concept, &ldq

Explainable AI: Explaining complex AI/ML models

Jun 03, 2024 pm 10:08 PM

Explainable AI: Explaining complex AI/ML models

Jun 03, 2024 pm 10:08 PM

Translator | Reviewed by Li Rui | Chonglou Artificial intelligence (AI) and machine learning (ML) models are becoming increasingly complex today, and the output produced by these models is a black box – unable to be explained to stakeholders. Explainable AI (XAI) aims to solve this problem by enabling stakeholders to understand how these models work, ensuring they understand how these models actually make decisions, and ensuring transparency in AI systems, Trust and accountability to address this issue. This article explores various explainable artificial intelligence (XAI) techniques to illustrate their underlying principles. Several reasons why explainable AI is crucial Trust and transparency: For AI systems to be widely accepted and trusted, users need to understand how decisions are made