Small scale, high efficiency: DeepMind launches multi-modal solution Mirasol 3B

One of the main challenges in multi-modal learning is the need to fuse heterogeneous modalities such as text, audio, and video. Multi-modal models have to combine signals from different sources, but these modalities have different characteristics and are difficult to combine in a single model. For example, video and text have different sampling rates.

Recently, a research team from Google DeepMind decoupled the multi-modal model into multiple independent, specialized autoregressive models that process inputs according to the characteristics of each modality.

Specifically, the study proposes a multimodal model called Mirasol3B. Mirasol3B consists of an autoregressive component for the time-synchronized modalities (audio and video) and an autoregressive component for contextual modalities, which are not necessarily aligned in time but are still sequential.

Paper address: https://arxiv.org/abs/2311.05698

Mirasol3B achieves state-of-the-art (SOTA) results on multi-modal benchmarks, outperforming larger models. By learning more compact representations, controlling the sequence length of the audio-video feature representation, and modeling temporal correspondences, Mirasol3B copes effectively with the high computational demands of multi-modal inputs.

Method Introduction

Mirasol3B is an audio-video-text multimodal model in which autoregressive modeling is decoupled into an autoregressive component for the time-aligned modalities (e.g. audio, video) and an autoregressive component for the non-time-aligned contextual modalities (e.g. text). Mirasol3B uses cross-attention weights to coordinate the learning of these components. This decoupling distributes parameters within the model more sensibly, allocates enough capacity to the video and audio modalities, and makes the overall model more lightweight.

As shown in Figure 1, Mirasol3B consists of two main learning components: the autoregressive component and the input combination component. The autoregressive component is designed to handle nearly simultaneous multi-modal inputs, such as video and audio, and to combine them in time.

The study proposes to partition the time-aligned modalities into time segments and to learn joint audio-video representations within each segment. Specifically, it proposes a joint feature learning mechanism called the "Combiner", which fuses the modality features within the same time segment to produce a more compact representation.

The "Combiner" extracts a primary spatiotemporal representation from the raw modality inputs, captures the dynamic characteristics of the video, and combines them with the co-occurring audio features. As a result, the model can receive multi-modal inputs at different rates and performs well on longer videos.

The "Combiner" meets the need for a modality representation that is both efficient and informative. It can fully cover the events and activities in the video and the other concurrent modalities, and its output can be used by the subsequent autoregressive model to learn long-term dependencies.

To process video and audio signals and accommodate longer video/audio inputs, the signals are split into small chunks (roughly synchronized in time), and joint audio-visual representations are then learned through the "Combiner". The second component handles context, that is, signals that are not aligned in time, such as global text information, which are often still sequential. It is also autoregressive and uses the combined latent space as cross-attention input.
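The data flow described above can be illustrated with a small toy sketch. It is not the paper's implementation: the names `split_into_chunks`, `combine_chunk`, and `cross_attend` are hypothetical, the combiner here is plain mean pooling rather than a learned module, and the attention has no learned projections. It only shows how audio and video sampled at different rates could be split into time-synchronized chunks, compressed to a fixed number of vectors per chunk, and then read by a text component through cross-attention.

```python
import math
from typing import List

Vector = List[float]


def split_into_chunks(seq: List[Vector], num_chunks: int) -> List[List[Vector]]:
    """Split a time-ordered sequence into num_chunks contiguous segments."""
    n = len(seq)
    bounds = [i * n // num_chunks for i in range(num_chunks + 1)]
    return [seq[bounds[i]:bounds[i + 1]] for i in range(num_chunks)]


def combine_chunk(video: List[Vector], audio: List[Vector], m: int) -> List[Vector]:
    """Toy stand-in for a combiner: pool one segment's features into m vectors.

    Assumption: mean pooling replaces the learned fusion described in the article.
    """
    joint = video + audio  # both modalities share one feature dimension here
    assert len(joint) >= m, "need at least m features per segment"
    dim = len(joint[0])
    return [
        [sum(vec[d] for vec in group) / len(group) for d in range(dim)]
        for group in split_into_chunks(joint, m)
    ]


def cross_attend(queries: List[Vector], latents: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product cross-attention without learned weights."""
    dim = len(latents[0])
    scale = 1.0 / math.sqrt(dim)
    outputs = []
    for q in queries:
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in latents]
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        outputs.append(
            [sum(w * k[d] for w, k in zip(weights, latents)) for d in range(dim)]
        )
    return outputs


# Hypothetical inputs: 8 video features and 16 audio features over the same clip.
video = [[float(t), 1.0] for t in range(8)]
audio = [[float(t) / 2, -1.0] for t in range(16)]
num_chunks, m = 4, 2

latents = [
    vec
    for v, a in zip(split_into_chunks(video, num_chunks),
                    split_into_chunks(audio, num_chunks))
    for vec in combine_chunk(v, a, m)
]

# Two hypothetical text-side hidden states attend over the combined latents.
text_states = [[1.0, 0.0], [0.0, 1.0]]
attended = cross_attend(text_states, latents)
print(len(latents), len(attended))  # 8 2
```

In this sketch the 24 raw audio and video features are reduced to 8 combined vectors, and the text side only ever sees those 8 vectors, whatever the original sampling rates were.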

The model with video and audio has 3B parameters, while the variant without audio has 2.9B. Most of the parameters are used by the audio-video autoregressive model. Mirasol3B typically processes 128-frame videos and can also handle longer ones, such as 512 frames.

Thanks to the partitioning and the "Combiner" architecture, adding more frames or increasing the size and number of chunks increases the parameter count only slightly, which addresses the problem that longer videos require more parameters and more memory.
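A short piece of arithmetic shows why chunking keeps the cost of longer videos under control. The function name and the chunk sizes below are hypothetical and do not come from the paper; only the 128 and 512 frame counts appear in the article. The sketch computes the sequence length that the autoregressive model would see, not the parameter count.

```python
def latent_sequence_length(num_frames: int, frames_per_chunk: int,
                           features_per_chunk: int) -> int:
    """Number of combined features fed to the autoregressive model.

    Each chunk is compressed to features_per_chunk vectors, so the result
    depends on the number of chunks rather than on the raw frame count.
    """
    num_chunks = -(-num_frames // frames_per_chunk)  # ceiling division
    return num_chunks * features_per_chunk


# Hypothetical settings: 32 combined features per chunk in both cases.
short_video = latent_sequence_length(128, frames_per_chunk=16, features_per_chunk=32)
long_video = latent_sequence_length(512, frames_per_chunk=64, features_per_chunk=32)
print(short_video, long_video)  # 256 256
```

Under these assumed settings, a video with four times as many frames yields the same sequence length once the chunks are made four times larger.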

Experiments and Results

The study evaluated Mirasol3B on standard VideoQA benchmarks, long-video VideoQA benchmarks, and audio-video benchmarks.

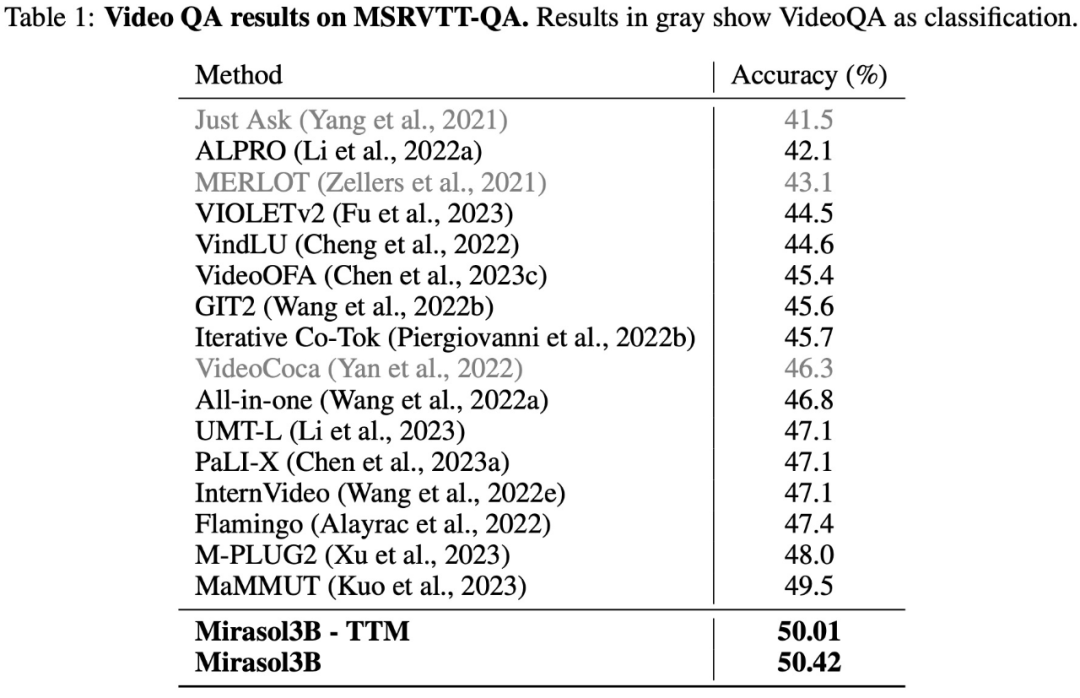

The results on the VideoQA dataset MSRVTT-QA are shown in Table 1 below. Mirasol3B surpasses the current SOTA models, as well as larger models such as PaLI-X and Flamingo.

For long-video question answering, the study evaluated Mirasol3B on the ActivityNet-QA and NExT-QA datasets. The results are shown in Table 2 below:

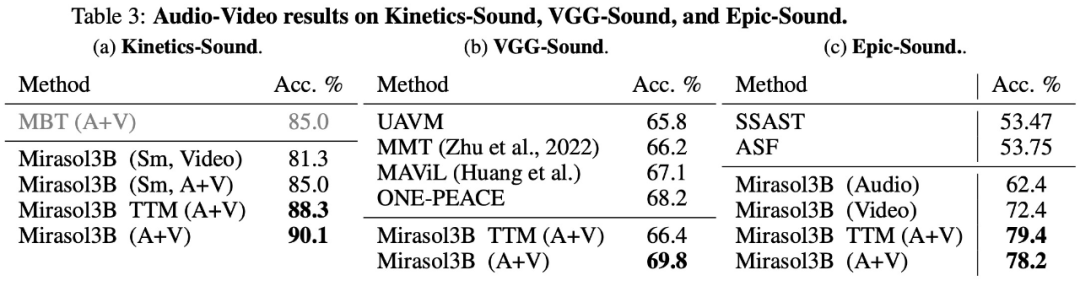

Finally, the study selected Kinetics-Sound, VGG-Sound, and Epic-Sound for audio-video benchmarking and adopted open-ended generation evaluation. The experimental results are shown in Table 3 below:

Interested readers can read the original text of the paper to learn more about the research content.
