Important breakthrough! The Westlake University team and Zhejiang Second Hospital jointly realized Chinese decoding of brain-computer interface


Professor Mohammed Sawan's team at the Advanced Neural Chip Center and Professor Zhang Yue's team at the Natural Language Processing Laboratory, both at Westlake University, together with Professor Zhu Junming's team at Zhejiang Second Hospital, have jointly released their latest research results: "A high-performance brain-sentence communication designed for logosyllabic language". The work achieves full-spectrum Chinese decoding through a brain-computer interface and, to a certain extent, fills an international gap in brain-computer interface technology for decoding Chinese.

The brain-computer interface (BCI) is widely regarded as a key arena for the future convergence of life sciences and information technology, and as a research direction of considerable social value and strategic significance.

Brain-computer interface technology creates a pathway for exchanging information between a human or animal brain and external devices. In essence, it is a new kind of information channel that lets information bypass the usual muscle and peripheral nerve pathways and connect directly with the outside world, thereby substituting, to a certain extent, for human functions such as movement and language.

Design and performance of full-spectrum Chinese decoder for brain-computer interface


In August 2023, two back-to-back Nature articles demonstrated the power of brain-computer interfaces for restoring language. However, most existing language brain-computer interfaces are built for alphabetic languages such as English, and research on language brain-computer interfaces for non-alphabetic writing systems such as Chinese characters remains largely unexplored.

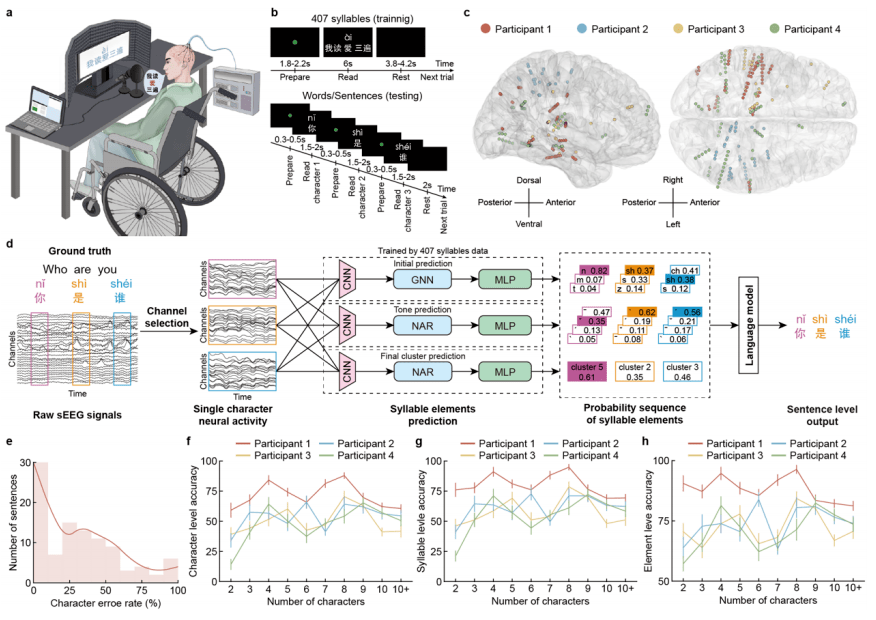

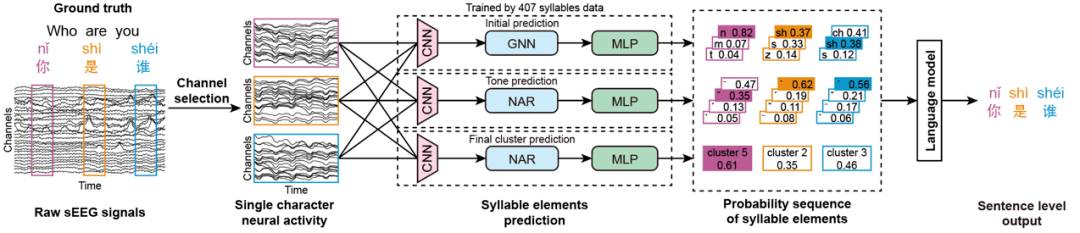

In this study, the research team used stereotactic electroencephalography (SEEG) to record the neural activity that accompanies the pronunciation of all Mandarin Chinese characters. Combining these recordings with deep learning algorithms and a language model, the team achieved full-spectrum decoding of Chinese character pronunciation and built a Chinese brain-computer interface system that covers the pronunciation of all Mandarin characters, producing complete Mandarin sentences end to end from brain activity.

Chinese, as a logosyllabic language, has more than 50,000 characters. This differs sharply from English, which is written with 26 letters, and poses a huge challenge for existing language brain-computer interface systems. To address it, the research team spent the past three years analyzing the pronunciation rules and characteristics of Chinese in depth. Starting from the three elements of a Chinese syllable (initial consonant, tone and final) and drawing on the Pinyin input system, the team designed a new language brain-computer interface system suited to Chinese. The team designed a speech corpus covering all 407 Chinese Pinyin syllables and the pronunciation characteristics of Chinese, and recorded SEEG signals while participants read it aloud, building a Chinese speech-SEEG database of more than 100 hours. Through artificial intelligence model training, the system learned a prediction model for each of the three syllable elements (initial consonant, tone and final). A language model then integrates all predicted elements with semantic information to generate the most likely complete Chinese sentence.
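The integration step described above can be illustrated with a minimal sketch. It assumes that each of the three element decoders outputs a probability distribution, that the elements are treated as independent, and that a toy prior over syllables stands in for the language model. The function names, the probabilities and the prior are all hypothetical and are not taken from the paper.

```python
from itertools import product

# Hypothetical outputs of three independent element decoders for one syllable.
initial_probs = {"n": 0.6, "l": 0.3, "m": 0.1}
final_probs = {"i": 0.7, "v": 0.3}
tone_probs = {3: 0.5, 2: 0.3, 4: 0.2}


def rank_syllables(initial_probs, final_probs, tone_probs, top_k=3):
    """Score each (initial, final, tone) combination by the product of its
    element probabilities (an independence assumption) and return the top_k."""
    candidates = []
    for (ini, p_i), (fin, p_f), (tone, p_t) in product(
        initial_probs.items(), final_probs.items(), tone_probs.items()
    ):
        candidates.append((f"{ini}{fin}{tone}", p_i * p_f * p_t))
    candidates.sort(key=lambda c: c[1], reverse=True)
    return candidates[:top_k]


def rescore_with_prior(candidates, prior, floor=1e-6):
    """Pick the candidate with the highest decoder score times a prior.
    The prior is a toy stand-in for a language model's contextual score."""
    return max(candidates, key=lambda c: c[1] * prior.get(c[0], floor))[0]


top3 = rank_syllables(initial_probs, final_probs, tone_probs)

# Hypothetical context in which "li3" is far more plausible than "ni3".
context_prior = {"ni3": 0.1, "li3": 0.6, "ni2": 0.05}
best = rescore_with_prior(top3, context_prior)
```

In this toy case the decoders alone prefer `ni3`, but the contextual prior moves the final choice to `li3`, which mirrors how a language model can correct a plausible but wrong element prediction.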

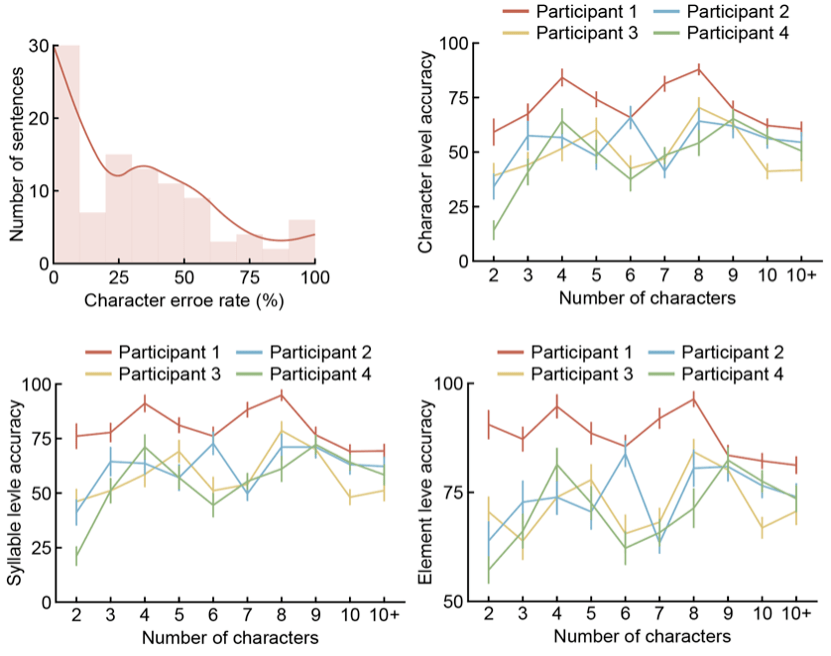

The research team evaluated the decoding ability of this brain-computer interface system in a simulated everyday Chinese setting. Across more than 100 randomly selected sentences of 2 to 15 characters drawn from complex communication scenarios, the median character error rate averaged over all participants was only 29%, and for some participants the share of sentences decoded completely correctly reached 30%. This relatively efficient decoding performance stems from the strong performance of the three independent syllable element decoders and their close cooperation with the language model. In particular, when classifying 21 initial consonants, the initial consonant decoder exceeded 40% accuracy (more than 3 times the baseline), and its Top 3 accuracy reached almost 100%. The tone decoder, which distinguishes 4 tones, reached 50% accuracy (more than 2 times the baseline). Beyond the contribution of the three element decoders, the language model's automatic error correction and use of context further improve the performance of the whole language brain-computer interface system.
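For readers unfamiliar with the metrics quoted above, the following sketch shows how character error rate and Top k accuracy are commonly computed. It uses the standard edit-distance definition of character error rate; the article does not spell out the paper's exact evaluation protocol, so this is an assumption, and the sample sentences and predictions are invented for illustration.

```python
from statistics import median


def edit_distance(ref, hyp):
    """Levenshtein distance: minimum number of character insertions,
    deletions and substitutions needed to turn hyp into ref."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1]


def character_error_rate(ref, hyp):
    """Edit distance divided by the length of the reference sentence."""
    if not ref:
        raise ValueError("reference sentence must not be empty")
    return edit_distance(ref, hyp) / len(ref)


def top_k_accuracy(ranked_predictions, labels, k):
    """Fraction of trials whose true label is among the k highest-ranked guesses."""
    hits = sum(
        1 for ranked, label in zip(ranked_predictions, labels) if label in ranked[:k]
    )
    return hits / len(labels)


# Invented reference/decoded sentence pairs for one hypothetical participant.
pairs = [("我想喝水", "我想喝茶"), ("你好", "你好"), ("今天很冷", "今天很冷")]
cers = [character_error_rate(ref, hyp) for ref, hyp in pairs]
median_cer = median(cers)

# Invented ranked guesses from an initial consonant decoder for two trials.
ranked = [["b", "p", "m"], ["d", "t", "n"]]
truth = ["p", "n"]
top1 = top_k_accuracy(ranked, truth, k=1)
top3_acc = top_k_accuracy(ranked, truth, k=3)
```

A sentence counts as completely correct when its character error rate is zero, so the share of fully correct sentences can be read off the same list of per-sentence rates.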

This research offers a new perspective on BCI decoding of Chinese, a logosyllabic language, and shows that a powerful language model can significantly improve the performance of a language brain-computer interface system. It lays a foundation and points to new directions for future research on neural prostheses for logosyllabic languages. The work also suggests that patients with neurological diseases may eventually be able to generate Chinese sentences on a computer through their thoughts and regain the ability to communicate.

Reference content

https://www.biorxiv.org/content/10.1101/2023.11.05.562313v1.full.pdf
