Amazon is making every effort to defend its leadership in cloud computing. On the one hand, it has upgraded its self-developed cloud chips and launched its own GPT-style AI chatbot; on the other hand, it has deepened its cooperation with NVIDIA, launching new services based on NVIDIA chips and jointly developing a supercomputer with NVIDIA.

Dave Brown, vice president of AWS, said that by focusing the design of its self-developed chips on the real workloads that matter to customers, AWS can provide them with the most advanced cloud infrastructure. The Graviton4 launched this time is the fourth generation of the chip in five years. As interest in generative AI rises, the second-generation AI chip Trainium2 will help customers train their machine learning models faster, at lower cost and with higher energy efficiency.

Graviton4 computing performance is improved by up to 30% compared to the previous generation

On Tuesday, November 28, Eastern Time, Amazon's cloud computing business AWS announced a new generation of its self-developed chips. Among them, the general-purpose chip Graviton4 delivers up to 30% better compute performance than the previous-generation Graviton3, with 50% more cores and 75% more memory bandwidth, providing the best price performance and energy efficiency on Amazon Elastic Compute Cloud (EC2), Amazon's cloud server hosting service.

Graviton4 improves security by fully encrypting all high-speed physical hardware interfaces. AWS said Graviton4 will be available in memory-optimized Amazon EC2 R8g instances to help customers speed up high-performance database, in-memory cache, and big data analytics workloads. R8g instances offer larger instance sizes, with up to three times more vCPUs and three times more memory than the previous-generation R7g instances.

AWS plans to launch instances equipped with Graviton4 in the next few months. AWS said that in the five years since the Graviton project began, it has built more than 2 million Graviton processors, and that the top 100 AWS EC2 customers have all chosen to use Graviton.

Trainium2 is four times faster and can train models with trillions of parameters

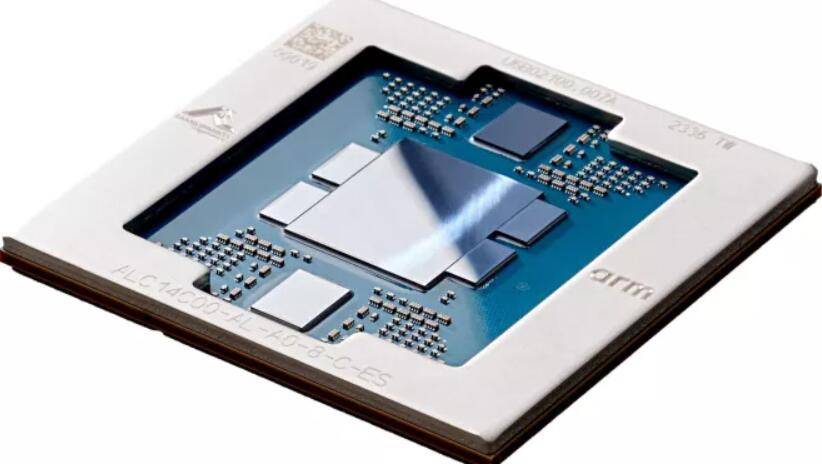

AWS has launched a new generation of AI chips called Trainium2, which is up to four times faster than the previous-generation Trainium1. Trainium2 can be deployed in EC2 UltraClusters of up to 100,000 chips, enabling users to train foundation models (FMs) and large language models (LLMs) with trillions of parameters in a short time. Compared with the previous generation, Trainium2's energy efficiency is up to two times higher.

Trainium2 will be available in Amazon EC2 Trn2 instances, each containing 16 Trainium chips. Trn2 instances are designed to let customers scale up to 100,000 Trainium2 chips in next-generation EC2 UltraClusters, interconnected with petabit-scale networking through the AWS Elastic Fabric Adapter (EFA) and delivering up to 65 exaflops of compute.

According to AWS, Trainium2 will begin powering new services next year.

AWS becomes the first major customer of the upgraded Grace Hopper, DGX Cloud adopts the GH200 NVL32, and the two companies plan the fastest GPU-powered AI supercomputer

During its annual re:Invent conference, AWS and NVIDIA announced on Tuesday an expanded strategic collaboration to provide state-of-the-art infrastructure, software and services that power customers' generative AI innovation. The collaboration goes beyond self-developed chips and extends to other areas.

AWS will become the first cloud service provider to offer the NVIDIA GH200 Grace Hopper Superchip with new multi-node NVLink technology in the cloud. In other words, AWS will become the first major customer of the upgraded Grace Hopper.

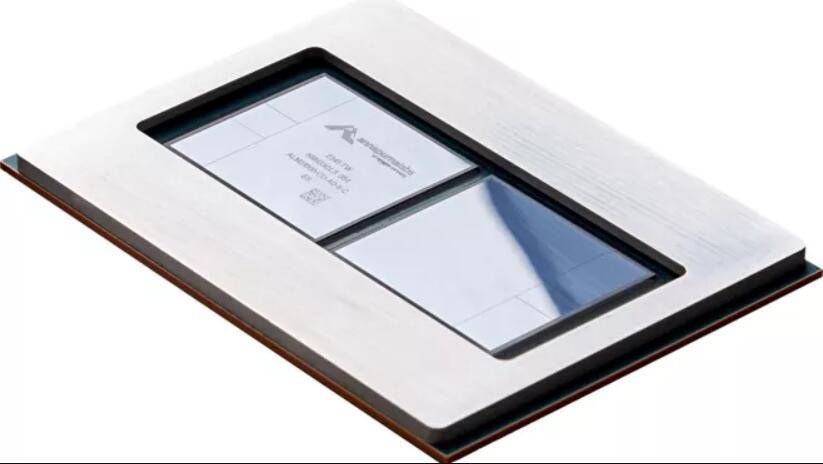

NVIDIA's GH200 NVL32 multi-node platform connects 32 Grace Hopper Superchips with NVLink and NVSwitch technology in a single instance. The platform will be available on Amazon EC2 instances connected through Amazon's EFA networking, backed by advanced virtualization (AWS Nitro System) and hyperscale clustering (Amazon EC2 UltraClusters), allowing joint Amazon and NVIDIA customers to scale to thousands of GH200 Superchips.

NVIDIA and AWS will collaborate to host DGX Cloud, NVIDIA's AI training-as-a-service, on AWS. This will be the first DGX Cloud to feature the GH200 NVL32, giving developers the largest shared memory available in a single instance. DGX Cloud on AWS will accelerate the training of cutting-edge generative AI and large language models with more than 1 trillion parameters.

NVIDIA and AWS are collaborating on a project called Ceiba to design the world's fastest GPU-powered AI supercomputer. Built on the GH200 NVL32 and Amazon's EFA interconnect, it is a massive system equipped with 16,384 GH200 Superchips and capable of 65 exaflops of AI processing. NVIDIA plans to use it to drive the next wave of generative AI innovation.

A preview of Amazon Q, a chatbot for enterprise customers, is now live and can help developers build applications on AWS

In addition to chips and cloud services, AWS also released a preview of an AI chatbot called Amazon Q. Amazon Q is a new type of digital assistant that uses generative AI and is tailored to the business needs of enterprise customers. It helps them search for information, write code and review business metrics.

Q has been trained in part on AWS's internal code and documentation and is available to developers in the AWS cloud.

Developers can use Q to build applications on AWS, research best practices, fix errors, and get help writing new features. Users interact with Q through conversational Q&A to learn new topics and understand how to build applications on AWS, all without leaving the AWS console.

Amazon will add Q to its products for business intelligence, call center workers and logistics management. AWS says customers can customize Q based on company data or personal profiles.

Conversational Q&A is currently available in preview in all commercial AWS regions.
