Stability AI launches Stable Diffusion XL Turbo model

IT House reported on November 30 that Stability AI recently launched Stable Diffusion XL Turbo (SDXL Turbo), an improved version of its earlier SDXL model. According to the company, SDXL Turbo uses "Adversarial Diffusion Distillation" to cut the number of iteration steps needed to generate an image from the original 50 to 1, so that "only one iteration step is needed to generate high-quality images."

The model's headline feature is this one-step image generation, which Stability AI claims enables "instant text-to-image output" while preserving image quality.

Adversarial Diffusion Distillation uses an existing large-scale image diffusion model as a "teacher network" to guide the generation process. It combines two techniques: distillation and adversarial training. Distillation condenses the knowledge of a large model into a smaller one to streamline the model's output, while adversarial training improves the model so that it better imitates the output of the teacher model.
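To make the combination of the two techniques concrete, here is a minimal sketch of a combined training objective. The article does not give the formula, so everything specific here is an assumption for illustration: the mean-squared-error distillation term, the generator-side adversarial term written as the negated discriminator score, the `distill_weight` parameter, and all function names.

```python
from typing import Sequence


def distillation_loss(student: Sequence[float], teacher: Sequence[float]) -> float:
    """Mean squared error between student and teacher outputs (assumed form)."""
    if len(student) != len(teacher):
        raise ValueError("student and teacher outputs must have the same length")
    return sum((s - t) ** 2 for s, t in zip(student, teacher)) / len(student)


def adversarial_loss(discriminator_score: float) -> float:
    """Generator-side adversarial term (assumed form).

    The loss falls as the discriminator rates the student's image as more realistic.
    """
    return -discriminator_score


def combined_objective(
    student: Sequence[float],
    teacher: Sequence[float],
    discriminator_score: float,
    distill_weight: float = 1.0,  # hypothetical weighting between the two terms
) -> float:
    """Adversarial term plus weighted distillation term."""
    return adversarial_loss(discriminator_score) + distill_weight * distillation_loss(
        student, teacher
    )


if __name__ == "__main__":
    # Toy values only: two-element "images" and a made-up discriminator score.
    print(combined_objective([1.0, 2.0], [1.0, 4.0], discriminator_score=0.5, distill_weight=2.0))
```

In this toy setup the distillation term pulls the student toward the teacher's output, while the adversarial term rewards outputs the discriminator judges as realistic; a real training loop would compute both on image tensors rather than short lists.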

Earlier model distillation techniques struggled to balance efficiency and quality, because fast sampling usually degrades the output. By using Adversarial Diffusion Distillation to generate high-quality images efficiently, SDXL Turbo marks important progress.

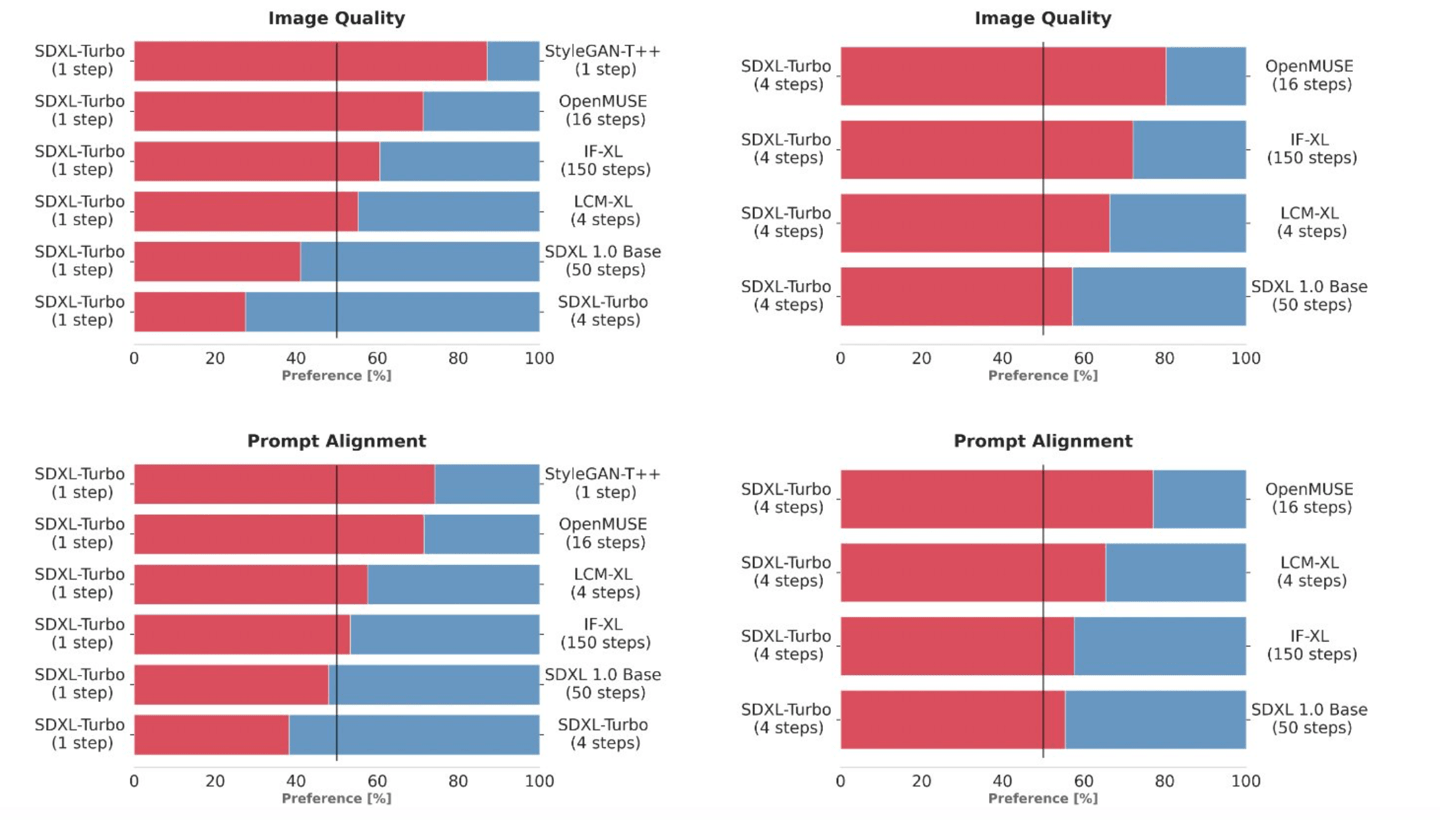

Stability AI compared SDXL Turbo with several other models, including StyleGAN-T, OpenMUSE, IF-XL, SDXL and LCM-XL, in two experiments. In the first, human evaluators were shown the outputs of two models in random order and asked to pick the image that best matched the prompt. The second followed roughly the same procedure, but evaluators were asked to pick the image with the better image quality.

▲ Picture from Stability AI Blog

The results show that SDXL Turbo significantly reduces computing requirements while still maintaining excellent image quality. With a single iteration it outperformed LCM-XL running 4 iterations, and with 4 iterations it beat SDXL in its previous 50-iteration configuration. On an A100 GPU, SDXL Turbo computes an image at 512x512 resolution in just 207 milliseconds.
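As a quick back-of-the-envelope check on these figures, the sketch below converts the reported 207 ms latency into images per second and computes the step reduction from 50 to 1. The function names are illustrative, and the step ratio should not be read as a wall-clock speedup, since fixed per-image costs are not reduced by cutting sampling steps.

```python
def images_per_second(latency_ms: float) -> float:
    """Throughput implied by a per-image latency, assuming images are generated one at a time."""
    return 1000.0 / latency_ms


def step_reduction(original_steps: int, new_steps: int) -> float:
    """Ratio of sampling steps before and after; not the same as a wall-clock speedup."""
    return original_steps / new_steps


if __name__ == "__main__":
    # Figures taken from the article: 207 ms per 512x512 image on an A100, 50 steps reduced to 1.
    print(round(images_per_second(207), 2))  # 4.83
    print(step_reduction(50, 1))             # 50.0
```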

IT House noticed that Stability AI has published the relevant code on Hugging Face for personal and non-commercial use.

