Technology peripherals

Technology peripherals

AI

AI

'Subject Three' that attracts global attention: Messi, Iron Man, and two-dimensional ladies can handle it easily

'Subject Three' that attracts global attention: Messi, Iron Man, and two-dimensional ladies can handle it easily

'Subject Three' that attracts global attention: Messi, Iron Man, and two-dimensional ladies can handle it easily

In recent times, you may have heard more or less of "Subject 3", with waving hands, half-crotch feet, and matching rhythmic music. This dance move has been popular all over the Internet. imitate.

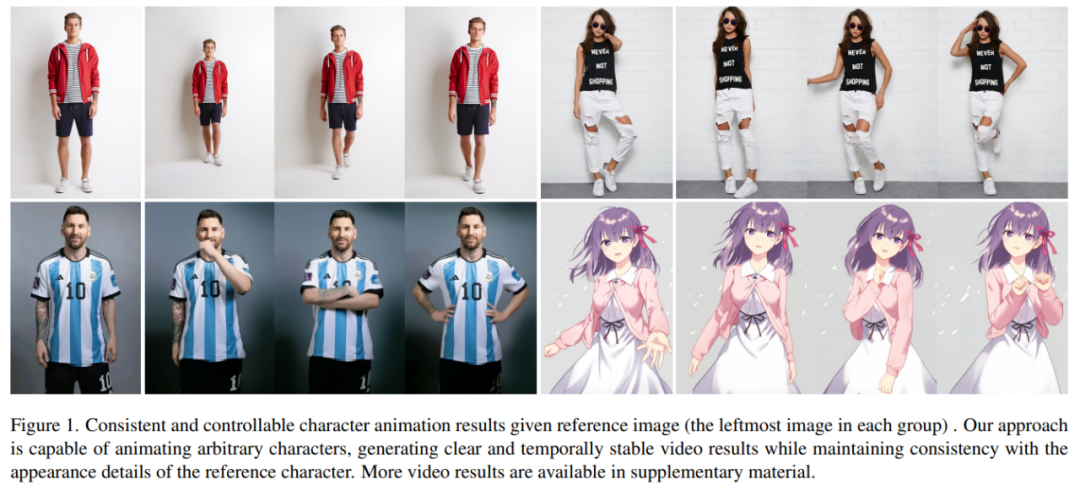

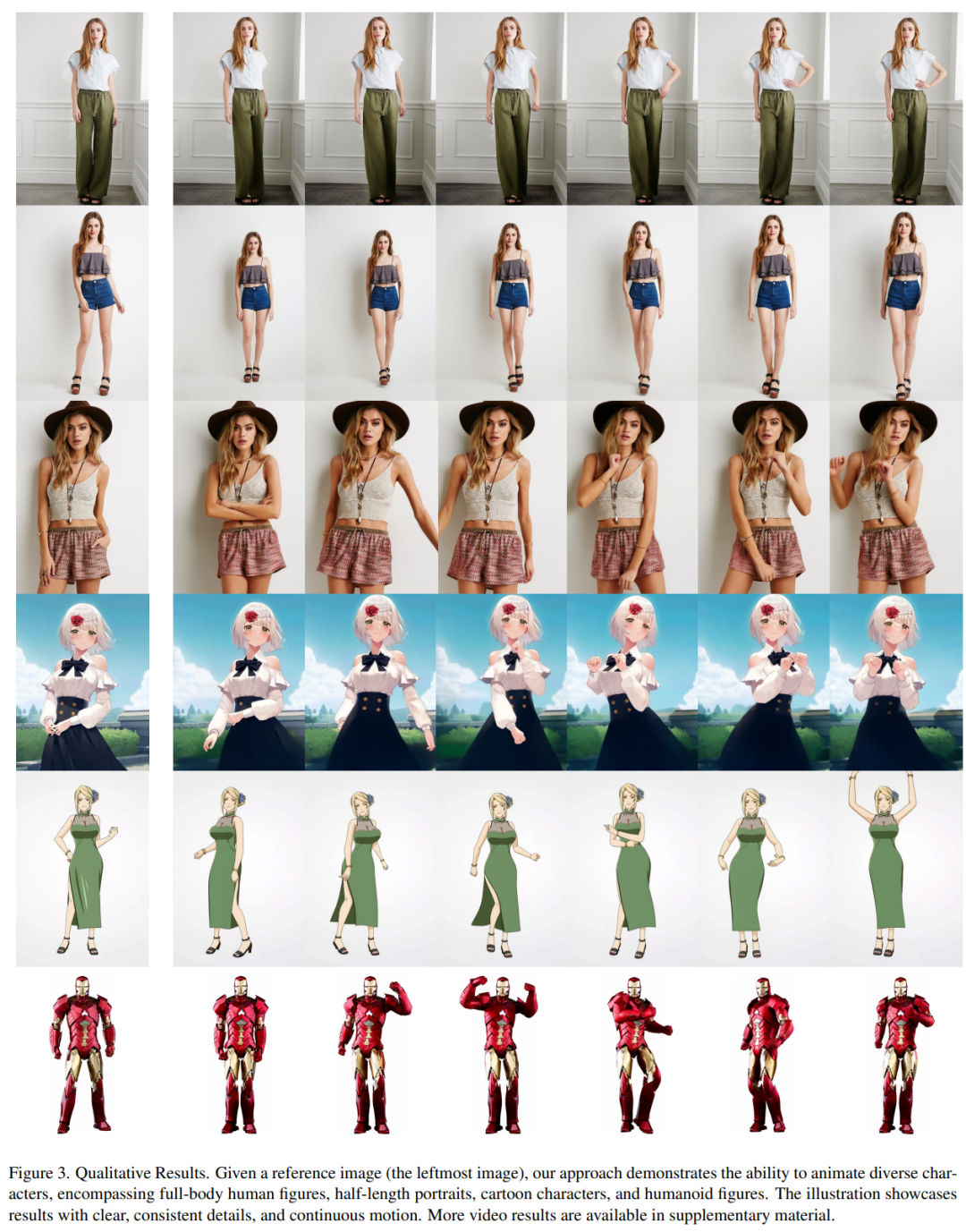

What would happen if similar dances were generated by AI? As shown in the picture below, both modern people and paper people are doing uniform movements. What you might not guess is that this is a dance video generated based on a picture.

The character movements become more difficult, and the generated video is also very smooth (far right):

Let Messi and Iron Man move, it’s no problem:

There are also various anime ladies.

How are these effects achieved? Let’s continue reading

Character animation is the process of converting original character images into realistic videos in the desired pose sequence. This task has many potential application areas, such as online retail, entertainment videos, art creation, virtual characters, etc.

Since the advent of GAN technology, researchers have been continuously exploring in-depth Methods for converting images into animations and completing pose transfer. However, the generated images or videos still have some problems, such as local distortion, blurred details, semantic inconsistency, and temporal instability, which hinder the application of these methods.

Ali's research The authors proposed a method called Animate Anybody that converts character images into animated videos that follow the desired pose sequence. This study adopted a Stable Diffusion network design and pre-trained weights, and modified the denoising UNet to accommodate multi-frame input

- Paper address: https://arxiv.org/pdf/2311.17117.pdf

- Project address: https://humanaigc.github.io/ animate-anyone/

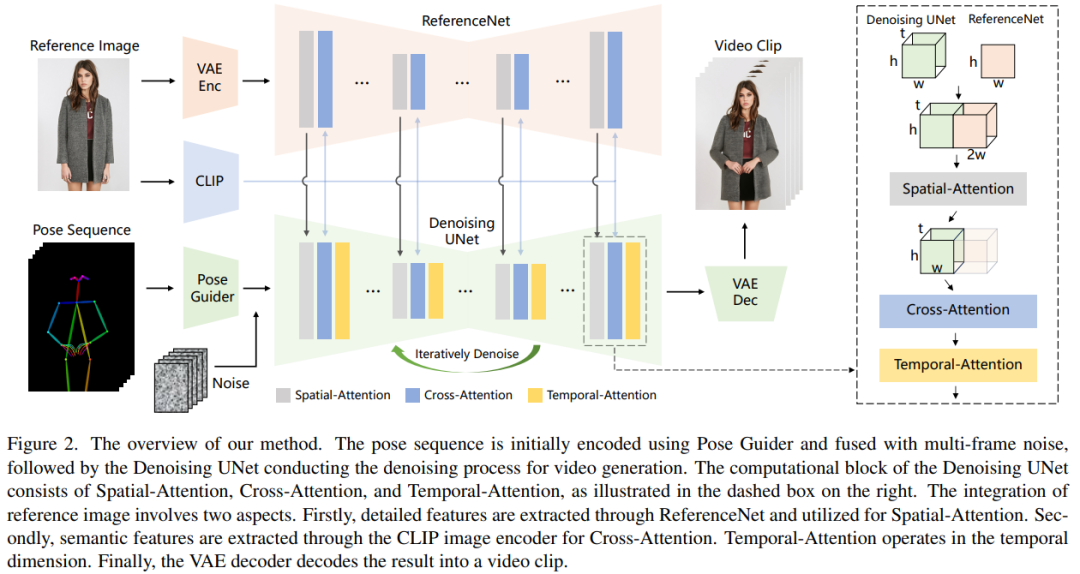

To keep the appearance consistent, the study introduced ReferenceNet. The network adopts a symmetric UNet structure and aims to capture the spatial details of the reference image. In each corresponding UNet block layer, this study uses a spatial-attention mechanism to integrate the features of ReferenceNet into the denoising UNet. This architecture enables the model to comprehensively learn the relationship with the reference image in a consistent feature space

To ensure pose controllability, this study designed a lightweight pose guidance processor to effectively integrate attitude control signals into the denoising process. In order to achieve temporal stability, this paper introduces a temporal layer to model the relationship between multiple frames, thereby retaining high-resolution details of visual quality while simulating a continuous and smooth temporal motion process.

Animate Anybody was trained on an in-house dataset of 5K character video clips, as shown in Figure 1, showing the animation results for various characters. Compared with previous methods, the method in this article has several obvious advantages:

- First, it effectively maintains the spatial and temporal consistency of the appearance of characters in the video.

- Secondly, the high-definition video it generates will not have problems such as time jitter or flickering.

- Third, it can animate any character image into a video without being restricted by a specific field.

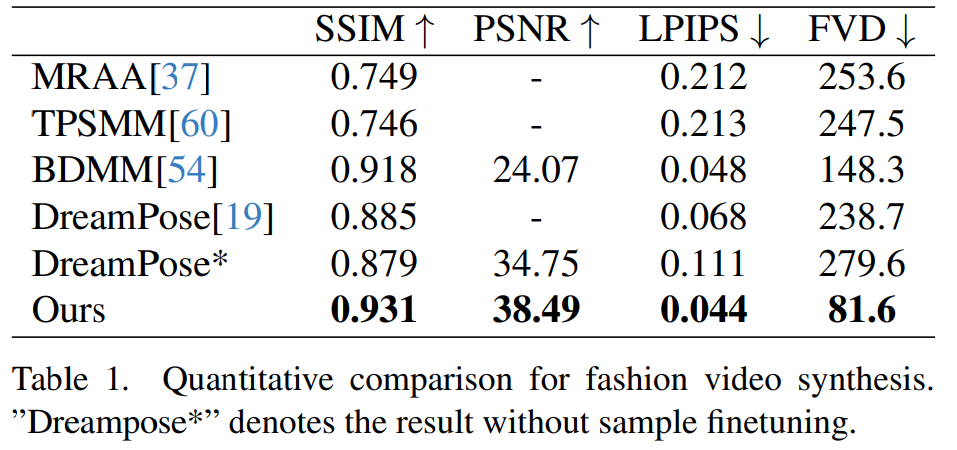

This paper is evaluated on two specific human video synthesis benchmarks (UBC Fashion Video Dataset and TikTok Dataset). The results show that Animate Anybody achieves SOTA results. Additionally, the study compared the Animate Anybody method with general image-to-video methods trained on large-scale data, showing that Animate Anybody demonstrates superior capabilities in character animation.

Animate Anybody compared to other methods:

Method introduction

The processing method of this article is shown in Figure 2. The original input of the network is composed of multi-frame noise. In order to achieve the denoising effect, the researchers adopted a configuration based on SD design, using the same framework and block units, and inheriting the training weights from SD. Specifically, this method includes three key parts, namely:

- ReferenceNet, which encodes the appearance characteristics of the character in the reference image;

- Pose Guider (posture guide), encodes action control signals to achieve controllable character movement;

- Temporal layer (temporal layer), encodes temporal relationships to ensure the continuity of character actions .

ReferenceNet

ReferenceNet is a reference image feature extraction Network, its framework is roughly the same as the denoising UNet, only the temporal layer is different. Therefore, ReferenceNet inherits the original SD weights similar to the denoising UNet, and each weight update is performed independently. The researchers explain how to integrate features from ReferenceNet into denoising UNet.

The design of ReferenceNet has two advantages. First, ReferenceNet can leverage the pre-trained image feature modeling capabilities of raw SD to produce well-initialized features. Second, since ReferenceNet and denoising UNet essentially have the same network structure and shared initialization weights, denoising UNet can selectively learn features associated in the same feature space from ReferenceNet.

Attitude guide

The rewritten content is: This lightweight attitude guide uses four convolutional layers (4 × 4 kernels, 2 × 2 stride) with channel numbers of 16, 32, 64, 128, similar to the conditional encoder in [56], used to align the gesture image. The processed pose image is added to the latent noise and then input to the denoising UNet for processing. The pose guide is initialized with Gaussian weights and uses zero convolutions in the final mapping layer

Temporal layer

The design of the time layer is inspired by AnimateDiff. For a feature map x∈R^b×t×h×w×c, the researcher first deforms it into x∈R^(b×h×w)×t×c, and then performs temporal attention, that is, along Self-attention in dimension t. The features of the temporal layer are merged into the original features through residual connections. This design is consistent with the two-stage training method below. Temporal layers are used exclusively within the Res-Trans block of denoising UNet.

Training strategy

The training process is divided into two stages.

Rewritten content: In the first stage of training, a single video frame is used for training. In the denoising UNet model, the researchers temporarily excluded the temporal layer and took single-frame noise as input. At the same time, the reference network and attitude guide are also trained. Reference images are randomly selected from the entire video clip. They used pretrained weights to initialize the denoising UNet and ReferenceNet models. The pose guide is initialized with Gaussian weights, except for the final projection layer, which uses zero convolutions. The weights of the VAE encoder and decoder and the CLIP image encoder remain unchanged. The optimization goal of this stage is to generate high-quality animated images given the reference image and target pose

In the second stage, the researcher introduces the temporal layer into the previously trained model and initialize it using the pre-trained weights in AnimateDiff. The input to the model consists of a 24-frame video clip. At this stage, only the temporal layer is trained, while the weights of other parts of the network are fixed.

Experiments and results

Qualitative results: As shown in Figure 3, the method in this article can produce animations of any character, including full-body portraits and half-length portraits , cartoon characters and humanoid characters. This method is capable of producing high definition and realistic human details. It maintains temporal consistency with the reference image and exhibits temporal continuity from frame to frame even in the presence of large motions.

Fashion video synthesis. The goal of fashion video synthesis is to transform fashion photos into realistic animated videos using driven pose sequences. Experiments are conducted on the UBC Fashion Video Dataset, which consists of 500 training videos and 100 testing videos, each containing approximately 350 frames. Quantitative comparisons are shown in Table 1. It can be found in the results that the method in this paper is better than other methods, especially in video measurement indicators, showing a clear lead.

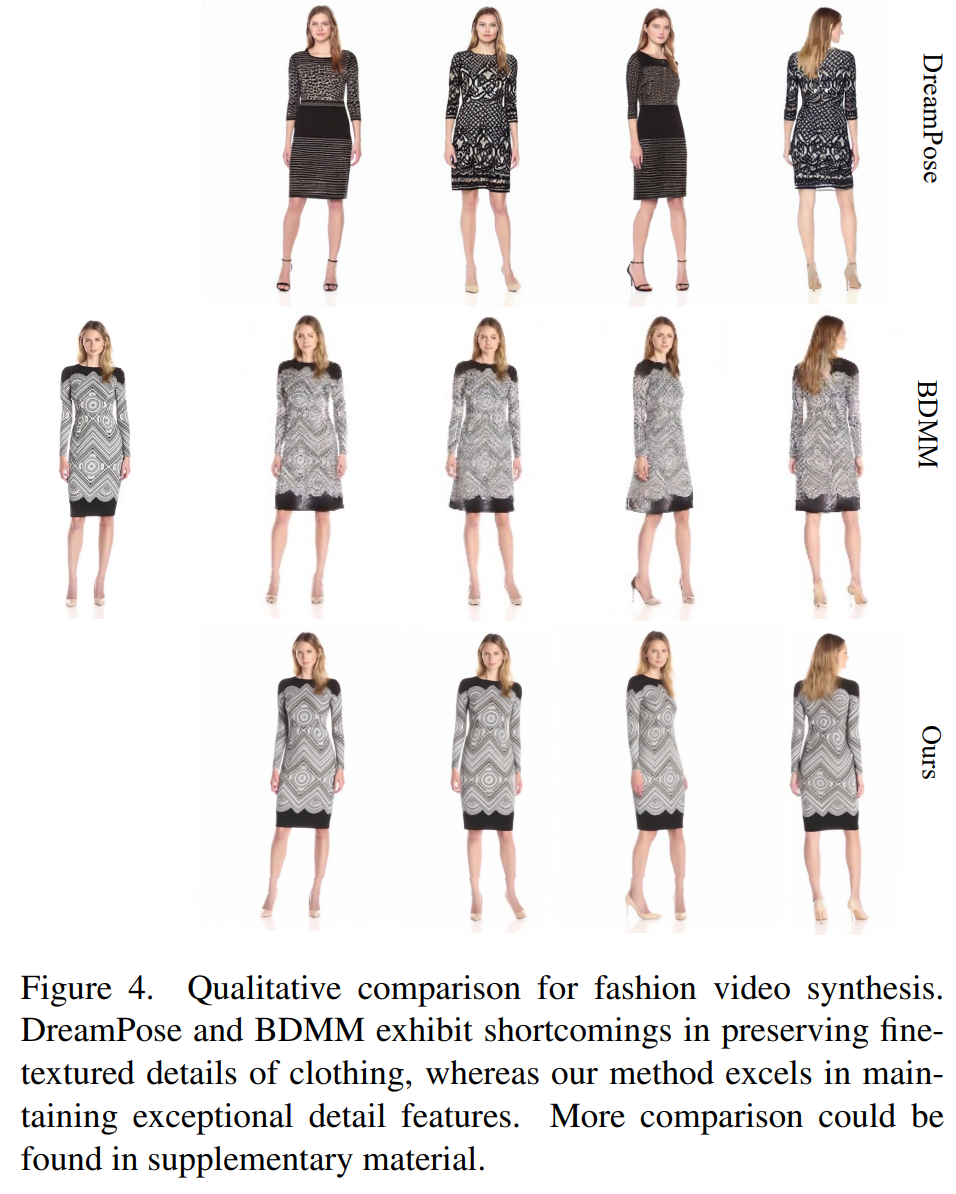

Qualitative comparison is shown in Figure 4. For a fair comparison, the researchers used DreamPose’s open source code to obtain results without sample fine-tuning. In the field of fashion videos, the requirements for clothing details are very strict. However, videos generated by DreamPose and BDMM fail to maintain consistency in clothing details and exhibit significant errors in color and fine structural elements. In contrast, the results generated by this method can more effectively maintain the consistency of clothing details.

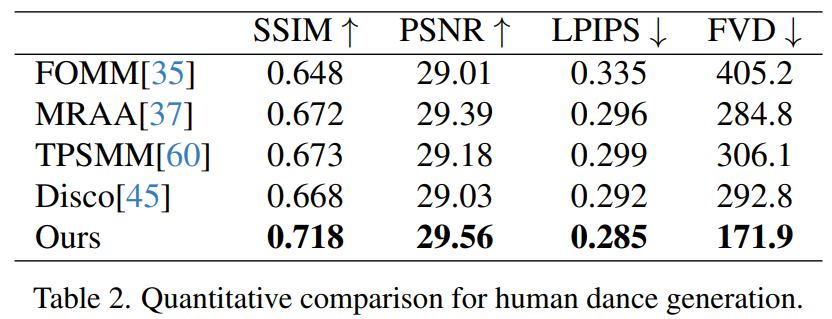

Human dance generation is a study that aims to generate humans by animating images of realistic dance scenes dance. The researchers used the TikTok data set, which includes 340 training videos and 100 test videos. They performed a quantitative comparison using the same test set, which included 10 TikTok-style videos, following DisCo's dataset partitioning method. As can be seen from Table 2, the method in this article achieves the best results. In order to enhance the generalization ability of the model, DisCo combines human attribute pre-training and uses a large number of image pairs to pre-train the model. In contrast, other researchers only trained on the TikTok data set, but the results were still better than DisCo

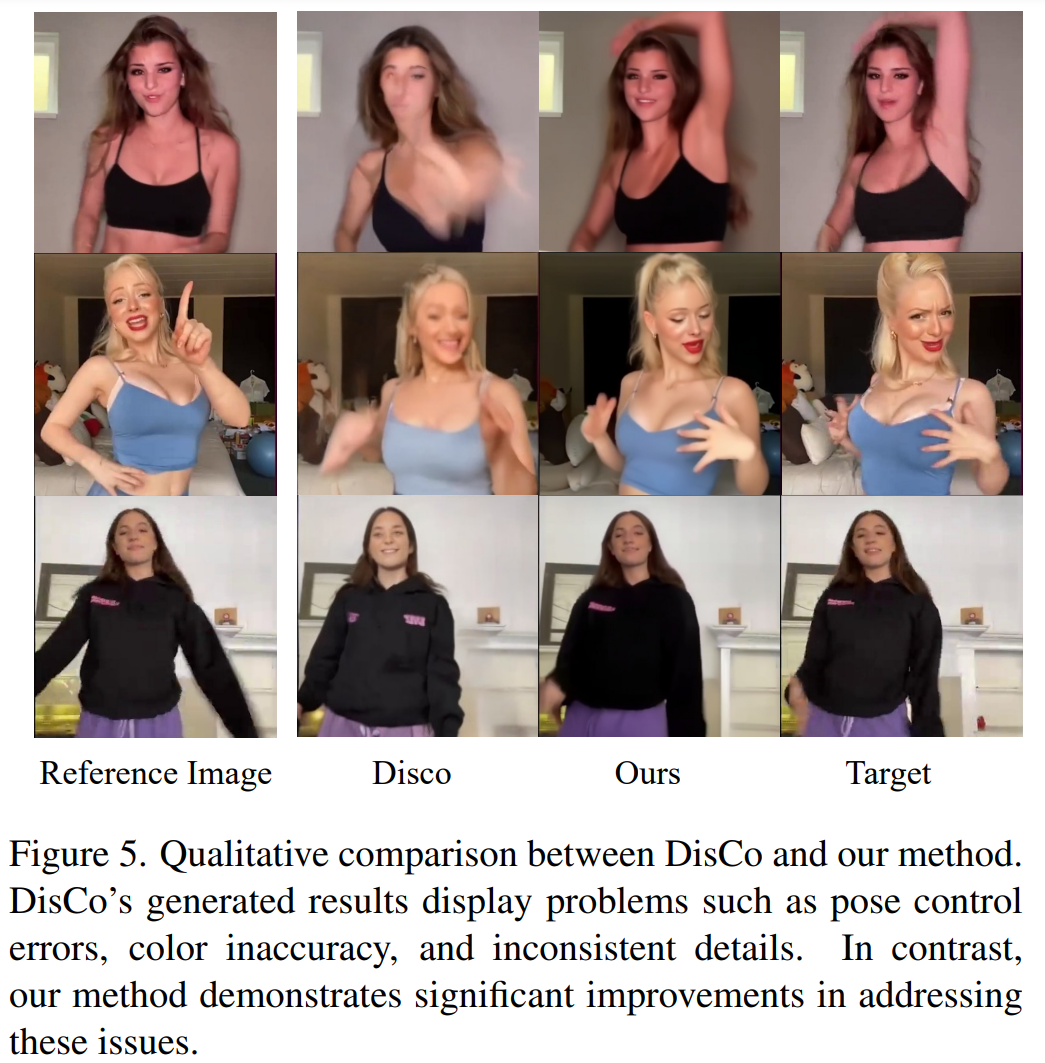

A qualitative comparison with DisCo is shown in Figure 5 . Considering the complexity of the scene, DisCo's method requires the additional use of SAM to generate human foreground masks. In contrast, our method shows that even without explicit human mask learning, the model can grasp the foreground-background relationship from the subject's motion without prior human segmentation. Furthermore, in complex dance sequences, the model excels at maintaining visual continuity throughout the action and shows greater robustness in handling different character appearances.

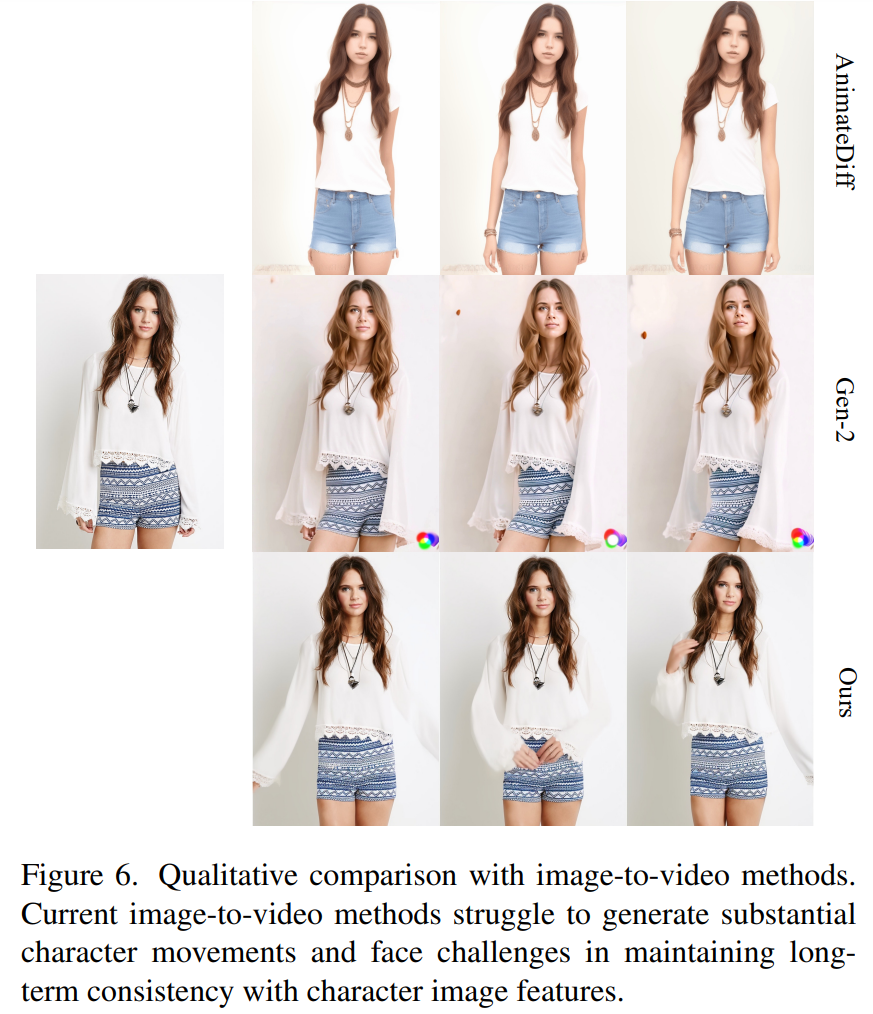

Image - Generic method for video. Currently, many studies have proposed video diffusion models with strong generation capabilities based on large-scale training data. The researchers chose two of the best-known and most effective image-video methods for comparison: AnimateDiff and Gen2. Since these two methods do not perform pose control, the researchers only compared their ability to maintain the appearance fidelity of the reference image. As shown in Figure 6, current image-to-video approaches face challenges in generating a large number of character actions and struggle to maintain long-term appearance consistency across videos, thus hindering effective support for consistent character animation.

Please consult the original paper for more information

The above is the detailed content of 'Subject Three' that attracts global attention: Messi, Iron Man, and two-dimensional ladies can handle it easily. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1384

1384

52

52

Centos shutdown command line

Apr 14, 2025 pm 09:12 PM

Centos shutdown command line

Apr 14, 2025 pm 09:12 PM

The CentOS shutdown command is shutdown, and the syntax is shutdown [Options] Time [Information]. Options include: -h Stop the system immediately; -P Turn off the power after shutdown; -r restart; -t Waiting time. Times can be specified as immediate (now), minutes ( minutes), or a specific time (hh:mm). Added information can be displayed in system messages.

How to check CentOS HDFS configuration

Apr 14, 2025 pm 07:21 PM

How to check CentOS HDFS configuration

Apr 14, 2025 pm 07:21 PM

Complete Guide to Checking HDFS Configuration in CentOS Systems This article will guide you how to effectively check the configuration and running status of HDFS on CentOS systems. The following steps will help you fully understand the setup and operation of HDFS. Verify Hadoop environment variable: First, make sure the Hadoop environment variable is set correctly. In the terminal, execute the following command to verify that Hadoop is installed and configured correctly: hadoopversion Check HDFS configuration file: The core configuration file of HDFS is located in the /etc/hadoop/conf/ directory, where core-site.xml and hdfs-site.xml are crucial. use

What are the backup methods for GitLab on CentOS

Apr 14, 2025 pm 05:33 PM

What are the backup methods for GitLab on CentOS

Apr 14, 2025 pm 05:33 PM

Backup and Recovery Policy of GitLab under CentOS System In order to ensure data security and recoverability, GitLab on CentOS provides a variety of backup methods. This article will introduce several common backup methods, configuration parameters and recovery processes in detail to help you establish a complete GitLab backup and recovery strategy. 1. Manual backup Use the gitlab-rakegitlab:backup:create command to execute manual backup. This command backs up key information such as GitLab repository, database, users, user groups, keys, and permissions. The default backup file is stored in the /var/opt/gitlab/backups directory. You can modify /etc/gitlab

How is the GPU support for PyTorch on CentOS

Apr 14, 2025 pm 06:48 PM

How is the GPU support for PyTorch on CentOS

Apr 14, 2025 pm 06:48 PM

Enable PyTorch GPU acceleration on CentOS system requires the installation of CUDA, cuDNN and GPU versions of PyTorch. The following steps will guide you through the process: CUDA and cuDNN installation determine CUDA version compatibility: Use the nvidia-smi command to view the CUDA version supported by your NVIDIA graphics card. For example, your MX450 graphics card may support CUDA11.1 or higher. Download and install CUDAToolkit: Visit the official website of NVIDIACUDAToolkit and download and install the corresponding version according to the highest CUDA version supported by your graphics card. Install cuDNN library:

Detailed explanation of docker principle

Apr 14, 2025 pm 11:57 PM

Detailed explanation of docker principle

Apr 14, 2025 pm 11:57 PM

Docker uses Linux kernel features to provide an efficient and isolated application running environment. Its working principle is as follows: 1. The mirror is used as a read-only template, which contains everything you need to run the application; 2. The Union File System (UnionFS) stacks multiple file systems, only storing the differences, saving space and speeding up; 3. The daemon manages the mirrors and containers, and the client uses them for interaction; 4. Namespaces and cgroups implement container isolation and resource limitations; 5. Multiple network modes support container interconnection. Only by understanding these core concepts can you better utilize Docker.

Centos install mysql

Apr 14, 2025 pm 08:09 PM

Centos install mysql

Apr 14, 2025 pm 08:09 PM

Installing MySQL on CentOS involves the following steps: Adding the appropriate MySQL yum source. Execute the yum install mysql-server command to install the MySQL server. Use the mysql_secure_installation command to make security settings, such as setting the root user password. Customize the MySQL configuration file as needed. Tune MySQL parameters and optimize databases for performance.

How to view GitLab logs under CentOS

Apr 14, 2025 pm 06:18 PM

How to view GitLab logs under CentOS

Apr 14, 2025 pm 06:18 PM

A complete guide to viewing GitLab logs under CentOS system This article will guide you how to view various GitLab logs in CentOS system, including main logs, exception logs, and other related logs. Please note that the log file path may vary depending on the GitLab version and installation method. If the following path does not exist, please check the GitLab installation directory and configuration files. 1. View the main GitLab log Use the following command to view the main log file of the GitLabRails application: Command: sudocat/var/log/gitlab/gitlab-rails/production.log This command will display product

How to operate distributed training of PyTorch on CentOS

Apr 14, 2025 pm 06:36 PM

How to operate distributed training of PyTorch on CentOS

Apr 14, 2025 pm 06:36 PM

PyTorch distributed training on CentOS system requires the following steps: PyTorch installation: The premise is that Python and pip are installed in CentOS system. Depending on your CUDA version, get the appropriate installation command from the PyTorch official website. For CPU-only training, you can use the following command: pipinstalltorchtorchvisiontorchaudio If you need GPU support, make sure that the corresponding version of CUDA and cuDNN are installed and use the corresponding PyTorch version for installation. Distributed environment configuration: Distributed training usually requires multiple machines or single-machine multiple GPUs. Place