Technology peripherals

Technology peripherals

AI

AI

Apple builds an open source framework MLX for its own chips, implements Llama 7B and runs it on M2 Ultra

Apple builds an open source framework MLX for its own chips, implements Llama 7B and runs it on M2 Ultra

Apple builds an open source framework MLX for its own chips, implements Llama 7B and runs it on M2 Ultra

In November 2020, Apple launched the M1 chip, which was astonishingly fast and powerful. Apple will launch M2 in 2022, and in October this year, the M3 chip will officially debut.

When Apple releases its chips, it attaches great importance to its AI model training and deployment capabilities

The ML Compute launched by Apple can be used on Mac The TensorFlow model is trained on. PyTorch supports GPU-accelerated PyTorch machine learning model training on the M1 version of Mac, using Apple Metal Performance Shaders (MPS) as the backend. These enable Mac users to train neural networks locally.

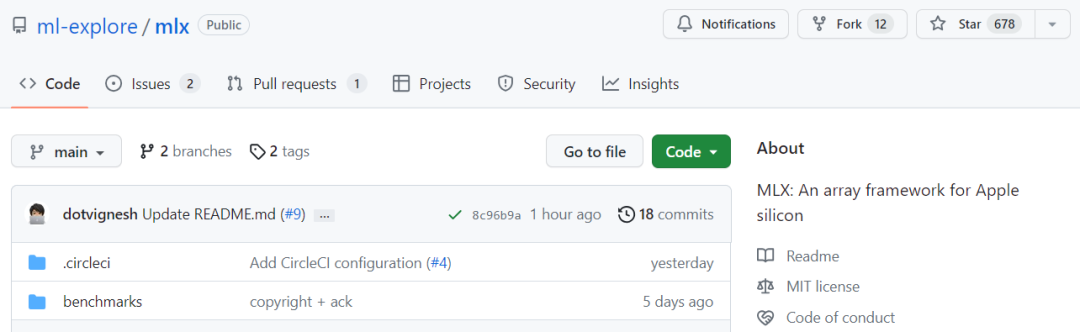

Apple announced the launch of an open source array framework specifically for machine learning, which will run on Apple chips and is called MLX

MLX is a framework specifically designed for machine learning researchers to efficiently train and deploy AI models. The design concept of this framework is simple and easy to understand. Researchers can easily extend and improve MLX to quickly explore and test new ideas. The design of MLX is inspired by frameworks such as NumPy, PyTorch, Jax and ArrayFire

Project address: https://github .com/ml-explore/mlx

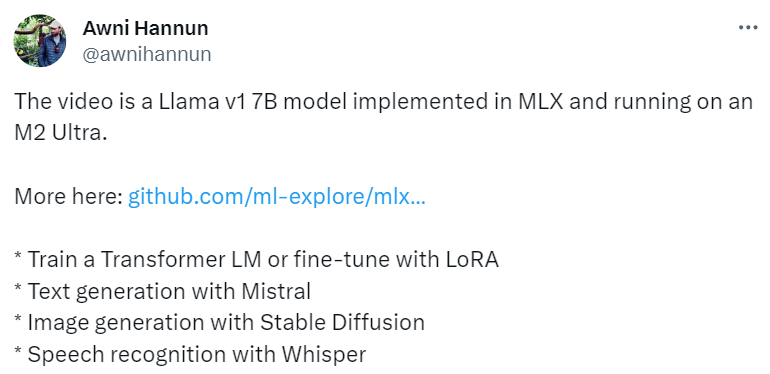

One of the MLX project contributors and Apple Machine Learning Research Team (MLR) research scientist Awni Hannun demonstrated a section using the MLX framework to implement Llama 7B and Video running on M2 Ultra.

MLX quickly attracted the attention of machine learning researchers. Chen Tianqi, author of TVM, MXNET and XGBoost, assistant professor at CMU and CTO of OctoML, retweeted: "Apple chips have a new deep learning framework."

Some people think that Apple has "repeated the same mistakes" again. This is an evaluation of MLX

In order to keep the original meaning unchanged, the content needs to be rewritten into Chinese. The original sentence does not need to appear

MLX features and examples

In this project, we can observe that MLX has the following main features

Familiar API. MLX has a Python API that is very NumPy-like, as well as a full-featured C API that is very similar to the Python API. MLX also has more advanced packages (such as mlx.nn and mlx.optimizers) whose APIs are very similar to PyTorch and can simplify building more complex models.

Combinable function transformation. MLX features composable function transformations with automatic differentiation, automatic vectorization, and computational graph optimization.

Lazy calculation. Computation in MLX is lazy and arrays are instantiated only when needed.

Dynamic graph construction. The calculation graph construction in MLX is dynamic, changing the shape of function parameters will not cause compilation to slow down, and debugging is simple and easy to use.

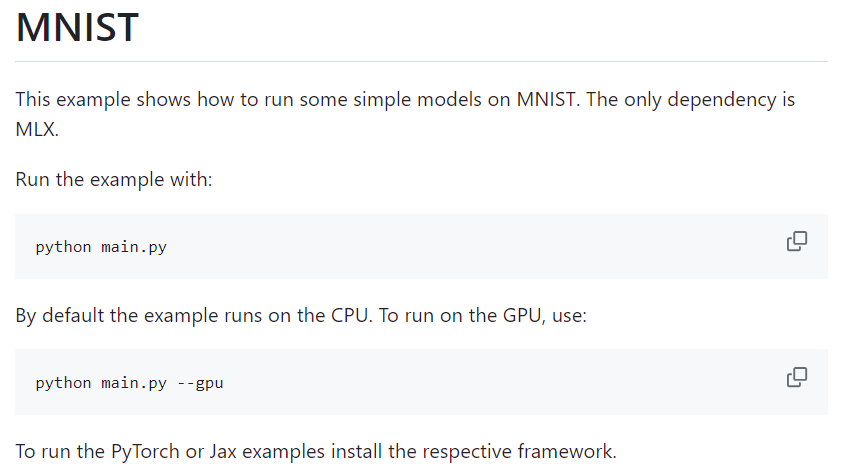

Multiple devices. Operations can be run on any supported device such as CPU and GPU.

Unified Memory. The significant difference between MLX and other frameworks is unified memory, array shared memory. Operations on MLX can run on any supported device type without moving data.

In addition, the project provides a variety of examples of using the MLX framework, such as the MNIST example, which can well help you learn how to use MLX

Image source: https://github.com/ml-explore/mlx-examples/tree/main/mnist

In addition to the above examples , MLX also provides other more practical examples, such as:

- Transformer language model training;

- LLaMA large-scale text generation and LoRA fine-tuning;

- Stable Diffusion generation Image;

- OpenAI’s Whisper speech recognition.

For more detailed documentation, please refer to: https://ml-explore.github.io/mlx/build/html/install.html

#The above is the detailed content of Apple builds an open source framework MLX for its own chips, implements Llama 7B and runs it on M2 Ultra. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1387

1387

52

52

deepseek ios version download and installation tutorial

Feb 19, 2025 pm 04:00 PM

deepseek ios version download and installation tutorial

Feb 19, 2025 pm 04:00 PM

DeepSeek Smart AI Tool Download and Installation Guide (Apple Users) DeepSeek is a powerful AI tool. This article will guide Apple users how to download and install it. 1. Download and install steps: Open the AppStore app store and enter "DeepSeek" in the search bar. Carefully check the application name and developer information to ensure the correct version is downloaded. Click the "Get" button on the application details page. The first download may require AppleID password verification. After the download is completed, you can open it directly. 2. Registration process: Find the login/registration portal in the DeepSeek application. It is recommended to register with a mobile phone number. Enter your mobile phone number and receive the verification code. Check the user agreement,

Why can't the Bybit exchange link be directly downloaded and installed?

Feb 21, 2025 pm 10:57 PM

Why can't the Bybit exchange link be directly downloaded and installed?

Feb 21, 2025 pm 10:57 PM

Why can’t the Bybit exchange link be directly downloaded and installed? Bybit is a cryptocurrency exchange that provides trading services to users. The exchange's mobile apps cannot be downloaded directly through AppStore or GooglePlay for the following reasons: 1. App Store policy restricts Apple and Google from having strict requirements on the types of applications allowed in the app store. Cryptocurrency exchange applications often do not meet these requirements because they involve financial services and require specific regulations and security standards. 2. Laws and regulations Compliance In many countries, activities related to cryptocurrency transactions are regulated or restricted. To comply with these regulations, Bybit Application can only be used through official websites or other authorized channels

Sesame Open Door Trading Platform Download Mobile Version Gateio Trading Platform Download Address

Feb 28, 2025 am 10:51 AM

Sesame Open Door Trading Platform Download Mobile Version Gateio Trading Platform Download Address

Feb 28, 2025 am 10:51 AM

It is crucial to choose a formal channel to download the app and ensure the safety of your account.

gate.io sesame door download Chinese tutorial

Feb 28, 2025 am 10:54 AM

gate.io sesame door download Chinese tutorial

Feb 28, 2025 am 10:54 AM

This article will guide you in detail how to access the official website of Gate.io, switch Chinese language, register or log in to your account, as well as optional mobile app download and use procedures, helping you easily get started with the Gate.io exchange. For more tutorials on using Gate.io in Chinese, please continue reading.

Sesame Open Door Exchange App Official Download Sesame Open Door Exchange Official Download

Mar 04, 2025 pm 11:54 PM

Sesame Open Door Exchange App Official Download Sesame Open Door Exchange Official Download

Mar 04, 2025 pm 11:54 PM

The official download steps of the Sesame Open Exchange app cover the Android and iOS system download process, as well as common problems solutions, helping you download safely and quickly and enable convenient transactions of cryptocurrencies.

Is there any mobile app that can convert XML into PDF?

Apr 02, 2025 pm 08:54 PM

Is there any mobile app that can convert XML into PDF?

Apr 02, 2025 pm 08:54 PM

An application that converts XML directly to PDF cannot be found because they are two fundamentally different formats. XML is used to store data, while PDF is used to display documents. To complete the transformation, you can use programming languages and libraries such as Python and ReportLab to parse XML data and generate PDF documents.

How to download gate exchange Apple mobile phone Gate.io Apple mobile phone download guide

Mar 04, 2025 pm 09:51 PM

How to download gate exchange Apple mobile phone Gate.io Apple mobile phone download guide

Mar 04, 2025 pm 09:51 PM

Gate.io Apple mobile phone download guide: 1. Visit the official Gate.io website; 2. Click "Use Apps"; 3. Select "App"; 4. Download the App Store; 5. Install and allow permissions; 6. Register or log in; 7. Complete KYC verification; 8. Deposit; 9. Transaction of cryptocurrency; 10. Withdrawal.

Compilation and installation of Redis on Apple M1 chip Mac failed. How to troubleshoot PHP7.3 compilation errors?

Mar 31, 2025 pm 11:39 PM

Compilation and installation of Redis on Apple M1 chip Mac failed. How to troubleshoot PHP7.3 compilation errors?

Mar 31, 2025 pm 11:39 PM

Problems and solutions encountered when compiling and installing Redis on Apple M1 chip Mac, many users may...