Mamba's popular SSM draws the interest of Apple and Cornell: abandoning the attention module

The latest research from Cornell University and Apple concludes that the attention mechanism can be eliminated in order to generate high-resolution images with less compute.

The attention mechanism is the core component of the Transformer architecture and is crucial for high-quality text and image generation. Its flaw is equally well known: its computational cost grows quadratically with sequence length, which is a serious problem when processing long text and high-resolution images.

To solve this problem, the new research replaces the attention mechanism in the traditional architecture with a more scalable state space model (SSM) backbone, yielding a new architecture called the Diffusion State Space Model (DIFFUSSM). With less compute, this architecture matches or exceeds the image generation quality of existing diffusion models that use attention modules, and it generates high-resolution images well.

Thanks to the release of "Mamba" last week, state space models (SSMs) are receiving more and more attention. The core of Mamba is a new architecture, the "selective state space model", which makes Mamba comparable to or even better than Transformers in language modeling. At the time, paper author Albert Gu said that Mamba's success gave him confidence in the future of SSMs. Now, this paper from Cornell University and Apple adds another example of the application prospects of SSMs.

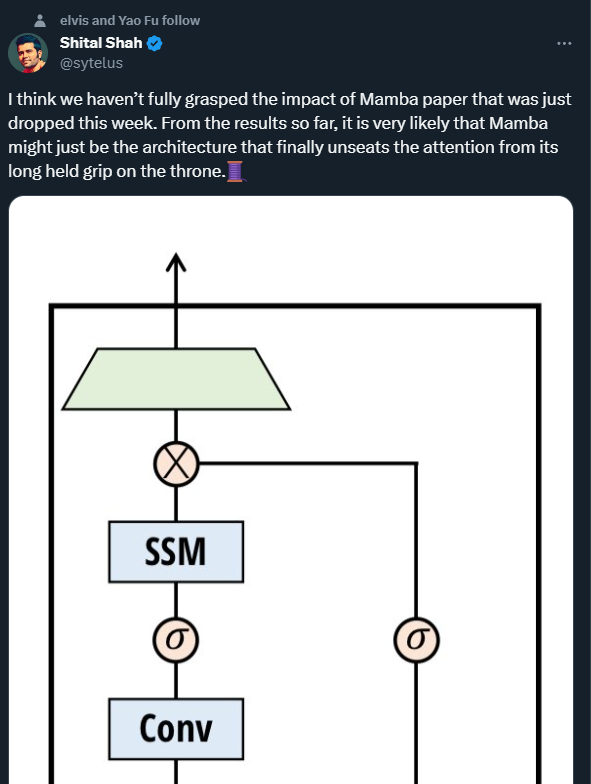

Microsoft principal research engineer Shital Shah warned that the attention mechanism may be pulled off the throne it has been sitting on for a long time.

Paper Overview

The rapid progress in image generation owes much to denoising diffusion probabilistic models (DDPMs). These models treat generation as the iterative denoising of latent variables, and given enough denoising steps they can produce high-fidelity samples. The ability of DDPMs to capture complex visual distributions makes them promising for high-resolution, photorealistic synthesis.

Significant computational challenges remain in scaling DDPMs to higher resolutions. The main bottleneck is the reliance on self-attention for high-fidelity generation. In U-Net architectures, this bottleneck comes from combining ResNets with attention layers: DDPMs surpass generative adversarial networks (GANs), but they require multi-head attention layers to do so. In Transformer architectures, attention is the central component and is therefore critical to state-of-the-art image synthesis results. In both architectures, the cost of attention scales quadratically with sequence length, so it becomes infeasible for high-resolution images.

This computational cost has pushed previous researchers toward representation compression. High-resolution architectures often employ patchification or multi-scale resolution. Patchification creates coarse-grained representations and reduces compute, but at the expense of critical high-frequency spatial information and structural integrity. Multi-scale resolution reduces the computation in the attention layers, but it also loses spatial detail through downsampling and introduces artifacts when upsampling is applied.

DIFFUSSM is a diffusion state space model that uses no attention mechanism and is designed to address the problems attention causes in high-resolution image synthesis. DIFFUSSM uses a gated state space model (SSM) in the diffusion process. Previous studies have shown that SSM-based sequence models are effective and efficient general-purpose neural sequence models. With this architecture, the SSM core can process finer-grained image representations, eliminating global patchification and multi-scale layers. To further improve efficiency, DIFFUSSM adopts an hourglass architecture in the dense components of the network.

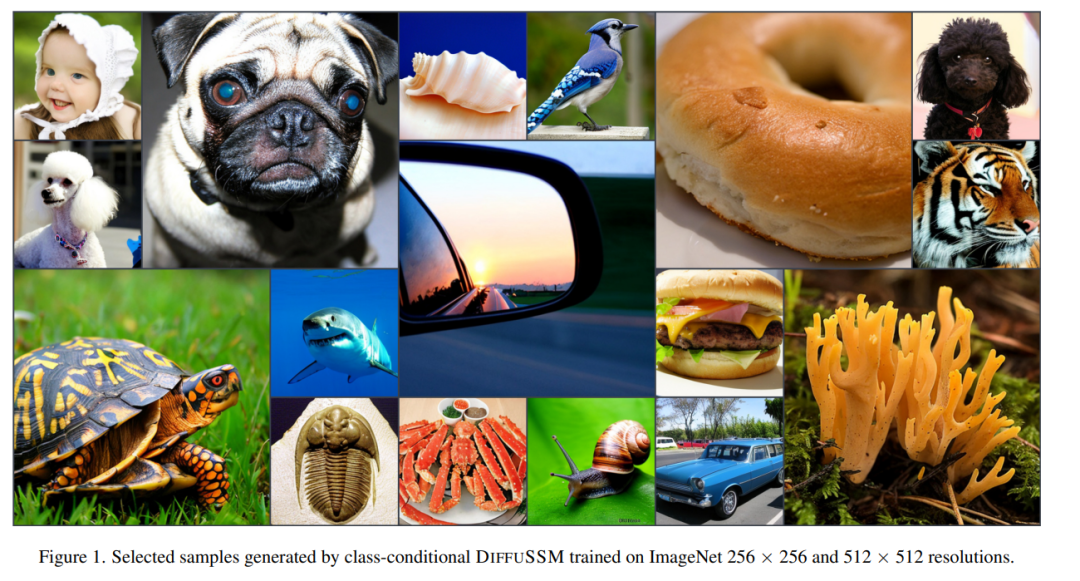

The authors verified the performance of DIFFUSSM at different resolutions. Experiments on ImageNet demonstrate that DIFFUSSM achieves consistent improvements in FID, sFID, and Inception Score at various resolutions with fewer total Gflops.

Paper link: https://arxiv.org/pdf/2311.18257.pdf

DIFFUSSM Framework

The authors' goal was to design a diffusion architecture capable of learning long-range interactions at high resolution without resorting to "length reduction" such as patchification. Similar to DiT, the approach flattens the image and treats generation as a sequence modeling problem. Unlike a Transformer, however, it uses computation that is sub-quadratic in the length of this sequence.
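To make "flattening" and "length reduction" concrete, here is a minimal NumPy sketch. It is an illustration only, not the authors' code: the helper name `flatten_to_sequence` and the 64×64×4 latent size are assumptions. It shows how patchification shortens the sequence by grouping neighboring positions into one token, and how much a pairwise (attention-style) cost grows when every position is kept.

```python
import numpy as np

def flatten_to_sequence(latent, patch=1):
    """Flatten an (H, W, C) array into an (L, D) token sequence.

    patch=1 keeps every spatial position as its own token;
    patch>1 groups each patch x patch block into one token (patchification).
    Hypothetical helper for illustration.
    """
    h, w, c = latent.shape
    assert h % patch == 0 and w % patch == 0, "patch must divide H and W"
    # Split both spatial axes into (blocks, within-block) pairs.
    x = latent.reshape(h // patch, patch, w // patch, patch, c)
    # Bring the two block axes to the front, then merge them into L.
    x = x.transpose(0, 2, 1, 3, 4)
    return x.reshape((h // patch) * (w // patch), patch * patch * c)

latent = np.zeros((64, 64, 4))  # assumed latent size, for illustration only
full = flatten_to_sequence(latent, patch=1)     # no length reduction
patched = flatten_to_sequence(latent, patch=2)  # 2x2 patchification

print(full.shape, patched.shape)
# A cost that is quadratic in L grows by this factor without patchification:
print((full.shape[0] / patched.shape[0]) ** 2)
```

Keeping every position quadruples the sequence length relative to 2×2 patches, so any cost quadratic in the length grows sixteenfold, which is why a sub-quadratic sequence model matters here.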

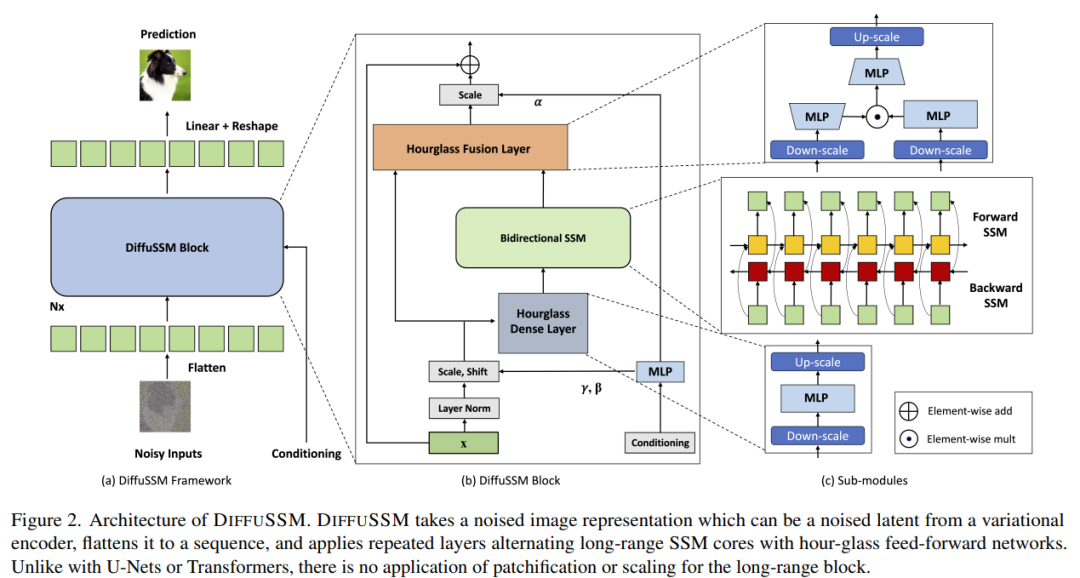

The core component of DIFFUSSM is a gated bidirectional SSM optimized for processing long sequences. To improve efficiency, the authors introduce an hourglass architecture in the MLP layers. This design alternately expands and contracts the sequence length around the bidirectional SSM, while selectively reducing the sequence length in the MLP. The complete model architecture is shown in Figure 2.

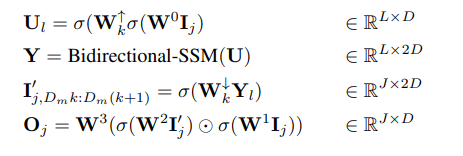

Specifically, each hourglass layer receives a shortened and flattened input sequence I ∈ R^(J×D), where M = L/J is the ratio of reduction and enlargement. At the same time, the entire block, including the bidirectional SSM, is computed at the original length, taking full advantage of the global context. σ denotes the activation function. For l ∈ {1 . . . L}, where j = ⌊l/M⌋, m = l mod M, and D_m = 2D/M, the computation is as follows:

The authors wrap the gated SSM block in a skip connection in each layer, and they integrate a combination of the class label y ∈ R^(L×1) and the time step t ∈ R^(L×1) at each position, as shown in Figure 2.

Parameters: The number of parameters in a DIFFUSSM block is mainly determined by the linear transformations W, which contain 9D^2 + 2MD^2 parameters. When M = 2, this yields 13D^2 parameters. A DiT Transformer block has 12D^2 parameters in its core transformation layers; however, the DiT architecture has many more parameters in other layer components (adaptive layer normalization). The researchers matched parameter counts in their experiments by using additional DIFFUSSM layers.
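The parameter arithmetic above can be checked with a few lines of Python. This is only a sketch of the counts quoted in the text, assuming the DIFFUSSM count reads 9D^2 + 2MD^2 (which is what gives 13D^2 at M = 2); the function names and the width D = 1024 are hypothetical.

```python
def diffussm_block_params(d, m):
    """Linear-transform parameters in one DIFFUSSM block: 9*D^2 + 2*M*D^2."""
    return 9 * d**2 + 2 * m * d**2

def dit_core_params(d):
    """Parameters in the core transformation layers of one DiT block: 12*D^2.

    Excludes the adaptive layer normalization parameters mentioned in the text.
    """
    return 12 * d**2

D = 1024  # assumed hidden width, for illustration only
M = 2     # reduction/enlargement ratio used in the text

ssm = diffussm_block_params(D, M)
dit = dit_core_params(D)
print(ssm // D**2, dit // D**2)  # counts expressed in units of D^2
```

The per-block counts are close (13D^2 against 12D^2), which is consistent with the statement that the remaining gap to DiT comes from other components and was closed by adding DIFFUSSM layers.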

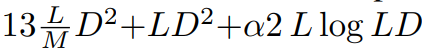

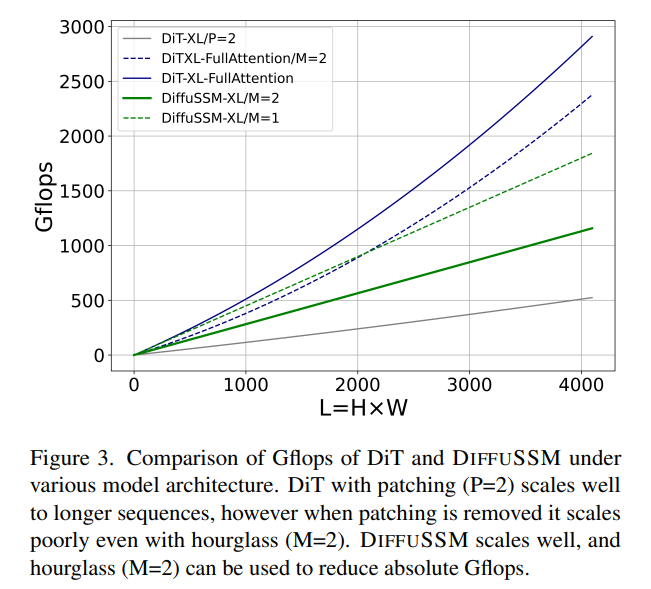

FLOPs: Figure 3 compares the Gflops of DiT and DIFFUSSM. The total FLOPs of one DIFFUSSM layer combine the cost of the linear layers with the cost of the FFT-based SSM, where α denotes the constant of the FFT implementation. When M = 2 and the linear layers dominate the computation, this comes to approximately 7.5LD^2 FLOPs. By comparison, using full-length self-attention instead of the SSM in this hourglass architecture would add another 2DL^2 FLOPs.

Consider two experimental scenarios: 1) D ≈ L = 1024, where attention would add 2LD^2 FLOPs, and 2) 4D ≈ L = 4096, where it would add 8LD^2 FLOPs and significantly increase the cost. Because the core cost of the bidirectional SSM is small relative to the cost of attention, the hourglass architecture pays off for the SSM but would not work for attention-based models. As discussed earlier, DiT avoids these problems by using patchification, at the expense of a compressed representation.
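The two scenarios follow directly from the extra 2DL^2 attention term. The short Python check below expresses that term in units of LD^2 so it can be compared with the roughly 7.5LD^2 quoted for a DIFFUSSM layer at M = 2. The function names are hypothetical, and D = 1024 in the second scenario is an assumption derived from 4D ≈ L = 4096.

```python
def extra_attention_flops(l, d):
    """Additional FLOPs if full-length self-attention replaced the SSM: 2*D*L^2."""
    return 2 * d * l**2

def in_units_of_ld2(flops, l, d):
    """Express a FLOP count as a multiple of L*D^2."""
    return flops / (l * d**2)

BASELINE = 7.5  # approximate DIFFUSSM layer cost in units of L*D^2 at M = 2

# Scenario 1: D = L = 1024. Scenario 2: L = 4096 with D = 1024 (so 4D = L).
for l, d in [(1024, 1024), (4096, 1024)]:
    extra = in_units_of_ld2(extra_attention_flops(l, d), l, d)
    print(f"L={l}, D={d}: attention adds {extra:.1f} LD^2 on top of ~{BASELINE} LD^2")
```

In the second scenario the attention term alone exceeds the whole 7.5LD^2 layer budget, which illustrates why full-length attention is impractical at that sequence length.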

Experimental results

Class-conditional image generation

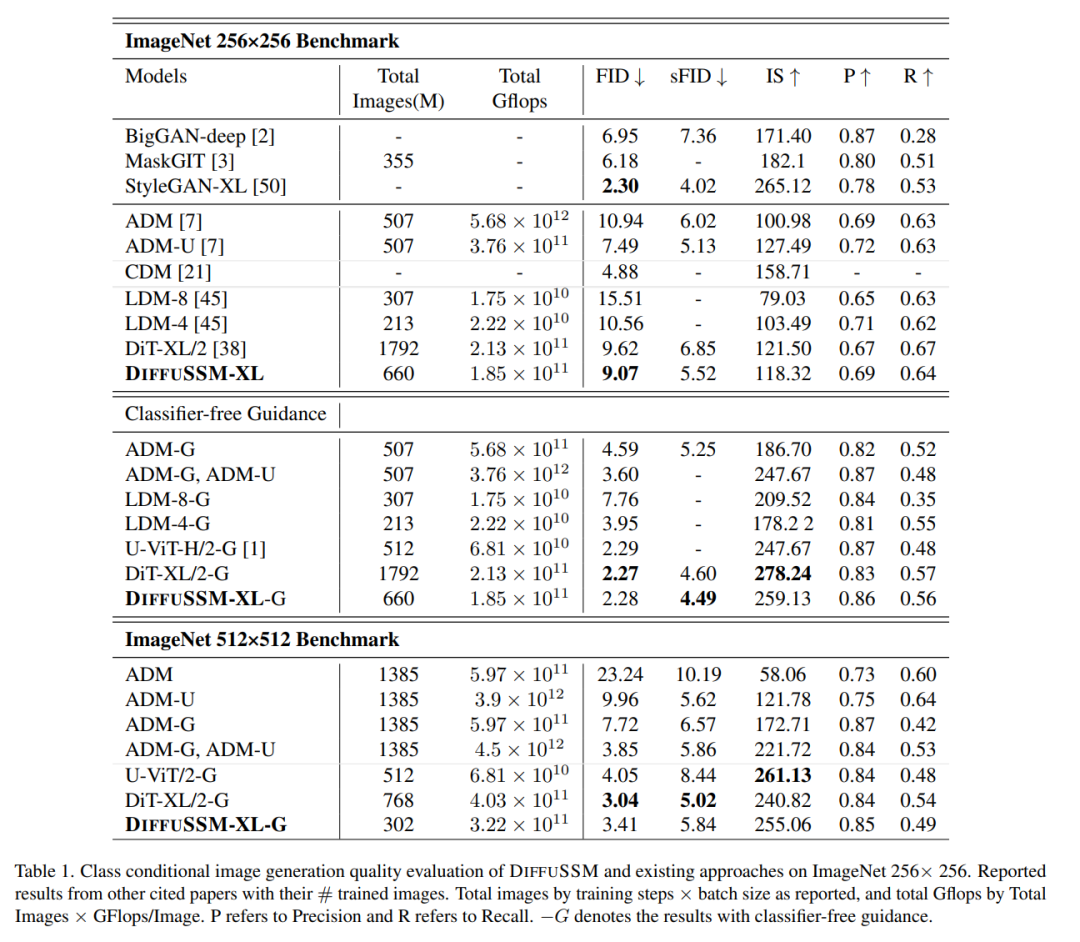

The table below compares DIFFUSSM with current state-of-the-art class-conditional generation models.

Without classifier-free guidance, DIFFUSSM outperforms other diffusion models in both FID and sFID, lowering the best previous score of a latent diffusion model without classifier-free guidance from 9.62 to 9.07 while cutting the number of training steps to about 1/3 of the original. In terms of total training Gflops, the uncompressed model uses 20% fewer total Gflops than DiT. When classifier-free guidance is introduced, the model achieves the best sFID score among all DDPM-based models, outperforming other state-of-the-art strategies, which indicates that images generated by DIFFUSSM are more robust to spatial distortion.

With classifier-free guidance, DIFFUSSM's FID score surpasses those of the other models and stays within a small gap (0.01) of DiT. Note that DIFFUSSM, trained with a 30% reduction in total Gflops, already outperforms DiT when classifier-free guidance is not applied. U-ViT is another Transformer-based architecture, but it uses a UNet-style design with long skip connections between blocks. U-ViT uses fewer FLOPs and performs better at 256×256 resolution, but this is not the case at 512×512. Because the authors mainly compare against DiT, they do not adopt these long skip connections, for the sake of fairness. The authors believe that adopting the idea from U-ViT may benefit both DiT and DIFFUSSM.

The authors further compare on higher-resolution benchmarks using classifier-free guidance. DIFFUSSM's results are relatively strong and close to state-of-the-art high-resolution models: it is inferior to DiT only on sFID and achieves a comparable FID score. DIFFUSSM was trained on 302 million images, seeing 40% of the images and using 25% fewer Gflops than DiT.

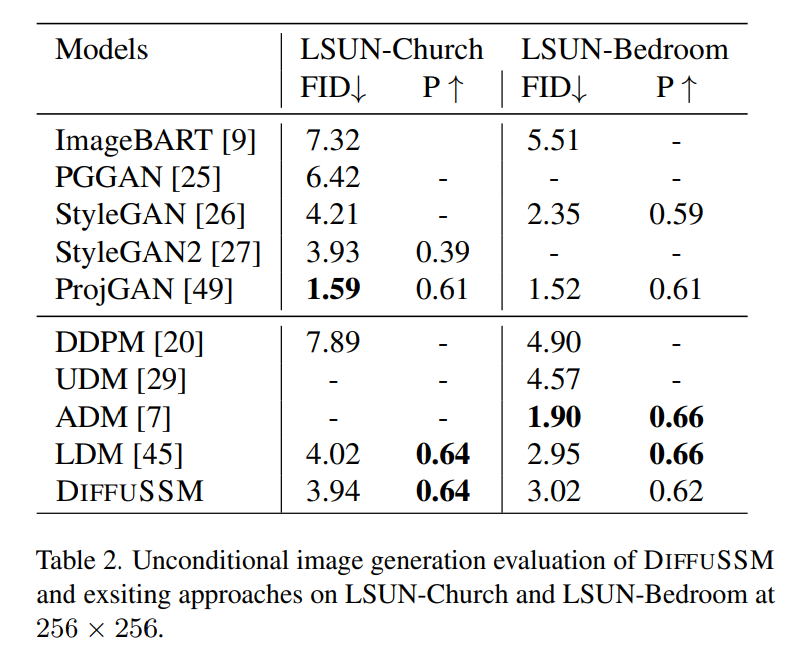

Unconditional image generation

Table 2 shows the authors' comparison of the models' unconditional image generation capabilities. The authors found that DIFFUSSM achieves FID scores comparable to LDM (differences of -0.08 and 0.07) under a comparable training budget. This result highlights the applicability of DIFFUSSM across different benchmarks and different tasks. Like LDM, the method does not outperform ADM on the LSUN-Bedrooms task, since it uses only 25% of ADM's total training budget. On this task, the best GAN models outperform diffusion models as a model class.

Please refer to the original paper for more details.
