This article summarizes the classic methods and effect comparison of feature enhancement & personalization in CTR estimation.

In CTR estimation, the mainstream approach is feature embedding + MLP, in which the features are critical. However, a given feature has the same representation in every sample, and feeding these identical representations to the downstream model limits the model's expressive ability.

To solve this problem, the CTR estimation field has proposed a series of works known as feature enhancement modules (called feature refinement modules in the paper below). A feature enhancement module adjusts the output of the embedding layer for each sample, adapting the feature representations to the sample at hand and improving the model's expressive ability.

Recently, Fudan University and Microsoft Research Asia jointly released a survey of feature enhancement work, comparing how different feature enhancement modules are implemented and how well they perform. Below, we introduce the implementation of several feature enhancement modules, as well as the comparative experiments conducted in the paper.

Title of the paper: A Comprehensive Summarization and Evaluation of Feature Refinement Modules for CTR Prediction

Download address: https://arxiv.org/pdf/2311.04625v1.pdf

1. The modeling idea behind feature enhancement

The feature enhancement module is designed to improve the expressive ability of the embedding layer in CTR prediction models, so that the same feature can have different representations in different samples. All such modules can be written in one unified form: the original embeddings of a sample are fed into a refinement function, which outputs the personalized embeddings of that sample.
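
Written as a formula (the symbols here are our own notation, assumed for illustration rather than copied from the paper): for a sample with f fields and embedding size d, stack the original embeddings into E and let F be the refinement function.

$$
\mathbf{E} = [\mathbf{e}_1, \mathbf{e}_2, \dots, \mathbf{e}_f] \in \mathbb{R}^{f \times d},
\qquad
\mathbf{E}^{r} = \mathcal{F}(\mathbf{E}) = [\mathbf{e}^{r}_1, \mathbf{e}^{r}_2, \dots, \mathbf{e}^{r}_f] \in \mathbb{R}^{f \times d}
$$

Because F takes the whole sample as input, the refined embedding of a feature can differ from sample to sample even when its original embedding is identical.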

The general idea is that, after obtaining the initial embedding of each feature, the module uses the representation of the sample itself to transform each feature embedding, yielding the personalized embedding for the current sample. Below we introduce several classic feature enhancement modules.
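
To make the idea concrete, here is a toy sketch in plain Python. The refinement rule (a sigmoid gate computed from the dot product between a field and the mean of the other fields in the same sample) is a hypothetical example chosen for brevity, not one of the published modules, and the feature names are made up. It only demonstrates the key property: the same raw embedding yields different refined embeddings in different samples.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def refine(E: list[list[float]]) -> list[list[float]]:
    """Toy refinement F(E): scale each field embedding e_i by a gate that
    depends on the other fields of the same sample (hypothetical rule)."""
    if len(E) < 2:
        raise ValueError("need at least two fields to form a context")
    d = len(E[0])
    refined = []
    for i, e in enumerate(E):
        others = [E[j] for j in range(len(E)) if j != i]
        # Context: mean of the other fields' embeddings in this sample.
        ctx = [sum(v[k] for v in others) / len(others) for k in range(d)]
        # Sample-dependent scalar gate in (0, 1).
        gate = sigmoid(sum(a * b for a, b in zip(e, ctx)))
        refined.append([gate * x for x in e])
    return refined


# The raw embedding of feature "city=Beijing" is shared by both samples ...
city = [0.5, -0.2, 0.1]
sample_a = [city, [1.0, 0.0, 0.3]]   # paired with item category A
sample_b = [city, [-1.0, 0.4, 0.0]]  # paired with item category B

# ... but its refined embedding differs because the context differs.
print(refine(sample_a)[0])
print(refine(sample_b)[0])
```

Each module below can be read as a different, learned choice of the refinement function.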

2. Classic feature enhancement methods

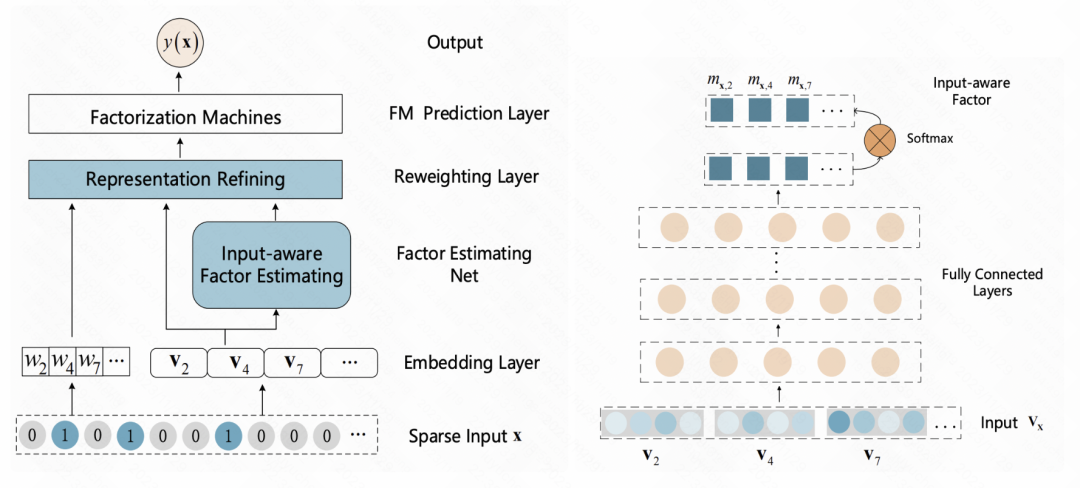

An Input-aware Factorization Machine for Sparse Prediction (IJCAI 2019) adds a reweighting layer after the embedding layer. The initial embeddings of the sample are fed into an MLP to obtain a vector representing the sample, which is normalized with softmax. Each element of the softmax output corresponds to one feature and represents that feature's importance. Multiplying each softmax element by the initial embedding of the corresponding feature weights the feature embeddings at sample granularity.
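
A minimal sketch of the reweighting layer described above, in plain Python so it runs without extra packages. Assumptions not taken from the paper: a one-hidden-layer ReLU MLP, no bias terms, small Gaussian initialization, and a plain softmax over fields; the class name `InputAwareReweight` is hypothetical.

```python
import math
import random


def linear(W, x):
    """Matrix-vector product W @ x, with W given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relu(v):
    return [max(0.0, x) for x in v]


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [v / total for v in exps]


def rand_matrix(rng, rows, cols, std=0.1):
    return [[rng.gauss(0.0, std) for _ in range(cols)] for _ in range(rows)]


class InputAwareReweight:
    """IFM-style sketch: concatenated embeddings -> MLP -> softmax over
    fields -> each field embedding scaled by its own weight (no biases)."""

    def __init__(self, num_fields, dim, hidden, seed=0):
        rng = random.Random(seed)
        self.W1 = rand_matrix(rng, hidden, num_fields * dim)
        self.W2 = rand_matrix(rng, num_fields, hidden)

    def field_weights(self, E):
        x = [v for e in E for v in e]        # concatenate all fields of the sample
        h = relu(linear(self.W1, x))         # hidden layer
        return softmax(linear(self.W2, h))   # one importance score per field

    def __call__(self, E):
        w = self.field_weights(E)
        return [[wi * v for v in e] for wi, e in zip(w, E)]


layer = InputAwareReweight(num_fields=3, dim=4, hidden=8)
E = [[0.1, 0.2, 0.3, 0.4], [0.5, -0.1, 0.0, 0.2], [-0.3, 0.3, 0.1, 0.0]]
print(layer.field_weights(E))  # weights sum to 1 (up to rounding)
print(layer(E))
```

Because the weights come from the whole sample, the same feature receives a different weight whenever the other features change.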

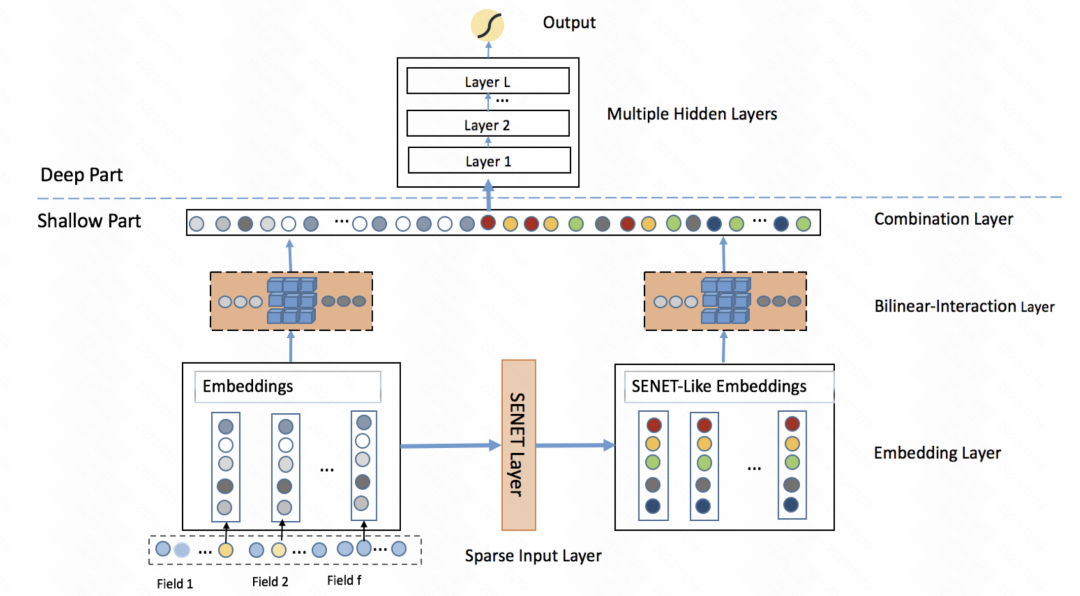

FiBiNET: Combining Feature Importance and Bilinear feature Interaction for Click-Through Rate Prediction (RecSys 2019) adopts a similar idea: the model learns a personalized weight for each feature in each sample. The process has three steps: squeeze, excitation, and re-weight. In the squeeze step, each feature's embedding vector is reduced to a scalar statistic by pooling. In the excitation step, these scalars are fed into a multilayer perceptron (MLP) to obtain the weight of each feature. Finally, the weights are multiplied by the corresponding feature embeddings to obtain the weighted embeddings, which amounts to filtering features by importance at the sample level.
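
The three steps map directly onto a small function. This is a sketch under stated assumptions: mean pooling for the squeeze, two bias-free fully connected layers with ReLU for the excitation, and a reduction ratio of 2; the class name `SENETLayer` is our own label here, not an API from any library.

```python
import random


def matvec(W, x):
    """W @ x with W given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relu(v):
    return [max(0.0, x) for x in v]


class SENETLayer:
    """Squeeze-excitation-reweight over field embeddings (FiBiNET-style sketch)."""

    def __init__(self, num_fields, reduction_ratio=2, seed=0):
        rng = random.Random(seed)
        hidden = max(1, num_fields // reduction_ratio)
        self.W1 = [[rng.gauss(0.0, 0.5) for _ in range(num_fields)] for _ in range(hidden)]
        self.W2 = [[rng.gauss(0.0, 0.5) for _ in range(hidden)] for _ in range(num_fields)]

    def field_weights(self, E):
        # Squeeze: mean-pool each field embedding into one scalar statistic.
        z = [sum(e) / len(e) for e in E]
        # Excitation: two fully connected layers with ReLU -> one weight per field.
        return relu(matvec(self.W2, relu(matvec(self.W1, z))))

    def __call__(self, E):
        # Re-weight: scale every field embedding by its learned weight.
        a = self.field_weights(E)
        return [[ai * v for v in e] for ai, e in zip(a, E)]


senet = SENETLayer(num_fields=4)
E = [[0.2, 0.4], [1.0, -0.5], [0.3, 0.3], [-0.1, 0.6]]
print(senet.field_weights(E))
print(senet(E))
```

Unlike the softmax weights in the previous sketch, these ReLU weights are not normalized, so a field can be switched off entirely (weight 0).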

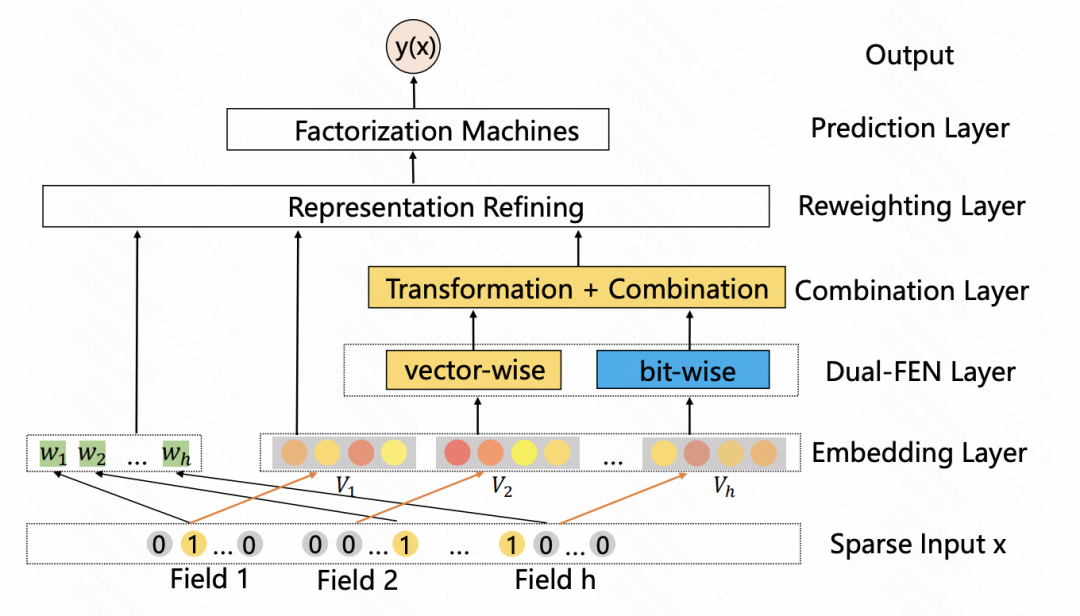

A Dual Input-aware Factorization Machine for CTR Prediction (IJCAI 2020) follows the same reweighting idea as the methods above, but uses self-attention to enhance features. It consists of two modules: vector-wise and bit-wise. The vector-wise module treats each feature's embedding as an element of a sequence and feeds the sequence into a Transformer to obtain fused feature representations; the bit-wise module maps the original features with a multi-layer MLP. The outputs of the two modules are added to obtain the weight of each feature, which is multiplied by every bit of the corresponding original feature embedding to obtain the enhanced feature.
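
A simplified sketch of the dual design. To stay short it replaces the Transformer with a single-head scaled dot-product self-attention that uses the embeddings directly as queries, keys, and values (no learned projections, no residual connection), and uses one hidden layer in the bit-wise MLP. These simplifications and all names (`DualInputAwareReweight`, `P_vec`, `W_bit1`, `W_bit2`) are assumptions, not the paper's exact architecture.

```python
import math
import random


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relu(v):
    return [max(0.0, x) for x in v]


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [v / total for v in exps]


def flatten(M):
    return [v for row in M for v in row]


def rand_matrix(rng, rows, cols, std=0.1):
    return [[rng.gauss(0.0, std) for _ in range(cols)] for _ in range(rows)]


def self_attention(E):
    """Single-head scaled dot-product self-attention over fields (Q = K = V = E)."""
    d = len(E[0])
    out = []
    for q in E:
        alpha = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in E])
        out.append([sum(a * v[t] for a, v in zip(alpha, E)) for t in range(d)])
    return out


class DualInputAwareReweight:
    """DIFM-style sketch: vector-wise (attention) and bit-wise (MLP) scores are
    added into one weight per field, which scales every bit of that field."""

    def __init__(self, num_fields, dim, hidden, seed=0):
        rng = random.Random(seed)
        n_in = num_fields * dim
        self.P_vec = rand_matrix(rng, num_fields, n_in)     # vector-wise head
        self.W_bit1 = rand_matrix(rng, hidden, n_in)        # bit-wise MLP, layer 1
        self.W_bit2 = rand_matrix(rng, num_fields, hidden)  # bit-wise MLP, layer 2

    def field_weights(self, E):
        m_vec = matvec(self.P_vec, flatten(self_attention(E)))
        m_bit = matvec(self.W_bit2, relu(matvec(self.W_bit1, flatten(E))))
        return [a + b for a, b in zip(m_vec, m_bit)]

    def __call__(self, E):
        m = self.field_weights(E)
        return [[mi * v for v in e] for mi, e in zip(m, E)]


difm = DualInputAwareReweight(num_fields=3, dim=2, hidden=4)
print(difm([[0.1, 0.2], [0.5, -0.3], [0.0, 0.4]]))
```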

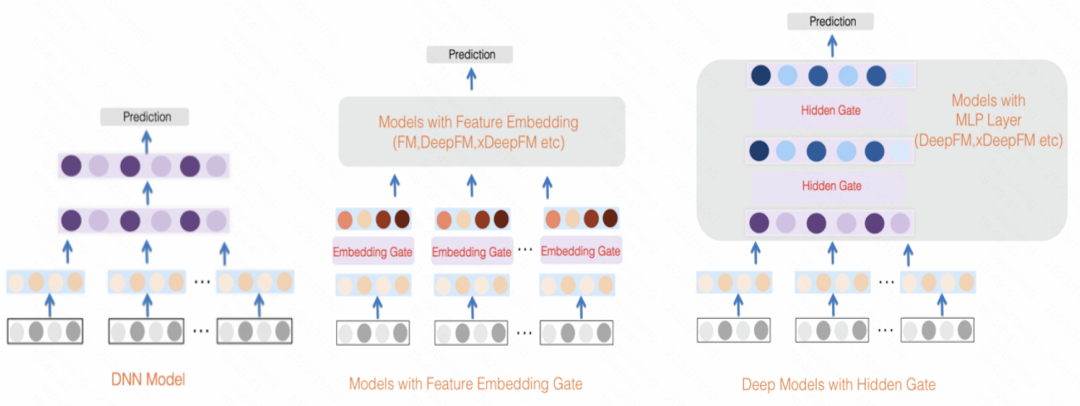

GateNet: Gating-Enhanced Deep Network for Click-Through Rate Prediction (2020) passes the initial embedding vector of each feature through an MLP and a sigmoid function to generate an independent weight score for that feature, while using an MLP to map all features to bit-wise weight scores; the two are combined to weight the input features. Beyond the feature layer, the same gating method is applied in the hidden layers of the MLP to weight the input of each hidden layer.
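
A sketch of the two gate types, under assumptions: each field has its own (field-private) bias-free linear map followed by a sigmoid, producing either one score per field (vector-wise) or one score per bit (bit-wise), plus a hidden-layer gate of the form h * sigmoid(W h). It shows the gates separately and does not reproduce how the paper combines the scores; the names `EmbeddingGate` and `hidden_gate` are hypothetical.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


class EmbeddingGate:
    """GateNet-style embedding gate sketch with field-private parameters.
    vector-wise: one sigmoid score per field; bit-wise: one score per bit."""

    def __init__(self, num_fields, dim, bit_wise=False, seed=0):
        rng = random.Random(seed)
        out = dim if bit_wise else 1
        self.W = [[[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(out)]
                  for _ in range(num_fields)]

    def __call__(self, E):
        refined = []
        for W_i, e in zip(self.W, E):
            # Gate computed from the field's own embedding.
            g = [sigmoid(s) for s in matvec(W_i, e)]
            if len(g) == 1:
                g = g * len(e)  # broadcast the vector-wise score to every bit
            refined.append([gi * v for gi, v in zip(g, e)])
        return refined


def hidden_gate(W_h, h):
    """Hidden-layer gate: h' = h * sigmoid(W_h @ h), element-wise."""
    return [hi * sigmoid(s) for hi, s in zip(h, matvec(W_h, h))]


E = [[0.3, -0.1, 0.2], [0.5, 0.5, -0.4]]
print(EmbeddingGate(num_fields=2, dim=3)(E))                 # vector-wise
print(EmbeddingGate(num_fields=2, dim=3, bit_wise=True)(E))  # bit-wise
```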

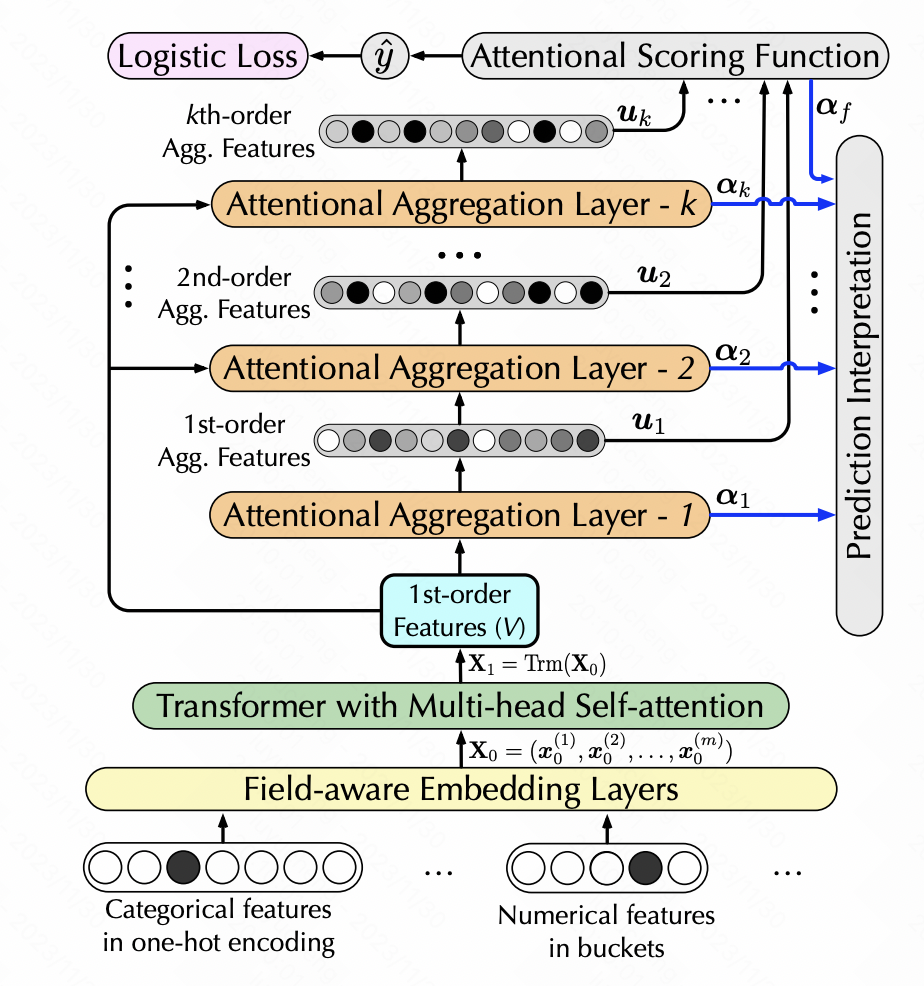

Interpretable Click-Through Rate Prediction through Hierarchical Attention (WSDM 2020) also uses self-attention to transform features, and additionally generates high-order features. It uses hierarchical attention: each attention layer takes the output of the previous layer as input and raises the order of the feature combinations by one, achieving hierarchical, multi-order feature extraction. Specifically, after each layer's attention step, the resulting feature matrix is passed through softmax to obtain a weight for each feature. The features are aggregated with these weights, and the aggregated vector is multiplied element-wise with the original features, adding one order of feature interaction.
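
A sketch of the hierarchical aggregation loop. Simplifications (assumptions, not the paper's exact formulation): attention scores are plain dot products with one hypothetical query vector per layer, and the initial Transformer step that produces the first-order features is omitted, so the input X1 stands in for its output.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [v / total for v in exps]


def attention_pool(X, query):
    """Softmax-weighted sum of the rows of X; scores are dot products with a query."""
    alpha = softmax([sum(q * x for q, x in zip(query, row)) for row in X])
    return [sum(a * row[t] for a, row in zip(alpha, X)) for t in range(len(X[0]))]


def hierarchical_attention(X1, queries):
    """InterHAt-style sketch. Each layer pools the current feature matrix into u,
    then multiplies u element-wise with the ORIGINAL features X1, so the k-th
    pooled vector summarizes k-th order interactions. Returns all pooled vectors."""
    X = X1
    pooled = []
    for query in queries:  # one (hypothetical) learned query per layer
        u = attention_pool(X, query)
        pooled.append(u)
        X = [[ut * xt for ut, xt in zip(u, row)] for row in X1]
    return pooled


X1 = [[1.0, 2.0], [3.0, 4.0]]
# Zero queries give uniform attention, so each layer simply averages.
print(hierarchical_attention(X1, [[0.0, 0.0], [0.0, 0.0]]))  # → [[2.0, 3.0], [4.0, 9.0]]
```

With zero queries, the second layer averages [[2, 6], [6, 12]], the element-wise products of the first pooled vector with each original feature, which is where the second-order terms come from.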

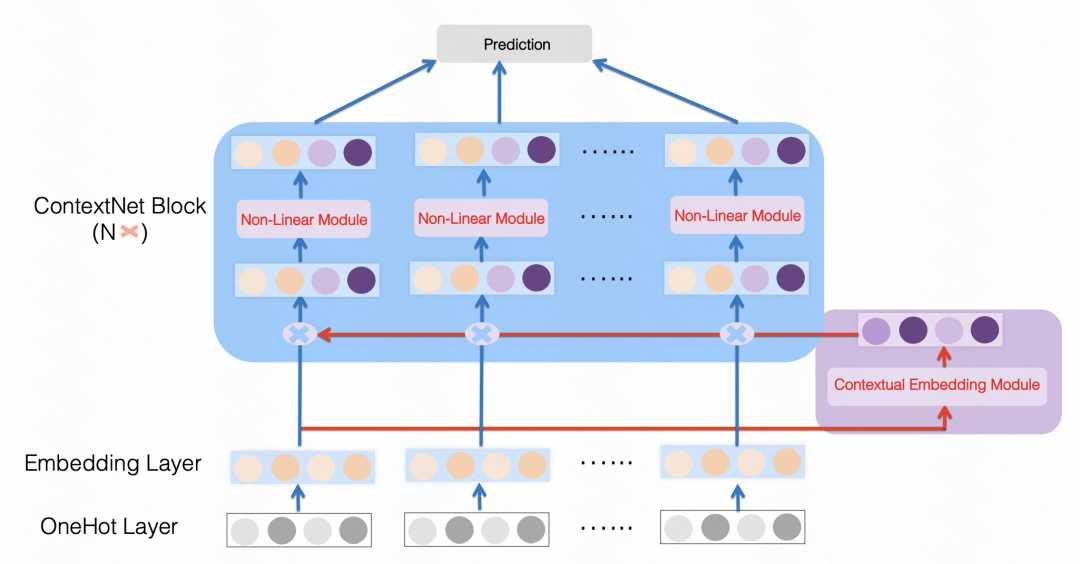

ContextNet: A Click-Through Rate Prediction Framework Using Contextual information to Refine Feature Embedding (2021) takes a similar approach: an MLP maps all features to a vector of the embedding size for each feature, which is used to scale that feature's original embedding. The paper uses separate, personalized MLP parameters for each feature. In this way, each feature is enhanced using the other features in the sample as context.
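
A sketch of the context-based scaling, assuming a shared aggregation layer (bias-free linear + ReLU over all embeddings) followed by a field-specific linear projection to the embedding size. The paper's full block may include additional layers that are not reproduced here, and the class name `ContextEmbeddingScaling` is hypothetical.

```python
import random


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relu(v):
    return [max(0.0, x) for x in v]


class ContextEmbeddingScaling:
    """ContextNet-style sketch: a shared aggregation layer summarizes the whole
    sample, then a field-specific projection turns that summary into a
    d-dimensional context vector that scales the field's embedding."""

    def __init__(self, num_fields, dim, hidden, seed=0):
        rng = random.Random(seed)

        def rand(rows, cols):
            return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.W_agg = rand(hidden, num_fields * dim)              # shared by all fields
        self.P = [rand(dim, hidden) for _ in range(num_fields)]  # one per field

    def __call__(self, E):
        x = [v for e in E for v in e]  # the whole sample serves as context
        summary = relu(matvec(self.W_agg, x))
        return [[c * v for c, v in zip(matvec(P_i, summary), e)]
                for P_i, e in zip(self.P, E)]


ctx_layer = ContextEmbeddingScaling(num_fields=3, dim=2, hidden=4)
print(ctx_layer([[0.1, 0.2], [0.5, -0.3], [0.0, 0.4]]))
```

Giving each field its own projection is what lets the same sample context scale different features in different directions.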

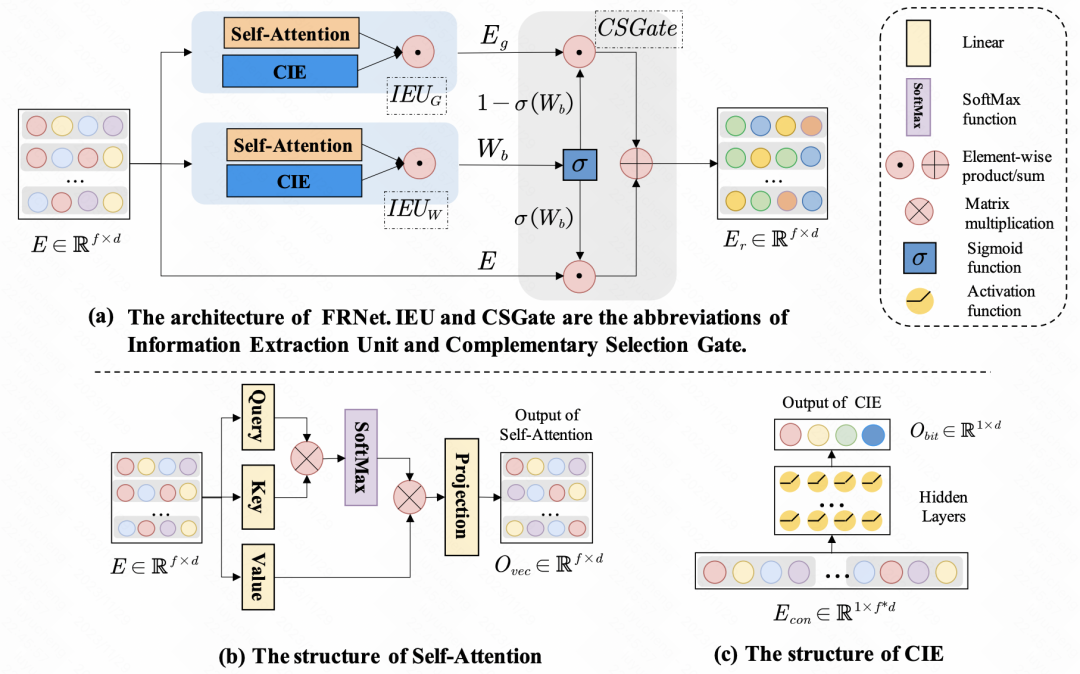

Enhancing CTR Prediction with Context-Aware Feature Representation Learning (SIGIR 2022), which proposes FRNet, uses self-attention for feature enhancement. Within a set of input features, each feature influences the others to a different degree, so self-attention is applied over the feature embeddings to let the features of a sample exchange information. In addition to this feature-level interaction, the paper uses an MLP for bit-level information interaction. The new embeddings generated above are merged with the original embeddings through a gating network to obtain the final refined feature representation.
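
A sketch of the three ingredients (feature-level self-attention, a bit-level MLP, and a gate that mixes new and original embeddings as e_r = g * e_ctx + (1 - g) * e). Assumptions: single-head attention with Q = K = V = E, one bias-free ReLU layer for the MLP, an element-wise product to combine the attention and MLP outputs, and a bias-free sigmoid gate over all bits; the paper's exact layer shapes and combination may differ, and the name `GatedContextRefiner` is hypothetical.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def relu(v):
    return [max(0.0, x) for x in v]


def matvec(W, x):
    """W @ x with W given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [v / total for v in exps]


def self_attention(E):
    """Single-head scaled dot-product attention over fields (Q = K = V = E)."""
    d = len(E[0])
    out = []
    for q in E:
        alpha = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in E])
        out.append([sum(a * v[t] for a, v in zip(alpha, E)) for t in range(d)])
    return out


class GatedContextRefiner:
    """FRNet-style sketch: e_r = g * e_ctx + (1 - g) * e, with a bit-wise gate g."""

    def __init__(self, num_fields, dim, seed=0):
        rng = random.Random(seed)
        n = num_fields * dim
        self.dim = dim
        self.W_mlp = [[rng.gauss(0.0, 0.1) for _ in range(n)] for _ in range(n)]
        self.W_gate = [[rng.gauss(0.0, 0.1) for _ in range(n)] for _ in range(n)]

    def __call__(self, E):
        flat = [v for e in E for v in e]
        # Feature-level interaction: every field attends to all fields of the sample.
        att = [v for row in self_attention(E) for v in row]
        # Bit-level interaction: one MLP layer over all bits of the sample.
        bits = relu(matvec(self.W_mlp, flat))
        # Context-aware embedding (element-wise product is an assumption here).
        ctx = [a * b for a, b in zip(att, bits)]
        # Gate network: per-bit weight in (0, 1) mixing new and original values.
        gate = [sigmoid(s) for s in matvec(self.W_gate, flat)]
        refined = [g * c + (1.0 - g) * x for g, c, x in zip(gate, ctx, flat)]
        d = self.dim
        return [refined[i:i + d] for i in range(0, len(refined), d)]


refiner = GatedContextRefiner(num_fields=3, dim=2)
print(refiner([[0.1, 0.2], [0.5, -0.3], [0.0, 0.4]]))
```

The gate lets the model fall back to the original embedding (g close to 0) when the context adds little, instead of always overwriting it.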

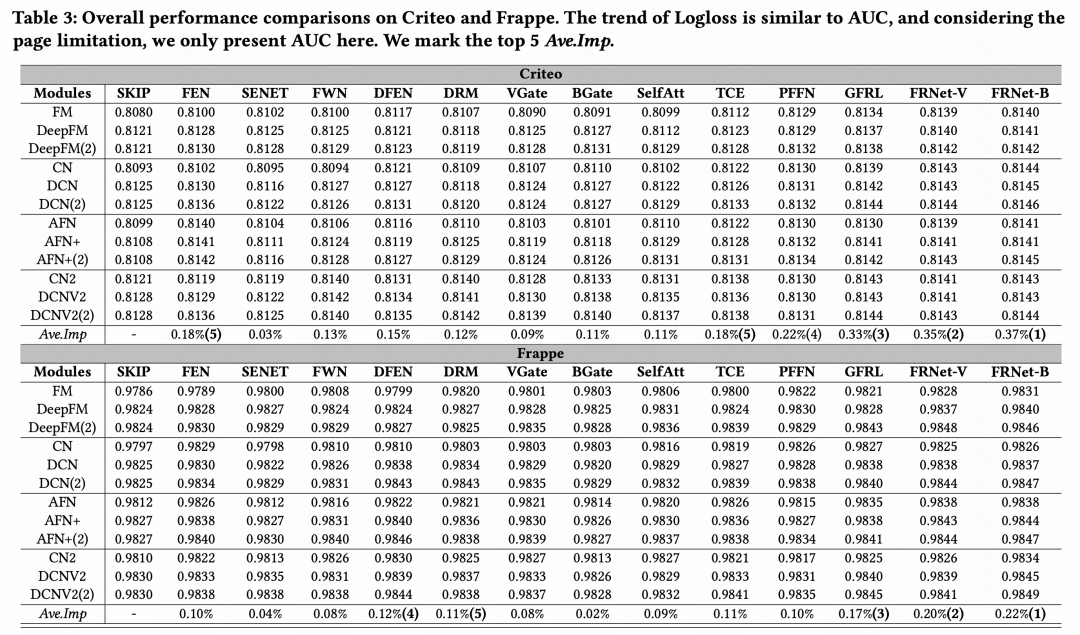

3. Experimental results

After comparing the effectiveness of the various feature enhancement methods, the paper reaches an overall conclusion: among the many feature enhancement modules, GFRL, FRNet-V, and FRNet-B perform best, outperforming the other feature enhancement methods.
