Open source large models must surpass closed source - LeCun reveals 2024 AI trend chart

2023 is coming to an end. Over the past year, a wide range of large models has been released. While technology giants such as OpenAI and Google compete, another force is quietly rising: open source.

Open source models have always faced skepticism. Are they as good as proprietary models? Can they match their performance? So far, the honest answer has been that they come only somewhat close. Even so, open source models keep delivering impressive empirical results.

The rise of open source models is changing the rules of the game. Meta's LLaMA series, for example, is gaining popularity for its rapid iteration, customizability, and privacy. The community is developing these models quickly, which poses a strong challenge to proprietary models and could change the competitive landscape of large technology companies.

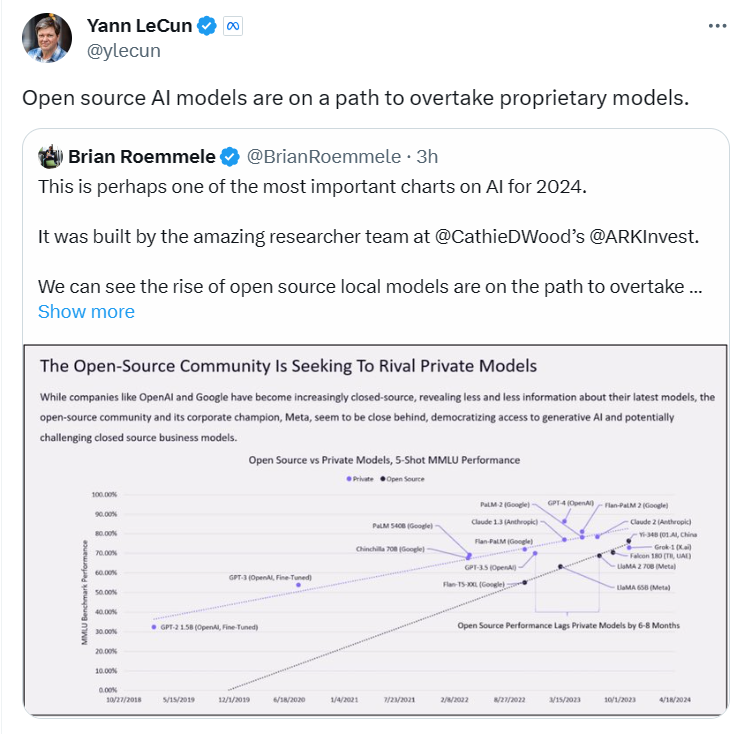

Until now, though, most such views rested on intuition. This morning, Meta chief AI scientist and Turing Award winner Yann LeCun remarked: "Open source artificial intelligence models are on the road to surpassing proprietary models."

The trend chart, produced by the ARK Invest team, is seen as a possible forecast of how artificial intelligence will develop in 2024. It plots the progress of open source models against proprietary models in generative artificial intelligence.

As companies like OpenAI and Google become more closed, they publish information about their latest models less and less often. As a result, the open source community and its corporate backer Meta are stepping in to democratize generative AI, which may challenge the business model built on proprietary models.

The scatter plot shows the performance of various AI models as percentages. Proprietary models are shown in blue and open source models in black. It shows how models such as GPT-3, Chinchilla 70B (Google), PaLM (Google), GPT-4 (OpenAI), and Llama 65B (Meta) performed at different points in time.

When Meta first released LLaMA, the models ranged from 7 billion to 65 billion parameters. They performed very well: the 13-billion-parameter model can outperform GPT-3 (175 billion parameters) "on most benchmarks" and can run on a single V100 GPU, while the largest, 65-billion-parameter model is comparable to Google's Chinchilla-70B and PaLM-540B.

Falcon-40B shot to the top of Hugging Face's Open LLM Leaderboard as soon as it was released, ending Llama's run as the standout open source model.

The open source release of Llama 2 once again reshaped the large model landscape. Compared with Llama 1, Llama 2 was trained on 40% more data, doubles the context length, and adopts a grouped-query attention mechanism.
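
To make the grouped-query attention idea concrete, here is a minimal pure-Python sketch. It is not Meta's implementation. In grouped-query attention, several query heads share a single key/value head, so fewer keys and values need to be cached during inference. The function name, the nested-list layout, and the toy inputs are all assumptions made for illustration; the causal mask is included because Llama models are decoder-only.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def grouped_query_attention(q, k, v):
    """Causal grouped-query attention (illustrative, hypothetical helper).

    q:    [n_q_heads][seq_len][d]
    k, v: [n_kv_heads][seq_len][d], with n_q_heads a multiple of n_kv_heads
    """
    n_q_heads, n_kv_heads = len(q), len(k)
    assert n_q_heads % n_kv_heads == 0, "query heads must split evenly into groups"
    group = n_q_heads // n_kv_heads
    d = len(q[0][0])
    out = []
    for h in range(n_q_heads):
        kv = h // group  # `group` consecutive query heads share K/V head `kv`
        head_out = []
        for t, q_t in enumerate(q[h]):
            # Causal mask: position t only attends to positions 0..t.
            scores = [
                sum(a * b for a, b in zip(q_t, k[kv][s])) / math.sqrt(d)
                for s in range(t + 1)
            ]
            weights = softmax(scores)
            head_out.append([
                sum(weights[s] * v[kv][s][i] for s in range(t + 1))
                for i in range(d)
            ])
        out.append(head_out)
    return out

# Toy input: 4 query heads, 2 key/value heads, 3 positions, head size 4.
q = [[[0.1 * (h + t + i) for i in range(4)] for t in range(3)] for h in range(4)]
k = [[[0.1 * (h - t + i) for i in range(4)] for t in range(3)] for h in range(2)]
v = [[[float(10 * h + t) for _ in range(4)] for t in range(3)] for h in range(2)]
out = grouped_query_attention(q, k, v)
print(len(out), len(out[0]), len(out[0][0]))
```

With one key/value head per query head this reduces to ordinary multi-head attention, and with a single key/value head it becomes multi-query attention; grouped-query attention sits between the two.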

More recently, the open source large model universe gained a new heavyweight member: the Yi model. It can process 400,000 Chinese characters at a time and has topped both Chinese and English leaderboards. Yi-34B is also, so far, the only Chinese-developed model to reach the top of the Hugging Face open source model rankings.

According to the scatter plot, the performance of open source models continues to catch up with that of proprietary models. This suggests that in the near future open source models could match, or even surpass, proprietary models. The chart drew high praise from researchers, who stated that "the closed-source large model has come to an end."

Some netizens are already hoping that 2024 will become "the Year of Open Source Artificial Intelligence." One of them believes that "we are approaching a tipping point. Considering the current development speed of open source community projects, we expect to reach the level of GPT-4 within the next 12 months."

It remains to be seen whether the road ahead for open source models will be smooth and what kind of performance they will deliver.
