DeepMind paper published in Nature: a large model finds new solutions to a problem that has troubled mathematicians for decades

Large language models (LLMs), this year's most prominent technology in artificial intelligence, are good at combining concepts and can help people solve problems through reading, comprehension, writing and coding. But can they discover genuinely new knowledge?

LLMs are known to suffer from "hallucination", that is, generating information that is inconsistent with the facts. Using them to make verifiably correct discoveries is therefore a challenging task.

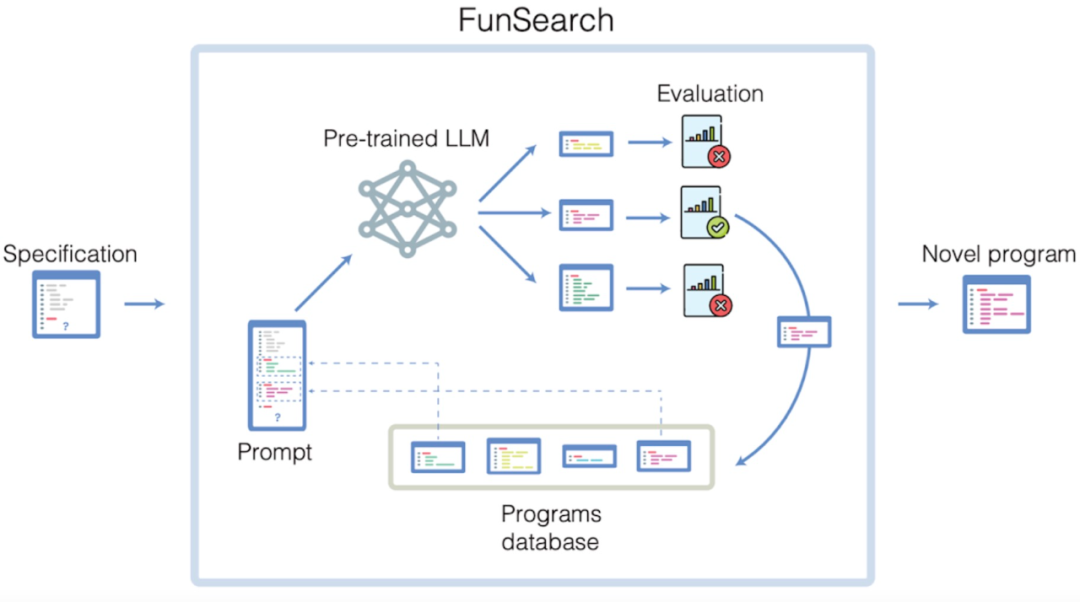

Now, a research team at Google DeepMind has proposed FunSearch, a new method for searching for solutions to problems in mathematics and computer science. FunSearch pairs a pre-trained LLM, which proposes creative solutions in the form of computer code, with an automated "evaluator" that guards against hallucinations and incorrect ideas. By iterating back and forth between these two components, initial solutions evolve into "new knowledge." The paper describing this work was published in the journal Nature.

Paper: https://www.nature.com/articles/s41586-023-06924-6

According to the team, this is the first time an LLM has been used to make new discoveries on challenging open problems in science or mathematics.

FunSearch found new solutions to the cap set problem, a long-standing open problem in mathematics. DeepMind also used it to discover more efficient algorithms for the "bin packing" problem, which has wide-ranging applications such as making data centers more efficient. This demonstrates FunSearch's practical value.

The research team believes that FunSearch will become a particularly powerful scientific tool, because the programs it outputs reveal how its solutions are constructed, not just what the solutions are. This should inspire further insights from scientists, creating a virtuous cycle of scientific improvement and discovery.

Driving discovery through evolution with language models

FunSearch uses an LLM-powered evolutionary algorithm that identifies and builds on the highest-scoring ideas. These ideas are expressed as computer programs so that they can be run and evaluated automatically.

First, the user writes a description of the problem in the form of code. This description includes a procedure for evaluating programs and a seed program used to initialize the program pool.
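As an illustration, here is what such a specification might look like for the cap set problem discussed later in this article. This is a minimal sketch under assumptions made here, not the specification from the paper: `priority` is the seed program (it scores every point equally), `solve` is a fixed greedy skeleton that tries points in order of decreasing priority, and `evaluate` reports the size of the resulting cap set as the score. All names are hypothetical.

```python
import itertools
from typing import List, Tuple

Point = Tuple[int, ...]


def priority(point: Point, n: int) -> float:
    """Seed program: every point gets the same score.

    In a FunSearch-style search, this is the function the LLM would rewrite.
    """
    return 0.0


def is_collinear(a: Point, b: Point, c: Point) -> bool:
    """Distinct points of F_3^n lie on one line iff a + b + c == 0 (mod 3)."""
    return all((x + y + z) % 3 == 0 for x, y, z in zip(a, b, c))


def solve(n: int) -> List[Point]:
    """Fixed skeleton: greedily add points in order of decreasing priority,
    skipping any point that would complete a line with two chosen points."""
    points = sorted(itertools.product(range(3), repeat=n),
                    key=lambda p: priority(p, n), reverse=True)
    cap: List[Point] = []
    for p in points:
        if not any(is_collinear(a, b, p) for a, b in itertools.combinations(cap, 2)):
            cap.append(p)
    return cap


def evaluate(n: int) -> int:
    """Score assigned to the current `priority`: size of the cap set it yields."""
    return len(solve(n))
```

Only `priority` would change during the search; keeping `solve` and `evaluate` fixed is what lets every candidate be scored automatically and compared fairly.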

FunSearch is an iterative process. In each iteration, the system selects some programs from the current program pool and passes them to the LLM. The LLM builds on them to generate new programs, which are then evaluated automatically. The best ones are added back to the pool, creating a self-improving cycle. FunSearch uses Google's PaLM 2, but it is compatible with other LLMs trained on code.
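The loop can be sketched as follows. To keep the sketch runnable without a model, the LLM is replaced by a hypothetical `propose` function that randomly perturbs the best sampled parent, and a "program" is just a pair of numbers scored by a toy `evaluate`. Only the overall structure (sample parents, generate a child, evaluate it, keep the best) mirrors the description above; the pool size, number of parents and mutation rule are assumptions.

```python
import random
from typing import List, Optional, Tuple

# Toy stand-in for the text of a program: just two numeric "ideas".
Program = Tuple[float, float]
Scored = Tuple[float, Program]


def evaluate(program: Program) -> Optional[float]:
    """Hypothetical evaluator: higher is better; None means the program is rejected."""
    a, b = program
    if abs(a) > 10 or abs(b) > 10:  # e.g. a program that crashes or times out
        return None
    return -((a - 3.0) ** 2 + (b + 1.0) ** 2)


def propose(parents: List[Scored], rng: random.Random) -> Program:
    """Stand-in for the LLM: randomly perturb the best of the sampled parents."""
    _, best = max(parents, key=lambda sp: sp[0])
    return (best[0] + rng.gauss(0.0, 0.5), best[1] + rng.gauss(0.0, 0.5))


def evolve(seed: Program, iterations: int = 500, pool_size: int = 20,
           k: int = 2, rng_seed: int = 0) -> Scored:
    rng = random.Random(rng_seed)
    seed_score = evaluate(seed)
    if seed_score is None:
        raise ValueError("the seed program must pass evaluation")
    pool: List[Scored] = [(seed_score, seed)]
    for _ in range(iterations):
        parents = rng.sample(pool, min(k, len(pool)))  # pick programs from the pool
        child = propose(parents, rng)                  # "LLM" writes a new program
        score = evaluate(child)                        # evaluator checks it
        if score is None:
            continue                                   # discard invalid programs
        pool.append((score, child))
        pool.sort(key=lambda sp: sp[0], reverse=True)
        del pool[pool_size:]                           # keep only the best programs
    return pool[0]
```

Calling `evolve((0.0, 0.0))` returns the highest-scoring (score, program) pair found. In the real system the expensive step is the LLM call, which is one reason the evaluator must cheaply reject bad candidates.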

The LLM is given the best programs retrieved from the program database and asked to generate a better one.
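A minimal sketch of how such a prompt could be assembled, loosely modelled on the "best-shot" style described in the Nature paper: the selected programs are renamed `priority_v0`, `priority_v1`, ... in increasing order of score, and the prompt ends with the header of the next version for the LLM to complete. The exact format, the choice of the top `k` programs, and the function name `build_prompt` are assumptions made here.

```python
from typing import List, Tuple


def build_prompt(database: List[Tuple[float, str]], k: int = 2) -> str:
    """Hypothetical 'best-shot' prompt: the k highest-scoring programs, renamed
    priority_v0, priority_v1, ... in increasing order of score, followed by the
    header of the next version for the LLM to complete."""
    if not database:
        raise ValueError("the program database is empty")
    best = sorted(database, key=lambda sp: sp[0], reverse=True)[:k]
    best.reverse()  # lowest score first, so the strongest example comes last
    parts = [
        source.replace("def priority(", f"def priority_v{version}(", 1)
        for version, (_, source) in enumerate(best)
    ]
    nxt = len(best)
    parts.append(
        f"def priority_v{nxt}(point, n):\n"
        f'    """Improved version of priority_v{nxt - 1}."""\n'
    )
    return "\n".join(parts)
```

Ordering the examples from weaker to stronger invites the model to continue the trend rather than copy any single program.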

Discovering new mathematical knowledge and algorithms is notoriously hard and often beyond the reach of even the most advanced AI systems. To equip FunSearch for the task, the research team introduced several key components. Rather than starting from scratch, FunSearch begins the evolutionary process from common knowledge about the problem and focuses on finding the most critical ideas needed to make new discoveries.

In addition, FunSearch's evolutionary process uses a strategy that increases the diversity of ideas to avoid stagnation. Finally, to make the system more efficient, the evolutionary process runs in parallel.
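The article does not spell out the diversity strategy. The sketch below is modelled on the island approach described in the Nature paper, with details simplified: the program database is split into independent "islands" that can evolve in parallel, and periodically the weaker half of the islands is wiped and re-seeded from the stronger half. Only the reset step is shown; the function names and data layout are hypothetical.

```python
import random
from typing import List, Tuple

Program = str
Island = List[Tuple[float, Program]]  # (score, program) pairs


def best_score(island: Island) -> float:
    return max(score for score, _ in island)


def reset_weak_islands(islands: List[Island], rng: random.Random) -> None:
    """Hypothetical reset step of an island model (modifies `islands` in place).

    The half of the islands with the lowest best score are wiped and
    re-seeded with the best program of a randomly chosen surviving island,
    which restores diversity without throwing away good ideas.
    """
    order = sorted(range(len(islands)), key=lambda i: best_score(islands[i]))
    half = len(islands) // 2
    weak, strong = order[:half], order[half:]
    for i in weak:
        donor = islands[rng.choice(strong)]
        islands[i] = [max(donor, key=lambda sp: sp[0])]
```

Between resets, each island would run its own copy of the evolve-evaluate loop, so islands drift toward different ideas and can be processed by separate workers.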

Opening up new horizons in the field of mathematics

DeepMind said the first problem it tackled was the cap set problem, an open problem that has vexed mathematicians in multiple research areas for decades. The renowned mathematician Terence Tao once described it as his favorite open question. DeepMind collaborated with Jordan Ellenberg, a professor of mathematics at the University of Wisconsin-Madison who made an important breakthrough on the cap set problem.

The problem is to find the largest set of points (called a "cap set") in a high-dimensional grid such that no three of its points lie on a line. The problem matters because it serves as a model for other problems in extremal combinatorics, the study of how large or small a collection of numbers, graphs or other objects can be. Brute-force search cannot solve it: the number of possibilities to consider quickly exceeds the number of atoms in the universe.
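For reference, the standard formalization takes the grid to be $\mathbb{F}_3^n$, the vectors of length $n$ with entries in $\{0,1,2\}$ and arithmetic mod 3. The derivation below shows why "no three points on a line" becomes a simple arithmetic test, and puts a number on the brute-force claim. The choice $n = 8$ is only an example, and $10^{80}$ is the commonly quoted rough estimate for the number of atoms in the observable universe.

```latex
\begin{align*}
  &\text{line through } x \text{ with direction } d \neq 0: && \{x,\ x+d,\ x+2d\} \subset \mathbb{F}_3^n,\\
  &\text{sum of its points:} && x + (x+d) + (x+2d) = 3x + 3d \equiv 0 \pmod 3,\\
  &\text{conversely, if } a+b+c \equiv 0,\ a \neq b: && c \equiv -(a+b) \equiv a + 2(b-a),\\
  &\text{so for distinct } a,b,c: && a,b,c \text{ collinear} \iff a+b+c \equiv 0 \pmod 3,\\
  &\text{subsets to try by brute force:} && 2^{3^n};\quad n=8:\ 2^{6561} = 10^{6561\log_{10}2} \approx 10^{1975} \gg 10^{80}.
\end{align*}
```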

The programs generated by FunSearch found, in some settings, the largest cap sets ever discovered. This represents the largest increase in cap set size in the past 20 years. Moreover, FunSearch outperformed state-of-the-art computational solvers, because this problem scales far beyond their current capabilities.

The figure shows the evolution from the seed program (top) to a new high-scoring function (bottom). Each circle represents a program, and its size is proportional to the score assigned to it. Only the ancestors of the program at the bottom are shown. The function FunSearch generated for each node is shown on the right.

These results show that FunSearch can take us beyond established results on hard combinatorial problems, where intuition is difficult to build. DeepMind expects the approach to contribute to new discoveries on similar theoretical problems in combinatorics and, in the future, to open up new possibilities in fields such as communication theory.

FunSearch prefers concise, human-understandable programs

Discovering new mathematical knowledge is significant in itself, but the FunSearch approach offers further advantages over traditional computer search techniques. FunSearch is not a black box that merely outputs solutions; instead, it generates programs that describe how those solutions were reached. This kind of "show-your-working" is how scientists usually operate, explaining new discoveries or phenomena by describing the processes that give rise to them.

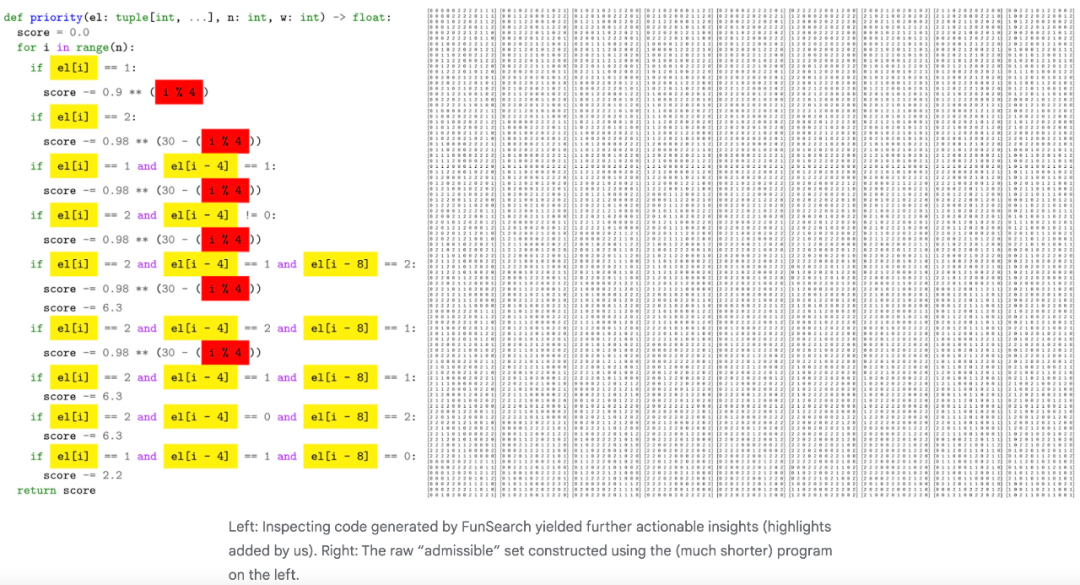

FunSearch favors solutions represented by highly compact programs, that is, solutions with low Kolmogorov complexity. The Kolmogorov complexity of an object is the length of the shortest computer program that outputs it. Because short programs can describe very large objects, FunSearch can scale to very complex problems. Compact programs also make FunSearch's output easier for researchers to understand. Ellenberg said: "FunSearch offers a completely new mechanism for developing strategies of attack. The solutions generated by FunSearch are conceptually far richer than a mere list of numbers. When I study them, I learn something."
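To make the Kolmogorov-complexity point concrete, here is a classic textbook construction, not one of FunSearch's outputs: the vectors with entries in {0, 1} form a cap set in the grid. A few lines of code describe a set of 2**n points whose explicit listing grows exponentially with n. Note that 2**n is far smaller than the best-known cap sets, so this only illustrates compactness; the function name is hypothetical.

```python
import itertools
from typing import List, Tuple


def binary_cap_set(n: int) -> List[Tuple[int, ...]]:
    """All vectors in {0, 1}^n, viewed as points of F_3^n.

    Three distinct such vectors never sum to 0 mod 3 in every coordinate:
    a coordinate sum of 0 or 3 forces the three entries to be equal, and if
    that held in every coordinate the vectors would coincide. So this short
    function describes a cap set with 2 ** n points.
    """
    return list(itertools.product((0, 1), repeat=n))


if __name__ == "__main__":
    # The program text stays the same size; the object it describes explodes.
    for n in (4, 8, 12):
        explicit = repr(binary_cap_set(n))
        print(f"n={n}: {2 ** n} points, explicit listing is {len(explicit)} characters")
```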

More importantly, the interpretability of FunSearch's programs can give researchers actionable insights. For example, while using FunSearch, DeepMind noticed intriguing symmetries in the code of some of its high-scoring outputs. This gave DeepMind a new understanding of the problem, which it used to refine the problem given to FunSearch, leading to even better solutions. DeepMind sees this as an example of how humans and FunSearch can collaborate on many problems in mathematics.

Left: By inspecting the code generated by FunSearch, DeepMind gained further actionable insights (highlighted). Right: The raw "admissible" set constructed using the (shorter) program on the left.

Solving a well-known computational problem

Encouraged by its success on the theoretical cap set problem, DeepMind explored FunSearch's flexibility by applying it to an important practical challenge in computer science: bin packing. The bin packing problem asks how to pack items of different sizes into the smallest number of bins. It sits at the core of many real-world problems where costs must be minimized, from loading items into shipping containers to allocating compute jobs in data centers.

Online bin packing is typically tackled with heuristic rules based on human experience. However, devising a set of rules for each specific scenario (with differing sizes, timing or capacity) is very challenging. Although it is very different from the cap set problem, setting up FunSearch for this problem was easy. FunSearch produced automatically tailored programs that adapt to the specifics of the data and pack the same number of items into fewer bins than existing heuristics.
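In online bin packing, items arrive one at a time and each must be placed immediately. A minimal sketch, assuming the heuristic is expressed as a priority function that scores every open bin for the incoming item: the item goes into the highest-scoring bin that fits, or a new bin is opened. Best fit and first fit are shown as two such priority functions; a FunSearch-style search would evolve a function with the same signature. All names here are hypothetical.

```python
from typing import Callable, List, Tuple

# A priority function scores every open bin for the incoming item.
Priority = Callable[[int, List[int]], List[float]]


def best_fit(item: int, remaining: List[int]) -> List[float]:
    """Best fit: prefer the bin that would be left with the least free space."""
    return [float(item - r) for r in remaining]


def first_fit(item: int, remaining: List[int]) -> List[float]:
    """First fit: prefer the earliest-opened bin."""
    return [-float(i) for i in range(len(remaining))]


def pack_online(items: List[int], capacity: int,
                priority: Priority) -> Tuple[List[int], List[int]]:
    """Place each item as it arrives; return (free space per bin, bin of each item)."""
    remaining: List[int] = []
    assignment: List[int] = []
    for item in items:
        if item > capacity:
            raise ValueError(f"item {item} exceeds bin capacity {capacity}")
        scores = priority(item, remaining)
        feasible = [i for i, r in enumerate(remaining) if r >= item]
        if feasible:
            chosen = max(feasible, key=lambda i: scores[i])
            remaining[chosen] -= item
        else:
            remaining.append(capacity - item)  # no bin fits: open a new one
            chosen = len(remaining) - 1
        assignment.append(chosen)
    return remaining, assignment
```

For example, `pack_online([5, 7, 5, 2, 4, 2, 5, 1, 6], 10, best_fit)` uses five bins. A candidate heuristic would be scored by the number of bins it uses over a dataset of item sequences; the specific dataset and scoring details are assumptions here.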

Example of bin packing using an existing heuristic, best fit (left), and a heuristic discovered by FunSearch (right).

Complex combinatorial problems such as online bin packing can also be tackled with other AI methods, such as neural networks and reinforcement learning. These methods have proven effective too, but they can require significant resources to deploy. FunSearch, by contrast, outputs code that is easy to inspect and deploy, so its solutions could be applied to a variety of real-world industrial systems and deliver benefits quickly.

DeepMind: Using large models to address scientific challenges will become common practice

FunSearch shows that, when LLMs are safeguarded against hallucination, their power can be harnessed not only to produce new mathematical discoveries but also to reveal potential solutions to important real-world problems.

DeepMind believes that for many problems in science and industry, both long-standing and new, using LLM-driven methods to generate efficient and tailored algorithms will become common practice.

This is only the beginning. FunSearch will continue to improve as LLMs progress, and DeepMind says it will work to expand FunSearch's capabilities to tackle a range of pressing scientific and engineering challenges facing society.
