Researchers from Waabi AI, the University of Toronto, the University of Waterloo, and MIT recently presented LightSim, a lighting simulation platform for autonomous driving, at NeurIPS 2023. The method generates paired lighting training data from real data, addressing both the lack of such data and the performance loss that occurs when models are transferred between domains. LightSim uses neural radiance fields (NeRF) and physics-based deep networks to render driving videos, achieving lighting simulation of dynamic scenes on large-scale real data for the first time.

- Project website: https://waabi.ai/lightsim

- Paper link: https://openreview.net/pdf?id=mcx8IGneYw

Why is lighting simulation needed for autonomous driving?

Camera simulation is very important in robotics, especially for autonomous vehicles that must perceive outdoor scenes. However, existing camera perception systems perform poorly once they encounter outdoor lighting conditions that were not seen during training. Generating a rich dataset of outdoor lighting variations through camera simulation can improve the robustness of autonomous driving systems.

Common camera simulation methods are based on physics engines, which render a scene from configured 3D models and lighting conditions. However, the simulated results often lack diversity and are not realistic enough. Furthermore, because high-quality 3D models are in limited supply, the physically rendered results do not exactly match real-world scenes, so models trained on them generalize poorly to real data.

The other family of methods is data-driven. It uses neural rendering to reconstruct digital twins of the real world that replicate the data observed by the sensors. This approach scales scene creation more easily and improves realism, but existing techniques tend to bake the scene lighting into the 3D model, which hinders editing of the digital twin, such as changing lighting conditions or adding and removing objects.

In their NeurIPS 2023 paper, "Neural Lighting Simulation for Urban Scenes", the Waabi AI researchers present LightSim, a lighting simulation system that combines a physics engine with neural networks.

Compared with previous work, LightSim is:

1. Realistic: For the first time, it simulates lighting for large-scale dynamic outdoor scenes, and it models shadows, lighting effects between objects, and similar phenomena more accurately.
2. Controllable: It supports editing dynamic driving scenes (adding or removing objects, changing camera positions and parameters, changing lighting, generating safety-critical scenes, and so on) to produce more realistic and more consistent video, which improves system robustness to lighting changes and edge cases.
3. Scalable: It extends easily to more scenes and different datasets. A single data collection pass is enough to reconstruct a scene and run realistic, controllable simulation tests.

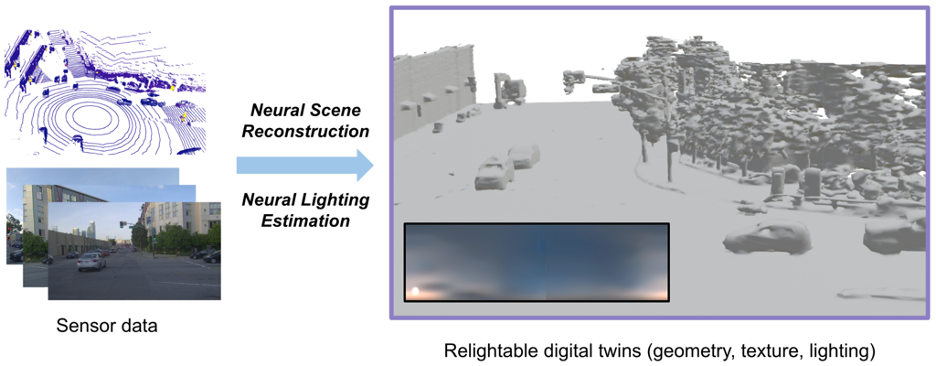

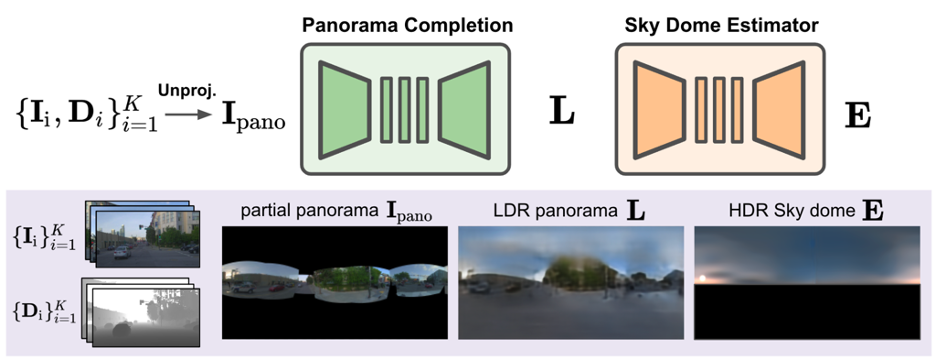

Building a simulation system

Step 1: Build a relightable digital twin of the real world

To reconstruct autonomous driving scenes in the digital world, LightSim first separates dynamic objects from the static scene in the collected data. This step uses UniSim to reconstruct the scene and removes the dependence on camera view direction in the network. Marching cubes is then used to extract the geometry, which is further converted into a mesh with basic materials.

In addition to materials and geometry, LightSim estimates outdoor lighting from the sun and the sky, the main light sources in outdoor daytime scenes, and obtains a high dynamic range environment map (HDR sky dome). Using the sensor data and the extracted geometry, LightSim estimates an incomplete panoramic image and then completes it to obtain a full 360° view of the sky. This panoramic image and GPS information are then used to generate an HDR environment map that accurately estimates sun intensity, sun direction, and sky appearance.
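As a rough illustration of how a sun direction relates to a sky dome, the sketch below converts an assumed sun azimuth and elevation into a unit vector and finds the pixel of an equirectangular environment map that faces it. This is not LightSim's estimation code: the function names, the east-north-up frame, and the layout in which column 0 faces north are all assumptions made for this example.

```python
import math


def sun_direction(azimuth_deg, elevation_deg):
    """Unit vector pointing toward the sun in an east-north-up frame.

    Assumed convention: azimuth is measured clockwise from north,
    elevation is measured upward from the horizon.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    east = math.cos(el) * math.sin(az)
    north = math.cos(el) * math.cos(az)
    up = math.sin(el)
    return (east, north, up)


def direction_to_pixel(direction, width, height):
    """Map a unit direction to (column, row) of an equirectangular sky dome.

    Assumed layout: column 0 faces north, columns increase clockwise,
    row 0 is the zenith and the last row is the nadir.
    """
    east, north, up = direction
    azimuth = math.atan2(east, north) % (2 * math.pi)
    elevation = math.asin(max(-1.0, min(1.0, up)))
    col = int(azimuth / (2 * math.pi) * width) % width
    row = int((0.5 - elevation / math.pi) * height)
    row = min(height - 1, max(0, row))
    return col, row


if __name__ == "__main__":
    d = sun_direction(azimuth_deg=100.0, elevation_deg=30.0)
    print(d, direction_to_pixel(d, width=8, height=4))
```

In a real pipeline the azimuth and elevation would come from the estimated sky dome or from time and GPS data; here they are simply passed in by hand.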

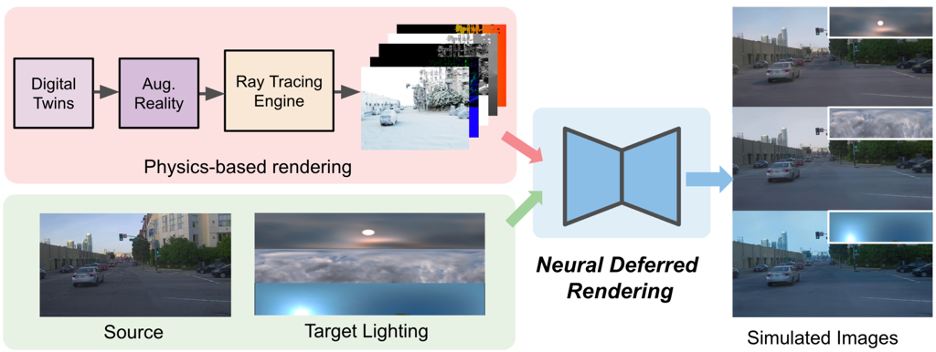

Step 2: Neural lighting simulation of dynamic urban scenes

Once the digital twin has been obtained, it can be further modified, for example by adding or removing objects, changing vehicle trajectories, or changing the lighting, to generate an augmented reality representation. LightSim then performs physically based rendering, generating lighting-related data such as base color, depth, normal vectors, and shadows for the modified scene. Using this lighting-related data and estimates of the scene's source and target lighting conditions, the LightSim workflow is as follows.

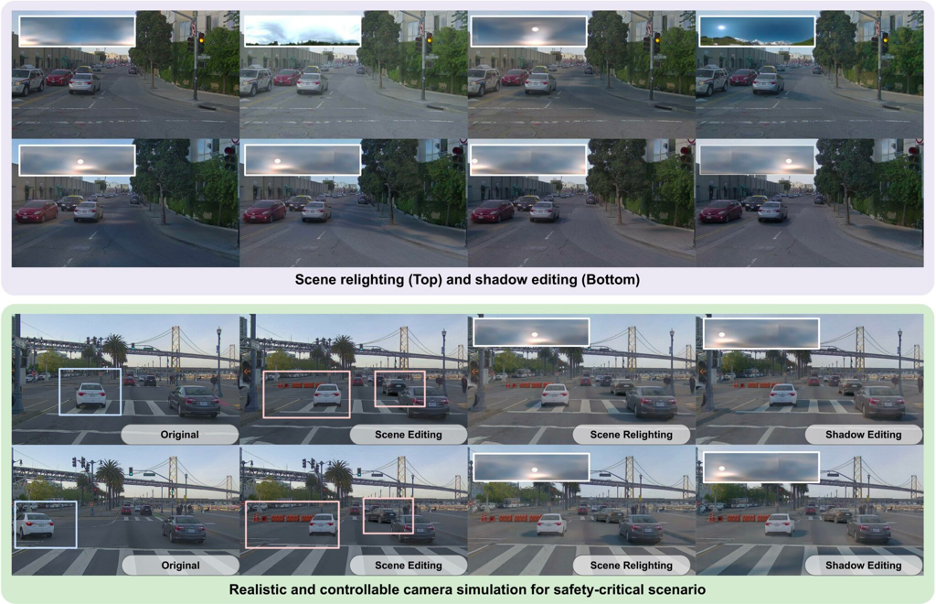

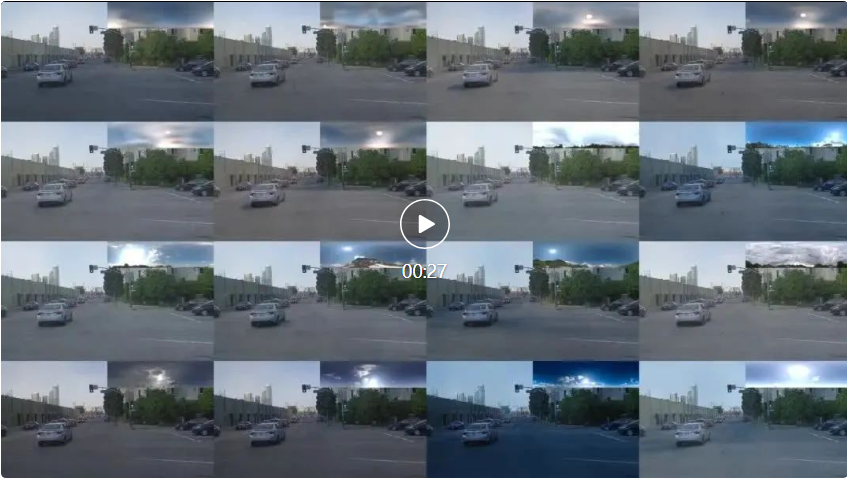
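To make the role of the lighting-related buffers in Step 2 more concrete, here is a minimal sketch that shades pixels from base color, normal, and shadow buffers under a given sun direction. It uses a plain Lambertian model with a constant sky term, which is a deliberate simplification and not LightSim's physically based renderer; all function and parameter names are hypothetical.

```python
def shade_pixel(base_color, normal, shadow, sun_dir, sun_intensity, sky_ambient):
    """Shade one pixel with a simple Lambertian model (an assumed simplification).

    base_color: (r, g, b) albedo from the base color buffer
    normal:     unit surface normal from the normal buffer
    shadow:     1.0 if the sun is visible from the pixel, 0.0 if it is shadowed
    sun_dir:    unit vector pointing toward the sun
    """
    n_dot_l = max(0.0, sum(n * l for n, l in zip(normal, sun_dir)))
    direct = shadow * sun_intensity * n_dot_l
    return tuple(c * (sky_ambient + direct) for c in base_color)


def render_buffers(base_colors, normals, shadows, sun_dir, sun_intensity, sky_ambient):
    """Apply shade_pixel to flat, per-pixel lists of buffer values."""
    return [
        shade_pixel(c, n, s, sun_dir, sun_intensity, sky_ambient)
        for c, n, s in zip(base_colors, normals, shadows)
    ]


if __name__ == "__main__":
    base_colors = [(0.5, 0.5, 0.5), (0.5, 0.5, 0.5)]
    normals = [(0.0, 0.0, 1.0), (0.0, 0.0, 1.0)]
    shadows = [1.0, 0.0]  # second pixel lies in shadow
    print(render_buffers(base_colors, normals, shadows, (0.0, 0.0, 1.0), 2.0, 0.2))
```

Rendering the same buffers once with the source lighting and once with the target lighting (with shadows recomputed for the new sun direction) gives the kind of paired lighting data the text describes, although the actual system relies on a full rendering engine rather than this toy model.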

Although physically based rendering reproduces the lighting effects in the scene well, imperfect geometry and errors in the material/lighting decomposition often make the results look unrealistic, with blurriness, implausible surface reflections, and boundary artifacts. The researchers therefore propose neural deferred rendering to enhance realism. They introduce an image synthesis network that takes the source image and precomputed buffers of lighting-related data from the rendering engine and generates the final image. The method also feeds the network an environment map to strengthen the lighting context, and it generates paired images from the digital twin, which provides a novel training scheme that pairs simulated and real data.

Simulation capabilities

Scene relighting

LightSim can render the same scene in a temporally consistent manner under new lighting conditions. As shown in the video, the new sun position and sky appearance change the scene's shadows and appearance.

LightSim can relight scenes in batches, generating new temporally consistent, 3D-aware lighting variations of the same scene from estimated and real HDR environment maps.

LightSim's lighting representation is editable: the direction of the sun can be changed, which updates the lighting and the shadows that depend on the sun's direction. LightSim generates the following video by rotating an HDR environment map and passing it to the neural deferred rendering module.
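Rotating an environment map about the vertical axis can be sketched as a circular shift of its columns, assuming the map is stored in equirectangular form. The snippet below is only an illustration under that assumption: the function name, the list-of-rows representation, and the sign convention for the rotation are hypothetical and not taken from LightSim.

```python
def rotate_environment_map(env_map, degrees):
    """Rotate an equirectangular map about the vertical axis.

    env_map is a list of rows, each row a list of pixel values. A rotation
    about the vertical axis corresponds to a circular shift of the columns.
    Assumed convention: positive angles move content toward higher columns.
    """
    width = len(env_map[0])
    shift = round(degrees / 360.0 * width) % width
    if shift == 0:
        return [list(row) for row in env_map]
    return [row[-shift:] + row[:-shift] for row in env_map]


if __name__ == "__main__":
    sky = [
        [9, 0, 0, 0],  # 9 marks the bright sun pixel
        [1, 1, 1, 1],
    ]
    for angle in (0, 90, 180, 270):
        print(angle, rotate_environment_map(sky, angle))
```

Sweeping the angle and feeding each rotated map to the renderer would move the sun around the scene while leaving the rest of the sky appearance unchanged.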

LightSim can also perform shadow editing in batches.

Lighting-aware actor insertion

In addition to modifying lighting, LightSim can insert unusual objects, such as construction obstacles, in a lighting-aware way. The inserted objects receive updated lighting and shadows, correctly occlude other objects, and remain spatially consistent across the entire camera configuration.
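The occlusion part of actor insertion can be illustrated with a per-pixel depth test: the inserted actor is drawn only where it is closer to the camera than the existing scene. This is a generic compositing sketch under assumed inputs (flat per-pixel lists, `None` where the actor does not cover a pixel), not a description of how LightSim implements insertion.

```python
def composite_actor(scene_rgb, scene_depth, actor_rgb, actor_depth):
    """Composite a rendered actor over a scene using a per-pixel depth test.

    All inputs are flat lists with one entry per pixel. actor_depth holds
    None where the actor does not cover the pixel (an assumed encoding).
    """
    out = []
    for s_rgb, s_d, a_rgb, a_d in zip(scene_rgb, scene_depth, actor_rgb, actor_depth):
        actor_visible = a_d is not None and a_d < s_d
        out.append(a_rgb if actor_visible else s_rgb)
    return out


if __name__ == "__main__":
    scene_rgb = ["road", "car", "sky"]
    scene_depth = [10.0, 4.0, 1000.0]
    actor_rgb = ["barrier", "barrier", None]
    actor_depth = [6.0, 6.0, None]  # barrier sits 6 m from the camera
    # The barrier hides the road but is itself hidden by the nearer car.
    print(composite_actor(scene_rgb, scene_depth, actor_rgb, actor_depth))
```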

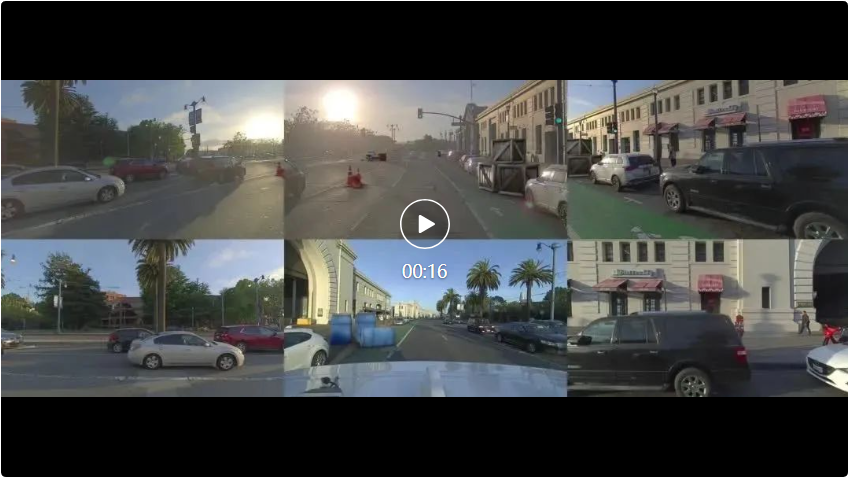

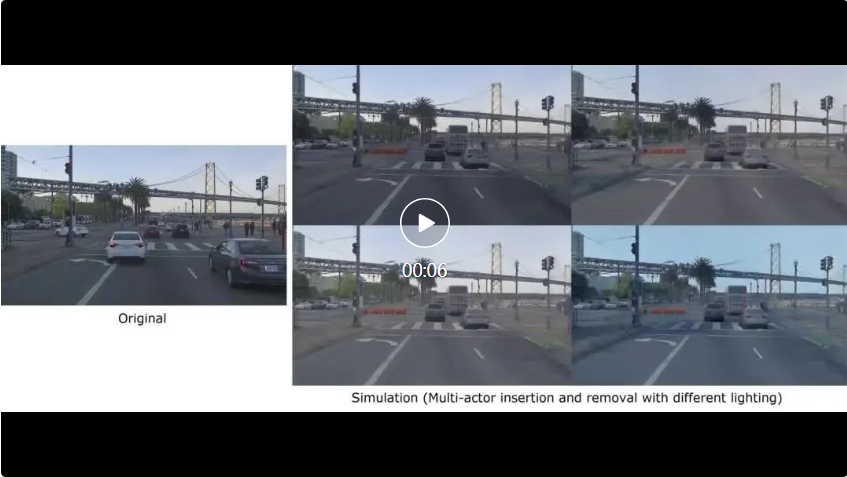

Generalization to nuScenes

Because LightSim's neural deferred rendering network is trained on multiple driving videos, LightSim can generalize to new scenes. The following video demonstrates LightSim's ability to generalize to driving scenes in nuScenes. LightSim builds a lighting-aware digital twin of each scene, which is then passed to a neural deferred rendering model pre-trained on PandaSet. LightSim transfers well and can relight the scenes relatively robustly.

Realistic and controllable camera simulation

Combining all the capabilities shown above, LightSim enables controllable, diverse, and realistic camera simulation. The following video demonstrates LightSim's scene simulation capabilities. In the video, a white car makes an emergency lane change into the lane of the self-driving vehicle (SDV); a new road obstacle is inserted, which causes the white car to cut in and creates a brand-new scene. The video shows the results LightSim generates for this new scene under various lighting conditions.

Another example is shown in the video below, where a new set of vehicles is added after a new road obstacle has been inserted. With the lighting simulated by LightSim, the newly added vehicles integrate seamlessly into the scene.

LightSim is a lighting-aware camera simulation platform for large-scale dynamic driving scenes. It builds lighting-aware digital twins from real-world data and modifies them to create new scenes with different object layouts and SDV viewpoints. LightSim can simulate new lighting conditions in a scene for diverse, realistic, and controllable camera simulation, producing temporally and spatially consistent video. LightSim can also be combined with inverse rendering, weather simulation, and other techniques to further improve simulation performance.

