Kuaishou's research result SAMP recognized at the EMNLP 2023 international conference

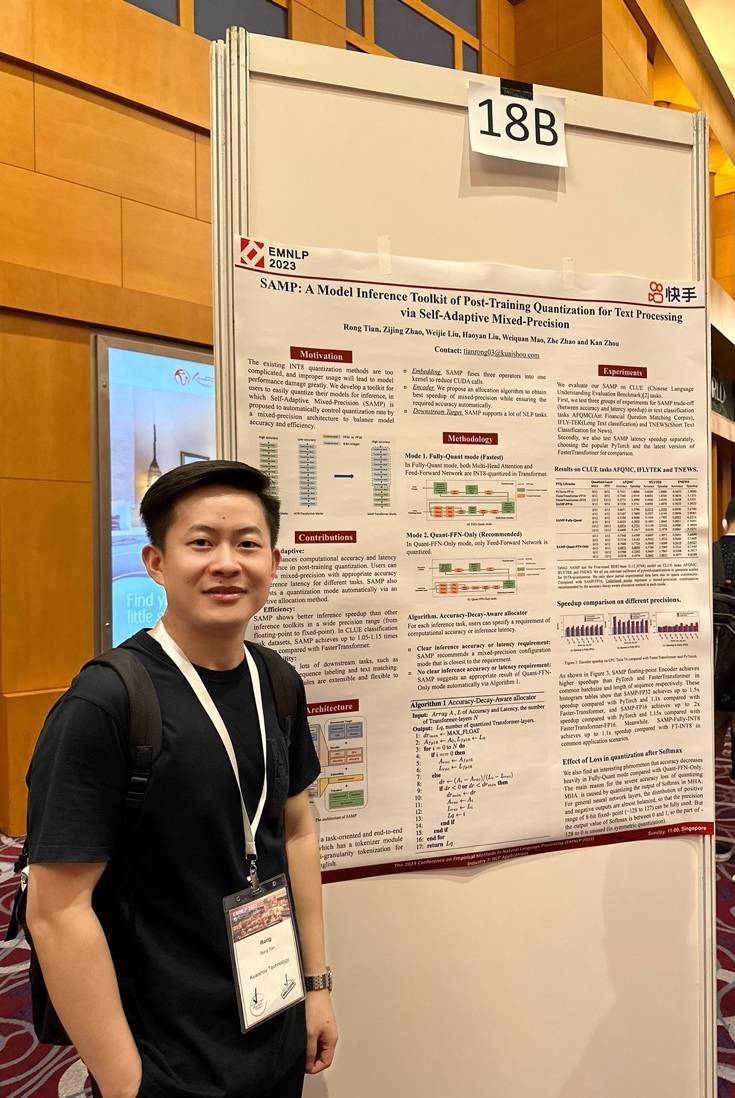

As deep learning models are widely deployed in fields such as natural language processing, model inference speed and performance have become pressing concerns. Recently, the Kuaishou-led paper "SAMP: Post-Training Quantization Model Inference Library Based on Adaptive Mixed Precision" was accepted at EMNLP 2023, a top conference, for presentation in Singapore.

This study proposes SAMP, an inference acceleration toolkit that uses adaptive mixed precision to significantly increase inference speed while maintaining model performance. It consists of an adaptive mixed-precision encoder and a series of advanced fusion strategies. The adaptive mixed-precision encoder searches the many general matrix multiplication (GEMM) operations and Transformer layers for the best combination of floating-point and fixed-point precision, so that inference comes as close as possible to the user's priority, whether that is computational accuracy or inference efficiency. As a result, mixed-precision computation achieves better accuracy than fully fixed-point computation. The fusion strategies merge and improve the embedding operators and the quantization-related operations, halving the number of CUDA kernel calls. SAMP is also an end-to-end toolkit implemented in C++; it delivers excellent inference speed and lowers the barrier to applying post-training quantization inference in industry.
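To make the floating-point versus fixed-point trade-off concrete, the following is a minimal sketch of symmetric per-tensor INT8 post-training quantization applied to a single GEMM, written in plain Python. It is a generic textbook scheme and is not taken from SAMP; all function names (`quant_scale`, `quantize`, `int8_gemm`, and so on) are hypothetical, and a production toolkit would run these steps inside fused CUDA kernels rather than Python loops.

```python
from typing import List

Matrix = List[List[float]]
IntMatrix = List[List[int]]


def quant_scale(m: Matrix, qmax: int = 127) -> float:
    """Symmetric per-tensor scale: the largest |value| maps to qmax."""
    amax = max(abs(v) for row in m for v in row)
    return amax / qmax if amax > 0 else 1.0


def quantize(m: Matrix, scale: float, qmax: int = 127) -> IntMatrix:
    """Map floats to integers in [-qmax, qmax] (round to nearest, then clamp)."""
    return [[max(-qmax, min(qmax, round(v / scale))) for v in row] for row in m]


def matmul(a, b):
    """Naive GEMM for row-major nested lists (int or float entries)."""
    inner, cols = len(b), len(b[0])
    return [[sum(row[t] * b[t][j] for t in range(inner)) for j in range(cols)]
            for row in a]


def int8_gemm(a: Matrix, b: Matrix) -> Matrix:
    """Fixed-point path: quantize both operands, multiply with integer
    accumulation, then dequantize the result with the product of the scales."""
    sa, sb = quant_scale(a), quant_scale(b)
    acc = matmul(quantize(a, sa), quantize(b, sb))  # exact integer sums
    return [[v * sa * sb for v in row] for row in acc]


def max_abs_error(x: Matrix, y: Matrix) -> float:
    """Largest element-wise gap between two same-shaped matrices."""
    return max(abs(u - v) for rx, ry in zip(x, y) for u, v in zip(rx, ry))


if __name__ == "__main__":
    a = [[0.5, -1.2], [2.0, 0.1]]
    b = [[1.0, 0.3], [-0.7, 0.9]]
    print("float GEMM:", matmul(a, b))
    print("INT8 GEMM: ", int8_gemm(a, b))
    print("max error: ", max_abs_error(matmul(a, b), int8_gemm(a, b)))
```

Comparing the two printed results shows the small rounding error that fixed-point arithmetic introduces. Under this simplified view, a mixed-precision scheme would route each GEMM through either `matmul` (floating point) or `int8_gemm` (fixed point), depending on how sensitive that layer is to this error.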

Table 1 compares SAMP's innovations with those of similar systems.

SAMP has the following main highlights:

1. Adaptive. SAMP balances computational accuracy against latency in post-training quantization inference. Users can choose a mixed-precision configuration with the accuracy and inference latency appropriate to each task, and SAMP can also recommend the best quantization combination through its adaptive allocation method.

2. Inference efficiency. SAMP delivers better inference speedups than other inference toolkits across a wide precision range, from floating point to fixed point. On the classification tasks of the Chinese Language Understanding Evaluation benchmark (CLUE), SAMP achieved a speedup of 1.05 to 1.15 times over FasterTransformer.

3. Flexibility. SAMP covers many downstream tasks, such as classification, sequence labeling, and text matching. Its target modules are extensible and can be flexibly customized. It is user-friendly and has few platform dependencies: SAMP provides C++ and Python APIs and requires only CUDA 11.0 or later. SAMP also provides a number of model conversion tools for converting between model formats.
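The article does not spell out how SAMP's adaptive allocation works, so the following is only a hypothetical illustration of the general idea behind recommending a mixed-precision configuration. It is a greedy planner that moves layers to INT8, cheapest accuracy cost per millisecond saved first, until a user-chosen accuracy budget is used up. The `LayerProfile` fields, the example profiling numbers, and the assumption that per-layer accuracy drops add up independently are all illustrative assumptions, not SAMP's actual algorithm.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class LayerProfile:
    name: str
    acc_drop: float      # assumed: accuracy lost if only this layer runs in INT8
    latency_gain: float  # assumed: latency saved (ms) if this layer runs in INT8


def choose_int8_layers(layers: List[LayerProfile],
                       max_acc_drop: float) -> Tuple[List[str], float]:
    """Greedy mixed-precision plan (hypothetical): visit layers in order of
    accuracy cost per millisecond saved and move each one to INT8 unless it
    would push the summed accuracy drop past the budget. Unchosen layers stay
    in floating point. Assumes per-layer accuracy drops are additive."""
    def cost_per_ms(layer: LayerProfile) -> float:
        if layer.latency_gain > 0:
            return layer.acc_drop / layer.latency_gain
        return float("inf")  # no speedup: consider this layer last

    chosen: List[str] = []
    total_drop = 0.0
    total_gain = 0.0
    for layer in sorted(layers, key=cost_per_ms):
        if total_drop + layer.acc_drop <= max_acc_drop:
            chosen.append(layer.name)
            total_drop += layer.acc_drop
            total_gain += layer.latency_gain
    return chosen, total_gain


if __name__ == "__main__":
    # Made-up profiling numbers, for illustration only.
    profile = [
        LayerProfile("encoder.0", acc_drop=0.05, latency_gain=1.0),
        LayerProfile("encoder.1", acc_drop=0.10, latency_gain=1.0),
        LayerProfile("encoder.2", acc_drop=0.60, latency_gain=1.0),
        LayerProfile("encoder.3", acc_drop=0.20, latency_gain=2.5),
    ]
    for budget in (0.0, 0.2, 0.4):
        print(budget, choose_int8_layers(profile, max_acc_drop=budget))
```

Raising `max_acc_drop` trades accuracy for latency, mirroring the accuracy-versus-latency choice described in the list above. A real system would still need to re-measure accuracy on the combined configuration, because per-layer effects are rarely exactly additive.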

Picture 1: The paper will be presented at EMNLP 2023.

Tian Rong, the lead researcher at Kuaishou, said that these results come from the joint efforts of the whole team and have performed well in scenarios such as model inference. SAMP contributes in three ways: first, it addresses the large accuracy loss of existing post-training quantization (PTQ) inference tools in industrial applications; second, it promotes the large-scale use of PTQ across multiple downstream NLP tasks; and third, the inference library is lightweight, flexible, and user-friendly, and it supports user-defined task goals.

EMNLP (Empirical Methods in Natural Language Processing) is one of the top international conferences in natural language processing and artificial intelligence. It focuses on research into natural language processing technology across application scenarios, with particular emphasis on empirical methods. The conference has fostered core innovations in the field, such as pre-trained language models, text mining, dialogue systems, and machine translation, and it is highly influential in both academia and industry. The paper's acceptance indicates that Kuaishou's research in this field has been recognized by international scholars.
