OpenAI guidance allows the board to stop the CEO from releasing new models to guard against AI risks

To guard against the major risks that artificial intelligence (AI) may bring, OpenAI has decided to give its board of directors greater power to oversee safety matters and to supervise CEO Sam Altman closely, only a month after he prevailed in an internal power struggle.

OpenAI released a set of guidelines on Monday, December 18, Eastern Time, designed to track, assess, predict, and prevent catastrophic risks posed by increasingly powerful AI models. OpenAI defines "catastrophic risk" as any risk that could result in hundreds of billions of dollars in economic losses, or in serious injury or death to many people.

The 27-page guidance, known as the "Preparedness Framework," states that even if the company's top managers, including the CEO or a person designated by leadership, believe that an AI model about to be released is safe, the board of directors can still choose to postpone the release. This means that while OpenAI's CEO is responsible for day-to-day decisions, the board of directors will be informed of any risks that are discovered and has the power to veto the CEO's decisions.

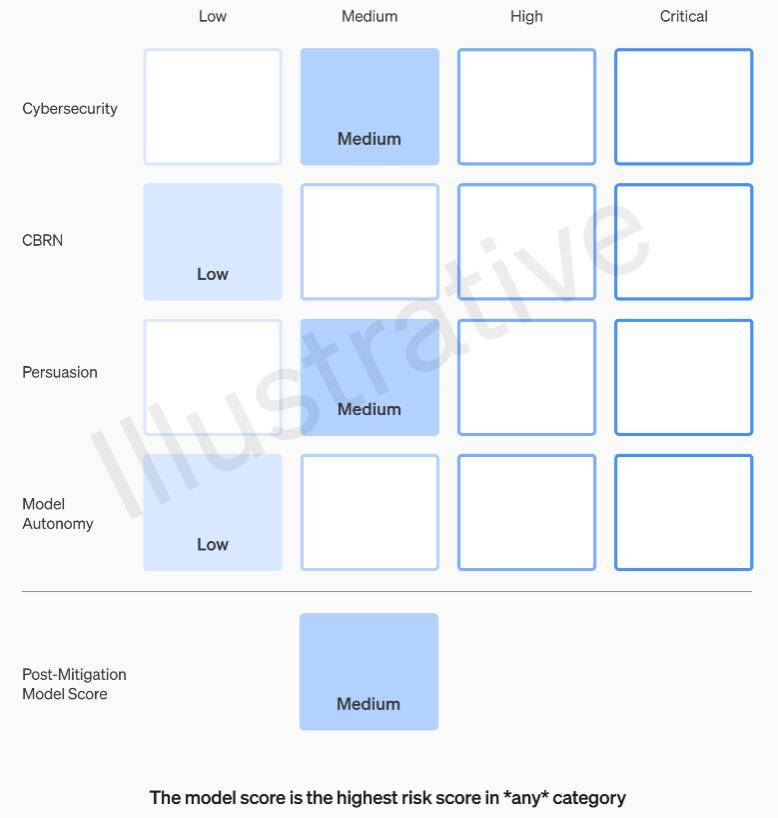

In addition to its provisions on the authority of company leadership and the board, OpenAI's Preparedness Framework recommends using a matrix to document the level of risk that frontier AI models pose across multiple categories. These risks include bad actors using AI models to create malware, launch social engineering attacks, or spread harmful information about nuclear or biological weapons.

Specifically, OpenAI sets risk thresholds in four categories: cybersecurity, CBRN (chemical, biological, radiological, and nuclear threats), persuasion, and model autonomy. Both before and after risk mitigation measures are implemented, OpenAI classifies each risk into one of four levels: low, medium, high, or critical.

OpenAI stipulates that only AI models rated "medium" or below after risk mitigation can be deployed, and only models rated "high" or below after risk mitigation can continue to be developed. If the risk cannot be reduced below "critical," the company will stop developing the model. OpenAI will also take additional security measures for models assessed as high or critical risk until the risk is mitigated.
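The gating rule described above can be sketched in a few lines of Python. This is only an illustration of the thresholds as reported here, not OpenAI's implementation: the names `Risk`, `CATEGORIES`, `overall`, `can_deploy`, and `can_continue_development` are hypothetical, the highest level is written as `CRITICAL`, and the example scores are made up. The sketch also assumes that a model's overall rating is the highest post-mitigation rating across the four categories, which the article does not state.

```python
from enum import IntEnum


class Risk(IntEnum):
    """Four risk levels, ordered from least to most severe."""
    LOW = 0
    MEDIUM = 1
    HIGH = 2
    CRITICAL = 3


# The four tracked categories named in the article (identifiers are hypothetical).
CATEGORIES = ("cybersecurity", "cbrn", "persuasion", "model_autonomy")


def overall(post_mitigation: dict[str, Risk]) -> Risk:
    """Overall rating, ASSUMED here to be the worst score across all categories."""
    missing = set(CATEGORIES) - set(post_mitigation)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return max(post_mitigation[c] for c in CATEGORIES)


def can_deploy(post_mitigation: dict[str, Risk]) -> bool:
    """Deployment is allowed only at 'medium' or below after mitigation."""
    return overall(post_mitigation) <= Risk.MEDIUM


def can_continue_development(post_mitigation: dict[str, Risk]) -> bool:
    """Further development is allowed only at 'high' or below after mitigation."""
    return overall(post_mitigation) <= Risk.HIGH


# Made-up post-mitigation scorecard for illustration only.
scorecard = {
    "cybersecurity": Risk.MEDIUM,
    "cbrn": Risk.HIGH,
    "persuasion": Risk.LOW,
    "model_autonomy": Risk.LOW,
}

print(overall(scorecard).name)
print(can_deploy(scorecard))
print(can_continue_development(scorecard))
```

With this made-up scorecard, a single "high" rating is enough to block deployment while still permitting further development, which is the asymmetry the two thresholds are meant to create.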

OpenAI divides its safety work among three teams. The Safety Systems team focuses on mitigating and addressing risks posed by current products such as GPT-4. The Superalignment team is concerned with the problems that may arise when future systems exceed human capabilities. In addition, there is a new team called Preparedness, led by Aleksander Madry, a professor in the Department of Electrical Engineering and Computer Science (EECS) at the Massachusetts Institute of Technology (MIT).

The new team will evaluate the development and deployment of frontier models and will be specifically responsible for overseeing the technical work and operational structure behind safety decisions. It will drive technical work, examine the limits of frontier model capabilities, run evaluations, and synthesize the related reports.

Madry said that his team will regularly evaluate the risk level of OpenAI's most advanced AI models that have not yet been released, and will submit monthly reports to OpenAI's internal Safety Advisory Group (SAG). The SAG will analyze the work of Madry's team and provide recommendations to CEO Altman and the company's board of directors.

According to the guidance released on Monday, Altman and his leadership team can use these reports to decide whether to release new AI systems, but the board of directors retains the power to overturn their decisions.

Madry's team currently has only four people, but he is working hard to recruit more. The team is expected to grow to 15 to 20 people, similar in size to the existing Safety Systems and Superalignment teams.

Madry hopes other AI companies will assess the risks of their models in a similar way, and believes this approach could become a model for regulation.
