The historical evolution of Python programming

| Introduction | Once you embark on the road of programming, if you don't figure out the coding problem, it will haunt you like a ghost throughout your career, and various supernatural events will follow one after another and linger. Only by giving full play to the spirit of programmers to fight to the end can you completely get rid of the troubles caused by coding problems. |

The first time I encountered a coding problem was when I was writing a JavaWeb-related project. A string of characters wandered from the browser to the application code, and immersed itself in the database. It is possible to step on coding mines anytime and anywhere. The second time I encountered a coding problem was when I was learning Python. When crawling web page data, the coding problem came up again. At that time, my mood collapsed. The most popular sentence nowadays is: "I was confused at the time. ".

In order to understand character encoding, we have to start from the origin of computers. All data in computers, whether text, pictures, videos, or audio files, are ultimately stored in a digital form similar to 01010101. We are lucky, and we are also unfortunate. Fortunately, the times have given us the opportunity to come into contact with computers. Unfortunately, computers were not invented by our countrymen, so the standards of computers must be designed according to the habits of the American Empire. So in the end How did computers first represent characters? This starts with the history of computer coding.

ASCIIEvery novice who does JavaWeb development will encounter garbled code problems, and every novice who does Python crawler will encounter encoding problems. Why are encoding problems so painful? This problem started when Guido van Rossum created the Python language in 1992. At that time, Guido never expected that the Python language would be so popular today, nor did he expect that the speed of computer development would be so amazing. Guido did not need to care about encoding when he originally designed this language, because in the English world, the number of characters is very limited, 26 letters (upper and lower case), 10 numbers, punctuation marks, and control characters, that is, on the keyboard The characters corresponding to all keys add up to just over a hundred characters. This is more than enough to use one byte of storage space to represent one character in a computer, because one byte is equivalent to 8 bits, and 8 bits can represent 256 symbols. So smart Americans developed a set of character encoding standards called ASCII (American Standard Code for Information Interchange). Each character corresponds to a unique number. For example, the binary value corresponding to character A is 01000001, and the corresponding decimal value is 65 . At first, ASCII only defined 128 character codes, including 96 text and 32 control symbols, a total of 128 characters. Only 7 bits of one byte are needed to represent all characters, so ASCII only uses one byte. The last 7 bits and the highest bit are all 0.

However, when computers slowly spread to other Western European regions, they discovered that there were many characters unique to Western Europe that were not in the ASCII encoding table, so later an extensible ASCII called EASCII appeared. As the name suggests, it is based on ASCII It is extended on the basis of the original 7 bits to 8 bits. It is fully compatible with ASCII. The extended symbols include table symbols, calculation symbols, Greek letters and special Latin symbols. However, the EASCII era is a chaotic era. There is no unified standard. They each use the highest bit to implement their own set of character encoding standards according to their own standards. The more famous ones are CP437, CP437 is a Windows system The character encoding used in , as shown below:

Another widely used EASCII is ISO/8859-1(Latin-1), which is a series of 8-bit codes jointly developed by the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC). The character set standard, ISO/8859-1, only inherits the characters between 128-159 of the CP437 character encoding, so it is defined starting from 160. Unfortunately, these numerous ASCII extended character sets are incompatible with each other.

As the times progress, computers begin to spread to thousands of households, and Bill Gates makes the dream of everyone having a computer on their desktop come true. However, one problem that computers have to face when entering China is character encoding. Although Chinese characters in our country are the most frequently used characters by humans, Chinese characters are broad and profound, and there are tens of thousands of common Chinese characters, which is far beyond what ASCII encoding can represent. Even EASCII seemed to be a drop in the bucket, so the smart Chinese made their own set of codes called GB2312, also known as GB0, which was released by the State Administration of Standards of China in 1981. GB2312 encoding contains a total of 6763 Chinese characters, and it is also compatible with ASCII. The emergence of GB2312 basically meets the computer processing needs of Chinese characters. The Chinese characters it contains have covered 99.75% of the frequency of use in mainland China. However, GB2312 still cannot 100% meet the needs of Chinese characters. GB2312 cannot handle some rare characters and traditional characters. Later, a code called GBK was created based on GB2312. GBK not only contains 27,484 Chinese characters, but also major ethnic minority languages such as Tibetan, Mongolian, and Uyghur. Similarly, GBK is also compatible with ASCII encoding. English characters are represented by 1 byte, and Chinese characters are represented by two bytes.

Unicode We can set up a separate mountain to deal with Chinese characters and develop a set of coding standards according to our own needs. However, computers are not only used by Americans and Chinese, but also include characters from other countries in Europe and Asia, such as The total number of Japanese and Korean characters from all over the world is estimated to be hundreds of thousands, which is far beyond the range that ASCII code or even GBK can represent. Besides, why do people use your GBK standard? How to express such a huge character library? So the United Alliance International Organization proposed the Unicode encoding. The scientific name of Unicode is "Universal Multiple-Octet Coded Character Set", referred to as UCS.

Unicode has two formats: UCS-2 and UCS-4. UCS-2 uses two bytes to encode, with a total of 16 bits. In theory, it can represent up to 65536 characters. However, to represent all the characters in the world, 65536 numbers are obviously not enough, because there are nearly 65536 characters in Chinese characters alone. 100,000, so the Unicode 4.0 specification defines a set of additional character encodings. UCS-4 uses 4 bytes (actually only 31 bits are used, and the highest bit must be 0).

Unicode can theoretically cover symbols used in all languages. Any character in the world can be represented by a Unicode encoding. Once the Unicode encoding of a character is determined, it will not change. However, Unicode has certain limitations. When a Unicode character is transmitted on the network or finally stored, it does not necessarily require two bytes for each character. For example, one character "A" can be represented by one byte. Characters need to use two bytes, which is obviously a waste of space. The second problem is that when a Unicode character is saved in the computer, it is a string of 01 numbers. So how does the computer know whether a 2-byte Unicode character represents a 2-byte character or two 1-byte characters? , if you don’t tell the computer in advance, the computer will also be confused. Unicode only stipulates how to encode, but does not specify how to transmit or save this encoding. For example, the Unicode encoding of the character "汉" is 6C49. I can use 4 ASCII numbers to transmit and save this encoding; I can also use 3 consecutive bytes E6 B1 89 encoded in UTF-8 to represent it. The key is that both parties to the communication must agree. Therefore, Unicode encoding has different implementation methods, such as: UTF-8, UTF-16, etc. Unicode here is just like English. It is a universal standard for communication between countries. Each country has its own language. They translate standard English documents into the text of their own country. This is the implementation method, just like UTF- 8.

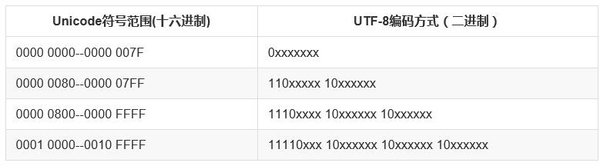

UTF-8 (Unicode Transformation Format) is an implementation of Unicode and is widely used on the Internet. It is a variable-length character encoding that can use 1-4 bytes to represent a character depending on the specific situation. For example, English characters that can originally be expressed in ASCII code only require one byte of space when expressed in UTF-8, which is the same as ASCII. For multi-byte (n bytes) characters, the first n bits of the first byte are set to 1, the nth bit is set to 0, and the first two bits of the following bytes are set to 10. The remaining binary digits are filled with the UNICODE code of the character.

Take the Chinese character "好" as an example. The Unicode corresponding to "好" is 597D, and the corresponding interval is 0000 0800 -- 0000 FFFF. Therefore, when it is expressed in UTF-8, it requires 3 bytes to store. 597D is expressed in binary. : 0101100101111101, fill to 1110xxxx 10xxxxxx 10xxxxxx to get 11100101 10100101 10111101, converted to hexadecimal: E5A5BD, so the UTF-8 encoding corresponding to the "good" Unicode "597D" is "E5A5BD".

unicode 0101 100101 111101

Encoding rules 1110xxxx 10xxxxxx 10xxxxxx

--------------------------

utf-8 11100101 10100101 10111101

--------------------------

Hexadecimal utf-8 e 5 a 5 b d Python character encoding

Now I finally finish the theory. Let’s talk about coding issues in Python. Python was born much earlier than Unicode, and Python's default encoding is ASCII.

>>> import sys

>>> sys.getdefaultencoding()

'ascii'

Therefore, if the encoding is not explicitly specified in the Python source code file, a syntax error will occur

#test.py

print "Hello"

The above is the test.py script, run

python test.py

will include the following error:

File “test.py”, line 1 yntaxError: Non-ASCII character ‘/xe4′ in file test.py on line 1, but no encoding declared;

In order to support non-ASCII characters in source code, the encoding format must be explicitly specified on the first or second line of the source file:

# coding=utf-8

or it could be:

#!/usr/bin/python # -*- coding: utf-8 -*-

The data types related to strings in Python are str and unicode. They are both subclasses of basestring. It can be seen that str and unicode are two different types. A string object of type .

basestring

/ /

/ /

str unicodeFor the same Chinese character "好", when expressed as str, it corresponds to the UTF-8 encoding '/xe5/xa5/xbd', and when expressed as Unicode, its corresponding symbol is u'/u597d' is equivalent to u "good". It should be added that the specific encoding format of str type characters is UTF-8, GBK, or other formats, depending on the operating system. For example, in Windows system, the cmd command line displays:

# windows终端 >>> a = '好' >>> type(a) <type 'str'> >>> a '/xba/xc3'

What is displayed in the command line of the Linux system is:

# linux终端 >>> a='好' >>> type(a) <type 'str'> >>> a '/xe5/xa5/xbd' >>> b=u'好' >>> type(b) <type 'unicode'> >>> b u'/u597d'

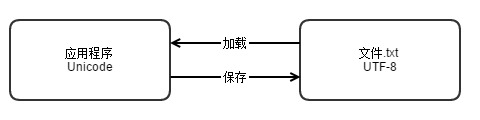

Whether it is Python3x, Java or other programming languages, Unicode encoding has become the default encoding format of the language. When the data is finally saved to the media, different media can use different methods. Some people like to use UTF-8 , some people like to use GBK, it doesn't matter, as long as the platform has a unified encoding standard, it doesn't matter how it is implemented.

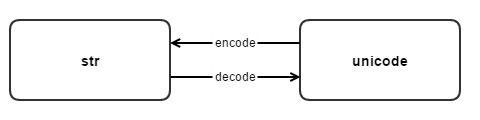

So how to convert between str and unicode in Python? The conversion between these two types of string types relies on these two methods: decode and encode.

#从str类型转换到unicode

s.decode(encoding) =====> <type 'str'> to <type 'unicode'>

#从unicode转换到str

u.encode(encoding) =====> <type 'unicode'> to <type 'str'>

>>> c = b.encode('utf-8')

>>> type(c)

<type 'str'>

>>> c

'/xe5/xa5/xbd'

>>> d = c.decode('utf-8')

>>> type(d)

<type 'unicode'>

>>> d

u'/u597d'

This'/xe5/xa5/xbd' is the UTF-8 encoded str type string obtained by Unicode u'hao'encoding through the function encode. Vice versa, str type c is decoded into Unicode string d through the function decode.

str(s) and unicode(s)str(s) and unicode(s) are two factory methods that return str string objects and Unicode string objects respectively. str(s) is the abbreviation of s.encode(‘ascii’). experiment:

>>> s3 = u"你好" >>> s3 u'/u4f60/u597d' >>> str(s3) Traceback (most recent call last): File "<stdin>", line 1, in <module> UnicodeEncodeError: 'ascii' codec can't encode characters in position 0-1: ordinal not in range(128)

The above s3 is a Unicode type string. str(s3) is equivalent to executing s3.encode('ascii'). Because the two Chinese characters "Hello" cannot be represented by ASCII code, an error is reported. The specification is correct. Encoding: s3.encode('gbk') or s3.encode('utf-8') will not cause this problem. Similar Unicode has the same error:

>>> s4 = "你好" >>> unicode(s4) Traceback (most recent call last): File "<stdin>", line 1, in <module> UnicodeDecodeError: 'ascii' codec can't decode byte 0xc4 in position 0: ordinal not in range(128) >>>

unicode(s4)Equivalent to s4.decode('ascii')

, so for correct conversion, you must correctly specify its encoding s4.decode('gbk') or s4.decode('utf-8').

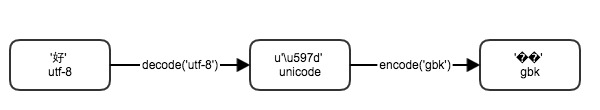

All reasons for garbled characters can be attributed to the inconsistent encoding formats used in the encoding process of characters after different encoding and decoding, such as:

# encoding: utf-8

>>> a='好'

>>> a

'/xe5/xa5/xbd'

>>> b=a.decode("utf-8")

>>> b

u'/u597d'

>>> c=b.encode("gbk")

>>> c

'/xba/xc3'

>>> print c

UTF-8 encoded character '好' occupies 3 bytes. After decoding to Unicode, if you use GBK to decode, it will only be 2 bytes long, and garbled characters will appear in the end. problem, so the best way to prevent garbled characters is to always use the same encoding format to encode and decode characters.

For strings in Unicode form (str type):

s = 'id/u003d215903184/u0026index/u003d0/u0026st/u003d52/u0026sid'

To convert to real Unicode you need to use:

s.decode('unicode-escape')test:

>>> s = 'id/u003d215903184/u0026index/u003d0/u0026st/u003d52/u0026sid/u003d95000/u0026i'

>>> print(type(s))

<type 'str'>

>>> s = s.decode('unicode-escape')

>>> s

u'id=215903184&index=0&st=52&sid=95000&i'

>>> print(type(s))

<type 'unicode'>

>>>

The above codes and concepts are based on Python2.x.

The above is the detailed content of The historical evolution of Python programming. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1378

1378

52

52

How to start nginx in Linux

Apr 14, 2025 pm 12:51 PM

How to start nginx in Linux

Apr 14, 2025 pm 12:51 PM

Steps to start Nginx in Linux: Check whether Nginx is installed. Use systemctl start nginx to start the Nginx service. Use systemctl enable nginx to enable automatic startup of Nginx at system startup. Use systemctl status nginx to verify that the startup is successful. Visit http://localhost in a web browser to view the default welcome page.

How to check whether nginx is started

Apr 14, 2025 pm 01:03 PM

How to check whether nginx is started

Apr 14, 2025 pm 01:03 PM

How to confirm whether Nginx is started: 1. Use the command line: systemctl status nginx (Linux/Unix), netstat -ano | findstr 80 (Windows); 2. Check whether port 80 is open; 3. Check the Nginx startup message in the system log; 4. Use third-party tools, such as Nagios, Zabbix, and Icinga.

How to solve nginx403

Apr 14, 2025 am 10:33 AM

How to solve nginx403

Apr 14, 2025 am 10:33 AM

How to fix Nginx 403 Forbidden error? Check file or directory permissions; 2. Check .htaccess file; 3. Check Nginx configuration file; 4. Restart Nginx. Other possible causes include firewall rules, SELinux settings, or application issues.

How to start nginx server

Apr 14, 2025 pm 12:27 PM

How to start nginx server

Apr 14, 2025 pm 12:27 PM

Starting an Nginx server requires different steps according to different operating systems: Linux/Unix system: Install the Nginx package (for example, using apt-get or yum). Use systemctl to start an Nginx service (for example, sudo systemctl start nginx). Windows system: Download and install Windows binary files. Start Nginx using the nginx.exe executable (for example, nginx.exe -c conf\nginx.conf). No matter which operating system you use, you can access the server IP

How to check whether nginx is started?

Apr 14, 2025 pm 12:48 PM

How to check whether nginx is started?

Apr 14, 2025 pm 12:48 PM

In Linux, use the following command to check whether Nginx is started: systemctl status nginx judges based on the command output: If "Active: active (running)" is displayed, Nginx is started. If "Active: inactive (dead)" is displayed, Nginx is stopped.

How to solve nginx403 error

Apr 14, 2025 pm 12:54 PM

How to solve nginx403 error

Apr 14, 2025 pm 12:54 PM

The server does not have permission to access the requested resource, resulting in a nginx 403 error. Solutions include: Check file permissions. Check the .htaccess configuration. Check nginx configuration. Configure SELinux permissions. Check the firewall rules. Troubleshoot other causes such as browser problems, server failures, or other possible errors.

How to solve nginx304 error

Apr 14, 2025 pm 12:45 PM

How to solve nginx304 error

Apr 14, 2025 pm 12:45 PM

Answer to the question: 304 Not Modified error indicates that the browser has cached the latest resource version of the client request. Solution: 1. Clear the browser cache; 2. Disable the browser cache; 3. Configure Nginx to allow client cache; 4. Check file permissions; 5. Check file hash; 6. Disable CDN or reverse proxy cache; 7. Restart Nginx.

How to clean nginx error log

Apr 14, 2025 pm 12:21 PM

How to clean nginx error log

Apr 14, 2025 pm 12:21 PM

The error log is located in /var/log/nginx (Linux) or /usr/local/var/log/nginx (macOS). Use the command line to clean up the steps: 1. Back up the original log; 2. Create an empty file as a new log; 3. Restart the Nginx service. Automatic cleaning can also be used with third-party tools such as logrotate or configured.