Analytical ideas for cloud platform architecture of large group companies

Over the past two decades, many large domestic group companies have built extensive business information systems, including OA, ERP, CRM, and HRM systems and various industry-specific applications. To keep these systems running stably over the long term, they have also built a series of supporting information systems, such as monitoring systems, identity authentication systems, and security operation centers. During construction, however, each system is usually tendered and procured independently and holds its own software and hardware exclusively. Tendering is not centralized and resources are not shared with other systems, which leads to widespread resource waste and high construction costs. Operation and maintenance are likewise handled independently: staff, tools, and techniques are not shared across systems, resulting in high costs and low efficiency. As existing systems grow and new ones are added, these problems become more prominent. More and more large group companies are therefore introducing private clouds to address them.

Large group companies have distinctive IT infrastructure, which gives rise to relatively unique cloud computing needs. The overall architecture of the cloud platform must therefore be designed around both the current state of their IT and those needs. To that end, this article takes the industry-standard cloud platform architecture as a base and proposes an adapted architecture intended to meet the cloud computing needs of large group companies.

IT infrastructure characteristics of large group companies

After years of IT construction, the IT infrastructure of large group companies has gradually developed the following characteristics:

1) The IT organizational structure is relatively complex. Large group companies set up subsidiaries across the country, or even around the world, to carry out business. The group sets up a headquarters-level information management unit, and subsidiaries usually have their own IT departments. Some large group companies also set up a dedicated subsidiary for IT system construction and operation and maintenance. In addition, large group companies merge or split over the years as the business develops, and their IT departments merge or split accordingly. Together, these factors make the IT organizational structure very complex. Typically, one subsidiary is responsible for the construction, operation, and maintenance of several information systems and sets up a dedicated project team for each.

2) Data centers are organized hierarchically. Large group companies have usually built, or plan to build, a headquarters-level data center architecture of "two places and three centers" (three data centers across two locations), and allow each branch to build a regional data center nearby. The headquarters-level and regional-level data centers form a two-level architecture and are interconnected over the WAN. Headquarters-level data centers usually host information systems used across the entire group, while regional-level data centers host systems dedicated to regional subsidiaries or systems that are sensitive to network latency.

3) IT resources are highly heterogeneous. Large group companies already have a large number of information systems, each built by a different project team at a different time. The software and hardware were purchased from different manufacturers and product lines, so a large number of heterogeneous IT resources coexist.

4) IT resource allocation strategies are diverse. Each information system in a large group company may need a different resource allocation strategy. Core systems need a best-performance allocation strategy, while non-core systems can use a best-utilization strategy. Applications with high performance requirements need to be deployed on physical machines, and applications with lower performance requirements can be deployed on virtual machines.

5) There are many legacy information systems. Before building a cloud platform, large group companies already have supporting information systems such as monitoring systems, ITSM systems, CMDBs, identity authentication systems, and security operation centers. The functions these systems provide would normally be part of a complete cloud platform, but because they already exist, there is no need to build them again. The question is instead how to integrate them.

Cloud computing business needs of large group companies

The characteristics of the IT infrastructure of large group companies determine that the business requirements for cloud platforms are also relatively unique.

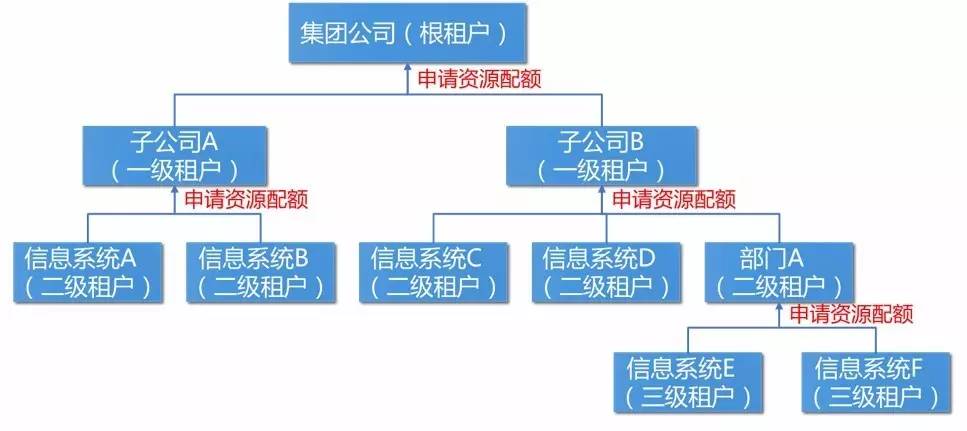

1) The tenant system of the cloud platform needs to be designed as a multi-level structure. As shown in Figure 1, the cloud platform architecture needs multi-level tenants. The root tenant corresponds to the entire group company and uniformly manages and distributes all cloud resources within the group, that is, all resources managed by the cloud platform. A first-level tenant corresponds to a subsidiary, which is the unit that builds and manages information systems. One subsidiary can manage multiple information systems. A second-level tenant can correspond to an information system, that is, to the project team that builds and operates it. For large subsidiaries, second-level tenants can instead be mapped to internal departments, with third-level tenants set up under them to correspond to the information systems. When a lower-level tenant needs resources, it applies for a resource quota from its parent tenant. The parent checks the quota it currently has available. If that is enough, it allocates the quota to the lower-level tenant. If not, it applies in turn to its own parent. The resource quota of the root tenant is the set of all available resources of the cloud platform, so if the root tenant's quota is insufficient, the platform's resources need to be expanded promptly. This multi-level tenant architecture is an application of the Composite design pattern.

Figure 1 – Cloud platform tenant system
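The quota escalation described for the tenant tree can be sketched in a few lines. This is a minimal illustration rather than part of the original design: the `Tenant` class, its field names, and the abstract integer quota units (for example vCPUs) are all assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(eq=False)
class Tenant:
    """A node in the tenant tree (hypothetical model, abstract quota units)."""
    name: str
    quota: int = 0          # quota granted to this tenant
    allocated: int = 0      # part of the quota already handed to children
    parent: Tenant | None = None
    children: list[Tenant] = field(default_factory=list)

    def add_child(self, name: str) -> Tenant:
        child = Tenant(name, parent=self)
        self.children.append(child)
        return child

    def available(self) -> int:
        return self.quota - self.allocated

    def request(self, amount: int) -> bool:
        """Ask the parent for more quota, escalating upward when it is short."""
        if self.parent is None:
            # Root tenant: nothing above it, so the platform must be expanded.
            return False
        shortfall = amount - self.parent.available()
        if shortfall > 0 and not self.parent.request(shortfall):
            return False
        self.parent.allocated += amount
        self.quota += amount
        return True


group = Tenant("group", quota=100)            # root tenant
subsidiary = group.add_child("subsidiary-a")  # first-level tenant
erp_team = subsidiary.add_child("erp-team")   # second-level tenant

granted = erp_team.request(30)   # subsidiary has nothing, so it asks the group
refused = erp_team.request(80)   # exceeds what the root has left
```

A failed request leaves every quota unchanged, because the escalation is checked before any counter is updated.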

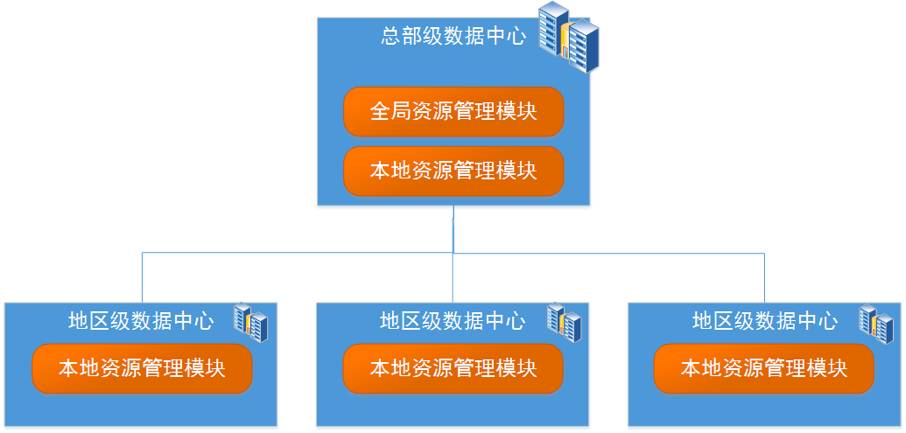

2) The resource management system of the cloud platform needs to reflect the two-level data center architecture. As shown in Figure 2, the cloud platform needs a two-level resource management system. The local resource management module manages and controls the resources in its own data center, reports that data center's resource inventory and usage to the global resource management module, and receives and executes the instructions the global module issues. The global resource management module collects and summarizes the information reported by each local module to form a global view of resources, uniformly manages and schedules the resources in all data centers, and issues instructions to the local modules. In terms of deployment, a local resource management module is deployed in every data center, both headquarters-level and regional, while the global resource management module is deployed only in the headquarters-level data center.

Figure 2 – Cloud platform resource management system
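The report-and-dispatch relationship between the two levels can be sketched as follows. The class names, method names, and the "first data center with enough capacity" placement rule are assumptions made for illustration. The article does not prescribe a placement rule.

```python
from __future__ import annotations

from collections import defaultdict


class LocalResourceManager:
    """Manages one data center and reports its inventory upward."""

    def __init__(self, data_center: str, free: dict[str, int]):
        self.data_center = data_center
        self.free = dict(free)   # resource type -> free units

    def report(self) -> dict[str, int]:
        return dict(self.free)

    def execute(self, resource_type: str, amount: int) -> bool:
        """Carry out an allocation instruction from the global manager."""
        if self.free.get(resource_type, 0) < amount:
            return False
        self.free[resource_type] -= amount
        return True


class GlobalResourceManager:
    """Deployed once at headquarters; aggregates reports and dispatches."""

    def __init__(self, local_managers: list[LocalResourceManager]):
        self.local_managers = local_managers

    def global_view(self) -> dict[str, int]:
        totals = defaultdict(int)
        for manager in self.local_managers:
            for resource_type, amount in manager.report().items():
                totals[resource_type] += amount
        return dict(totals)

    def allocate(self, resource_type: str, amount: int) -> str | None:
        # Naive placement: the first data center that can satisfy the request.
        for manager in self.local_managers:
            if manager.execute(resource_type, amount):
                return manager.data_center
        return None


hq = LocalResourceManager("hq", {"vcpu": 8})
east = LocalResourceManager("region-east", {"vcpu": 32})
global_manager = GlobalResourceManager([hq, east])

view_before = global_manager.global_view()
placed_in = global_manager.allocate("vcpu", 16)
view_after = global_manager.global_view()
```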

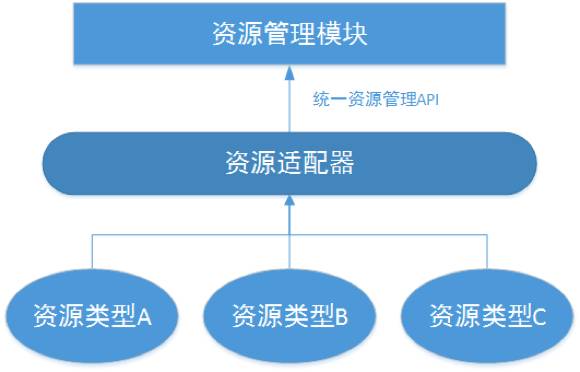

3) The resource management module of the cloud platform needs to support heterogeneous resources. Because large group companies have many heterogeneous resources, the resource management module should avoid operating the underlying resources directly through their APIs or CLIs. Instead, a resource adapter is placed in between and handles the differences among the underlying resources. For resources from different manufacturers and of different models, the resource adapter presents a unified resource management API to the resource management module, as shown in Figure 3. Supporting a new kind of heterogeneous resource then only requires extending the resource adapter, without modifying the code of the resource management module. This is an application of the Facade design pattern.

Figure 3 – Resource Adapter
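A minimal sketch of the idea, using storage as the example. The vendors, identifiers, and command strings below are invented for illustration and do not correspond to any real product API. The point is only that the resource management code calls one interface regardless of the backend.

```python
from abc import ABC, abstractmethod


class StorageAdapter(ABC):
    """Unified API that the resource management module programs against."""

    @abstractmethod
    def create_volume(self, name: str, size_gb: int) -> str:
        """Create a volume and return a backend-specific identifier."""


class VendorAArrayAdapter(StorageAdapter):
    # Hypothetical vendor whose native API measures capacity in MB.
    def create_volume(self, name: str, size_gb: int) -> str:
        size_mb = size_gb * 1024
        return f"vendor-a:lun/{name}?mb={size_mb}"


class VendorBArrayAdapter(StorageAdapter):
    # Hypothetical vendor driven through a CLI-style command string.
    def create_volume(self, name: str, size_gb: int) -> str:
        return f"vendor-b: mkvol --name {name} --size {size_gb}G"


def provision(adapter: StorageAdapter, name: str, size_gb: int) -> str:
    """Resource management code: identical for every backend."""
    return adapter.create_volume(name, size_gb)


volume_a = provision(VendorAArrayAdapter(), "db01", 2)
volume_b = provision(VendorBArrayAdapter(), "db01", 2)
```

Adding a third vendor means writing one more `StorageAdapter` subclass. `provision` stays untouched.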

4) The cloud platform needs to support customized resource allocation strategies. The cloud platform ships with some common resource allocation strategies built in, but it cannot include every possible strategy. It must allow information system administrators to customize resource allocation strategies according to their business needs, so the cloud platform needs a policy engine module responsible for defining, parsing, and executing policies. This is an application of the Strategy design pattern.
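As a rough sketch, a policy can be modeled as a function that chooses a host, and the policy engine as a registry that executes the chosen policy. The `Host` model, the two built-in policies, and the `name_order` custom policy are assumptions for illustration. Parsing policies from a definition language is left out.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    free_cpu: int


def best_performance(hosts, cpus):
    """Built-in policy: pick the host with the most free CPU."""
    fits = [h for h in hosts if h.free_cpu >= cpus]
    return max(fits, key=lambda h: h.free_cpu, default=None)


def best_utilization(hosts, cpus):
    """Built-in policy: pick the tightest fit to keep hosts densely packed."""
    fits = [h for h in hosts if h.free_cpu >= cpus]
    return min(fits, key=lambda h: h.free_cpu, default=None)


class PolicyEngine:
    def __init__(self):
        # The policy repository starts with the built-in policies.
        self._repository = {
            "best_performance": best_performance,
            "best_utilization": best_utilization,
        }

    def register(self, name, policy):
        """Store an administrator-defined policy for later reuse."""
        self._repository[name] = policy

    def allocate(self, policy_name, hosts, cpus):
        host = self._repository[policy_name](hosts, cpus)
        if host is not None:
            host.free_cpu -= cpus
        return host


hosts = [Host("host-a", 16), Host("host-b", 4)]
engine = PolicyEngine()
core = engine.allocate("best_performance", hosts, 4)
batch = engine.allocate("best_utilization", hosts, 4)

# A custom policy: the first host in name order that has room.
engine.register("name_order", lambda hs, n: next(
    (h for h in sorted(hs, key=lambda h: h.name) if h.free_cpu >= n), None))
custom = engine.allocate("name_order", hosts, 4)
```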

5) The cloud platform needs to be integrated with existing supporting information systems. The cloud platform is itself a supporting information system, and a complete cloud platform needs to provide monitoring, identity authentication, log analysis, and similar functions. These functions, however, often already exist in the supporting information systems that are in place. The cloud platform design should therefore fully consider reusing those systems in order to protect existing IT investments. This requires the cloud platform to reserve integration interfaces for data exchange and application integration with these systems. The Adapter design pattern is used here.

Overview of cloud platform architecture of large group companies

Based on the cloud computing business needs described above, and with reference to industry cloud platform architectures and related international standards, this article proposes the cloud platform architecture shown in Figure 4.

The cloud platform framework includes resource adapters, resource pools, resource management modules (local and global), a policy engine, a process engine, a resource orchestration module, a service management module, a portal module, an operation management module, an operation and maintenance management module, and a plug-in management module. At the bottom, the resource adapter adapts, organizes, and integrates the underlying heterogeneous IT resources to build the various resource pools, and provides a unified resource management API to the resource management module above it. The resource management module manages the resource pools, manages the resources in them according to the resource life cycle, and allocates and schedules resources from the pools according to user needs.

To allocate and schedule resources more flexibly, the architecture includes a process engine, a policy engine, and a resource orchestration module. The process engine chains operation steps together to implement automated processes. The policy engine lets administrators customize resource allocation strategies according to business needs. The resource orchestration module arranges and connects cloud resources into resource composition templates so that complex information systems can be deployed or expanded quickly. The service management module manages cloud services according to their life cycle. The portal module provides unified operation interfaces for cloud platform users, tenant administrators, and platform administrators. The operation and maintenance management module is responsible for the technical operation and maintenance of the cloud platform and resource pools. It follows the ITIL v3 standard and is oriented toward machines and systems. The operation management module is responsible for the business operations of the cloud platform and is oriented toward users and tenants. The plug-in management module provides a plug-in mechanism for the cloud platform and integrates with existing supporting information systems through plug-ins. Each business information system identifies the cloud services it needs based on business requirements, completes the production and release of those services in the cloud platform to form a service catalog, and is then deployed by applying for cloud services.

Figure 4 – Cloud platform architecture

Large group companies have a large number of heterogeneous network, storage, virtualization, and physical machine resources. Network resources include firewalls, load balancers, and network switches from various manufacturers and with various technical specifications. Storage resources include storage arrays and SAN switches from different manufacturers and of different models. Virtualization resources include hypervisors from different manufacturers and in different versions. Physical machine resources include x86 servers or minicomputers from different manufacturers and of different models. To provide a unified API for the resource management module, the architecture defines a resource adapter for each type of resource: network resource adapters, storage resource adapters, virtualization adapters, and physical machine adapters. These adapters shield the resource management module from the heterogeneity and complexity of the underlying resources. The resource management module only calls the adapter's API, and the adapter does the actual work of controlling the underlying resources. In addition, by adapting these resources, the adapters organize, integrate, and pool them into a network resource pool, a storage resource pool, a virtual server resource pool, and a physical server resource pool. The cloud platform needs to place resources with the same capabilities and attributes into the same pool according to defined rules, for example putting high-performance storage into a gold storage pool and medium-performance storage into a silver storage pool. From the perspective of the resource management module, only the resource pools are visible, not the underlying heterogeneous resources.

Above the resource pools is the resource management module, which consists of the local and global resource management modules. The local resource management module manages and controls the resource pools of its own data center and brings the resources in those pools under cloud platform management. It executes the resource deployment commands issued by the global resource management module by allocating, scheduling, scaling, and monitoring resources, and feeds the results back to the global module. Resource allocation means selecting the best resources for a system when it is deployed. Resource scheduling means scaling resources vertically or horizontally while the system is running, as business volume rises or falls. The global resource management module collects and summarizes the resource information reported by each local module to form a global view of resources, which can be examined by tenant, data center, resource type, and other dimensions. It uniformly monitors, allocates, and schedules the resources located in different data centers. It is also responsible for handling user resource deployment requests centrally and for issuing resource allocation and scheduling commands to each local module. Together, local and global resource management form a two-level system that combines centralized management and control of resources with distributed deployment.

The policy engine is responsible for defining, parsing, and executing resource allocation policies, enabling automatic policy-based allocation and scheduling. If the policies in the policy repository cannot meet business requirements, administrators can define custom policies so that an information system has its resources allocated and scheduled according to its own specific policy. Custom policies can be stored in the policy repository for reuse by other information systems. Policies are divided into allocation policies and scheduling policies. An allocation policy determines how the best resources are selected when an information system is deployed. A scheduling policy determines how resources are adjusted and migrated while the system is running, in response to maintenance requirements or to changes in business volume.

The process engine chains together the operation steps involved in resource allocation, scheduling, configuration, and operation and maintenance so that they flow automatically, and builds on this to implement complex automated processes, including server, network, and storage automation processes. The process engine allows administrators to define and orchestrate processes. During actual resource allocation, scheduling, configuration, and operation and maintenance, it parses and executes the processes involved, which makes the automated processes configurable. Processes created by administrators can be stored in the process repository for reuse.
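In its simplest form, a process is an ordered list of steps that share a context, and the process repository is a dictionary of named processes. The sketch below assumes exactly that. The `ProcessEngine` class and the three step functions are hypothetical, and a real engine would also need error handling, rollback, and a process definition format.

```python
class ProcessEngine:
    def __init__(self):
        self._repository = {}   # process name -> ordered list of steps

    def define(self, name, steps):
        """Store a process so that it can be reused later."""
        self._repository[name] = list(steps)

    def run(self, name, context):
        """Execute every step in order, passing the shared context along."""
        for step in self._repository[name]:
            step(context)
        return context


# Hypothetical operation steps; each one only records what it would do.
def create_vm(ctx):
    ctx["log"].append(f"create vm {ctx['vm']}")


def attach_network(ctx):
    ctx["log"].append(f"attach {ctx['vm']} to {ctx['network']}")


def register_monitoring(ctx):
    ctx["log"].append(f"monitor {ctx['vm']}")


engine = ProcessEngine()
engine.define("deploy_server", [create_vm, attach_network, register_monitoring])
result = engine.run("deploy_server",
                    {"vm": "web-01", "network": "dmz", "log": []})
```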

The service management module manages cloud services according to their life cycle. It allows administrators to define the deployment model and functional model of a cloud service, to attach automated processes and allocation policies to the deployment model, and to set the application interface and user input parameters for the service. After a user applies for a cloud service, the service management module activates it: it passes the automated process involved in activation to the process engine, which parses and executes it, and passes the resource allocation policy involved to the policy engine, which parses and executes it. The policy engine sends the resource allocation request to the global resource management module, and the result is finally returned to the service management module, completing the activation. After the service is activated, the cloud platform must ensure, through standardized operation and maintenance work, that the SLA of the delivered service meets user requirements.

The resource orchestration module provides a graphical interface in which administrators (tenant administrators or platform administrators) configure, combine, arrange, and connect the cloud resources in the platform to form resource templates that match the topology of a specific information system. Once an administrator has defined a resource template, it can be published as a new composite cloud service. A user's application for the composite cloud service triggers its activation. The resource orchestration module parses the resource template and the user's input parameters to generate concrete requirements for resource types, attributes, and quantities, and passes them to the global resource management module for allocation and deployment. Administrators can modify the built-in templates in the template repository to quickly define templates that meet their own needs, and newly created templates can also be stored in the repository for reuse.
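The parsing step can be illustrated with a small sketch that expands a template plus user input into concrete requirements. The template layout, the `${...}` placeholder syntax, and the field names are assumptions made for this example. The article does not define a template format.

```python
# A hypothetical resource template for a three-tier web system.
template = {
    "name": "three-tier-web",
    "resources": [
        {"type": "vm", "role": "web", "cpu": 2, "count": "${web_count}"},
        {"type": "vm", "role": "db", "cpu": 8, "count": 1},
        {"type": "load_balancer", "role": "frontend", "count": 1},
    ],
}


def expand(template, params):
    """Resolve ${name} placeholders in `count` using the user's input."""
    requirements = []
    for resource in template["resources"]:
        item = dict(resource)   # copy so the stored template stays reusable
        count = item["count"]
        if isinstance(count, str) and count.startswith("${") and count.endswith("}"):
            item["count"] = int(params[count[2:-1]])
        requirements.append(item)
    return requirements


requirements = expand(template, {"web_count": 3})
total_vms = sum(r["count"] for r in requirements if r["type"] == "vm")
```

The resulting list is what would be handed to the global resource management module for allocation.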

The portal module provides a user portal, a tenant management portal, and a platform management portal. The user portal gives end users of cloud services an intuitive, easy-to-use self-service interface in which they can apply for services, view resources, operate resources, and so on. The tenant management portal gives tenant administrators an intuitive, easy-to-use self-service management interface in which they can apply for quotas, create users, define the services visible to the tenant, define resource templates, and formulate allocation policies suitable for the tenant. The platform management portal gives cloud platform administrators an intuitive, easy-to-use interface for the tasks involved in system maintenance and operations.

The operation and maintenance management module is responsible for the system operation and maintenance functions of the cloud platform. It follows the ITIL v3 standard and provides capacity management, performance management, security management, and daily management. Capacity management statistically analyzes the historical operating status of resources and predicts future resource demand using a capacity prediction model. Performance management monitors the operating status of resources by collecting their performance indicators in real time. If an indicator exceeds a given threshold, an alarm event is generated and the corresponding event handling process is invoked. Security management covers server security, storage security, data security, network security, and virtualization security. Daily management covers fault management, problem management, configuration management, system management, monitoring management, and change management. If a function of the operation and maintenance management module already exists in an existing supporting information system, it does not need to be implemented again in the new cloud platform. Instead, it is integrated with the corresponding function of the existing system through the plug-in management module.
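The threshold check in performance management can be sketched as follows. The metric names, threshold values, and the shape of the alarm tuples are assumptions for illustration only.

```python
def check_thresholds(samples, thresholds):
    """Return one (resource, metric, value) alarm per exceeded threshold."""
    alarms = []
    for resource, metrics in samples.items():
        for metric, value in metrics.items():
            limit = thresholds.get(metric)
            if limit is not None and value > limit:
                alarms.append((resource, metric, value))
    return alarms


# Hypothetical latest samples, as percentages.
samples = {
    "host-a": {"cpu_pct": 95, "mem_pct": 40},
    "host-b": {"cpu_pct": 30, "mem_pct": 91},
}
thresholds = {"cpu_pct": 90, "mem_pct": 90}

handled = []
for alarm in check_thresholds(samples, thresholds):
    handled.append(alarm)   # stand-in for invoking the event handling process
```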

The operation management module is responsible for the business operation functions of the cloud platform, including user management, tenant management, quota management, permission management, and metering and billing management. User management creates, groups, and authorizes users. Tenant management manages and configures multi-level tenants and maintains the parent-child relationships between tenants, the relationships between tenants and their users, and the associations between tenants and quotas. Quota management allocates resources to tenants at all levels and ensures that no tenant's resource usage exceeds its quota. Permission management specifies operation and data permissions for users. Metering and billing management measures tenants' resource usage, stores the metering data, and periodically generates metering reports. Metering information includes resource type, amount used, and duration of use. Platform administrators can set rates for each kind of resource, and the system can generate a tenant's list of charges from the metering information and the rates.
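The charge calculation amounts to multiplying each metered quantity by its duration and rate and summing per resource type. The sketch below assumes metering records of the form (resource type, quantity, hours) and rates expressed in integer cents per unit-hour. Both conventions, and the sample figures, are invented for illustration.

```python
def bill(records, rates_cents):
    """Return (charges per resource type, total), all in cents."""
    charges = {}
    for resource_type, quantity, hours in records:
        cost = quantity * hours * rates_cents[resource_type]
        charges[resource_type] = charges.get(resource_type, 0) + cost
    return charges, sum(charges.values())


# Hypothetical metering data for one tenant over one month.
records = [
    ("vcpu", 4, 720),          # 4 vCPUs for 720 hours
    ("storage_gb", 100, 720),  # 100 GB for 720 hours
    ("vcpu", 2, 100),          # a short-lived 2-vCPU instance
]
rates_cents = {"vcpu": 5, "storage_gb": 1}

charges, total = bill(records, rates_cents)
```

Integer cents are used instead of floating-point currency so that totals stay exact.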

The plug-in management module is designed mainly for integration with existing supporting information systems. It provides a plug-in mechanism through which existing supporting information systems supply necessary functions to the cloud platform, and through which other business information systems can add further functions. The cloud platform exchanges data and integrates applications with existing supporting information systems and other business information systems through these plug-ins. For example, the operation and maintenance management module of the cloud platform does not need to implement monitoring itself. Instead, it reuses the relevant functions of the legacy monitoring system through a monitoring plug-in.
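A minimal sketch of such a mechanism: plug-ins register under a capability name, and platform modules look the capability up instead of implementing it themselves. The `PluginManager` and `LegacyMonitoringPlugin` classes are hypothetical, and the legacy monitoring system is replaced by a stub function.

```python
class PluginManager:
    def __init__(self):
        self._plugins = {}   # capability name -> plug-in object

    def register(self, capability, plugin):
        self._plugins[capability] = plugin

    def get(self, capability):
        """Return the plug-in for a capability, or None if none is installed."""
        return self._plugins.get(capability)


class LegacyMonitoringPlugin:
    """Wraps an existing monitoring system behind the platform's interface."""

    def __init__(self, legacy_query):
        self._legacy_query = legacy_query   # callable into the legacy system

    def cpu_usage(self, host):
        return self._legacy_query(host, "cpu")


def fake_legacy_query(host, metric):
    # Stand-in for a call into the legacy monitoring system.
    return {"web-01": {"cpu": 42}}[host][metric]


plugins = PluginManager()
plugins.register("monitoring", LegacyMonitoringPlugin(fake_legacy_query))

monitoring = plugins.get("monitoring")
usage = monitoring.cpu_usage("web-01")
```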

Summary

To adapt to the IT infrastructure characteristics of large group companies, the cloud platform architecture proposed in this article includes a multi-level tenant management system, a two-level resource management system, a resource adapter module, a plug-in management module, and a policy engine. To a certain extent this meets the cloud computing business needs of large group companies, but it also makes the architecture more complex and therefore makes the cloud platform harder to design and develop. Large group companies that use this architecture as a reference need to evaluate the workload and technical risks involved in detail. In addition, the architecture focuses on the IaaS level and does not account for the particular requirements of PaaS, so additional functional modules are needed when designing a PaaS platform architecture.
