PyTorch key points: getting more out of the optimizer!

Hi, I’m Xiaozhuang!

Today we'll talk about optimizers in PyTorch.

The choice of optimizer directly affects both how well and how fast a deep learning model trains. Different optimizers suit different problems: one may converge faster or more stably, while another may reach better results on a specific task. Choosing the right optimizer for the problem at hand is therefore a key part of tuning a model, because it affects both the final performance and the efficiency of training.

PyTorch provides a variety of optimizers for training neural networks and updating model weights, including the common SGD, Adam, and RMSprop. Each has its own characteristics and suitable scenarios. To use an optimizer, you pass it the model parameters and set hyperparameters such as the learning rate and weight decay; you also need to define a loss function.
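To make the two hyperparameters mentioned above concrete, here is a minimal pure-Python sketch of a single gradient step. It assumes the common convention in which weight decay is added to the gradient as `weight_decay * w` before the update; the function name `sgd_update` and the numbers are hypothetical and chosen only for illustration.

```python
def sgd_update(w, grad, lr, weight_decay=0.0):
    """One gradient step on a scalar weight, with optional L2 weight decay."""
    # Weight decay pulls the weight toward zero by adding weight_decay * w
    # to the gradient before the step is taken.
    grad = grad + weight_decay * w
    return w - lr * grad

w = 2.0
grad = 0.5  # hypothetical gradient of the loss at w

plain = sgd_update(w, grad, lr=0.1)
decayed = sgd_update(w, grad, lr=0.1, weight_decay=0.01)
print(plain, decayed)
```

The learning rate scales the whole step, while weight decay adds a small extra pull toward zero, so the decayed weight ends up slightly smaller than the plain one.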

Common optimizers

Let us first list some commonly used optimizers in PyTorch and give a brief introduction to them:

(1) SGD

Stochastic gradient descent (SGD) is a widely used optimization algorithm for minimizing a loss function. At each step it estimates the gradient of the loss with respect to the weights from a randomly selected mini-batch of samples, then updates the weights in the negative direction of that gradient, so the model improves gradually over many iterations. Its main advantage is high computational efficiency, which is why it is so widely used in machine learning and deep learning.

optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)
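The update rule behind SGD can be sketched in plain Python without PyTorch. This is an illustration rather than PyTorch's implementation: the toy data (points on the line y = 3x + 1), the helper names, and the batch size are all assumptions made for the example.

```python
import random

def sgd_step(params, grads, lr):
    """Move each parameter one step against its gradient: w <- w - lr * g."""
    return [w - lr * g for w, g in zip(params, grads)]

def minibatch_grad(w, b, batch):
    """Gradient of the mean squared error of y ~ w*x + b on one mini-batch."""
    n = len(batch)
    gw = sum(2 * (w * x + b - y) * x for x, y in batch) / n
    gb = sum(2 * (w * x + b - y) for x, y in batch) / n
    return [gw, gb]

random.seed(0)
# Hypothetical noise-free toy data drawn from y = 3x + 1
data = [(x / 10, 3 * (x / 10) + 1) for x in range(-10, 11)]

w, b = 0.0, 0.0
for step in range(2000):
    batch = random.sample(data, 4)  # randomly selected mini-batch
    w, b = sgd_step([w, b], minibatch_grad(w, b, batch), lr=0.1)

print(round(w, 2), round(b, 2))
```

Each iteration sees only four samples, yet the parameters still move toward the slope and intercept that generated the data.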

(2) Adam

Adam is an adaptive learning rate optimization algorithm that combines the ideas of AdaGrad and RMSProp. It keeps moving averages of the gradient and of the squared gradient and uses them to scale the step for each parameter individually, unlike plain gradient descent, which applies one learning rate to all parameters. This per-parameter adaptation often speeds up convergence and improves model performance.

optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)
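As a rough sketch of what "a different learning rate for each parameter" means, the following pure-Python function applies the standard Adam update to a single scalar parameter. The defaults mirror the commonly used values (lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8); the function name and the two example gradients are hypothetical.

```python
import math

def adam_step(w, g, state, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a single scalar parameter."""
    state["t"] += 1
    # Moving averages of the gradient and of the squared gradient
    state["m"] = beta1 * state["m"] + (1 - beta1) * g
    state["v"] = beta2 * state["v"] + (1 - beta2) * g * g
    # Bias correction compensates for the zero initialization of m and v
    m_hat = state["m"] / (1 - beta1 ** state["t"])
    v_hat = state["v"] / (1 - beta2 ** state["t"])
    return w - lr * m_hat / (math.sqrt(v_hat) + eps)

# Two parameters whose gradients differ by a factor of 1000
w_small, w_large = 1.0, 1.0
s_small = {"t": 0, "m": 0.0, "v": 0.0}
s_large = {"t": 0, "m": 0.0, "v": 0.0}

step_small = w_small - adam_step(w_small, 0.01, s_small)
step_large = w_large - adam_step(w_large, 10.0, s_large)
print(step_small, step_large)
```

Even though the gradients differ by three orders of magnitude, both parameters move by roughly `lr` on the first step, because each gradient is divided by its own running magnitude.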

(3) Adagrad

Adagrad is an adaptive learning rate optimization algorithm that scales each parameter's learning rate by the accumulated history of its squared gradients. Because that accumulated sum only grows, the effective learning rate keeps shrinking, and training may stall prematurely.

optimizer = torch.optim.Adagrad(model.parameters(), lr=learning_rate)
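The shrinking learning rate is easy to see in a small pure-Python sketch of the Adagrad update for one scalar parameter. The constant gradient of 1.0 and the learning rate of 0.1 are hypothetical values picked to keep the arithmetic readable.

```python
import math

def adagrad_step(w, g, accum, lr=0.1, eps=1e-10):
    """One Adagrad update for a single scalar parameter."""
    accum += g * g  # the sum of squared gradients never decreases
    w -= lr * g / (math.sqrt(accum) + eps)
    return w, accum

w, accum = 0.0, 0.0
effective_lrs = []
for _ in range(5):
    g = 1.0  # hypothetical constant gradient
    w, accum = adagrad_step(w, g, accum)
    # Learning rate actually applied to the next gradient
    effective_lrs.append(0.1 / (math.sqrt(accum) + 1e-10))

print(effective_lrs)
```

With a constant gradient the effective learning rate falls in proportion to one over the square root of the step count, which is the behavior that can stall long training runs.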

(4) RMSProp

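RMSProp is also an adaptive learning rate algorithm. It divides the learning rate by the square root of an exponential moving average of the squared gradients, so the effective learning rate does not shrink indefinitely the way it does in Adagrad.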

optimizer = torch.optim.RMSprop(model.parameters(), lr=learning_rate)
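A minimal pure-Python sketch of the basic RMSProp update (no momentum, not centered) shows how the moving average behaves. The defaults lr=0.01, alpha=0.99, and eps=1e-8 follow commonly used values; the constant gradient is a hypothetical input.

```python
import math

def rmsprop_step(w, g, sq_avg, lr=0.01, alpha=0.99, eps=1e-8):
    """One RMSProp update for a single scalar parameter."""
    # Exponential moving average of the squared gradient
    sq_avg = alpha * sq_avg + (1 - alpha) * g * g
    w = w - lr * g / (math.sqrt(sq_avg) + eps)
    return w, sq_avg

w, sq_avg = 0.0, 0.0
for _ in range(1000):
    g = 1.0  # hypothetical constant gradient
    w, sq_avg = rmsprop_step(w, g, sq_avg)

effective_lr = 0.01 / (math.sqrt(sq_avg) + 1e-8)
print(sq_avg, effective_lr)
```

Because old squared gradients are forgotten exponentially, the average settles near the current squared gradient and the effective learning rate levels off close to `lr` instead of decaying toward zero.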

(5) Adadelta

Adadelta is an adaptive learning rate optimization algorithm closely related to RMSProp (it was originally proposed as an extension of Adagrad). It adjusts the step size dynamically using a moving average of the squared gradients and a moving average of the squared parameter updates.

optimizer = torch.optim.Adadelta(model.parameters(), lr=learning_rate)
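The two moving averages can be sketched in pure Python as follows. This is an illustration of the standard Adadelta rule for one scalar parameter, with the usual defaults lr=1.0, rho=0.9, and eps=1e-6; the objective f(w) = w**2 and the starting point w = 5 are hypothetical.

```python
import math

def adadelta_step(w, g, sq_avg, acc_delta, lr=1.0, rho=0.9, eps=1e-6):
    """One Adadelta update for a single scalar parameter."""
    # Moving average of squared gradients
    sq_avg = rho * sq_avg + (1 - rho) * g * g
    # Step = (RMS of past updates / RMS of gradients) * gradient
    delta = math.sqrt(acc_delta + eps) / math.sqrt(sq_avg + eps) * g
    # Moving average of squared updates
    acc_delta = rho * acc_delta + (1 - rho) * delta * delta
    return w - lr * delta, sq_avg, acc_delta

# Minimize the hypothetical objective f(w) = w**2, whose gradient is 2*w
w, sq_avg, acc_delta = 5.0, 0.0, 0.0
w, sq_avg, acc_delta = adadelta_step(w, 2 * w, sq_avg, acc_delta)
first_step = 5.0 - w

for _ in range(99):
    w, sq_avg, acc_delta = adadelta_step(w, 2 * w, sq_avg, acc_delta)

print(first_step, w)
```

The step size comes from the ratio of the two running averages, so the first steps are tiny (on the order of the square root of `eps`) and grow as the history of updates builds up.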

A complete example

Here, let's walk through how to use PyTorch to train a simple convolutional neural network (CNN) for handwritten digit recognition.

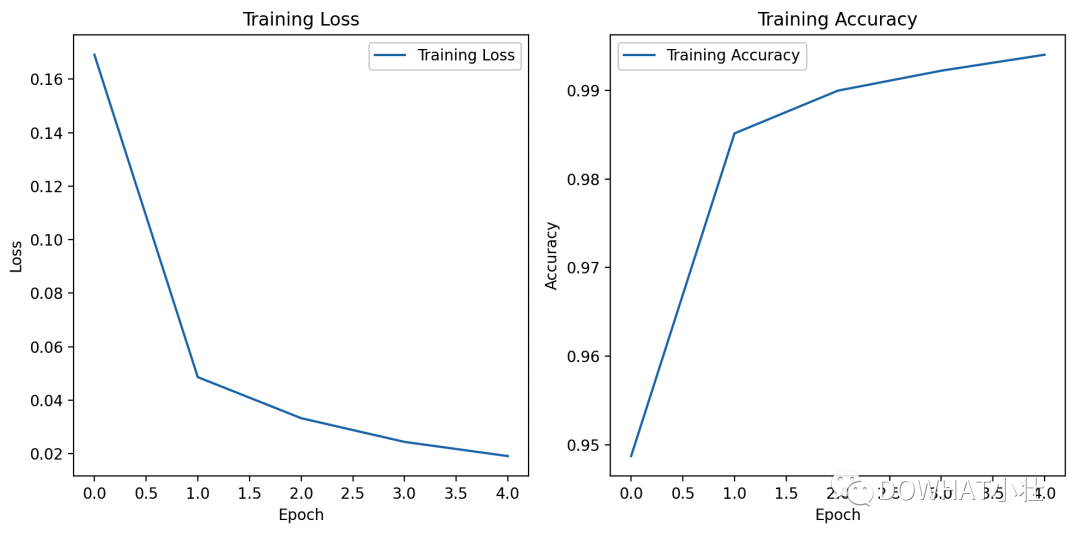

This example uses the MNIST dataset and uses the Matplotlib library to plot the loss curve and the accuracy curve.

import torch
import torch.nn as nn
import torch.optim as optim
from torchvision import datasets, transforms
from torch.utils.data import DataLoader
import matplotlib.pyplot as plt

# Set the random seed
torch.manual_seed(42)

# Define the data transforms
transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.5,), (0.5,))])

# Download and load the MNIST dataset
train_dataset = datasets.MNIST(root='./data', train=True, download=True, transform=transform)
test_dataset = datasets.MNIST(root='./data', train=False, download=True, transform=transform)
train_loader = DataLoader(train_dataset, batch_size=64, shuffle=True)
test_loader = DataLoader(test_dataset, batch_size=1000, shuffle=False)

# Define a simple convolutional neural network
class CNN(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 32, kernel_size=3, stride=1, padding=1)
        self.relu = nn.ReLU()
        self.pool = nn.MaxPool2d(kernel_size=2, stride=2)
        self.conv2 = nn.Conv2d(32, 64, kernel_size=3, stride=1, padding=1)
        self.fc1 = nn.Linear(64 * 7 * 7, 128)
        self.fc2 = nn.Linear(128, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = self.relu(x)
        x = self.pool(x)  # 28x28 -> 14x14
        x = self.conv2(x)
        x = self.relu(x)
        x = self.pool(x)  # 14x14 -> 7x7
        x = x.view(-1, 64 * 7 * 7)
        x = self.fc1(x)
        x = self.relu(x)
        x = self.fc2(x)
        return x

# Create the model, loss function, and optimizer
model = CNN()
criterion = nn.CrossEntropyLoss()
optimizer = optim.Adam(model.parameters(), lr=0.001)

# Train the model
num_epochs = 5
train_losses = []
train_accuracies = []

for epoch in range(num_epochs):
    model.train()
    total_loss = 0.0
    correct = 0
    total = 0
    for inputs, labels in train_loader:
        optimizer.zero_grad()
        outputs = model(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()

        total_loss += loss.item()
        _, predicted = torch.max(outputs, 1)
        total += labels.size(0)
        correct += (predicted == labels).sum().item()

    accuracy = correct / total
    train_losses.append(total_loss / len(train_loader))
    train_accuracies.append(accuracy)
    print(f"Epoch {epoch+1}/{num_epochs}, Loss: {train_losses[-1]:.4f}, Accuracy: {accuracy:.4f}")

# Plot the loss curve and the accuracy curve
plt.figure(figsize=(10, 5))
plt.subplot(1, 2, 1)
plt.plot(train_losses, label='Training Loss')
plt.title('Training Loss')
plt.xlabel('Epoch')
plt.ylabel('Loss')
plt.legend()

plt.subplot(1, 2, 2)
plt.plot(train_accuracies, label='Training Accuracy')
plt.title('Training Accuracy')
plt.xlabel('Epoch')
plt.ylabel('Accuracy')
plt.legend()

plt.tight_layout()
plt.show()

# Evaluate the model on the test set
model.eval()
correct = 0
total = 0
with torch.no_grad():
    for inputs, labels in test_loader:
        outputs = model(inputs)
        _, predicted = torch.max(outputs, 1)
        total += labels.size(0)
        correct += (predicted == labels).sum().item()

accuracy = correct / total
print(f"Accuracy on test set: {accuracy * 100:.2f}%")

In the above code, we define a simple convolutional neural network (CNN) and train it using the cross-entropy loss and the Adam optimizer.

During training, we record the loss and accuracy of each epoch, then use the Matplotlib library to plot the loss curve and the accuracy curve. Finally, the model is evaluated on the test set.

I’m Xiaozhuang, see you next time!

The above is the detailed content of Improve Pytorch key points and improve the optimizer!. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

iFlytek: Huawei's Ascend 910B's capabilities are basically comparable to Nvidia's A100, and they are working together to create a new base for my country's general artificial intelligence

Oct 22, 2023 pm 06:13 PM

iFlytek: Huawei's Ascend 910B's capabilities are basically comparable to Nvidia's A100, and they are working together to create a new base for my country's general artificial intelligence

Oct 22, 2023 pm 06:13 PM

This site reported on October 22 that in the third quarter of this year, iFlytek achieved a net profit of 25.79 million yuan, a year-on-year decrease of 81.86%; the net profit in the first three quarters was 99.36 million yuan, a year-on-year decrease of 76.36%. Jiang Tao, Vice President of iFlytek, revealed at the Q3 performance briefing that iFlytek has launched a special research project with Huawei Shengteng in early 2023, and jointly developed a high-performance operator library with Huawei to jointly create a new base for China's general artificial intelligence, allowing domestic large-scale models to be used. The architecture is based on independently innovative software and hardware. He pointed out that the current capabilities of Huawei’s Ascend 910B are basically comparable to Nvidia’s A100. At the upcoming iFlytek 1024 Global Developer Festival, iFlytek and Huawei will make further joint announcements on the artificial intelligence computing power base. He also mentioned,

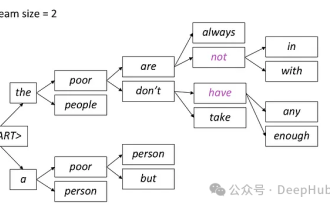

Introduction to five sampling methods in natural language generation tasks and Pytorch code implementation

Feb 20, 2024 am 08:50 AM

Introduction to five sampling methods in natural language generation tasks and Pytorch code implementation

Feb 20, 2024 am 08:50 AM

In natural language generation tasks, sampling method is a technique to obtain text output from a generative model. This article will discuss 5 common methods and implement them using PyTorch. 1. GreedyDecoding In greedy decoding, the generative model predicts the words of the output sequence based on the input sequence time step by time. At each time step, the model calculates the conditional probability distribution of each word, and then selects the word with the highest conditional probability as the output of the current time step. This word becomes the input to the next time step, and the generation process continues until some termination condition is met, such as a sequence of a specified length or a special end marker. The characteristic of GreedyDecoding is that each time the current conditional probability is the best

The perfect combination of PyCharm and PyTorch: detailed installation and configuration steps

Feb 21, 2024 pm 12:00 PM

The perfect combination of PyCharm and PyTorch: detailed installation and configuration steps

Feb 21, 2024 pm 12:00 PM

PyCharm is a powerful integrated development environment (IDE), and PyTorch is a popular open source framework in the field of deep learning. In the field of machine learning and deep learning, using PyCharm and PyTorch for development can greatly improve development efficiency and code quality. This article will introduce in detail how to install and configure PyTorch in PyCharm, and attach specific code examples to help readers better utilize the powerful functions of these two. Step 1: Install PyCharm and Python

Implementing noise removal diffusion model using PyTorch

Jan 14, 2024 pm 10:33 PM

Implementing noise removal diffusion model using PyTorch

Jan 14, 2024 pm 10:33 PM

Before we understand the working principle of the Denoising Diffusion Probabilistic Model (DDPM) in detail, let us first understand some of the development of generative artificial intelligence, which is also one of the basic research of DDPM. VAEVAE uses an encoder, a probabilistic latent space, and a decoder. During training, the encoder predicts the mean and variance of each image and samples these values from a Gaussian distribution. The result of the sampling is passed to the decoder, which converts the input image into a form similar to the output image. KL divergence is used to calculate the loss. A significant advantage of VAE is its ability to generate diverse images. In the sampling stage, one can directly sample from the Gaussian distribution and generate new images through the decoder. GAN has made great progress in variational autoencoders (VAEs) in just one year.

Tutorial on installing PyCharm with PyTorch

Feb 24, 2024 am 10:09 AM

Tutorial on installing PyCharm with PyTorch

Feb 24, 2024 am 10:09 AM

As a powerful deep learning framework, PyTorch is widely used in various machine learning projects. As a powerful Python integrated development environment, PyCharm can also provide good support when implementing deep learning tasks. This article will introduce in detail how to install PyTorch in PyCharm and provide specific code examples to help readers quickly get started using PyTorch for deep learning tasks. Step 1: Install PyCharm First, we need to make sure we have

Deep Learning with PHP and PyTorch

Jun 19, 2023 pm 02:43 PM

Deep Learning with PHP and PyTorch

Jun 19, 2023 pm 02:43 PM

Deep learning is an important branch in the field of artificial intelligence and has received more and more attention in recent years. In order to be able to conduct deep learning research and applications, it is often necessary to use some deep learning frameworks to help achieve it. In this article, we will introduce how to use PHP and PyTorch for deep learning. 1. What is PyTorch? PyTorch is an open source machine learning framework developed by Facebook. It can help us quickly create and train deep learning models. PyTorc

so fast! Recognize video speech into text in just a few minutes with less than 10 lines of code

Feb 27, 2024 pm 01:55 PM

so fast! Recognize video speech into text in just a few minutes with less than 10 lines of code

Feb 27, 2024 pm 01:55 PM

Hello everyone, I am Kite. Two years ago, the need to convert audio and video files into text content was difficult to achieve, but now it can be easily solved in just a few minutes. It is said that in order to obtain training data, some companies have fully crawled videos on short video platforms such as Douyin and Kuaishou, and then extracted the audio from the videos and converted them into text form to be used as training corpus for big data models. If you need to convert a video or audio file to text, you can try this open source solution available today. For example, you can search for the specific time points when dialogues in film and television programs appear. Without further ado, let’s get to the point. Whisper is OpenAI’s open source Whisper. Of course it is written in Python. It only requires a few simple installation packages.

How to install pytorch in pycharm

Dec 08, 2023 pm 03:05 PM

How to install pytorch in pycharm

Dec 08, 2023 pm 03:05 PM

Installation steps: 1. Open PyCharm and create a new Python project; 2. In the bottom status bar of PyCharm, click the "Terminal" icon to open the terminal window; 3. In the terminal window, use the pip command to install PyTorch, according to the system and requirements, you can choose different installation methods; 4. After the installation is completed, you can write code in PyCharm and import the PyTorch library to use it.