Introduction

The Apache Hadoop software library is a framework that allows distributed processing of large data sets on a computer cluster using a simple programming model. Apache™ Hadoop® is open source software for reliable, scalable, distributed computing.

The project includes the following modules: Hadoop Common, the Hadoop Distributed File System (HDFS), Hadoop YARN and Hadoop MapReduce.

This article walks you step by step through installing Hadoop on CentOS and configuring a single-node Hadoop cluster.

Install Java

Before installing Hadoop, make sure Java is installed on your system. Use this command to check the installed Java version:

```
$ java -version
java version "1.7.0_75"
Java(TM) SE Runtime Environment (build 1.7.0_75-b13)
Java HotSpot(TM) 64-Bit Server VM (build 24.75-b04, mixed mode)
```
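If you want to check the Java version from a script rather than by eye, the output above can be parsed. The sketch below is a minimal example, assuming bash and the usual `java version "..."` (or `openjdk version "..."`) first line; the function names `java_version_from_output` and `java_major_version` are hypothetical, not part of any tool. Note that `java -version` prints to standard error, hence the `2>&1` in the usage comment.

```shell
#!/usr/bin/env bash
# Print the quoted version string (e.g. 1.7.0_75) from `java -version` output
# read on stdin. Reading stdin keeps the parser testable without a JDK.
java_version_from_output() {
  sed -n 's/^.*version "\([^"]*\)".*$/\1/p' | head -n 1
}

# Reduce a version string to its major release number.
# Legacy scheme: 1.7.0_75 -> 7; newer scheme: 11.0.2 -> 11.
java_major_version() {
  local v="$1"
  case "$v" in
    1.*) v="${v#1.}" ;;  # drop the leading "1." used up to Java 8
  esac
  echo "${v%%[._]*}"     # keep everything before the first "." or "_"
}

# Usage on a real system:
#   java -version 2>&1 | java_version_from_output
#   java_major_version "$(java -version 2>&1 | java_version_from_output)"
```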

To install or update Java, please refer to the step-by-step instructions below.

The first step is to download the JDK from Oracle's official website. This example uses JDK 7u79:

```shell
cd /opt/
wget --no-cookies --no-check-certificate --header "Cookie: gpw_e24=http%3A%2F%2Fwww.oracle.com%2F; oraclelicense=accept-securebackup-cookie" "http://download.oracle.com/otn-pub/java/jdk/7u79-b15/jdk-7u79-linux-x64.tar.gz"
tar xzf jdk-7u79-linux-x64.tar.gz
```

Next, register the newly installed Java version with the alternatives system and select it as the default. Use the following commands:

```
$ cd /opt/jdk1.7.0_79/
$ alternatives --install /usr/bin/java java /opt/jdk1.7.0_79/bin/java 2
$ alternatives --config java

There are 3 programs which provide 'java'.

  Selection    Command
-----------------------------------------------
*  1           /opt/jdk1.7.0_60/bin/java
 + 2           /opt/jdk1.7.0_72/bin/java
   3           /opt/jdk1.7.0_79/bin/java

Enter to keep the current selection[+], or type selection number: 3 [Press Enter]
```

You may also need to use the alternatives command to set the paths for the javac and jar commands:

```shell
alternatives --install /usr/bin/jar jar /opt/jdk1.7.0_79/bin/jar 2
alternatives --install /usr/bin/javac javac /opt/jdk1.7.0_79/bin/javac 2
alternatives --set jar /opt/jdk1.7.0_79/bin/jar
alternatives --set javac /opt/jdk1.7.0_79/bin/javac
```
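The alternatives --install calls above differ only in the tool name, so they can also be generated in a loop. This is a minimal sketch, assuming the JDK in /opt/jdk1.7.0_79 and priority 2 as in this article; instead of the interactive --config step it uses alternatives --set to select the new JDK directly. The `register_jdk_alternatives` and `run` helpers and the `DRY_RUN` switch are hypothetical, added so you can preview the commands before running them as root.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Register java, jar and javac from one JDK directory with alternatives and
# select them as the default. Needs root unless DRY_RUN=1.
register_jdk_alternatives() {
  local jdk_dir="$1" priority="${2:-2}" tool
  for tool in java jar javac; do
    run alternatives --install "/usr/bin/$tool" "$tool" "$jdk_dir/bin/$tool" "$priority" || return 1
    run alternatives --set "$tool" "$jdk_dir/bin/$tool" || return 1
  done
}

# Usage: preview first, then run for real as root.
#   DRY_RUN=1 register_jdk_alternatives /opt/jdk1.7.0_79 2
#   register_jdk_alternatives /opt/jdk1.7.0_79 2
```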

The next step is to configure the Java environment variables. The export commands below only affect the current shell session; to keep the settings after you log out, also add them to a startup file such as ~/.bashrc.

Set the JAVA_HOME variable:

```shell
export JAVA_HOME=/opt/jdk1.7.0_79
```

Set the JRE_HOME variable:

```shell
export JRE_HOME=/opt/jdk1.7.0_79/jre
```

Set the PATH variable:

```shell
export PATH=$PATH:/opt/jdk1.7.0_79/bin:/opt/jdk1.7.0_79/jre/bin
```
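Because export only lasts for the current shell, a common approach on CentOS is to place these lines in a script under /etc/profile.d/, which login shells source through /etc/profile. The sketch below assumes the file name /etc/profile.d/java.sh (an arbitrary choice) and requires root for that default path; `write_java_profile` is a hypothetical helper, and you can pass a different target path to try it out first.

```shell
#!/usr/bin/env bash
# Write the JDK variables to a profile script so they survive logout.
# Default target /etc/profile.d/java.sh is an assumption and needs root.
write_java_profile() {
  local jdk_dir="$1" target="${2:-/etc/profile.d/java.sh}"
  # $jdk_dir is expanded now; \$PATH is escaped so it is written literally
  # and expanded when the script is sourced at login.
  cat > "$target" <<EOF
export JAVA_HOME=$jdk_dir
export JRE_HOME=$jdk_dir/jre
export PATH=\$PATH:$jdk_dir/bin:$jdk_dir/jre/bin
EOF
}

# Usage (as root):
#   write_java_profile /opt/jdk1.7.0_79
```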

Once the Java environment is set up, you can start installing Apache Hadoop.

First, create a dedicated user account for the Hadoop installation:

```shell
useradd hadoop
passwd hadoop
```

Next, configure an SSH key for the hadoop user. The Hadoop start scripts use SSH to launch the daemons, even on a single node, so use the following commands to enable password-less SSH login:

```shell
su - hadoop
ssh-keygen -t rsa
cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
chmod 0600 ~/.ssh/authorized_keys
exit
```
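The ssh-keygen step above prompts for a file name and passphrase. A non-interactive variant, run as the hadoop user, might look like the following sketch; `setup_passwordless_ssh` is a hypothetical helper, it assumes an RSA key at ~/.ssh/id_rsa with an empty passphrase as in the commands above, and it skips steps that were already done so it can be re-run safely. Afterwards, ssh localhost should log in without asking for a password (the first connection still asks you to confirm the host key).

```shell
#!/usr/bin/env bash
# Non-interactive key setup: create an RSA key with an empty passphrase
# (if none exists) and authorize it for logins to the same account.
# HOME_DIR defaults to $HOME; run it as the hadoop user.
setup_passwordless_ssh() {
  local home_dir="${1:-$HOME}"
  local key="$home_dir/.ssh/id_rsa"
  local auth="$home_dir/.ssh/authorized_keys"
  mkdir -p "$home_dir/.ssh"
  chmod 0700 "$home_dir/.ssh"
  if [ ! -f "$key" ]; then
    # -N '' sets an empty passphrase; -f skips the file-name prompt.
    ssh-keygen -q -t rsa -N '' -f "$key" || return 1
  fi
  touch "$auth"
  # Append the public key only if it is not already authorized.
  if ! grep -qxF "$(cat "$key.pub")" "$auth"; then
    cat "$key.pub" >> "$auth"
  fi
  chmod 0600 "$auth"
}

# Usage (as the hadoop user):
#   setup_passwordless_ssh
```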

Now switch back to the hadoop user (su - hadoop) and download Hadoop from the official website, hadoop.apache.org. This example uses version 2.6.0:

```shell
cd ~
wget http://apache.claz.org/hadoop/common/hadoop-2.6.0/hadoop-2.6.0.tar.gz
tar xzf hadoop-2.6.0.tar.gz
mv hadoop-2.6.0 hadoop
```
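Before relying on the download, you can compare the tarball against the checksum the Apache project publishes for the release. This is a minimal sketch: `verify_sha256` and `normalize_digest` are hypothetical helpers, they assume sha256sum from GNU coreutils (present on CentOS), and they normalize case and whitespace because published digests are sometimes written in upper case or split into groups. EXPECTED_DIGEST in the usage line is a placeholder for the published value.

```shell
#!/usr/bin/env bash
# Lower-case a hex digest and drop all whitespace, so "AB12 CD34" == "ab12cd34".
normalize_digest() {
  printf '%s' "$1" | tr -d '[:space:]' | tr 'A-F' 'a-f'
}

# Succeed (status 0) only if FILE exists and its SHA-256 digest equals EXPECTED.
verify_sha256() {
  local file="$1" expected="$2" actual
  [ -f "$file" ] || return 1
  actual="$(sha256sum "$file" | awk '{print $1}')"
  [ "$(normalize_digest "$actual")" = "$(normalize_digest "$expected")" ]
}

# Usage (EXPECTED_DIGEST is a placeholder for the published SHA-256 value):
#   verify_sha256 ~/hadoop-2.6.0.tar.gz "EXPECTED_DIGEST" && echo "checksum OK"
```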

Next, set the environment variables used by Hadoop.

Edit ~/.bashrc and add the following lines at the end of the file:

```shell
export HADOOP_HOME=/home/hadoop/hadoop
export HADOOP_INSTALL=$HADOOP_HOME
export HADOOP_MAPRED_HOME=$HADOOP_HOME
export HADOOP_COMMON_HOME=$HADOOP_HOME
export HADOOP_HDFS_HOME=$HADOOP_HOME
export YARN_HOME=$HADOOP_HOME
export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin
```
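Editing ~/.bashrc by hand works, but repeating the step duplicates the block. The sketch below, assuming bash and the same values as above, appends the variables only if a marker comment is not already present; `append_hadoop_env` and the marker text are hypothetical.

```shell
#!/usr/bin/env bash
# Append the Hadoop variables to a startup file (default ~/.bashrc) once.
# A marker line makes repeated runs a no-op instead of duplicating the block.
append_hadoop_env() {
  local rc="${1:-$HOME/.bashrc}" hadoop_home="${2:-/home/hadoop/hadoop}"
  local marker='# hadoop environment (added by append_hadoop_env)'
  touch "$rc"
  if grep -qxF "$marker" "$rc"; then
    return 0  # block already present
  fi
  # $hadoop_home is expanded now; escaped \$ references are written literally
  # and expanded each time the file is sourced.
  cat >> "$rc" <<EOF
$marker
export HADOOP_HOME=$hadoop_home
export HADOOP_INSTALL=\$HADOOP_HOME
export HADOOP_MAPRED_HOME=\$HADOOP_HOME
export HADOOP_COMMON_HOME=\$HADOOP_HOME
export HADOOP_HDFS_HOME=\$HADOOP_HOME
export YARN_HOME=\$HADOOP_HOME
export HADOOP_COMMON_LIB_NATIVE_DIR=\$HADOOP_HOME/lib/native
export PATH=\$PATH:\$HADOOP_HOME/sbin:\$HADOOP_HOME/bin
EOF
}

# Usage (as the hadoop user):
#   append_hadoop_env && source ~/.bashrc
```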

Apply the changes to the current shell session:

```shell
source ~/.bashrc
```

Edit $HADOOP_HOME/etc/hadoop/hadoop-env.sh and set the JAVA_HOME environment variable.

```shell
export JAVA_HOME=/opt/jdk1.7.0_79/
```
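To make this change without opening an editor, you can rewrite the JAVA_HOME line in place. A minimal sketch, assuming hadoop-env.sh contains a line starting with export JAVA_HOME= (the stock 2.x file typically does) and appending one otherwise; `set_hadoop_java_home` is a hypothetical helper, and it writes through a temporary file rather than relying on GNU-specific sed -i.

```shell
#!/usr/bin/env bash
# Set JAVA_HOME in hadoop-env.sh: rewrite an existing "export JAVA_HOME=" line,
# or append one if the file has none.
set_hadoop_java_home() {
  local env_file="$1" java_home="$2"
  if grep -q '^export JAVA_HOME=' "$env_file"; then
    # "|" is the sed delimiter, so the path may contain "/" but not "|" or "&".
    sed "s|^export JAVA_HOME=.*|export JAVA_HOME=$java_home|" "$env_file" > "$env_file.tmp" &&
      mv "$env_file.tmp" "$env_file"
  else
    echo "export JAVA_HOME=$java_home" >> "$env_file"
  fi
}

# Usage:
#   set_hadoop_java_home "$HADOOP_HOME/etc/hadoop/hadoop-env.sh" /opt/jdk1.7.0_79/
```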

Next, configure a basic single-node Hadoop cluster.

The configuration files are in $HADOOP_HOME/etc/hadoop. Change to that directory and make the following changes:

```shell
cd /home/hadoop/hadoop/etc/hadoop
```

Let’s edit core-site.xml.

```xml
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
```

Then edit hdfs-site.xml:

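```xml
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.name.dir</name>
    <value>file:///home/hadoop/hadoopdata/hdfs/namenode</value>
  </property>
  <property>
    <name>dfs.data.dir</name>
    <value>file:///home/hadoop/hadoopdata/hdfs/datanode</value>
  </property>
</configuration>
```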

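Next, edit mapred-site.xml. Hadoop 2.x ships this file only as mapred-site.xml.template, so if mapred-site.xml does not exist yet, create it first with cp mapred-site.xml.template mapred-site.xml:

```xml
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
```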

Finally, edit yarn-site.xml:

```xml
<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>
```
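If you set up several machines or rebuild this one often, the four files can be generated instead of edited by hand. This sketch overwrites each file completely (including its license header comment) with the same properties shown above; `write_site_xml` and `write_single_node_config` are hypothetical helpers, the default directories are this article's paths, and values are not XML-escaped, so they must not contain characters such as & or <.

```shell
#!/usr/bin/env bash
# Write a Hadoop *-site.xml file from NAME VALUE pairs, replacing the file.
# Usage: write_site_xml FILE NAME VALUE [NAME VALUE ...]
# Values are written verbatim (no XML escaping); an unpaired trailing NAME is ignored.
write_site_xml() {
  local file="$1"
  shift
  {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    while [ "$#" -ge 2 ]; do
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
      shift 2
    done
    echo '</configuration>'
  } > "$file"
}

# Hypothetical driver that reproduces the four files from this article.
write_single_node_config() {
  local conf_dir="${1:-/home/hadoop/hadoop/etc/hadoop}"
  local data_dir="${2:-/home/hadoop/hadoopdata/hdfs}"
  write_site_xml "$conf_dir/core-site.xml" fs.default.name hdfs://localhost:9000
  write_site_xml "$conf_dir/hdfs-site.xml" \
    dfs.replication 1 \
    dfs.name.dir "file://$data_dir/namenode" \
    dfs.data.dir "file://$data_dir/datanode"
  write_site_xml "$conf_dir/mapred-site.xml" mapreduce.framework.name yarn
  write_site_xml "$conf_dir/yarn-site.xml" yarn.nodemanager.aux-services mapreduce_shuffle
}

# Usage (as the hadoop user):
#   write_single_node_config "$HADOOP_HOME/etc/hadoop"
```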

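Now format the NameNode using the following command. Run it only once, when you first set up the cluster: formatting erases any existing HDFS metadata.

```shell
hdfs namenode -format
```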
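Since reformatting wipes the HDFS metadata, it can help to guard the command in setup scripts. The sketch below assumes that a formatted NameNode storage directory contains a current/VERSION file, and that the directory passed in is the dfs.name.dir path configured above without the file:// prefix; `format_namenode_once` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Format the NameNode only if its storage directory has not been formatted yet.
# Assumes a formatted directory contains current/VERSION.
format_namenode_once() {
  local name_dir="${1:-/home/hadoop/hadoopdata/hdfs/namenode}"
  if [ -f "$name_dir/current/VERSION" ]; then
    echo "NameNode storage in $name_dir is already formatted; skipping." >&2
    return 0
  fi
  hdfs namenode -format
}

# Usage (as the hadoop user):
#   format_namenode_once /home/hadoop/hadoopdata/hdfs/namenode
```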

To start all Hadoop services, use the following commands:

```shell
cd /home/hadoop/hadoop/sbin/
start-dfs.sh
start-yarn.sh
```

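To check whether all services started correctly, use the jps command:

```shell
jps
```

You should see output similar to this (process IDs will differ). With YARN, the ResourceManager and NodeManager daemons take the place of the JobTracker and TaskTracker used in Hadoop 1.x:

```
26049 SecondaryNameNode
25929 DataNode
26399 Jps
26129 ResourceManager
26249 NodeManager
25807 NameNode
```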
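To check the daemons from a script instead of reading the jps output by eye, you can look for each expected process name. This is a minimal sketch, assuming a Hadoop 2.x single-node setup started with start-dfs.sh and start-yarn.sh; `check_daemons` is a hypothetical helper that prints each missing daemon and returns a non-zero status if any are absent.

```shell
#!/usr/bin/env bash
# Report which expected Hadoop 2.x daemons are missing from `jps` output.
# Prints "missing: <name>" per absent daemon; returns 1 if any are missing.
check_daemons() {
  local jps_output="$1" missing=0 d
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # jps lines are "<pid> <name>"; compare the whole second field so that
    # NameNode does not match SecondaryNameNode.
    if ! printf '%s\n' "$jps_output" | awk -v d="$d" '$2 == d { found = 1 } END { exit !found }'; then
      echo "missing: $d"
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
#   check_daemons "$(jps)" && echo "all daemons running"
```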

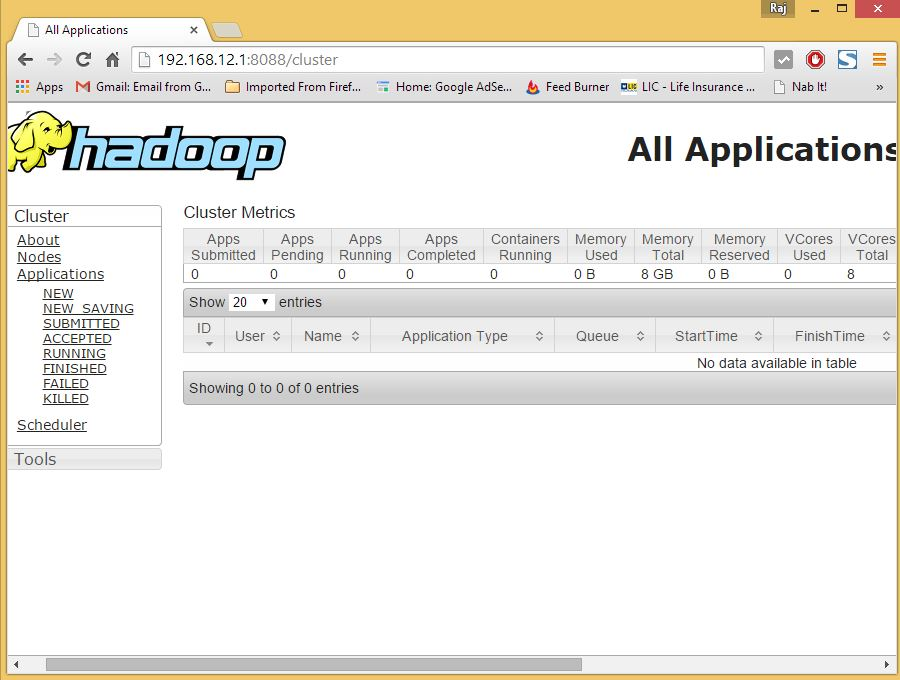

You can now open the YARN ResourceManager web UI in your browser at http://your-ip-address:8088/. In Hadoop 2.x, the HDFS NameNode web UI is available at http://your-ip-address:50070/.

