The NPC with high emotional intelligence is here. As soon as it reaches out its hand, it is ready to cooperate with the next move.

In virtual reality, augmented reality, games and human-computer interaction, virtual characters often need to interact with players on the other side of the screen. This interaction happens in real time, so the virtual character must adjust dynamically to the operator's movements. Some interactions also involve objects, such as moving a chair together with a virtual character, which requires close attention to the precise movements of the operator's hands. Intelligent, interactive virtual characters could greatly enhance the social experience between human players and virtual characters and open up new forms of entertainment.

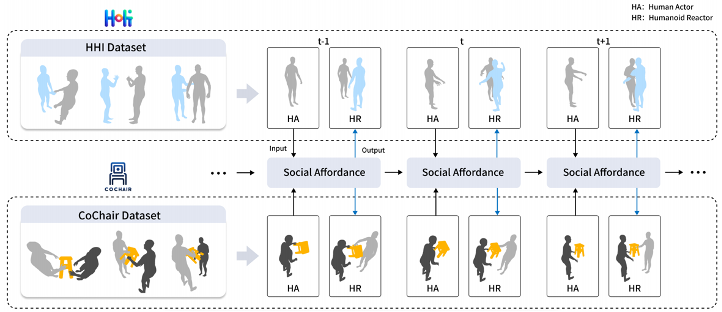

In this study, the authors focus on interaction tasks between humans and virtual humans, especially those involving objects, and propose a new task called online whole-body action response synthesis. In this task, the virtual human's reactions are generated from the human's movements. Previous research focused mainly on human-to-human interaction, did not consider objects, and generated body reactions without hand movements. In addition, previous work did not treat the task as online inference, whereas in a real setting the virtual human must predict its next step from the situation as it unfolds.

To support the new task, the authors first constructed two datasets, HHI and CoChair, and proposed a unified method. Specifically, they first construct a social affordance representation: they select a social affordance carrier, learn a local coordinate system for the carrier with an SE(3)-equivariant neural network, and then normalize the social affordance. In addition, the authors propose a social affordance prediction scheme so that the virtual human can make decisions based on predictions.

The results show that the method generates high-quality reaction motions on the HHI and CoChair datasets and reaches a real-time inference speed of 25 frames per second on an A100. The authors also demonstrate the method's effectiveness on the existing human interaction datasets InterHuman and CHI3D.

Paper: https://arxiv.org/pdf/2312.08983.pdf

Project homepage: https://yunzeliu.github.io/iHuman/

Dataset construction

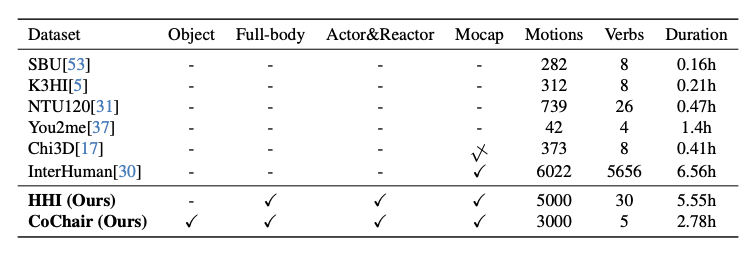

In this article, the authors construct two datasets to support the online whole-body action response synthesis task: HHI, a dataset of two-person interaction, and CoChair, a dataset of two-person interaction with objects. Both give researchers resources for further exploring full-body motion synthesis.

HHI is a large-scale whole-body action response dataset. It contains 30 interaction categories, 10 pairs of human skeleton types and a total of 5000 interaction sequences.

The HHI dataset has three characteristics. First, it includes multi-person full-body interaction, covering both body and hand interaction. The authors argue that hand interaction cannot be ignored in multi-person interaction: during handshakes, hugs and handovers, rich information is conveyed through the hands. Second, the dataset clearly distinguishes the initiator of a behavior from the responder. For example, in handshakes, pointing out a direction, greetings and handovers, the initiator of the action can be identified, which helps researchers better define and evaluate the problem. Third, the dataset contains more diverse interactions and reactions: it not only covers 30 types of two-person interaction but also provides multiple reasonable reactions to the same initiating action. For example, when someone greets you, you can respond with a nod, with one hand, or with both hands. This is a natural property of interaction, but it has rarely been addressed in previous datasets.

CoChair is a large-scale multi-person and object interaction dataset. It includes 8 different chairs, 5 interaction modes and 10 pairs of different skeletons, for a total of 3000 sequences. CoChair has two important characteristics. First, information is asymmetric during collaboration: every action has an executor/initiator (who knows the destination of the carry) and a responder (who does not). Second, it covers varied carrying modes: one-hand fixed carry, one-hand mobile carry, two-hand fixed carry, two-hand mobile carry and two-hand flexible carry.

Method

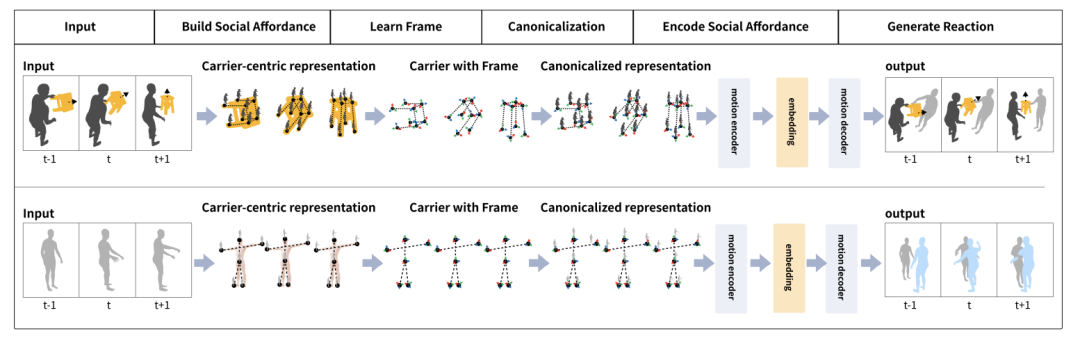

A social affordance carrier is the object or person that encodes social affordance information. When a human interacts with a virtual human, the human typically comes into contact with the virtual human directly or indirectly. When an object is involved, the human typically touches the object.

To model direct or potential contact in an interaction, a carrier must be selected that can simultaneously represent the human, the carrier itself, and the relationship between them. In this study, the carrier refers to the object or virtual human template that the human may come into contact with.

Based on this, the authors define a carrier-centered representation of social affordance. Specifically, given a carrier, human behavior is encoded to obtain a dense human-carrier joint representation. From this, the authors build a social affordance representation that contains the human's actions, the dynamic geometric features of the carrier, and the human-carrier relationship at each time step.

It should be noted that the social affordance representation refers to the data flow from the starting moment to a specific time step, rather than the representation of a single frame. The advantage of this method is that it closely associates local areas of the carrier with human behavioral movements, forming a representation that is convenient for network learning.
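As a rough illustration of a representation that spans frames 0 through t rather than a single frame, the sketch below stores, for every frame, the offset from each carrier point to each human joint. This is only a minimal sketch under stated assumptions: the offset encoding, the names `offsets_at_frame` and `affordance_stream`, and the toy coordinates are all hypothetical and are not taken from the paper.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Frame = List[List[Vec3]]  # indexed as [carrier point][human joint] -> offset


def offsets_at_frame(carrier_points: List[Vec3], human_joints: List[Vec3]) -> Frame:
    """Offset from every carrier point to every human joint at one time step."""
    return [
        [(j[0] - p[0], j[1] - p[1], j[2] - p[2]) for j in human_joints]
        for p in carrier_points
    ]


def affordance_stream(
    carrier_seq: List[List[Vec3]], human_seq: List[List[Vec3]], t: int
) -> List[Frame]:
    """Stack per-frame offsets from frame 0 up to and including frame t."""
    return [offsets_at_frame(carrier_seq[k], human_seq[k]) for k in range(t + 1)]


# Toy data (made up): 2 carrier points, 1 human joint (a wrist), 3 frames.
carrier_seq = [[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]] * 3  # carrier stays still
human_seq = [[(2.0, 0.0, 0.0)], [(1.5, 0.0, 0.0)], [(1.0, 0.0, 0.0)]]  # wrist approaches

stream = affordance_stream(carrier_seq, human_seq, t=1)
print(len(stream), "frames,", len(stream[0]), "carrier points,", len(stream[0][0]), "joint")
print("offset seen from carrier point 1 at frame 1:", stream[1][1][0])
```

Each carrier point gets its own view of the human at every time step, which is the sense in which the representation ties local areas of the carrier to the human's movements.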

Given the social affordance representation, the authors further apply social affordance normalization to simplify the representation space. The first step is to learn a local frame for the carrier: an SE(3)-equivariant network learns a local coordinate system at each point of the carrier. The human's actions are then transformed into each local coordinate system, so that they are densely encoded from the perspective of every point, yielding a dense carrier-centric action representation. This can be thought of as binding an "observer" to each local point on the carrier, with each observer encoding the human's actions from a first-person perspective. The advantage of this approach is that, while modeling the contact information among humans, virtual humans and objects, social affordance normalization simplifies the distribution of social affordances and makes network learning easier.

To predict the behavior of the human interacting with the virtual human, the authors propose a social affordance prediction module. In a real setting, the virtual human can only observe the history of the human's behavior. The authors argue that the virtual human should be able to predict human behavior in order to plan its own actions better. For example, when someone raises their hand and walks towards you, you might assume they are about to shake your hand and prepare to receive it.
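The "observer" idea can be illustrated with plain rigid-body geometry. The sketch below expresses a human joint in the local frame attached to one carrier point, then checks that the local coordinates stay the same when the whole scene is rotated and translated. It assumes the local frame is already known: in the article the frames are learned by an SE(3)-equivariant network, whereas here one is written by hand. The function names `to_local` and `move_scene` and all numbers are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def to_local(origin: Vec3, axes: Sequence[Vec3], point: Vec3) -> Vec3:
    """Coordinates of `point` as seen by an observer at `origin` with orthonormal `axes`."""
    d = sub(point, origin)
    return (dot(axes[0], d), dot(axes[1], d), dot(axes[2], d))


def rotate_z(v: Vec3, angle: float) -> Vec3:
    """Rotate a vector about the global z axis."""
    c, s = math.cos(angle), math.sin(angle)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1], v[2])


def move_scene(p: Vec3, angle: float, shift: Vec3) -> Vec3:
    """Apply one global rigid motion (rotation, then translation) to a position."""
    r = rotate_z(p, angle)
    return (r[0] + shift[0], r[1] + shift[1], r[2] + shift[2])


# Hand-written local frame at one carrier point (assumption: normally learned).
origin = (1.0, 0.0, 0.0)
axes = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
wrist = (1.5, 0.2, 0.3)

local_before = to_local(origin, axes, wrist)

# Move carrier and human together: positions rotate and shift, axes only rotate.
angle, shift = 0.7, (3.0, -2.0, 1.0)
origin_moved = move_scene(origin, angle, shift)
axes_moved = [rotate_z(a, angle) for a in axes]
wrist_moved = move_scene(wrist, angle, shift)

local_after = to_local(origin_moved, axes_moved, wrist_moved)
print(local_before)
print(local_after)
```

Because the frame moves with the carrier, the two printed tuples agree up to floating-point error. This is one way to see why encoding actions in local frames narrows the distribution the network has to learn: the same interaction looks the same wherever it takes place in the scene.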

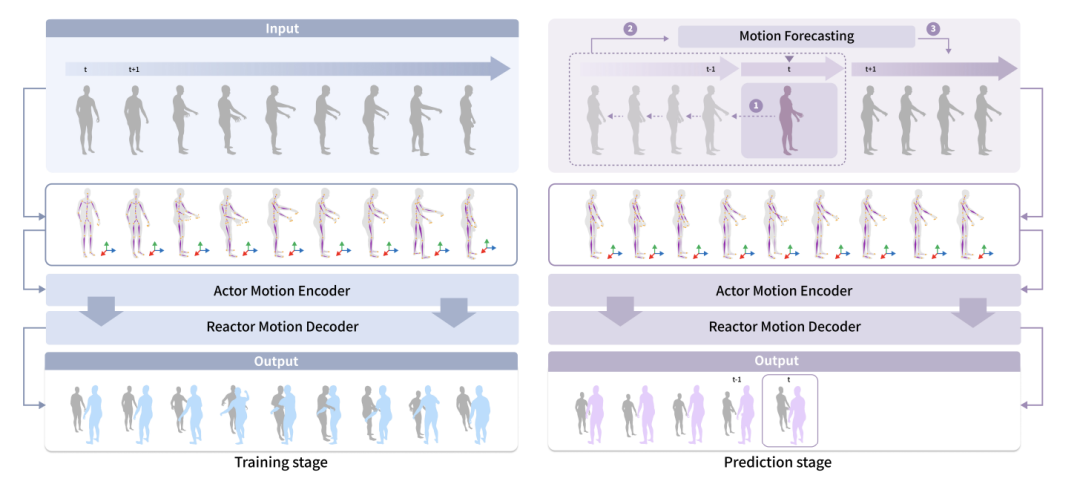

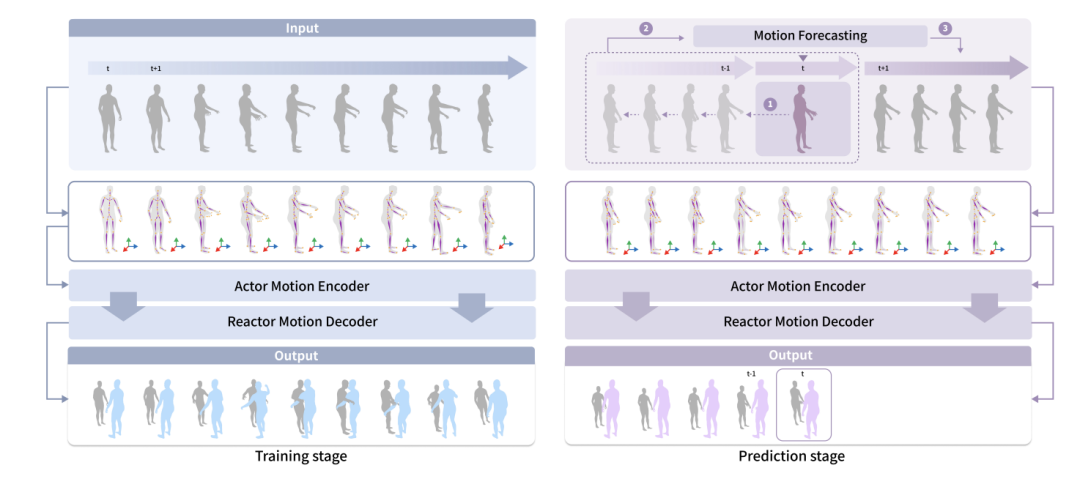

During training, the virtual human can observe all of the human's actions. At prediction time in a real setting, it can only observe the past dynamics of the human's behavior. The proposed prediction module forecasts the actions the human is about to take, which improves the virtual human's perception. The authors use a motion prediction module to forecast both the human actor's motion and the object's motion. For two-person interaction, they use HumanMAC as the prediction module. For two-person-object interaction, they build a motion prediction module based on InterDiff and add the prior that human-object contact is stable, which makes object motion easier to predict.

Experiment
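The online setting described above can be sketched as follows: at each step only the observed history is available, a forecaster extends it by a short horizon, and the reaction model receives history plus forecast. The forecaster here is a deliberately simple constant-velocity extrapolation used as a stand-in. It is not HumanMAC or InterDiff, and the names `forecast_constant_velocity` and `reaction_input` are hypothetical.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def forecast_constant_velocity(history: List[Vec3], horizon: int) -> List[Vec3]:
    """Extrapolate one joint trajectory by repeating its last observed velocity.

    Stand-in for a learned motion prediction module (assumption, not the paper's model).
    """
    last = history[-1]
    if len(history) < 2:
        velocity = (0.0, 0.0, 0.0)  # a single frame gives no velocity estimate
    else:
        prev = history[-2]
        velocity = (last[0] - prev[0], last[1] - prev[1], last[2] - prev[2])
    return [
        (last[0] + k * velocity[0], last[1] + k * velocity[1], last[2] + k * velocity[2])
        for k in range(1, horizon + 1)
    ]


def reaction_input(history: List[Vec3], horizon: int) -> List[Vec3]:
    """What the reaction model would see online: observed past plus predicted future."""
    return history + forecast_constant_velocity(history, horizon)


# Toy wrist trajectory (made up): the hand moves 1 unit along x per frame.
observed = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
predicted = forecast_constant_velocity(observed, horizon=2)
full_window = reaction_input(observed, horizon=2)
print(predicted)
print(len(full_window))
```

In training the future frames could be read from the ground truth instead of being forecast, which mirrors the difference between the training and real-world prediction phases described in the text.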

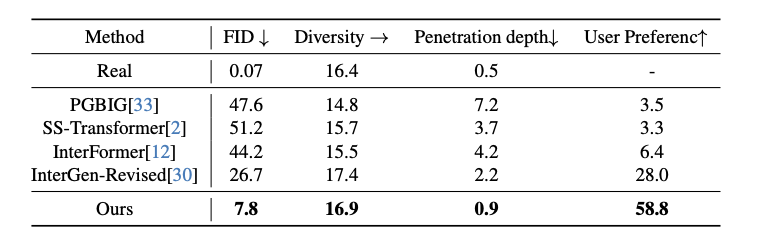

Quantitative results show that the method outperforms existing methods on all metrics. To verify the effectiveness of each design choice, the authors conducted ablation experiments on the HHI dataset. Performance drops significantly without social affordance normalization, which suggests that using it to reduce the complexity of the feature space is necessary. Without social affordance prediction, the method loses the ability to anticipate the human actor's actions, and performance degrades. To verify the need for local coordinate systems, the authors also compared against a global coordinate system, and the local coordinate systems perform significantly better. This demonstrates the value of local coordinate systems for describing local geometry and potential contact.

The visualization results show that, compared with previous methods, virtual characters trained with this method react faster, capture local gestures more accurately, and produce more realistic and natural grasping motions during collaboration.

For more research details, please see the original paper.