Google DeepMind envisions a future that reinvents robots, bringing embodied intelligence to large models

In the past year, a succession of large-scale models have made breakthroughs that are reshaping the field of robotics research.

With the most advanced large models becoming the "brains" of robots, robots are evolving faster than imagined.

In July, Google DeepMind announced the launch of RT-2, the world's first vision-language-action (VLA) model for controlling robots.

Given a command phrased like ordinary conversation, it can pick out Taylor Swift from a set of pictures and hand her a can of "happy water" (cola).

It can even reason actively, completing a multi-stage reasoning leap from "pick an extinct animal" to grabbing the plastic dinosaur on the table.

After RT-2, Google DeepMind proposed Q-Transformer, giving the robotics world a Transformer of its own. Q-Transformer frees robots from their reliance on high-quality demonstration data, letting them accumulate experience more effectively through independent "thinking".

Just two months after RT-2's release, robotics had another ImageNet moment: Google DeepMind and other institutions launched the Open ..., a new idea for training universal robots.

Imagine simply asking a robot assistant to "clean the house" or "make a delicious and healthy meal." For humans these tasks may be simple, but for robots they require a deep understanding of the world, which is not easy.

Building on years of research on robot Transformers, Google recently announced a series of robotics research advances: AutoRT, SARA-RT and RT-Trajectory. These help robots make decisions faster, understand their environment better, and guide themselves through tasks more effectively. Google believes that together they can improve the data collection, speed and generalization capabilities of real-world robots. Next, let us review these important studies.

AutoRT: Leverage large models to better train robots

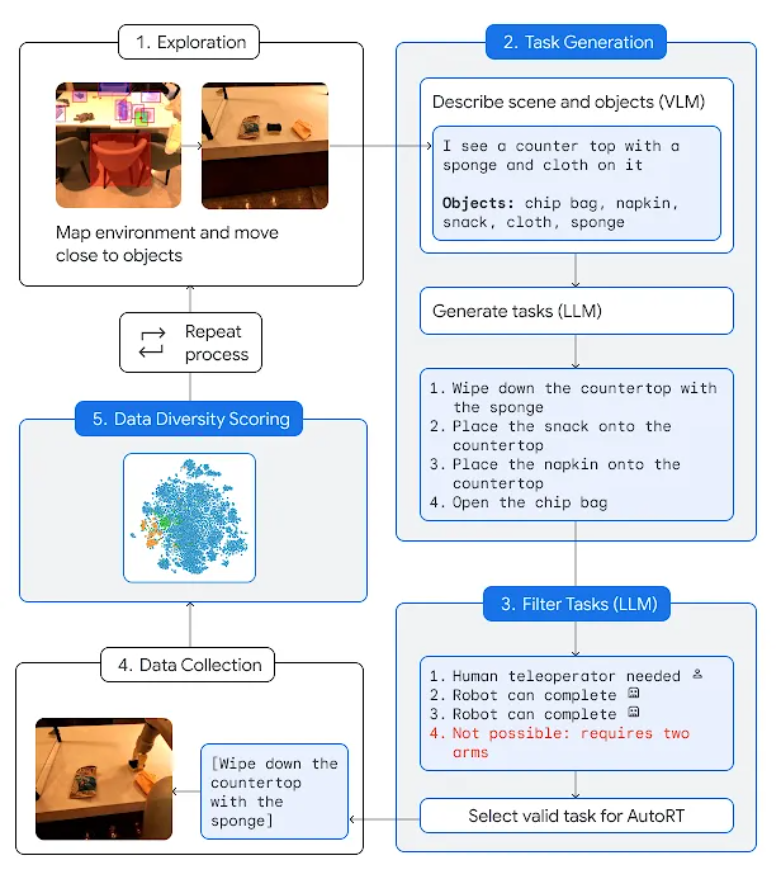

AutoRT combines large foundation models, such as a large language model (LLM) or visual language model (VLM), with a robot control model (RT-1 or RT-2) to create a system that can deploy robots in new environments to collect training data. AutoRT can simultaneously direct multiple robots, each equipped with a video camera and an end effector, to carry out diverse tasks in a variety of environments.

Specifically, each robot uses a VLM to "look around" and understand its environment and the objects within its line of sight. An LLM then proposes a list of creative tasks the robot could carry out, such as "put snacks on the table," and acts as the decision-maker that chooses which task the robot should attempt.

Researchers evaluated AutoRT extensively in real-world settings over seven months. The experiments showed that the system can safely coordinate up to 20 robots at the same time, and up to 52 robots in total. By directing the robots to perform a variety of tasks in a variety of office buildings, the researchers collected a diverse dataset of 77,000 robot trials spanning 6,650 unique tasks.

While AutoRT is only a data collection system for now, it can be seen as an early stage of autonomous robots in the real world. It features safety guardrails, one of which is a set of safety-focused prompts that give the robot ground rules to follow when it makes LLM-based decisions.

These rules are inspired in part by Isaac Asimov's Three Laws of Robotics, the most important of which is that robots "must not harm humans." Safety rules also require robots not to attempt tasks involving humans, animals, sharp objects or electrical appliances.
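The loop described above (look around, propose tasks, apply the safety rules, choose a task) can be sketched roughly as follows. This is only an illustration under stated assumptions: `describe_scene` and `propose_tasks` are hypothetical stand-ins for the VLM and LLM calls, and the keyword filter in `is_allowed` is a crude substitute for the safety-focused prompts, not how AutoRT actually enforces its rules.

```python
import random
from dataclasses import dataclass, field

# Hypothetical word list standing in for the safety-focused prompt rules
# (no humans, animals, sharp objects or electrical appliances).
FORBIDDEN = frozenset({"human", "person", "animal", "knife", "scissors", "appliance"})


@dataclass
class Robot:
    name: str
    visible_objects: list = field(default_factory=list)  # stand-in for a camera frame


def describe_scene(robot):
    """Stand-in for the VLM step: list the objects in the robot's view."""
    return list(robot.visible_objects)


def propose_tasks(objects):
    """Stand-in for the LLM step: suggest candidate tasks for the scene."""
    tasks = []
    for obj in objects:
        tasks.append(f"pick up the {obj}")
        tasks.append(f"put the {obj} on the table")
    return tasks


def is_allowed(task):
    """Reject any task that mentions a word the safety rules exclude."""
    return not (set(task.lower().split()) & FORBIDDEN)


def choose_task(robot, rng):
    """Describe the scene, propose tasks, filter them, then pick one."""
    candidates = [t for t in propose_tasks(describe_scene(robot)) if is_allowed(t)]
    return rng.choice(candidates) if candidates else None


# Two hypothetical robots: one sees a safe object, the other only a knife.
fleet = [Robot("robot-1", ["sponge", "knife"]), Robot("robot-2", ["knife"])]
rng = random.Random(0)
assignments = {robot.name: choose_task(robot, rng) for robot in fleet}
```

In this sketch a robot that sees nothing it may safely handle is simply given no task (`None`), which mirrors the idea that the rules constrain what the decision-maker is allowed to pick.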

Working on prompts alone cannot fully guarantee a robot's safety in practice. The AutoRT system therefore also includes a layer of practical safety measures drawn from classical robotics. For example, the collaborative robots are programmed to stop automatically if the force on their joints exceeds a given threshold, and all autonomously controlled robots are kept within the line of sight of a human supervisor who has a physical deactivation switch.
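The joint-force stop can be pictured as a simple guard that runs before every command. The sketch below is a minimal illustration; the function names, the threshold value and the units are assumptions, not details from the AutoRT system.

```python
def exceeds_force_limit(joint_forces, limit):
    """Return True if the magnitude of any joint force is above the limit."""
    return any(abs(force) > limit for force in joint_forces)


def guarded_command(command, joint_forces, limit=50.0):
    """Pass the command through, or replace it with "stop" when the guard trips.

    The default limit of 50.0 is an arbitrary illustrative value.
    """
    return "stop" if exceeds_force_limit(joint_forces, limit) else command


normal = guarded_command("move left", [3.0, -12.5, 8.0])
tripped = guarded_command("move left", [3.0, -72.0, 8.0])
```

The guard is independent of the language model, which is the point of the second layer: it still applies when a prompt-level rule fails to catch something.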

SARA-RT: Make the robot Transformer (RT) faster and more streamlined

Another achievement, SARA-RT, converts robot Transformer (RT) models into more efficient versions.

The RT neural network architecture developed by the Google team has been used in the latest robot control systems, including the RT-2 model. The best SARA-RT-2 model is 10.6% more accurate and 14% faster than the RT-2 model when given a brief image history. Google says it is the first scalable attention mechanism to improve computational efficiency without compromising quality.

While Transformers are powerful, their computational requirements can limit them and slow decision-making. Transformers rely mainly on attention modules with quadratic complexity. This means that if the input to an RT model doubles (for example, by giving the robot more or higher-resolution sensors), the computational resources required to process that input increase fourfold, resulting in slower decision-making.

SARA-RT adopts a novel model fine-tuning method (called "up-training") to improve the efficiency of the model. Up-training converts quadratic complexity into purely linear complexity, significantly reducing computational requirements. The conversion not only speeds up the original model but also preserves its quality.

Google hopes that many researchers and practitioners will apply this practical system to robotics and other fields. Because SARA provides a general approach to speeding up Transformers without the need for computationally expensive pre-training, it has the potential to bring Transformer technology to much wider use. SARA-RT does not require any additional coding, as various open-source linear variants are available.
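The fourfold figure follows directly from the quadratic term: full self-attention scores every token against every other token. A small worked example, with token counts chosen purely for illustration:

```python
def attention_pair_count(num_tokens):
    """Number of query-key pairs that full self-attention has to score."""
    return num_tokens * num_tokens


base = attention_pair_count(256)     # 256 * 256 = 65,536 pairs
doubled = attention_pair_count(512)  # 512 * 512 = 262,144 pairs
ratio = doubled / base               # doubling the input quadruples the work
```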
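To see how attention can be made linear in the input length at all, consider generic kernel-based linear attention. The sketch below is an assumption-laden illustration, not SARA-RT's actual mechanism: it uses the common feature map elu(x) + 1, and it shows only the reordering of the computation, not the up-training (fine-tuning) step that adapts a pretrained model to it.

```python
import math


def feature_map(vector):
    """elu(x) + 1, a positive feature map often used for linear attention."""
    return [x + 1.0 if x > 0 else math.exp(x) for x in vector]


def quadratic_form(queries, keys, values):
    """Kernel attention the O(n^2) way: score every query against every key."""
    phi_q = [feature_map(q) for q in queries]
    phi_k = [feature_map(k) for k in keys]
    dim_v = len(values[0])
    outputs = []
    for q in phi_q:
        weights = [sum(a * b for a, b in zip(q, k)) for k in phi_k]
        total = sum(weights)
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) / total for j in range(dim_v)]
        )
    return outputs


def linear_form(queries, keys, values):
    """Same result in O(n): summarize keys and values once, then reuse it."""
    phi_q = [feature_map(q) for q in queries]
    phi_k = [feature_map(k) for k in keys]
    dim_k, dim_v = len(phi_k[0]), len(values[0])
    kv_summary = [[0.0] * dim_v for _ in range(dim_k)]  # sum of phi(k) v^T
    key_sum = [0.0] * dim_k                             # sum of phi(k)
    for k, v in zip(phi_k, values):
        for i in range(dim_k):
            key_sum[i] += k[i]
            for j in range(dim_v):
                kv_summary[i][j] += k[i] * v[j]
    outputs = []
    for q in phi_q:
        norm = sum(q[i] * key_sum[i] for i in range(dim_k))
        outputs.append(
            [sum(q[i] * kv_summary[i][j] for i in range(dim_k)) / norm for j in range(dim_v)]
        )
    return outputs


# Tiny made-up inputs: three tokens, two-dimensional keys/queries and values.
Q = [[0.5, -1.0], [1.5, 0.2], [-0.3, 0.8]]
K = [[0.1, 0.4], [-0.7, 1.2], [0.9, -0.5]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
slow = quadratic_form(Q, K, V)
fast = linear_form(Q, K, V)
```

Both functions return the same numbers; the second never builds the n-by-n score table, so its cost grows linearly with the number of tokens instead of quadratically.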

When SARA-RT is applied to the SOTA RT-2 model with billions of parameters, it enables faster decision-making and better performance in a variety of robotic tasks:

SARA-RT-2 model for manipulation tasks. The robot's movements are conditioned on images and textual instructions.

With its solid theoretical foundation, SARA-RT can be applied to various Transformer models. For example, applying SARA-RT to the Point Cloud Transformer, which processes spatial data from a robot's depth camera, can more than double the speed.

RT-Trajectory: Helping Robots Generalize

Humans can intuitively understand how to clean a table, but there are many possible ways for a robot to translate an instruction into actual physical motion.

Traditionally, robotic arm training relies on mapping abstract natural language ("wipe the table") to concrete actions (close gripper, move left, move right), which makes it hard for the model to generalize to new tasks. In contrast, the RT-Trajectory model enables RT models to understand "how" a task is accomplished by interpreting specific robot motions (such as those in a video or sketch).

The RT-Trajectory model can automatically add visual outlines that describe the robot's movements in training videos. It overlays each video in the training dataset with a 2D trajectory sketch of the gripper as the robot arm performs a task. These trajectories, in the form of RGB images, give the model low-level, practical visual cues as it learns robot control policies.

When tested on 41 tasks not seen in the training data, the robot arm controlled by RT-Trajectory performed more than twice as well as existing SOTA RT models: its task success rate reached 63%, while RT-2's was only 29%.
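The overlay step can be illustrated with a toy rasterizer that draws a gripper path onto an RGB frame. Everything here is a simplified assumption: the frame is a plain nested list of RGB tuples, the waypoints are made-up pixel coordinates, and the drawing routine is a basic line sampler rather than whatever RT-Trajectory uses.

```python
def blank_image(width, height):
    """Create a black RGB image as a list of rows of (r, g, b) tuples."""
    return [[(0, 0, 0) for _ in range(width)] for _ in range(height)]


def draw_segment(image, start, end, color):
    """Rasterize a line segment by sampling evenly spaced points along it."""
    (x0, y0), (x1, y1) = start, end
    steps = max(abs(x1 - x0), abs(y1 - y0), 1)
    for i in range(steps + 1):
        x = round(x0 + (x1 - x0) * i / steps)
        y = round(y0 + (y1 - y0) * i / steps)
        if 0 <= y < len(image) and 0 <= x < len(image[0]):
            image[y][x] = color


def overlay_trajectory(image, waypoints, color=(255, 0, 0)):
    """Return a copy of the image with the gripper path drawn on top."""
    result = [row[:] for row in image]
    for start, end in zip(waypoints, waypoints[1:]):
        draw_segment(result, start, end, color)
    return result


# Hypothetical gripper path in (x, y) pixel coordinates: right, then down.
frame = blank_image(8, 8)
sketch = overlay_trajectory(frame, [(1, 1), (6, 1), (6, 5)])
```

The original frame is left untouched and the sketch is returned as a new image, so the same video frame can be paired with different trajectory hints.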

The system is so versatile that RT-Trajectory can also create trajectories by watching human demonstrations of the required tasks, and even accepts hand-drawn sketches. Moreover, it can adapt to different robot platforms at any time.

Left: A robot controlled by an RT model trained only on the natural-language dataset fails when given the new task of wiping the table, while a robot controlled by the RT-Trajectory model, trained on the same dataset augmented with 2D trajectories, successfully plans and executes a wiping trajectory. Right: A trained RT-Trajectory model, given a new task (wiping the table), can create 2D trajectories in a variety of ways, with human assistance or on its own using a visual language model.

RT-Trajectory exploits the rich robot motion information that is present in all robot datasets but is currently underutilized. It not only represents another step toward robots that move efficiently and accurately on new tasks, but also unlocks knowledge from existing datasets.
