Master efficient data crawling technology: Build a powerful Java crawler

Building a powerful Java crawler: the key techniques for efficient data crawling, with concrete code examples

1. Introduction

With the rapid development of the Internet and the abundance of data resources, more and more application scenarios require crawling data from web pages. Java is a powerful programming language with mature crawler frameworks and a rich set of third-party libraries, which makes it a good choice for this task. In this article, we will explain how to build a powerful web crawler using Java and provide concrete code examples.

2. Basic knowledge of web crawlers

- What is a web crawler?

A web crawler is an automated program that simulates a person browsing web pages and grabs the required data from them. The crawler extracts data from each page according to predefined rules, then saves it locally or passes it on for further processing.

- The working principle of the crawler

The working principle of the crawler can be roughly divided into the following steps:

- Send an HTTP request to obtain the web page content.

- Parse the page and extract the required data.

- Store the data or process it further.
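The three steps above can be sketched in plain Java. This is only a minimal illustration, not a production design: the class name `MiniCrawler` is hypothetical, the fetch step is injected as a function so the example runs offline with a canned response, and the title is extracted with a naive regular expression where a real crawler would use an HTML parser.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Function;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical class: illustrates the fetch -> parse -> store loop.
class MiniCrawler {
    // Naive <title> matcher, good enough for a sketch; prefer a real HTML parser in practice.
    private static final Pattern TITLE =
            Pattern.compile("<title[^>]*>(.*?)</title>", Pattern.CASE_INSENSITIVE | Pattern.DOTALL);

    private final Function<String, String> fetcher;                  // step 1: URL -> HTML
    private final Map<String, String> store = new LinkedHashMap<>(); // step 3: URL -> title

    MiniCrawler(Function<String, String> fetcher) {
        this.fetcher = fetcher;
    }

    // Step 2: pull the page title out of raw HTML, or "" if none is found.
    static String parseTitle(String html) {
        Matcher m = TITLE.matcher(html);
        return m.find() ? m.group(1).trim() : "";
    }

    void crawl(String url) {
        String html = fetcher.apply(url);  // 1. fetch
        String title = parseTitle(html);   // 2. parse
        store.put(url, title);             // 3. store
    }

    Map<String, String> results() {
        return store;
    }

    public static void main(String[] args) {
        // A canned response stands in for a real HTTP call so the sketch runs offline.
        MiniCrawler crawler = new MiniCrawler(
                url -> "<html><head><title>Example Domain</title></head><body></body></html>");
        crawler.crawl("https://example.com/");
        System.out.println(crawler.results());
    }
}
```

Keeping the fetch step behind a `Function` makes it easy to swap the stub for a real HTTP client later without touching the parsing or storage code.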

3. Java crawler development framework

Java has many frameworks and libraries that can be used to develop web crawlers. Two commonly used libraries are introduced below.

- Jsoup

Jsoup is a Java library for parsing, traversing, and manipulating HTML. It provides a flexible API and CSS-style selectors that make extracting data from HTML straightforward. A typical extraction loads a page with `Jsoup.connect(url).get()` and then queries the resulting `Document` with selectors such as `doc.select("a[href]")`.


- HttpClient

HttpClient is a Java HTTP request library that makes it easy to send HTTP requests the way a browser would and to read the response from the server.
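As a rough sketch of sending a request and reading the response, the example below uses the JDK's built-in `java.net.http.HttpClient` (Java 11 or later). This is a substitution made to avoid external dependencies: the HttpClient described in this article is most likely Apache HttpClient, whose API differs. The class name `PageFetcher`, the `User-Agent` value, the timeouts, and the `https://example.com/` URL are all placeholders.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Hypothetical helper built on the JDK's java.net.http client (not Apache HttpClient).
class PageFetcher {
    private final HttpClient client = HttpClient.newBuilder()
            .connectTimeout(Duration.ofSeconds(10))
            .followRedirects(HttpClient.Redirect.NORMAL)
            .build();

    // Builds a GET request with a browser-like User-Agent (the value is a placeholder).
    static HttpRequest buildRequest(String url) {
        return HttpRequest.newBuilder(URI.create(url))
                .timeout(Duration.ofSeconds(15))
                .header("User-Agent", "Mozilla/5.0 (compatible; ExampleCrawler/1.0)")
                .GET()
                .build();
    }

    // Sends the request and returns the body, failing on any non-200 status.
    String fetch(String url) throws IOException, InterruptedException {
        HttpResponse<String> response =
                client.send(buildRequest(url), HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() != 200) {
            throw new IOException("Unexpected status " + response.statusCode() + " for " + url);
        }
        return response.body();
    }

    public static void main(String[] args) throws Exception {
        if (args.length == 0) {
            // No URL given: only show the request that would be sent (no network needed).
            System.out.println(buildRequest("https://example.com/"));
            return;
        }
        String html = new PageFetcher().fetch(args[0]);
        System.out.println(html.length() + " characters fetched from " + args[0]);
    }
}
```

Passing a URL as the first command-line argument performs a real request; without arguments the program stays offline.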


4. Advanced techniques

- Multi-threading

To improve the efficiency of the crawler, we can use multi-threading to crawl multiple web pages at the same time.
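One common way to do this in Java is a fixed-size thread pool from `java.util.concurrent`. The sketch below is an illustration under assumptions, not the article's original listing: `ConcurrentCrawler` and `crawlAll` are hypothetical names, the fetch logic is passed in as a function, and the demo uses a stub fetcher so it runs without network access.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Function;

// Hypothetical class: fetches several pages in parallel on a thread pool.
class ConcurrentCrawler {
    // Submits one task per URL and returns URL -> content in input order.
    static Map<String, String> crawlAll(List<String> urls,
                                        Function<String, String> fetcher,
                                        int threads) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            Map<String, Future<String>> pending = new LinkedHashMap<>();
            for (String url : urls) {
                Callable<String> task = () -> fetcher.apply(url);
                pending.put(url, pool.submit(task));
            }
            Map<String, String> results = new LinkedHashMap<>();
            for (Map.Entry<String, Future<String>> entry : pending.entrySet()) {
                try {
                    results.put(entry.getKey(), entry.getValue().get());
                } catch (ExecutionException ex) {
                    // One failed page should not abort the whole crawl.
                    results.put(entry.getKey(), "ERROR: " + ex.getCause());
                } catch (InterruptedException ex) {
                    Thread.currentThread().interrupt(); // preserve the interrupt and stop waiting
                    break;
                }
            }
            return results;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        List<String> urls = List.of("https://example.com/a", "https://example.com/b");
        // A stub fetcher keeps the demo offline; plug in a real HTTP fetch instead.
        Map<String, String> pages = crawlAll(urls, url -> "content of " + url, 4);
        pages.forEach((url, body) -> System.out.println(url + " -> " + body));
    }
}
```

The pool size caps how many requests run at once, which also limits the load placed on the target server.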


- Proxy IP

To avoid having our IP banned by the server because of a high crawling frequency, we can send requests through a proxy IP, which hides the real IP address from the target site.
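A minimal sketch of routing requests through a proxy follows, again using the JDK's built-in `java.net.http.HttpClient` (Java 11 or later) rather than a third-party library. The class name `ProxiedClientFactory` is hypothetical, and `127.0.0.1:8080` is only a placeholder address, not a working proxy.

```java
import java.net.InetSocketAddress;
import java.net.ProxySelector;
import java.net.http.HttpClient;
import java.time.Duration;

// Hypothetical factory for an HTTP client that goes through a fixed proxy.
class ProxiedClientFactory {
    // Returns a client that sends its HTTP and HTTPS requests through the given proxy.
    static HttpClient viaProxy(String proxyHost, int proxyPort) {
        return HttpClient.newBuilder()
                .proxy(ProxySelector.of(new InetSocketAddress(proxyHost, proxyPort)))
                .connectTimeout(Duration.ofSeconds(10))
                .build();
    }

    public static void main(String[] args) {
        // Placeholder address; substitute a proxy you are allowed to use.
        HttpClient client = viaProxy("127.0.0.1", 8080);
        System.out.println("Proxy configured: " + client.proxy().isPresent());
    }
}
```

A crawler that rotates between several proxies could call `viaProxy` once per proxy address and pick a client for each request.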


5. Summary

In this article, we introduced how to use Java to build a powerful web crawler and provided specific code examples. By learning these techniques, we can crawl the required data from web pages more efficiently. Of course, web crawlers must be used in compliance with relevant laws and ethical norms: use crawler tools responsibly, and respect the privacy and rights of others. I hope this article helps you learn and use Java crawlers!

The above is the detailed content of Master efficient data crawling technology: Build a powerful Java crawler. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1389

1389

52

52

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

StableDiffusion3’s paper is finally here! This model was released two weeks ago and uses the same DiT (DiffusionTransformer) architecture as Sora. It caused quite a stir once it was released. Compared with the previous version, the quality of the images generated by StableDiffusion3 has been significantly improved. It now supports multi-theme prompts, and the text writing effect has also been improved, and garbled characters no longer appear. StabilityAI pointed out that StableDiffusion3 is a series of models with parameter sizes ranging from 800M to 8B. This parameter range means that the model can be run directly on many portable devices, significantly reducing the use of AI

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

Trajectory prediction plays an important role in autonomous driving. Autonomous driving trajectory prediction refers to predicting the future driving trajectory of the vehicle by analyzing various data during the vehicle's driving process. As the core module of autonomous driving, the quality of trajectory prediction is crucial to downstream planning control. The trajectory prediction task has a rich technology stack and requires familiarity with autonomous driving dynamic/static perception, high-precision maps, lane lines, neural network architecture (CNN&GNN&Transformer) skills, etc. It is very difficult to get started! Many fans hope to get started with trajectory prediction as soon as possible and avoid pitfalls. Today I will take stock of some common problems and introductory learning methods for trajectory prediction! Introductory related knowledge 1. Are the preview papers in order? A: Look at the survey first, p

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

This paper explores the problem of accurately detecting objects from different viewing angles (such as perspective and bird's-eye view) in autonomous driving, especially how to effectively transform features from perspective (PV) to bird's-eye view (BEV) space. Transformation is implemented via the Visual Transformation (VT) module. Existing methods are broadly divided into two strategies: 2D to 3D and 3D to 2D conversion. 2D-to-3D methods improve dense 2D features by predicting depth probabilities, but the inherent uncertainty of depth predictions, especially in distant regions, may introduce inaccuracies. While 3D to 2D methods usually use 3D queries to sample 2D features and learn the attention weights of the correspondence between 3D and 2D features through a Transformer, which increases the computational and deployment time.

'Minecraft' turns into an AI town, and NPC residents role-play like real people

Jan 02, 2024 pm 06:25 PM

'Minecraft' turns into an AI town, and NPC residents role-play like real people

Jan 02, 2024 pm 06:25 PM

Please note that this square man is frowning, thinking about the identities of the "uninvited guests" in front of him. It turned out that she was in a dangerous situation, and once she realized this, she quickly began a mental search to find a strategy to solve the problem. Ultimately, she decided to flee the scene and then seek help as quickly as possible and take immediate action. At the same time, the person on the opposite side was thinking the same thing as her... There was such a scene in "Minecraft" where all the characters were controlled by artificial intelligence. Each of them has a unique identity setting. For example, the girl mentioned before is a 17-year-old but smart and brave courier. They have the ability to remember and think, and live like humans in this small town set in Minecraft. What drives them is a brand new,

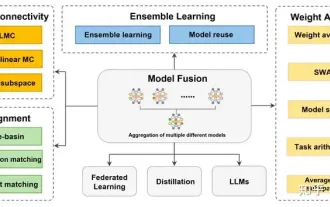

Review! Deep model fusion (LLM/basic model/federated learning/fine-tuning, etc.)

Apr 18, 2024 pm 09:43 PM

Review! Deep model fusion (LLM/basic model/federated learning/fine-tuning, etc.)

Apr 18, 2024 pm 09:43 PM

In September 23, the paper "DeepModelFusion:ASurvey" was published by the National University of Defense Technology, JD.com and Beijing Institute of Technology. Deep model fusion/merging is an emerging technology that combines the parameters or predictions of multiple deep learning models into a single model. It combines the capabilities of different models to compensate for the biases and errors of individual models for better performance. Deep model fusion on large-scale deep learning models (such as LLM and basic models) faces some challenges, including high computational cost, high-dimensional parameter space, interference between different heterogeneous models, etc. This article divides existing deep model fusion methods into four categories: (1) "Pattern connection", which connects solutions in the weight space through a loss-reducing path to obtain a better initial model fusion

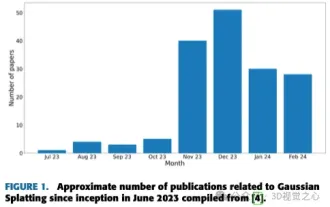

More than just 3D Gaussian! Latest overview of state-of-the-art 3D reconstruction techniques

Jun 02, 2024 pm 06:57 PM

More than just 3D Gaussian! Latest overview of state-of-the-art 3D reconstruction techniques

Jun 02, 2024 pm 06:57 PM

Written above & The author’s personal understanding is that image-based 3D reconstruction is a challenging task that involves inferring the 3D shape of an object or scene from a set of input images. Learning-based methods have attracted attention for their ability to directly estimate 3D shapes. This review paper focuses on state-of-the-art 3D reconstruction techniques, including generating novel, unseen views. An overview of recent developments in Gaussian splash methods is provided, including input types, model structures, output representations, and training strategies. Unresolved challenges and future directions are also discussed. Given the rapid progress in this field and the numerous opportunities to enhance 3D reconstruction methods, a thorough examination of the algorithm seems crucial. Therefore, this study provides a comprehensive overview of recent advances in Gaussian scattering. (Swipe your thumb up

Getting started with Java crawlers: Understand its basic concepts and application methods

Jan 10, 2024 pm 07:42 PM

Getting started with Java crawlers: Understand its basic concepts and application methods

Jan 10, 2024 pm 07:42 PM

A preliminary study on Java crawlers: To understand its basic concepts and uses, specific code examples are required. With the rapid development of the Internet, obtaining and processing large amounts of data has become an indispensable task for enterprises and individuals. As an automated data acquisition method, crawler (WebScraping) can not only quickly collect data on the Internet, but also analyze and process large amounts of data. Crawlers have become a very important tool in many data mining and information retrieval projects. This article will introduce the basic overview of Java crawlers

Smooth build: How to correctly configure the Maven image address

Feb 20, 2024 pm 08:48 PM

Smooth build: How to correctly configure the Maven image address

Feb 20, 2024 pm 08:48 PM

Smooth build: How to correctly configure the Maven image address When using Maven to build a project, it is very important to configure the correct image address. Properly configuring the mirror address can speed up project construction and avoid problems such as network delays. This article will introduce how to correctly configure the Maven mirror address and give specific code examples. Why do you need to configure the Maven image address? Maven is a project management tool that can automatically build projects, manage dependencies, generate reports, etc. When building a project in Maven, usually