After reading this article, you may get the impression that mapless perception has reached its end. The newly released MapEX is worth digesting and sharing right away. The core idea of MapEX is to use previously stored map information to improve the construction of the current local high-precision map. The stored map may contain only a few simple map elements (such as road boundaries), it may be noisy (for example, each map element offset by 5 m), or it may be outdated (for example, only a small part of its map elements still align with the current scene). Clearly, this historical map information is useful for building the current local high-precision map, which leads to the core question of the article: how should it be used? Specifically, MapEX is built on MapTRv2. The historical map information is encoded into a series of queries that are concatenated with the original queries, and the decoder then outputs the predictions. It is a very interesting paper.

Online high-precision maps (HDMaps) generated from sensor data are considered a low-cost alternative to traditional, manually acquired HDMaps. They are therefore expected to reduce the cost of autonomous driving systems that rely on HDMaps, and may also make HDMaps usable in new systems.

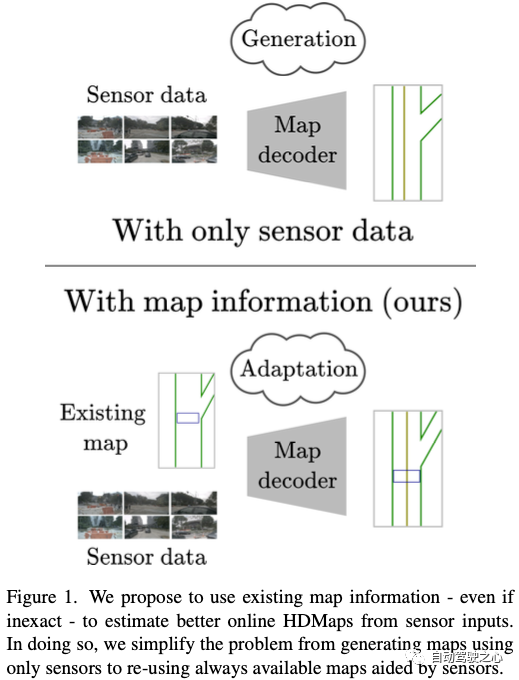

This paper proposes improving online HDMap estimation by taking existing maps into account. The authors identify three plausible types of existing maps: simple maps, noisy maps, and old maps. The paper also introduces MapEX, a new online HDMap construction framework that accounts for existing maps. MapEX does this by encoding map elements as queries and by improving the matching algorithm of classic query-based map estimation models.

Finally, the article shows that MapEX brings significant improvements on the nuScenes dataset. For example, MapEX (given a noisy map) improves detection by 38% over MapTRv2 and is 16% better than the current state of the art.

In summary, the main contributions of MapEX can be summarized as follows:

Here, we briefly review high-precision maps (HDMaps) in autonomous driving. First, we look at how HDMaps are used in trajectory prediction, then at how this map data is acquired. Finally, we discuss online HDMap construction.

HDMaps for trajectory prediction: Autonomous driving often requires a large amount of information about the world in which the vehicle navigates. This information is typically embedded in rich HDMaps and fed as input to neural networks. HDMaps have proven critical to the performance of trajectory prediction. Some trajectory prediction methods are even built explicitly on the HDMap representation, so access to an HDMap is strictly required.

HDMap acquisition and maintenance: Traditional HDMaps are expensive to acquire and maintain. Although the HDMaps used in prediction are simplified versions that contain only map elements (lane dividers, road boundaries, etc.) and omit much of the complex information found in full HDMaps, they still require very precise measurements. As a result, many companies have been moving towards the less stringent medium-definition map (MDMap) standard, or even towards navigation maps (Google Maps, SDMaps). Crucially, an MDMap accurate to within a few meters is a good example of an existing map that provides valuable information for online HDMap generation. Map scenario 2a explores an approximation of this situation.

Online HDMap construction from sensors: Online HDMap construction has therefore become the core of map-light and mapless perception. While some work focuses on predicting virtual map elements, i.e. lane centerlines, other work focuses on more visually identifiable map elements: lane dividers, road boundaries, and crosswalks. Perhaps because visual elements are easier for sensors to detect, the latter approach has made rapid progress over the past year. Interestingly, the latest such method, MapTRv2, does provide an auxiliary setting for detecting lane centerlines. This suggests a convergence towards more complex schemes that include a large number of additional map elements (traffic lights, etc.).

The work in this paper is related to the commonly studied change detection problem, which aims to detect changes in maps (such as at intersections). The goal of MapEX, however, is to generate an accurate online HDMap with the help of an existing (possibly very different) map, within the current online HDMap construction setting. Therefore, it does not only correct small errors in the map but also proposes a more expressive framework that can accommodate any kind of change (e.g. distorted lines, very noisy elements).

Our core proposition is that leveraging existing maps benefits online HDMap construction. We believe there are many legitimate circumstances in which imperfect maps may arise.

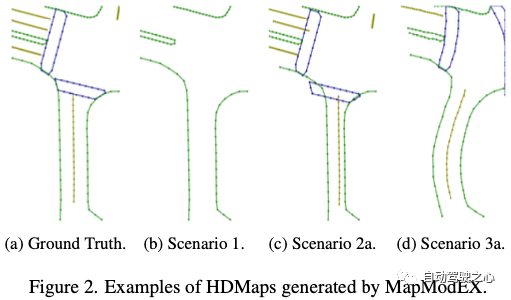

We adopt the standard format for online generation of HDMaps from sensors: we consider an HDMap to consist of three types of polylines (road boundaries, lane dividers, and crosswalks), drawn in the same colors as before (green, stone gray and blue), as shown in Figure 2a.

While real HDMaps are much more complex and more sophisticated representations have been proposed, the purpose of this work is to investigate how to account for existing map information. Therefore, we use the most studied paradigm. The work in this paper is directly applicable to predicting more map elements, finer polylines, or rasterized targets.

Since acquiring real imperfect maps is expensive and time-consuming, we synthetically generated imprecise maps from existing HDMaps.

For this purpose we developed MapModEX, a standalone map modification library. It takes nuScenes map files and sample records and outputs, for each sample, the polyline coordinates of dividers, boundaries, and crosswalks in a given patch around the ego vehicle. Importantly, MapModEX can modify these polylines in various ways: deleting map elements, adding or moving crosswalks, adding noise to point coordinates, shifting the map, rotating the map, and warping the map. MapModEX will be made available upon release to facilitate further research on using existing maps in online HDMap acquisition from sensors.

We implemented three challenging scenarios using the MapModEX package, as described below, generating 10 variants of Scenarios 2 and 3 for each sample (Scenario 1 admits only one variant). We chose to use a fixed set of modified maps to reduce costs during training and to reflect real-world situations where only a limited number of map variants may be available.

The first scenario is that only a rough HDMap (without lane dividers and crosswalks) is available, as shown in Figure 2b. Road boundaries are often associated with 3D physical landmarks such as sidewalk edges, while lane dividers and crosswalks are usually flat painted markings that are easier to miss. Additionally, crosswalks and lane dividers are frequently displaced by construction work or road deviations, or even partially hidden by tire tracks.

Therefore, it is reasonable to consider HDMaps that contain only boundaries. The advantage is that only road boundaries need to be annotated, which reduces labeling cost. Additionally, locating only road boundaries may require less precise equipment and less frequent updates. Implementation: From a practical point of view, Scenario 1 is simple to implement: we remove dividers and crosswalks from the available HDMaps.

The second possible scenario is that we only have a very noisy map, as shown in Figure 2c. One weakness of existing HDMaps is the need for high accuracy (on the order of a few centimeters), which puts great pressure on their acquisition and maintenance [11]. In fact, a key difference between HDMaps and the emerging MDMaps standard is the lower accuracy (a few centimeters versus a few meters).

We therefore propose using noisy HDMaps to simulate situations where a less accurate map results from a cheaper acquisition process or from adopting the MDMap standard instead. Even more interestingly, such less precise maps could be obtained automatically from sensor data. Although methods like MapTRv2 have achieved very impressive performance, they are not yet fully accurate: even with very lenient retrieval thresholds, prediction precision is well below 80%.

Implementation: We propose two possible implementations of these noisy HDMaps, reflecting different ways in which accuracy may be lacking. In the first, Scenario 2a, we use an offset-noise setup: for each map element, we add localization noise sampled from a Gaussian distribution with a standard deviation of 1 meter. This applies a single uniform translation to each map element (divider, boundary, or crosswalk). Such a setup should be a good approximation of situations where human annotators quickly produce imprecise annotations from noisy data. We chose a standard deviation of 1 meter to reflect the MDMap standard, which is accurate to within a few meters.

We then test our method on the very challenging point-wise noise Scenario 2b: for each ground-truth point (recall that a map element consists of 20 such points), we sample noise from a Gaussian distribution with a standard deviation of 5 meters and add it to the point coordinates. This provides a worst-case approximation of situations where the map is acquired automatically or comes with very imprecise localization.
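The two noise settings differ only in the granularity at which the Gaussian offset is drawn. The following is a minimal sketch, not the MapModEX implementation: the function names `shift_elements` and `jitter_points` are hypothetical, and map elements are assumed to be plain lists of (x, y) tuples in meters.

```python
import random
from typing import List, Optional, Tuple

Point = Tuple[float, float]
Polyline = List[Point]


def shift_elements(elements: List[Polyline], std_m: float = 1.0,
                   rng: Optional[random.Random] = None) -> List[Polyline]:
    """Scenario 2a style: draw ONE Gaussian offset per map element and
    apply it to every point, so each element is translated rigidly."""
    if rng is None:
        rng = random.Random()
    shifted = []
    for line in elements:
        dx, dy = rng.gauss(0.0, std_m), rng.gauss(0.0, std_m)
        shifted.append([(x + dx, y + dy) for x, y in line])
    return shifted


def jitter_points(elements: List[Polyline], std_m: float = 5.0,
                  rng: Optional[random.Random] = None) -> List[Polyline]:
    """Scenario 2b style: draw an independent Gaussian offset for every
    point, which also destroys the shape of the element."""
    if rng is None:
        rng = random.Random()
    return [
        [(x + rng.gauss(0.0, std_m), y + rng.gauss(0.0, std_m)) for x, y in line]
        for line in elements
    ]
```

With the first function the shape of each polyline is preserved and only its position is wrong. With the second, even the shape is lost, which is why Scenario 2b is the harder case.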

The final scenario we consider is one where we have access to an old map that was accurate in the past (see Figure 2d). It is quite common for painted markings such as crosswalks to be moved from time to time. In addition, cities sometimes substantially remodel problematic intersections or renovate areas to accommodate the increased traffic generated by new attractions.

It is therefore interesting to work with HDMaps that are valid in their own right but differ substantially from the actual HDMap. Such maps should appear frequently when maintainers update HDMaps only every few years to keep costs down. In this case, the existing map still provides some information about the world, but may not reflect temporary or recent changes.

Implementation: We approximate this by making strong changes to existing HDMaps in scenario 3a. We removed 50% of the crosswalks and lane dividers in the map, added some crosswalks (half of the remaining crosswalks), and finally applied a small warp to the map.

However, it is important to note that large parts of the global map will remain unchanged over time. We account for this in Scenario 3b, where we study the effect of randomly choosing (with probability p = 0.5) to use the real HDMap instead of the perturbed version.
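The recipe for Scenarios 3a and 3b can be sketched as below. This is an illustration under stated assumptions, not MapModEX code: the helper names, the rectangular shape and size of added crosswalks, the patch extent, and the sinusoidal warp are all hypothetical stand-ins for details the article does not give.

```python
import math
import random
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Polyline = List[Point]
HDMap = Dict[str, List[Polyline]]  # keys: "divider", "boundary", "crossing"


def drop_half(lines: List[Polyline], rng: random.Random) -> List[Polyline]:
    """Remove half of the elements at random (rounding the removal down)."""
    return rng.sample(lines, len(lines) - len(lines) // 2)


def random_crosswalk(rng: random.Random) -> Polyline:
    """Hypothetical new crosswalk: a closed 8 m x 4 m rectangle placed
    uniformly inside an assumed 30 m x 60 m patch around the ego vehicle."""
    cx, cy = rng.uniform(-15.0, 15.0), rng.uniform(-30.0, 30.0)
    return [(cx - 4, cy - 2), (cx + 4, cy - 2), (cx + 4, cy + 2),
            (cx - 4, cy + 2), (cx - 4, cy - 2)]


def warp(line: Polyline, amplitude_m: float = 0.5, period_m: float = 60.0) -> Polyline:
    """Hypothetical small warp: a smooth sideways displacement along y."""
    return [(x + amplitude_m * math.sin(2 * math.pi * y / period_m), y)
            for x, y in line]


def outdated_map(true_map: HDMap, rng: random.Random) -> HDMap:
    """Scenario 3a style: drop half of the dividers and crosswalks, add new
    crosswalks (half as many as remain), then warp everything slightly."""
    old = {
        "boundary": list(true_map["boundary"]),
        "divider": drop_half(true_map["divider"], rng),
        "crossing": drop_half(true_map["crossing"], rng),
    }
    n_new = len(old["crossing"]) // 2
    old["crossing"] += [random_crosswalk(rng) for _ in range(n_new)]
    return {cls: [warp(line) for line in lines] for cls, lines in old.items()}


def existing_map_3b(true_map: HDMap, rng: random.Random, p_true: float = 0.5) -> HDMap:
    """Scenario 3b style: with probability p_true, hand over the real map."""
    return true_map if rng.random() < p_true else outdated_map(true_map, rng)
```

Keeping the perturbation in a single function makes it easy to pre-generate a fixed set of variants per sample, which matches the article's choice of a fixed set of modified maps.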

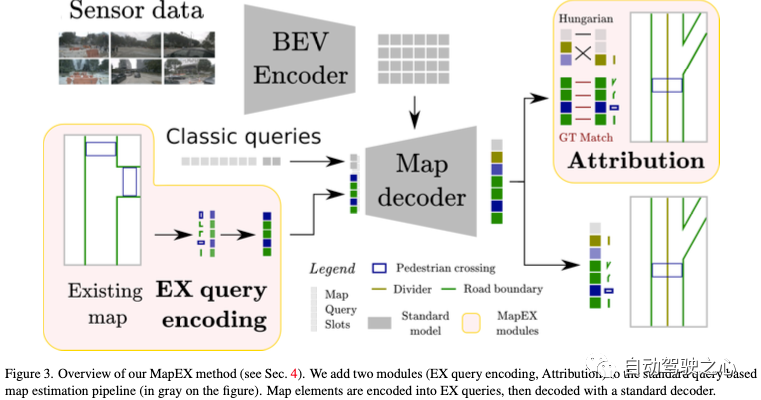

To this end we propose MapEX (see Figure 3), a new framework for online HDMap construction. It follows the standard query-based online HDMap construction paradigm and handles existing map information through two key modules: a map query encoding module and a pre-attribution scheme between predictions and GT. This article uses MapTRv2 as its baseline.

The query-based core is shown by the gray elements in Figure 3. It first takes the sensor input (camera or lidar) and encodes it into a Bird's Eye View (BEV) representation that serves as the sensor features. The map itself is obtained with a DETR-like detection scheme that detects up to N map elements. This is done by passing N×L learned query tokens (N is the maximum number of detected elements, L is the number of points predicted per element) into a Transformer decoder, which feeds sensor information to the query tokens through cross-attention with the BEV features. The decoded queries are then transformed by linear layers into map element coordinates and class predictions (including an additional background class), such that each group of L queries represents the L points of one map element (L=20 in this paper). Training is done by matching predicted map elements to GT map elements using some variant of the Hungarian algorithm. Once matched, the model is optimized so that each predicted map element fits the GT element it corresponds to, using regression (for coordinates) and classification (for element categories) losses.

However, this framework cannot account for existing maps, which requires introducing new modules at two key levels. At the query level, we encode map elements into non-learnable EX queries. At the matching level, we pre-attribute EX queries to the GT map elements they represent.

The complete MapEX framework (shown in Figure 3) converts existing map elements into non-learnable map queries and adds learnable queries to reach a certain number of queries N×L. This complete set of queries is then passed to the Transformer decoder and transformed into predictions via a linear layer as usual. When training, our attribution model pre-matches some predictions to GT and the remaining predictions are matched normally using Hungarian matching. At test time, decoded non-background queries produce HDMap representations.

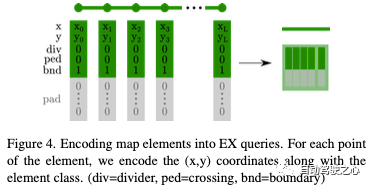

Current online HDMap construction frameworks have no mechanism to account for existing map information. Therefore, we need to design a new scheme that translates existing maps into a form that the standard query-based online HDMap construction framework can understand. MapEX uses a simple method that encodes existing map elements into EX queries for the decoder, as shown in Figure 4.

For a given map element, we extract L equidistant points, where L is the number of points we seek to predict for any map element. For each point, we create an EX query that encodes its map coordinates (x, y) in the first 2 dimensions and the map element class (divider, crossing, or boundary) as a one-hot encoding in the next 3 dimensions. The remainder of the EX query is padded with zeros to reach the standard query size used by the decoder architecture.

Although this query design is very simple, it has two key benefits: it directly encodes the information of interest (point coordinates and element class), and it minimizes conflicts with the learned queries (thanks to the extensive zero padding).
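The encoding described above is simple enough to write out directly. The sketch below is an illustration only: the names `resample` and `ex_queries`, the class order in `CLASSES`, the query size of 256, and the use of raw (unnormalized) coordinates are assumptions, not details confirmed by the article.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
CLASSES = ("divider", "crossing", "boundary")  # one-hot order is an assumption


def resample(line: List[Point], num_points: int) -> List[Point]:
    """Return num_points points equally spaced in arc length along a polyline."""
    seg = [math.dist(a, b) for a, b in zip(line, line[1:])]
    total = sum(seg)
    if total == 0.0 or num_points == 1:
        return [line[0]] * num_points
    out, i, walked = [], 0, 0.0
    for k in range(num_points):
        target = total * k / (num_points - 1)
        # Advance to the segment that contains the target arc length.
        while i < len(seg) - 1 and walked + seg[i] < target:
            walked += seg[i]
            i += 1
        t = (target - walked) / seg[i] if seg[i] > 0 else 0.0
        t = min(max(t, 0.0), 1.0)
        (x0, y0), (x1, y1) = line[i], line[i + 1]
        out.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return out


def ex_queries(line: List[Point], cls: str,
               num_points: int = 20, dim: int = 256) -> List[List[float]]:
    """Build one non-learnable query per point:
    [x, y, one-hot class (3 values), zero padding up to dim]."""
    onehot = [1.0 if c == cls else 0.0 for c in CLASSES]
    queries = []
    for x, y in resample(line, num_points):
        q = [x, y, *onehot]
        q.extend([0.0] * (dim - len(q)))
        queries.append(q)
    return queries
```

For example, `ex_queries([(0, 0), (19, 0)], "boundary")` yields 20 vectors of length 256 whose first five entries hold the point coordinates and the class, with everything else zero.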

Once we have the N_EX sets of L queries (one set for each of the N_EX map elements in the existing map), we retrieve (N − N_EX) sets of L standard learnable queries from the usual learnable query pool, so that the total is again N×L queries. The resulting N×L queries are then fed to the decoder following the base method: in MapTR, the N×L queries are treated as independent queries, while MapTRv2 uses a more efficient decoupled attention scheme that groups together the queries of the same map element. After the map elements have been predicted from the queries, they can be used directly at test time or matched to the GT for training.
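Assembling the decoder input then amounts to topping up the EX query groups with learnable ones until the fixed budget of N groups is reached. The sketch below assumes the first unused groups of the learnable pool are taken. The function name `build_query_set` and the returned `is_ex` mask are hypothetical conveniences, and N_EX simply denotes the number of existing map elements.

```python
from typing import List, Sequence, Tuple

QueryGroup = List[List[float]]  # L query vectors describing one map element


def build_query_set(ex_groups: Sequence[QueryGroup],
                    learnable_pool: Sequence[QueryGroup],
                    max_elements: int) -> Tuple[List[QueryGroup], List[bool]]:
    """Concatenate N_EX fixed EX groups with (N - N_EX) learnable groups.

    Returns the N query groups plus a mask that is True for EX groups,
    which a later matching step can use for pre-attribution.
    """
    n_ex = len(ex_groups)
    if n_ex > max_elements:
        raise ValueError("existing map has more elements than the query budget N")
    n_learn = max_elements - n_ex
    if n_learn > len(learnable_pool):
        raise ValueError("learnable pool is smaller than required")
    groups = list(ex_groups) + list(learnable_pool[:n_learn])
    is_ex = [True] * n_ex + [False] * n_learn
    return groups, is_ex
```

Because the total stays at N groups regardless of how many elements the existing map contains, the decoder architecture itself does not need to change.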

While EX queries provide a way to account for existing map information, nothing ensures that the model actually uses these queries to estimate the corresponding elements. In fact, left on its own, the network does not even learn to identify fully accurate EX queries. Therefore, we pre-attribute predictions to GT elements before applying traditional Hungarian matching during training, as shown in Figure 3.

Simply put, we keep track of which GT map element each element of the modified map corresponds to: if a map element is unmodified, shifted or warped, we can associate it with the original map element in the true map. To ensure that the model learns to use only useful information, we only keep a match when the mean point-wise displacement between the modified map element and the true map element remains below a threshold.

Given the correspondence between GT and predicted map elements, we can remove the pre-attributed map elements from the pool of elements to be matched. The remaining map elements (predictions and GT) are then matched using some variant of the Hungarian algorithm, as is customary. The Hungarian matching step therefore only needs to identify which EX queries correspond to added map elements that do not exist in reality, and to find standard learned queries that fit the real map elements missing from the existing map (due to deletions or strong perturbations).

Reducing the number of elements the Hungarian algorithm has to process is important because even its most efficient variant has cubic complexity (O(N³)) [8]. This is not a major weakness of most current online HDMap acquisition methods, as the predicted maps are small (30m × 60m) and only three types of map elements are predicted. However, as online map generation develops further, it will become necessary to accommodate an increasing number of map elements as predicted maps become larger and more complete.
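The two-stage matching can be sketched as follows. This is a simplified illustration under several assumptions: the function names are hypothetical, the threshold value is left as a parameter because the article does not state it, the matching cost uses only point displacement (real pipelines also include a classification term), and a brute-force search over permutations stands in for the Hungarian algorithm so that the example needs no third-party library.

```python
import math
from itertools import permutations
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]
Polyline = List[Point]


def mean_displacement(a: Polyline, b: Polyline) -> float:
    """Mean point-wise distance between two polylines with equal point counts."""
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)


def pre_attribute(ex_elements: List[Polyline],
                  source_gt: List[Optional[int]],
                  gt_elements: List[Polyline],
                  threshold_m: float) -> Dict[int, int]:
    """Keep the tracked EX -> GT correspondence only if the EX element is
    still close to its GT element. source_gt[i] is None for added elements."""
    matches = {}
    for i, j in enumerate(source_gt):
        if j is not None and mean_displacement(ex_elements[i], gt_elements[j]) <= threshold_m:
            matches[i] = j
    return matches


def match_remaining(pred_elements: List[Polyline],
                    gt_elements: List[Polyline],
                    matches: Dict[int, int]) -> Dict[int, int]:
    """Assign the GT elements left over after pre-attribution to the free
    predictions at minimum total cost (brute force, small inputs only).
    Assumes there are at least as many free predictions as free GT elements."""
    taken_gt = set(matches.values())
    free_pred = [i for i in range(len(pred_elements)) if i not in matches]
    free_gt = [j for j in range(len(gt_elements)) if j not in taken_gt]
    best, best_cost = {}, math.inf
    for perm in permutations(free_pred, len(free_gt)):
        cost = sum(mean_displacement(pred_elements[i], gt_elements[j])
                   for i, j in zip(perm, free_gt))
        if cost < best_cost:
            best, best_cost = dict(zip(perm, free_gt)), cost
    return {**matches, **best}
```

Here prediction i is assumed to come from query i, so the first predictions are the ones decoded from EX queries. Only the elements that fail the displacement filter, or that were added or deleted, reach the second stage.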
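As a rough illustration of why pre-attribution helps, assume the matching cost grows as the cube of the number of elements to match, and let K of the N elements be pre-attributed (K is a symbol introduced here for illustration). The relative cost of the remaining matching step is then

$$
\frac{(N-K)^3}{N^3} = \left(1 - \frac{K}{N}\right)^3 ,
$$

so pre-attributing half of the elements (K = N/2) leaves about 1/8 of the original matching cost under this assumption.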

Setup: We evaluated the MapEX framework on the nuScenes dataset, as it is the standard evaluation dataset for online HDMap estimation. We build on the MapTRv2 framework and its official code base. Following common practice, we report the Average Precision for three map element types (divider, boundary, crossing) at different retrieval thresholds (chamfer distances of 0.5 m, 1.0 m and 1.5 m), as well as the mAP over the three categories.

Each experiment was run three times using three fixed random seeds. Importantly, for a given combination of seed and map scenario, the existing map data provided during validation is fixed to facilitate comparison. For consistency, we report results as mean ± standard deviation, rounded to one decimal place, even when the standard deviation exceeds this precision.
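To make the retrieval thresholds concrete, the sketch below computes a chamfer distance between a predicted and a ground-truth polyline and checks it against the three thresholds. It is illustrative only: the symmetric mean-of-both-directions convention and the helper names are assumptions, and a full Average Precision computation (ranking predictions by confidence and integrating the precision-recall curve) is deliberately left out.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]
THRESHOLDS_M = (0.5, 1.0, 1.5)


def chamfer_distance(pred: List[Point], gt: List[Point]) -> float:
    """Symmetric chamfer distance: average of the two directed mean
    nearest-neighbour distances between the point sets."""
    def one_way(src: List[Point], dst: List[Point]) -> float:
        return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)
    return 0.5 * (one_way(pred, gt) + one_way(gt, pred))


def counts_as_hit(pred: List[Point], gt: List[Point]) -> Dict[float, bool]:
    """For each threshold, report whether the prediction is close enough to
    the ground-truth element to count as a true positive."""
    d = chamfer_distance(pred, gt)
    return {t: d <= t for t in THRESHOLDS_M}
```

A prediction that sits 0.7 m away from its ground-truth element would therefore count as a miss at the 0.5 m threshold but as a hit at 1.0 m and 1.5 m.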

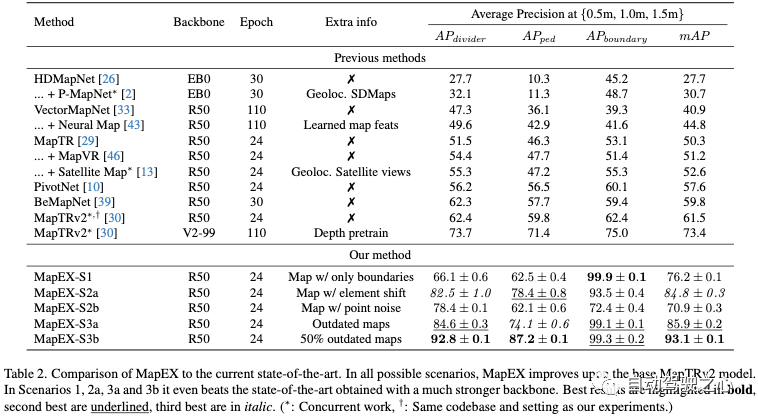

Table 2 compares related methods with the performance of MapEX in each scenario: maps without lane dividers or crosswalks (S1), noisy maps (S2a for shifted map elements, S2b for strong point-wise noise), and maps with large changes (S3a contains only such maps, S3b mixes in real maps). We exhaustively compare MapEX with existing online HDMap estimation methods in comparable settings (camera input, CNN backbone) and with the current state of the art (which uses significantly more resources).

First, Table 2 makes it clear that any type of existing map information lets MapEX significantly outperform the existing literature in comparable settings, regardless of the scenario considered. In all but one case, the existing map information even allows MapEX to perform better than the current state-of-the-art MapTRv2 model, which uses a large ViT backbone pretrained on an extensive depth estimation dataset and is trained for four times as many epochs. Even the rather conservative S2a scenario, with imprecise map element localization, yields an improvement of 11.4 mAP (i.e. 16%).

Across all scenarios, we observe consistent improvements over the base MapTRv2 model on all 4 metrics. Understandably, Scenario 3b (using accurate existing maps half the time) produces the best overall performance by a large margin, demonstrating a strong ability to identify and exploit fully accurate existing maps. Both Scenario 2a (with shifted map elements) and Scenario 3a (with "outdated" map elements) provide very strong overall performance, with good results for all three types of map elements. Scenario 1, where only road boundaries are available, shows a large mAP gain thanks to its (expected) very strong boundary retrieval. Even the extremely challenging Scenario 2b, where Gaussian noise with a standard deviation of 5 meters is applied to each map element point, yields significant gains over the base model, with particularly good retrieval performance for dividers and boundaries.

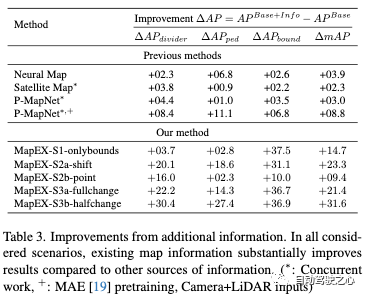

We now focus more specifically on the improvements that existing map information brings to MapEX. For reference, we compare the MapEX gains with those brought by other sources of additional information: Neural Map Prior with globally learned feature maps, and P-MapNet with geolocalized SDMaps. Importantly, MapEX relies on a stronger base model than these methods. While this makes it harder to improve on the base model, it also makes it easier to achieve high scores. To avoid giving ourselves an unfair advantage, absolute scores are provided in Table 3.

Table 3 shows that using MapEX with any type of existing map yields a larger overall mAP gain than any other source of additional information (including the more complex P-MapNet setting). We observe a large improvement in the model's detection performance on both lane dividers and road boundaries. A slight exception is Scenario 1 (access to road boundaries only), where the model successfully retains the map information on boundaries but only provides improvements comparable to previous methods on the two map elements for which there is no prior information. Crosswalks appear to require more precise information from existing maps, as Scenarios 1 and 2b (which applies extremely destructive noise to each map point) only provide improvements comparable to existing techniques. Scenario 2a (shifted elements) and Scenario 3a ("outdated" map) result in high crosswalk detection scores, possibly because these two scenarios contain more accurate crosswalk information.

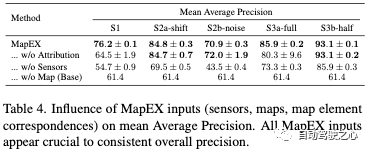

Table 4 shows how different types of inputs (existing maps, map element correspondences, and sensor inputs) affect MapEX. Existing maps greatly improve performance.

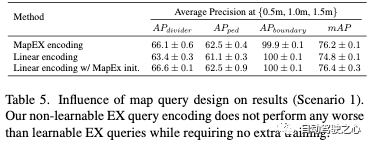

Table 5 shows that learned EX queries perform much worse than our simple non-learnable EX queries. Interestingly, initializing learnable EX queries with the non-learnable values leads to only very small improvements that fail to justify the added complexity.

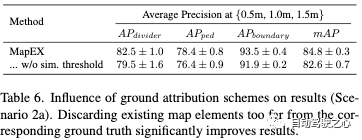

Since pre-attributing map elements is important for making full use of existing map information, it might be tempting to pre-attribute all corresponding map elements rather than filtering them as MapEX does. Table 6 shows that when existing map elements are too different, discarding the correspondence indeed leads to stronger performance than indiscriminate attribution. Essentially, this suggests that MapEX is better off using learnable queries rather than EX queries when existing map elements differ too much from the ground truth.

This article proposes leveraging existing maps to improve online HDMap construction. To investigate this, the authors outline three realistic scenarios where existing (simple, noisy or outdated) maps are available and introduce a new MapEX framework to exploit these maps. Since there is no mechanism in the current framework to take existing maps into account, we developed two new modules: one to encode map elements into EX queries and another to ensure that the model utilizes these queries.

Experimental results show that existing maps are key information for online HDMap construction and that MapEX significantly improves on comparable methods in all cases. In fact, in Scenario 2a (with randomly shifted map elements), MapEX improves mAP by 38% over the base MapTRv2 model and by 16% over the current state of the art.

We hope this work will lead to new online HDMap construction methods that account for existing information. Existing maps, good or bad, are widely available. Ignoring them means giving up a key tool in the search for reliable online HDMap construction.

Original link: https://mp.weixin.qq.com/s/FMosLZ2VJVRyeCOzKl-GLw
